DexFuncGrasp: A Robotic Dexterous Functional Grasp Dataset Constructed from a Cost-Effective Real-Simulation Annotation System (AAAI2024)

Jinglue Hang, Xiangbo Lin†, Tianqiang Zhu, Xuanheng Li, Rina Wu, Xiaohong Ma and Yi Sun

Dalian University of Technology

† corresponding author

project page

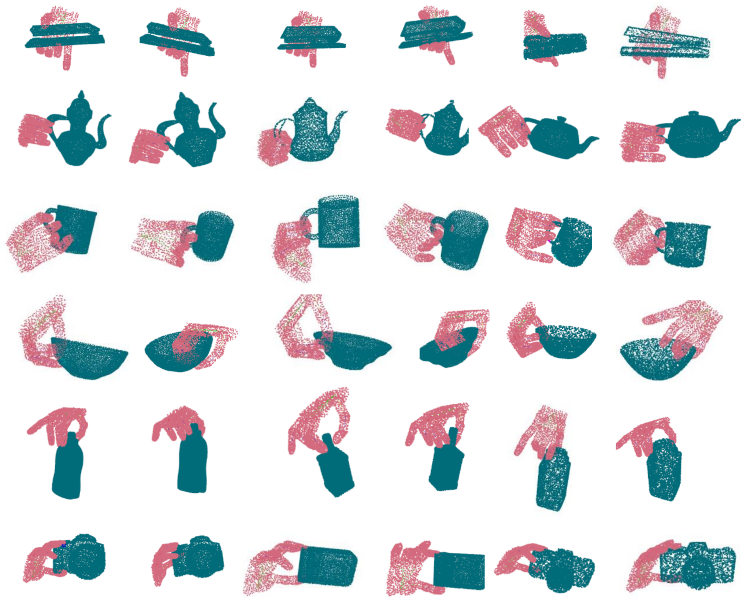

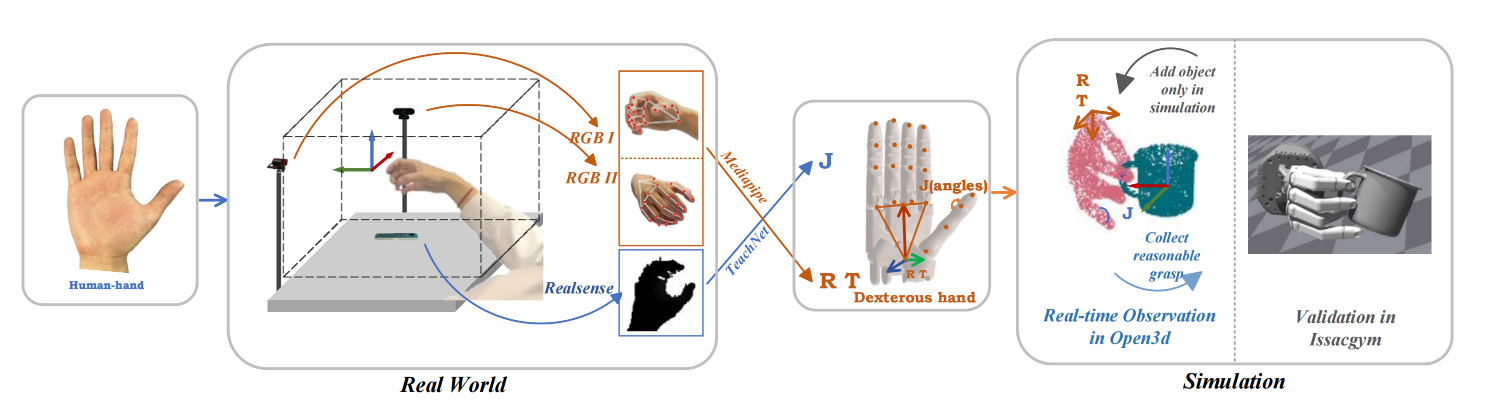

A robot grasp dataset is the basis for designing a robot's grasp generation model. Building a grasp dataset is harder for a high-DOF dexterous robot hand than for low-DOF grippers. Most current datasets meet the needs of generating stable grasps, but they are not suitable for dexterous hands to complete human-like functional grasps, such as grasping the handle of a cup or pressing the button of a flashlight, which enable robots to complete subsequent functional manipulation actions autonomously; at present there is no dataset with functional grasp pose annotations. This paper develops a unique Cost-Effective Real-Simulation Annotation System by leveraging natural hand actions. The system is able to capture a functional grasp of a dexterous hand in a simulated environment, assisted by human demonstration in the real world. Using this system, dexterous grasp data can be collected efficiently and cost-effectively. Finally, we construct the first dexterous functional grasp dataset with rich pose annotations. A Functional Grasp Synthesis Model is also provided to validate the effectiveness of the proposed system and dataset.

- Isaac Gym preview 4.0 (3.0)

- Obj_Data

- VRC-Dataset

- Pretrained VRCNET Model

- DFG-Dataset

- Pretrained DexFuncGraspNet Model

- Baseline-Results

- annotate: for Grasp pose collection, Grasp Transfer for Dataset Extension, and DexFuncGraspNet.

- vrcnet: for VRCNET

- Follow the instructions from vrcnet-project to create the conda env.

- dexfuncgrasp: for DexFuncGraspNet - CVAE.

- Our annotation system: we use TeachNet to map the human hand to the ShadowHand and collect functional dexterous grasps. Collection for other dexterous hands, which directly map joint angles from the ShadowHand, is also provided.

- Follow the RealSense website and install RealSense.

two RGB cameras: our frame_shape = [720, 1280]

one RealSense camera: we use an Intel SR305

- Ubuntu 20.04 (optional)

- Python 3.8

- PyTorch 1.10.1

- Numpy 1.22.0

- mediapipe 0.8.11

- pytorch-kinematics 0.3.0

- Isaac Gym preview 4.0 (3.0)

- CUDA 11.1
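Once the installation steps that follow have been run, the pinned versions in the list above can be checked from inside the `annotate` env. The snippet below is a minimal sketch rather than part of the repository: `PINNED` and `find_mismatches` are hypothetical names, and the PyPI distribution names are assumptions. Isaac Gym and pytorch-kinematics are left out because they are installed from local folders.

```python
from importlib import metadata

# Pinned versions copied from the requirements list above.
# The distribution names are assumptions about how each package is published.
PINNED = {
    "torch": "1.10.1",
    "numpy": "1.22.0",
    "mediapipe": "0.8.11",
}


def find_mismatches(pinned, lookup=metadata.version):
    """Return {name: (wanted, found)} for every package that is missing or differs."""
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            found = None
        # torch wheels often carry a local suffix such as "1.10.1+cu111".
        if found is None or found.split("+")[0] != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in find_mismatches(PINNED).items():
        print(f"{name}: wanted {wanted}, found {found}")
```

An empty result means the three packages match the pinned versions; it says nothing about CUDA or the driver.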

conda create -n annotate python==3.8.13

conda activate annotate

# Install pytorch with cuda

pip install torch==1.10.1 torchvision==0.11.2 ## or use the official install command from the PyTorch website

pip install numpy==1.22.0

cd Annotation/

cd pytorch_kinematics/ # download it from the link above first

pip install -e .

cd ..

pip install -r requirement.txt

# Install IsaacGym:

# download it from the link above and put it into the Annotation/ folder

cd IsaacGym/python/

pip install -e .

export LD_LIBRARY_PATH=/home/your/path/to/anaconda3/envs/annotate/lib

- Download Isaac Gym preview 4.0 (3.0)

|-- Annotation
    |-- IsaacGym

- Download Obj_Data

|-- Annotation
    |-- IsaacGym
        |-- assets
            |-- urdf
                |-- off
                    |-- Obj_Data
                    |-- Obj_Data_urdf

- Set up the cameras in the real world as shown in the figure.

- Follow the instructions from handpose3d to get the camera_paremeters folder, or use mine.

- Create a folder, for example one named /Grasp_Pose.

- Run the script below, where --idx is the category id, --instance selects the object to be grasped, and --cam_1 and --cam_2 are the ids of the two RGB cameras:

python shadow_dataset_human_shadow_add_issacgym_system_pytorch3d_mesh_new_dataset.py --idx 0 --instance 0 --cam_1 6 --cam_2 4

- We read the grasp pose file from Grasp_Pose/ and send it to IsaacGym for verification at the same time; successful grasps and the collection success rate are saved in /Tink_Grasp_Transfer/Dataset/Grasps/.

cd ..

cd IsaacGym/python

python grasp_gym_runtime_white_new_data.py --pipeline cpu --grasp_mode dynamic --idx 0 --instance 0

- If you judge a grasp to be good, press the space bar to save the pose. Try to collect fewer than 30 grasps, then click the x in the top right of the IsaacGym window to close it. The grasp poses are saved in Grasp_Pose/.
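The flags shared by the two commands above can be pictured with a small `argparse` sketch. It only mirrors the flag names visible in those commands; the defaults, help strings and `build_parser` itself are assumptions and may differ from what the scripts actually define.

```python
import argparse


def build_parser():
    # Hypothetical parser: flag names come from the commands above,
    # defaults and help strings are assumptions.
    parser = argparse.ArgumentParser(description="Grasp pose collection (sketch)")
    parser.add_argument("--idx", type=int, default=0, help="category id")
    parser.add_argument("--instance", type=int, default=0, help="object index within the category")
    parser.add_argument("--cam_1", type=int, default=0, help="device id of the first RGB camera")
    parser.add_argument("--cam_2", type=int, default=1, help="device id of the second RGB camera")
    return parser


# Same values as the collection command above.
args = build_parser().parse_args(["--idx", "0", "--instance", "0", "--cam_1", "6", "--cam_2", "4"])
print(args.idx, args.instance, args.cam_1, args.cam_2)
```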

- After collection, unify the axes of the grasps in /Tink_Grasp_Transfer/Dataset/Grasps/ so that the SDF function of each category can be learned.

python trans_unit.py

- Other dexterous hand collection demo (Optional)

python shadow_dataset_human_shadow_add_issacgym_system_pytorch3d_mesh_new_dataset_multi_dexterous.py --idx 0 --instance 0 --cam_1 6 --cam_2 4

- Visualization

# Put .pkl into Annotation/visual_dict/new/

python show_data_mesh.py

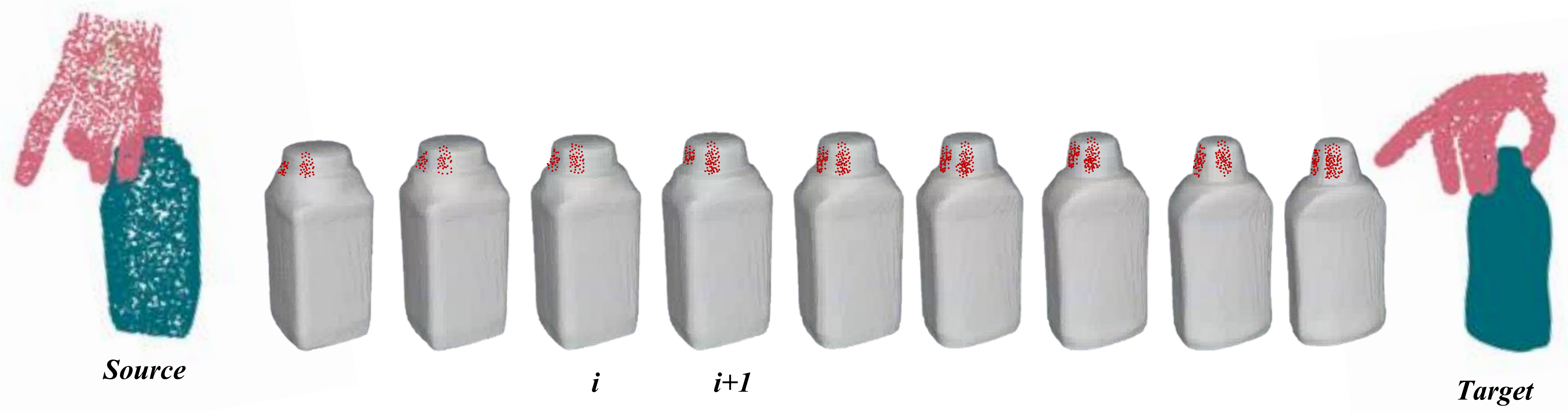

Tink: this part is modified from Tink (OakInk).

- git clone https://github.com/oakink/DeepSDF_OakInk, then follow its instructions and install all requirements. The code is in C++ and has the following requirements (using the same conda env, annotate):

pip install termcolor

pip install plyfile

### prepare mesh-to-sdf env

git clone https://github.com/marian42/mesh_to_sdf

cd mesh_to_sdf

pip install -e.

pip install scikit-image==0.16.2

Put the downloaded packages in Transfer/third-party/

cd CLI11 # cd Pangolin/nanofl...

mkdir build

cd build

cmake ..

make -j8

- The process is the same as in Tink:

cd Tink_Grasp_Transfer/

python generate_sdf.py --idx 0

python train_deep_sdf.py --idx 0

python reconstruct_train.py --idx 0 --mesh_include

python tink/gen_interpolate.py --all --idx 0

python tink/cal_contact_info_shadow.py --idx 0 --tag trans

python tink/info_transform.py --idx 0 --all

python tink/pose_refine.py --idx 0 --all #--vis

- Or directly bash:

sh transfer.sh

- Keep only the successful grasps and unify the axes of the dataset:

cd ../../../IsaacGym/python/collect_grasp/

# save the success grasp

sh run_clean.sh

# unit axis of dataset

python trans_unit_dataset_func.py
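The per-category transfer steps listed above (from `generate_sdf.py` to `pose_refine.py`) can also be driven from Python when several categories need to be processed. This is a sketch under assumptions: `TRANSFER_STEPS`, `transfer_commands` and `run_transfer` are hypothetical helpers, the contents of `transfer.sh` are not reproduced here, and the commands must be launched from `Tink_Grasp_Transfer/`.

```python
import subprocess

# Steps copied from the command list above; "{idx}" is filled in per category.
TRANSFER_STEPS = [
    "python generate_sdf.py --idx {idx}",
    "python train_deep_sdf.py --idx {idx}",
    "python reconstruct_train.py --idx {idx} --mesh_include",
    "python tink/gen_interpolate.py --all --idx {idx}",
    "python tink/cal_contact_info_shadow.py --idx {idx} --tag trans",
    "python tink/info_transform.py --idx {idx} --all",
    "python tink/pose_refine.py --idx {idx} --all",
]


def transfer_commands(idx):
    """Return the argv lists for one category, in the order listed above."""
    return [step.format(idx=idx).split() for step in TRANSFER_STEPS]


def run_transfer(category_ids, dry_run=True):
    """Print (dry run) or execute every step; check=True stops at the first failure."""
    for idx in category_ids:
        for argv in transfer_commands(idx):
            if dry_run:
                print(" ".join(argv))
            else:
                subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_transfer([0])  # dry run for category 0 only
```

Keeping `dry_run=True` as the default makes it easy to review the exact commands before committing to a long DeepSDF training run.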

- Optionally, convert the grasps into the folder below to reduce their size:

cd DexFuncGraspNet/Grasps_Dataset

python data_process_m.py

- At this point, the grasp dataset is in the folder Annotation/Tink_Grasp_Transfer/Dataset/Grasps. The grasps used for training are under paths such as /0_unit_025_mug/sift/unit_mug_s009/new, where every object quaternion is [1 0 0 0], so all objects share the same axes.
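One way to read "every object quaternion is [1 0 0 0]" is that each hand pose is re-expressed in the object's own frame, so the object rotation becomes the identity. The sketch below shows that change of frame with plain quaternion algebra. It assumes (w, x, y, z) ordering and unit quaternions, and it is not taken from `trans_unit.py`, whose exact procedure may differ; all function names are hypothetical.

```python
import math


def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def rotate(q, v):
    """Rotate 3-vector v by unit quaternion q."""
    return quat_mul(quat_mul(q, (0.0, *v)), quat_conj(q))[1:]


def to_object_frame(obj_quat, obj_pos, hand_quat, hand_pos):
    """Express the hand pose relative to the object, whose pose becomes the identity."""
    inv = quat_conj(obj_quat)
    offset = tuple(h - o for h, o in zip(hand_pos, obj_pos))
    return quat_mul(inv, hand_quat), rotate(inv, offset)


# Object and hand both rotated 90 degrees about z; hand offset one unit along world y.
s = math.sqrt(0.5)
rel_quat, rel_pos = to_object_frame((s, 0.0, 0.0, s), (1.0, 0.0, 0.0),
                                    (s, 0.0, 0.0, s), (1.0, 1.0, 0.0))
print(rel_quat, rel_pos)
```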

- We collect objects from online datasets such as OakInk and collect grasps through the steps above. We name the result the DFG dataset.

- Download source meshes and grasp labels for 12 categories from DFG-Dataset.

- Arrange the files as follows:

|-- DexFuncGraspNet
    |-- Grasps_Dataset
        |-- train
            |-- 0_unit_025_mug ## labeled objects
                |-- unit_mug_s009.npy ## transferred objects
                |-- unit_mug_s010.npy
                |-- unit_mug_s011.npy
                | ...
            | ...
            |-- 0_unit_mug_s001
            |-- 1_unit_bowl_s101
            |-- 1_unit_bowl_s102
            |-- 4_unit_bottle12
            |-- 4_unit_bottle13
            | ...
        |-- test ### for testing
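After arranging the files, a quick listing helps confirm that the layout matches the tree above. This is a minimal sketch using only `pathlib`; `list_grasp_files` and `category_id` are hypothetical helpers, and reading the leading integer of a folder name as the category id is an assumption based on names such as `0_unit_025_mug`.

```python
from pathlib import Path


def list_grasp_files(split_dir):
    """Map each object folder under a split (e.g. train/) to its sorted .npy file names."""
    split_dir = Path(split_dir)
    index = {}
    for obj_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        index[obj_dir.name] = sorted(f.name for f in obj_dir.glob("*.npy"))
    return index


def category_id(folder_name):
    """Assumption: the integer before the first underscore is the category id."""
    return int(folder_name.split("_", 1)[0])


if __name__ == "__main__":
    train_dir = Path("DexFuncGraspNet/Grasps_Dataset/train")
    if train_dir.is_dir():  # only runs when the dataset is actually in place
        for name, files in list_grasp_files(train_dir).items():
            print(category_id(name), name, len(files))
```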

Some of our grasp poses are in collision. We are sorry for not considering this more carefully.

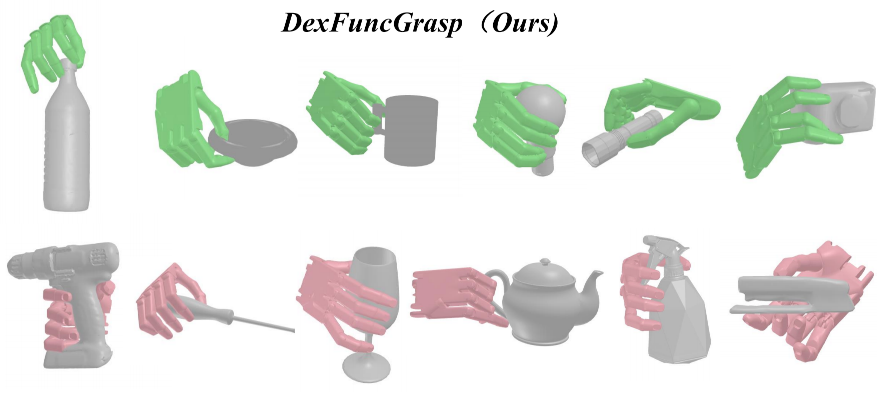

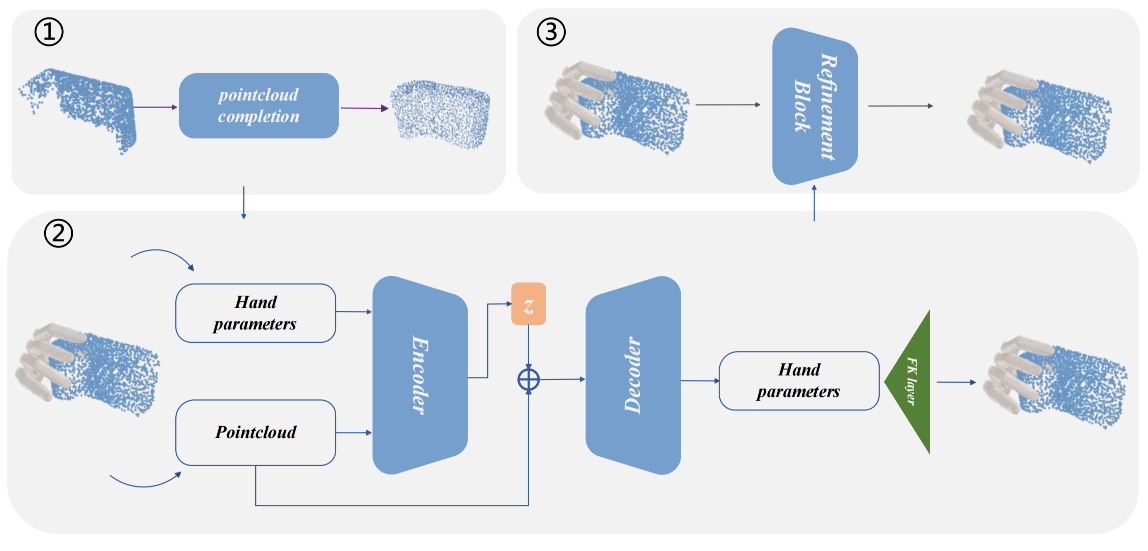

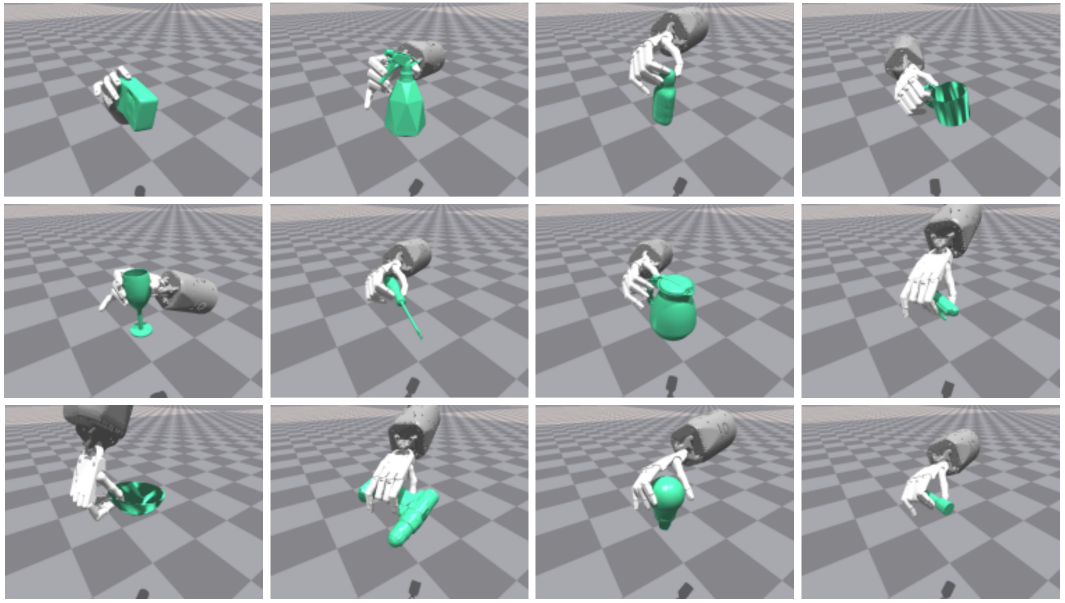

- Along with this dataset, we provide a CVAE-based baseline method, as shown in the figure above:

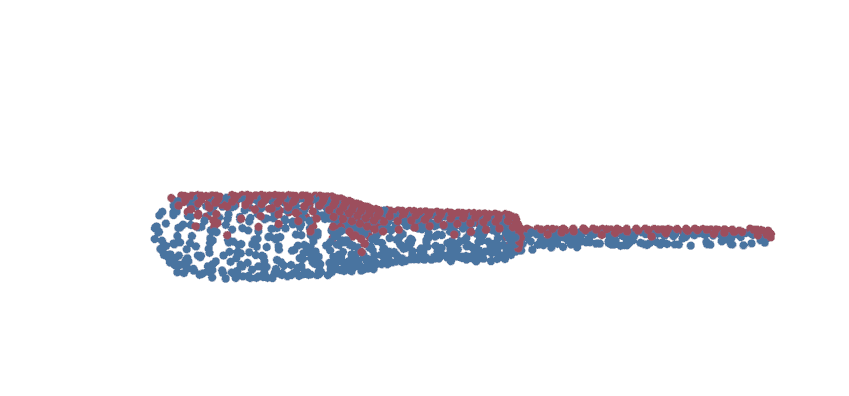

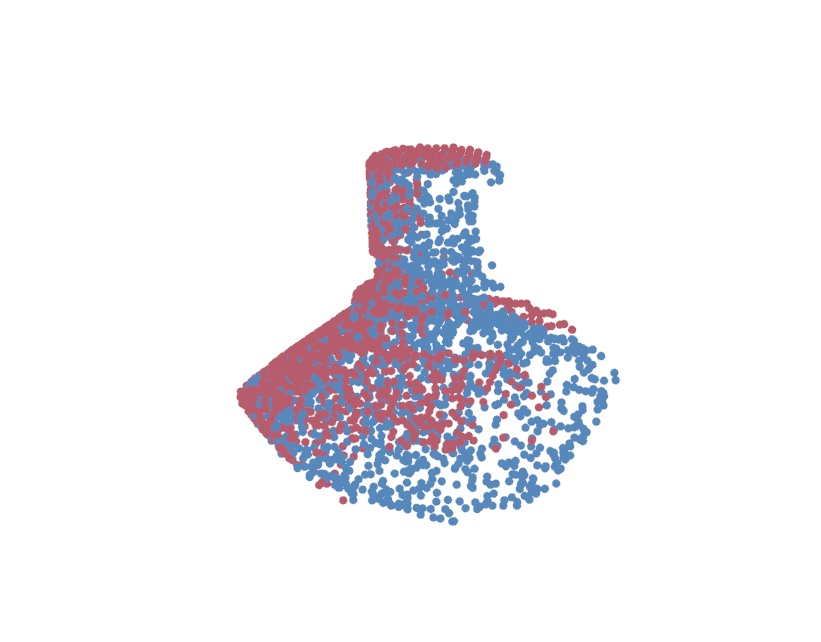

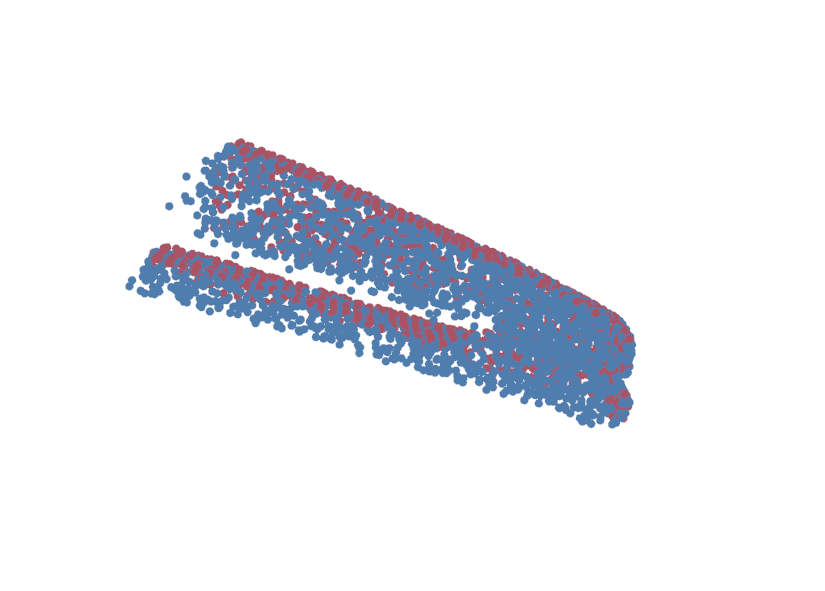

- First, we train the off-the-shelf VRCNET using our DFG dataset.

- We use PyTorch3D to generate partial point clouds from different views.

cd data_preprocess/

sh process.sh

- Alternatively, the partial-complete dataset built from our DFG dataset can be downloaded here: VRC-Dataset

|-- VRCNET-DFG
    |-- data
        |-- complete_pc
        |-- render_pc_for_completion

- Train VRCNET

- Pretrained VRCNET Model for simulation is provided.

|-- VRCNET-DFG
    |-- log
        |-- vrcnet_cd_debug
            |-- best_cd_p_network.pth

conda activate vrcnet

### change cfgs/vrcnet.yaml --load_model

cd ../

python train.py --config cfgs/vrcnet.yaml

### change cfgs/vrcnet.yaml --load_model

python test.py --config cfgs/vrcnet.yaml # for test
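The checkpoint name `best_cd_p_network.pth` suggests that the completion model is selected by a Chamfer distance score. For orientation, the sketch below computes two common Chamfer variants in pure Python. The `cd_p` and `cd_t` definitions follow a convention often used in point cloud completion code and are an assumption about what the VRCNET metric computes; the brute-force nearest-neighbour search is only suitable for small clouds.

```python
import math


def nearest_sq_dists(src, dst):
    """For each point in src, the squared distance to its nearest neighbour in dst."""
    return [min(sum((a - b) ** 2 for a, b in zip(p, q)) for q in dst) for p in src]


def chamfer(pred, target):
    """Return (cd_p, cd_t) between two lists of 3D points."""
    d1 = nearest_sq_dists(pred, target)
    d2 = nearest_sq_dists(target, pred)
    # cd_p: mean Euclidean distance, averaged over both directions (assumed convention).
    cd_p = (sum(math.sqrt(d) for d in d1) / len(d1) + sum(math.sqrt(d) for d in d2) / len(d2)) / 2
    # cd_t: mean squared distance, summed over both directions (assumed convention).
    cd_t = sum(d1) / len(d1) + sum(d2) / len(d2)
    return cd_p, cd_t


# A one-point prediction misses the second target point, which sits 5 units away.
cd_p, cd_t = chamfer([(0.0, 0.0, 0.0)], [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)])
print(cd_p, cd_t)
```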

- Second, we train the CVAE grasp generation module.

- Dependencies

- pytorch 1.7.1

- Pointnet2_Pytorch

- open3d 0.9.0

- Common Packages

conda create -n dexfuncgrasp python==3.7

conda activate dexfuncgrasp

pip install torch==1.7.1

git clone https://github.com/erikwijmans/Pointnet2_PyTorch

cd Pointnet2_PyTorch/pointnet2_ops_lib/

pip install -e .

pip install trimesh tqdm open3d==0.9.0 pyyaml easydict pyquaternion scipy matplotlib

- Pretrained DexFuncGraspNet Model for simulation is provided.

|-- DexFuncGraspNet
    |-- checkpoints
        |-- vae_lr_0002_bs_64_scale_1_npoints_128_radius_02_latent_size_2
            |-- latest_net.pth

cd DexFuncGraspNet/

# train cvae grasp generation net

python train_vae.py --num_grasps_per_object 64 ### 64 means batch_size (default: 64)

# generate grasps on test set

python test.py # grasps generated in folder DexFuncGraspNet/test_result_sim [we use results from VRCNET]
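For readers new to CVAEs, two ingredients usually appear in the training loop: the reparameterization trick for sampling the latent code, and a KL term that pulls the encoder's Gaussian towards a standard normal. The sketch below shows both in pure Python for a two-dimensional latent (the checkpoint name mentions `latent_size_2`). It is a generic illustration, not the loss used by `train_vae.py`, which may weight or combine terms differently; the function names are hypothetical.

```python
import math
import random


def reparameterize(mu, logvar, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


# Two-dimensional latent; the values are arbitrary illustration inputs.
mu, logvar = [1.0, 0.0], [0.0, 0.0]
z = reparameterize(mu, logvar)
print(z, kl_to_standard_normal(mu, logvar))
```

At test time a conditional decoder is typically fed samples drawn directly from N(0, I) together with the condition (here, the object point cloud features).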

- Third, the refinement step uses the PyTorch Adam optimizer.

python refine_after_completion.py # grasps optimized in folder DexFuncGraspNet/test_result_sim_refine
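To make the refinement step more concrete, the sketch below writes out the Adam update rule in pure Python and applies it to a toy quadratic. The objective, the target values and `adam_minimize` are hypothetical stand-ins: the actual loss optimized by `refine_after_completion.py` is not described here, and the script itself relies on the PyTorch optimizer.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.01, steps=500, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a function given its gradient, using the standard Adam update."""
    x = list(x0)
    m = [0.0] * len(x)  # running mean of gradients
    v = [0.0] * len(x)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective (hypothetical): squared distance between three pose parameters and a target.
target = [0.3, -0.1, 0.2]


def grad(x):
    return [2.0 * (xi - ti) for xi, ti in zip(x, target)]


refined = adam_minimize(grad, [0.0, 0.0, 0.0])
print(refined)
```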

- Put .pkl into Annotation/visual_dict/new/

cd ../Annotation/visual_dict/new/

python show_data_mesh.py

- Run the grasp verification in IsaacGym to get the success rate.

cd ../../../IsaacGym/python/collect_grasp/

# simulation verify

sh run_clean_test_sim.sh

# calculate final success rate

python success_caulcate.py

- Our baseline results can be downloaded here: Baseline-Results. Then run the bash scripts above.

| Category | Success rate (%) | Train/Test |
| --- | --- | --- |
| Bowl | 69.48 | 43/5 |
| Lightbulb | 74.23 | 44/7 |
| Bottle | 68.62 | 51/8 |
| Flashlight | 91.03 | 44/6 |
| Screwdriver | 75.60 | 32/7 |
| Spraybottle | 58.07 | 20/3 |
| Stapler | 85.77 | 28/6 |
| Wineglass | 55.70 | 35/5 |
| Mug | 54.62 | 57/7 |
| Drill | 55.00 | 10/2 |
| Camera | 63.33 | 87/7 |
| Teapot | 57.14 | 41/7 |
| Total | 68.50 | 492/67 |

The total success rate is the average of the per-category success rates, not the number of successful grasps divided by the total number of grasps.
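The difference between the two ways of averaging can be seen in a short sketch. The counts below are hypothetical and are not taken from the results table; `macro_average` corresponds to averaging per-category rates, `micro_average` to pooling all grasps.

```python
def macro_average(per_category):
    """Mean of the per-category success rates (every category weighs the same)."""
    rates = [100.0 * s / n for s, n in per_category.values()]
    return sum(rates) / len(rates)


def micro_average(per_category):
    """Successful grasps divided by all grasps (large categories weigh more)."""
    successes = sum(s for s, _ in per_category.values())
    attempts = sum(n for _, n in per_category.values())
    return 100.0 * successes / attempts


# Hypothetical counts: (successful grasps, total grasps) per category.
counts = {"mug": (50, 100), "drill": (9, 10)}
print(macro_average(counts))  # averages 50% and 90%
print(micro_average(counts))  # pools 59 successes over 110 grasps
```

With unbalanced categories the two numbers can differ noticeably, which is why the averaging convention is stated explicitly.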

This repo is based on TeachNet, handpose3d, OakInk, DeepSDF, TransGrasp, 6dofgraspnet, VRCNET. Many thanks for their excellent works.

Our previous works on functional grasp generation are: Toward-Human-Like-Grasp, FGTrans, Functionalgrasp.

@inproceedings{hang2024dexfuncgrasp,
  title={DexFuncGrasp: A Robotic Dexterous Functional Grasp Dataset Constructed from a Cost-Effective Real-Simulation Annotation System.},
  author={Hang, Jinglue and Lin, Xiangbo and Zhu, Tianqiang and Li, Xuanheng and Wu, Rina and Ma, Xiaohong and Sun, Yi},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={38},
  number={9},
  pages={10306-10313},
  year={2024}
}

Our code is released under the MIT License.