DINO-SD: Champion Solution for ICRA 2024 RoboDepth Challenge

Yifan Mao*, Ming Li*, Jian Liu*, Jiayang Liu, Zihan Qin, Chunxi Chu, Jialei Xu, Wenbo Zhao, Junjun Jiang, Xianming Liu

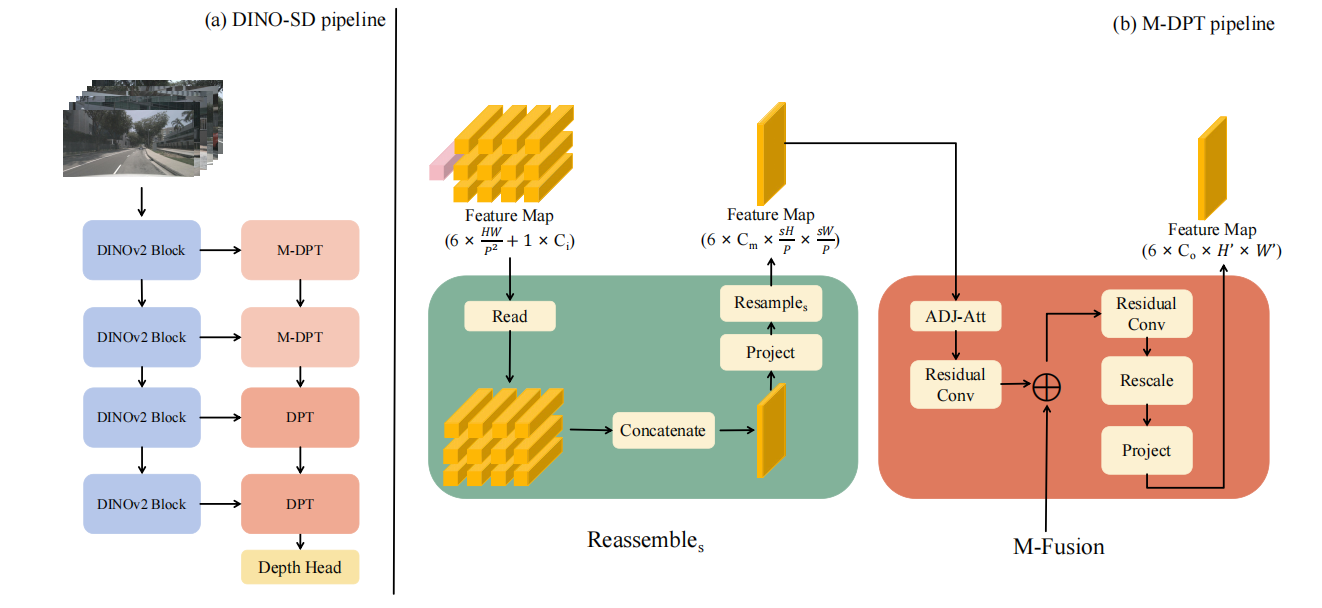

Surround-view depth estimation is a crucial task that aims to acquire depth maps of the surrounding views. It has many applications in real-world scenarios such as autonomous driving, AR/VR, and 3D reconstruction. However, because most of the data in autonomous driving datasets is collected in daytime scenarios, depth models perform poorly on out-of-distribution (OoD) data. While some works try to improve the robustness of depth models under OoD data, these methods either require additional training data or lack generalizability. In this report, we introduce DINO-SD, a novel surround-view depth estimation model. DINO-SD does not need additional data and has strong robustness. DINO-SD achieves the best performance in Track 4 of the ICRA 2024 RoboDepth Challenge.

| model | dataset | Abs Rel ↓ | Sq Rel ↓ | RMSE ↓ | RMSE Log ↓ | a1 ↑ | a2 ↑ | a3 ↑ | download |
|---|---|---|---|---|---|---|---|---|---|
| Baseline | nuScenes | 0.304 | 3.060 | 8.527 | 0.400 | 0.544 | 0.784 | 0.891 | model |
| DINO-SD | nuScenes | 0.187 | 1.468 | 6.236 | 0.276 | 0.734 | 0.895 | 0.953 | model |
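The column names in the table are the usual depth-estimation error and accuracy metrics. As a rough illustration, the sketch below computes them with their commonly used definitions. The function name `depth_metrics` and the rule that non-positive depths are treated as invalid are assumptions made for this example; the challenge's own evaluation code may apply further steps (for instance depth-range clipping or scale alignment) that are not shown here.

```python
import numpy as np


def depth_metrics(gt, pred):
    """Common depth metrics over pixels where both depths are positive."""
    gt = np.asarray(gt, dtype=np.float64).ravel()
    pred = np.asarray(pred, dtype=np.float64).ravel()

    # Assumption: a non-positive depth means "no valid measurement".
    valid = (gt > 0) & (pred > 0)
    gt, pred = gt[valid], pred[valid]

    diff = gt - pred
    ratio = np.maximum(gt / pred, pred / gt)  # per-pixel threshold ratio

    return {
        "abs_rel": float(np.mean(np.abs(diff) / gt)),
        "sq_rel": float(np.mean(diff ** 2 / gt)),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2))),
        "a1": float(np.mean(ratio < 1.25)),
        "a2": float(np.mean(ratio < 1.25 ** 2)),
        "a3": float(np.mean(ratio < 1.25 ** 3)),
    }
```

Lower is better for the four error terms, and higher is better for a1, a2 and a3, matching the arrows in the table header.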

- python 3.8, pytorch 2.2.2, CUDA 11.7, RTX 3090

git clone https://github.com/hitcslj/DINO-SD.git

conda create -n dinosd python=3.8

conda activate dinosd

pip install -r requirements.txt

Datasets are assumed to be downloaded under data/<dataset-name>.

- Please download the official nuScenes dataset to data/nuscenes/raw_data
- Export depth maps for evaluation

cd tools

python export_gt_depth_nusc.py val

For evaluation data preparation, kindly download the dataset from the following resources:

| Type | Phase 1 | Phase 2 |
|---|---|---|
| Google Drive | link1 or link2 | link1 or link2 |

- The final data structure should be:

DINO-SD
├── data
│   ├── nuscenes
│   │   ├── depth
│   │   └── raw_data
│   │       ├── maps
│   │       ├── robodrive-v1.0-test
│   │       └── samples
│   └── robodrive
├── datasets
│   ├── __init__.py
│   ├── corruption_dataset.py
│   ├── ddad_dataset.py
│   ├── mono_dataset.py
│   ├── nusc_dataset.py
│   ├── nusc
│   ├── ddad
│   └── robodrive
│
...
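Before training, it can help to confirm that the data directories are where the tree above expects them. The following is a minimal sketch of such a check, not part of the repository: the helper name `missing_dirs` is hypothetical, and the directory list is read off the tree above on the assumption that maps, robodrive-v1.0-test and samples sit inside raw_data.

```python
from pathlib import Path

# Directories named in the tree above, relative to the repository root.
# Assumption: maps, robodrive-v1.0-test and samples live under raw_data.
EXPECTED_DIRS = [
    "data/nuscenes/depth",
    "data/nuscenes/raw_data/maps",
    "data/nuscenes/raw_data/robodrive-v1.0-test",
    "data/nuscenes/raw_data/samples",
    "data/robodrive",
]


def missing_dirs(repo_root="."):
    """Return the expected directories that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:")
        for d in missing:
            print("  " + d)
    else:
        print("Data layout looks complete.")
```

Run from the DINO-SD root, the script lists whichever directories are still absent.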

bash train.sh # baseline

bash train_dinosd.sh # dino-sd

bash eval.sh

bash eval_dinosd.sh # dino-sd

The generated results are saved in the folder structure shown below. Each results.pkl is a dictionary whose keys are sample_idx values and whose values are np.ndarray objects.

.
├── brightness
│   └── results.pkl
├── color_quant
│   └── results.pkl
├── contrast
│   └── results.pkl
...
├── snow
└── zoom_blur

Next, kindly merge all the .pkl files into a single pred.npz file.

You can merge the results using the following command:

python ./convert_submit.py

⚠️ Note: The prediction file MUST be named pred.npz.
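For readers who want to see what such a merge involves, here is a rough sketch that walks the per-corruption folders, loads each results.pkl, and writes a single compressed archive. It is not the repository's convert_submit.py: the function name `merge_results` and the `<corruption>_<sample_idx>` key scheme are assumptions made for illustration, and the evaluation server may expect a different internal layout, so the provided script remains the reference.

```python
import pickle
from pathlib import Path

import numpy as np


def merge_results(results_root, out_path="pred.npz"):
    """Collect every <corruption>/results.pkl under results_root into one .npz."""
    merged = {}
    for pkl_path in sorted(Path(results_root).glob("*/results.pkl")):
        corruption = pkl_path.parent.name  # e.g. "brightness", "snow"
        with open(pkl_path, "rb") as f:
            results = pickle.load(f)  # dict: sample_idx -> np.ndarray
        for sample_idx, depth in results.items():
            # Hypothetical key scheme; the server's expected keys may differ.
            merged[f"{corruption}_{sample_idx}"] = np.asarray(depth)

    # Write all arrays into a single compressed archive.
    np.savez_compressed(out_path, **merged)
    return sorted(merged)
```

Calling `merge_results("path/to/results")` from the directory that should hold the submission writes pred.npz there and returns the list of stored keys, which is handy for a quick sanity check before uploading.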

Finally, upload the compressed file to Track 4's evaluation server for model evaluation.

🚙 Hint: We provide the baseline submission file at this Google Drive link. Feel free to download it as a reference for how to correctly submit prediction files to the server.

Our code is based on SurroundDepth.

If you find this project useful in your research, please consider citing:

@article{mao2024dino,
  title={DINO-SD: Champion Solution for ICRA 2024 RoboDepth Challenge},
  author={Mao, Yifan and Li, Ming and Liu, Jian and Liu, Jiayang and Qin, Zihan and Chu, Chunxi and Xu, Jialei and Zhao, Wenbo and Jiang, Junjun and Liu, Xianming},
  journal={arXiv preprint arXiv:2405.17102},
  year={2024}
}