- General Information

- Export Documentation

- Contributions

- License

- Installation with Docker

- Installation on Kubernetes using Confluent For Kubernetes

- Apache Kafka® producers

- Apache Kafka® consumers

- Admin & Management

- Schema Registry

- Apache Kafka® Connect

- Unix commands Source Connector

- Custom SMT: composite key from json records.

- SMT: log records with AOP

- Postgres to Mongo

- HTTP Sink Connector example

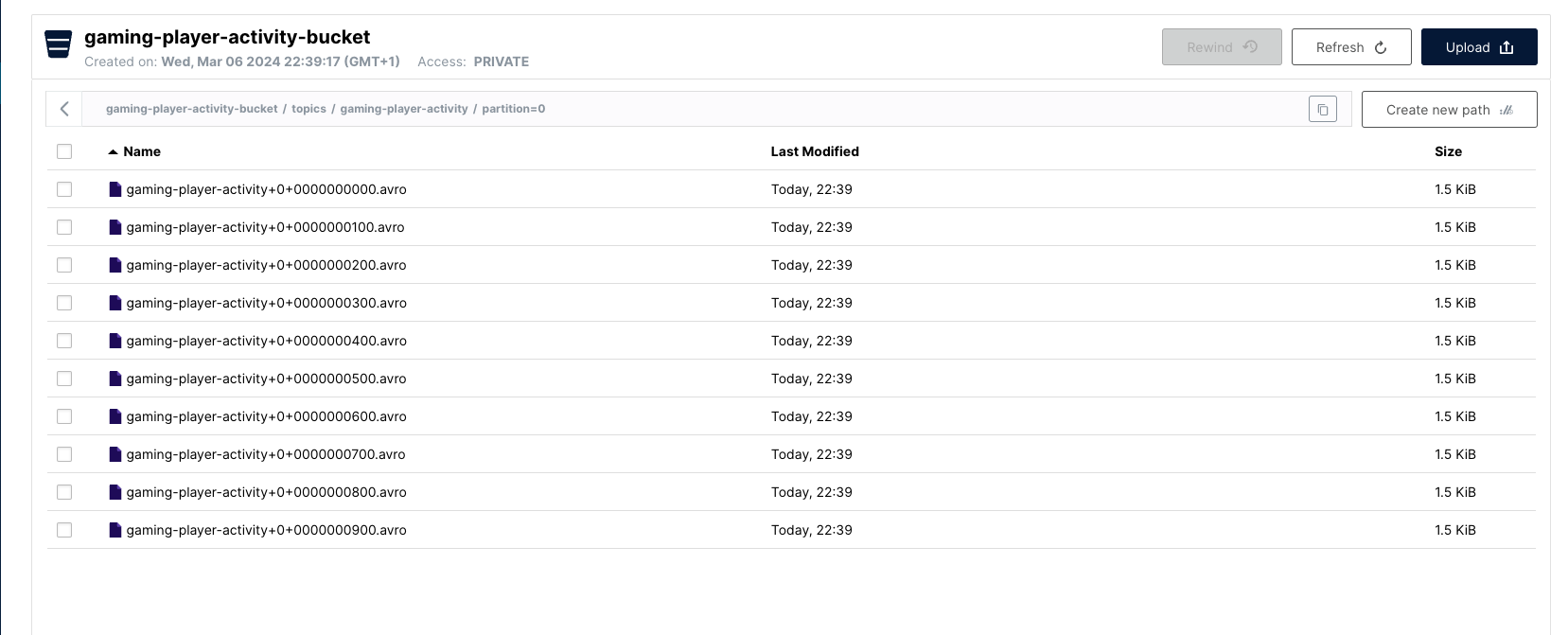

- S3 Sink Connector example

- SAP HANA Source Connector example

- Outbox Table: Event Router with SMT and JDBC Source Connector

- CDC with Debezium PostgreSQL Source Connector

- CDC with Debezium Informix Source Connector

- CDC with Debezium MongoDB Source Connector and Outbox Event Router

- Tasks distributions using a Datagen Source Connector

- Apache Kafka® Streams

- ksqlDB

- Flink SQL

- Transactions

- Frameworks

- Security

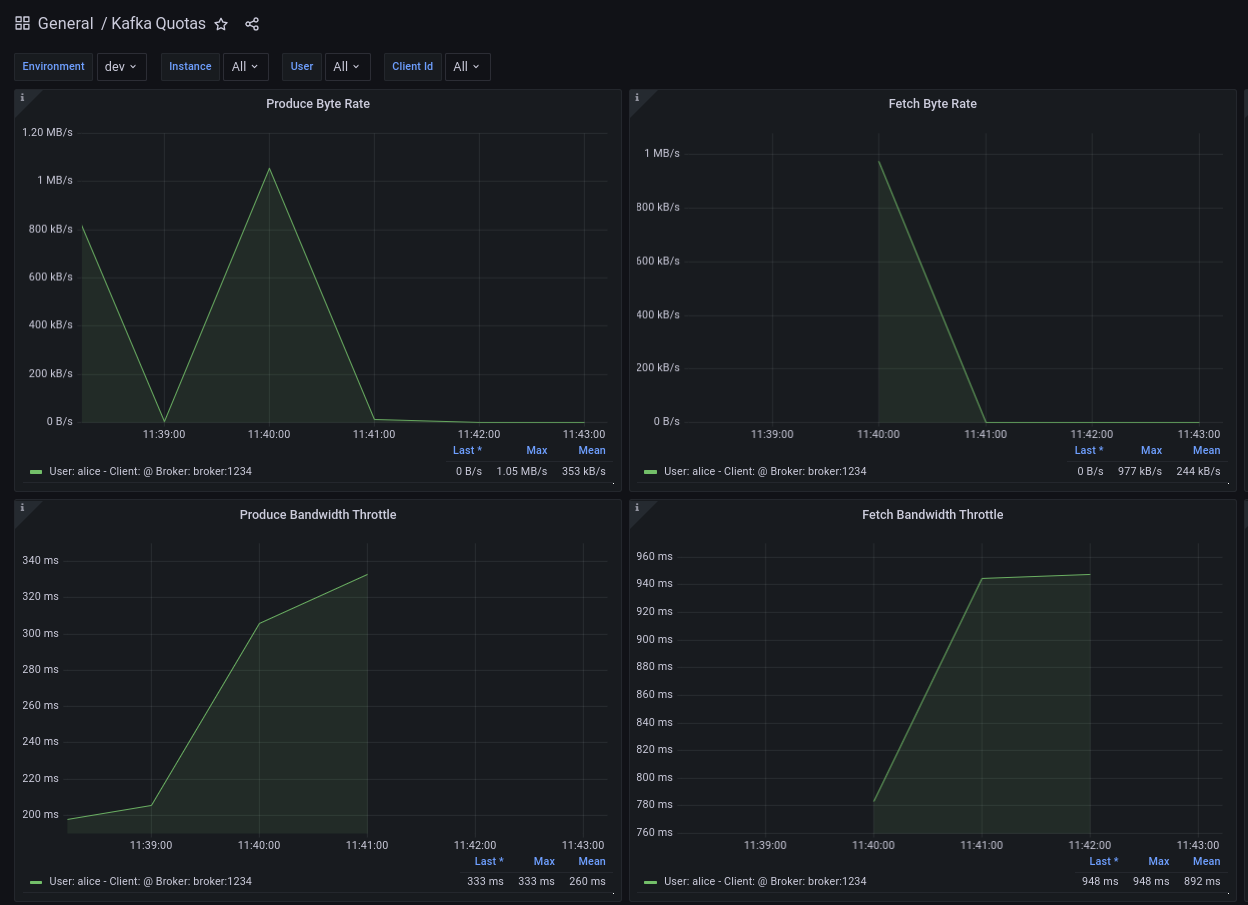

- Service Quota

- High Availability and Disaster Recovery

- Monitoring

- Observability

- Performance

- Proxy

- UDP Proxy

- Large objects

This repository contains various examples designed to demonstrate the functionality of Apache Kafka®. The examples are mostly extracted from more complex projects and should not be considered complete or production-ready without significant refactoring and testing.

Clone this repository:

git clone git@github.com:hifly81/kafka-examples.git

Install asciidoctor:

Linux:

gem install asciidoctor --pre

Mac:

brew install asciidoctor

Export documentation:

# PDF

asciidoctor-pdf README.adoc

# HTML

asciidoctor README.adoc

The official instructions for installing Docker on Linux/Mac/Windows are available at: https://docs.docker.com/engine/install/

List of software required on your local machine to run the examples:

- curl

- wget

- openssl

- Java SE 17 or 21

- keytool (bundled with the Java distribution)

- Apache Maven 3.x

- Go programming language (for the proxy example)

- Python (for the Python clients)

Default image versions for the required components are listed in the .env file.

To change the Docker image version of a specific component, update the .env file.

Apache Kafka® Docker images are downloaded from Docker Hub (apache/kafka) and are based on Apache Kafka® version 3.8.x.

To run a single-node cluster (KRaft controller and broker combined in one node) using Docker, start the docker-compose.yml file in the root directory (for example with docker compose up -d). It also contains a container with kcat:

- broker: apache/kafka, listening on port 9092

- kcat: confluentinc/cp-kcat

To run a cluster with 3 KRaft controllers and 3 brokers on separate Docker containers, use the docker-compose.yml file from the Apache Kafka GitHub repository: https://raw.githubusercontent.com/apache/kafka/trunk/docker/examples/jvm/cluster/isolated/plaintext/docker-compose.yml

- kafka-1: apache/kafka, listening on port 29092

- kafka-2: apache/kafka, listening on port 39092

- kafka-3: apache/kafka, listening on port 49092

List of software required on your local machine to run the examples:

- helm

- kubectl

- Minikube

- Confluent for Kubernetes operator (CFK): https://docs.confluent.io/operator/current/overview.html

The following instructions are for Arch Linux (also tested with Fedora).

Start Minikube with kvm2 driver (Linux):

minikube delete

minikube config set driver kvm2

Start Minikube with docker driver (Mac):

minikube delete

minikube config set driver docker

touch /tmp/config && export KUBECONFIG=/tmp/config

minikube start --memory 16384 --cpus 4

Create a k8s namespace named confluent:

kubectl create namespace confluent

kubectl config set-context --current --namespace confluent

Add the Confluent repository to helm:

helm repo add confluentinc https://packages.confluent.io/helm

helm repo update

Install the Confluent for Kubernetes operator with KRaft enabled:

helm upgrade --install confluent-operator confluentinc/confluent-for-kubernetes --set kRaftEnabled=true

Deploy 1 KRaft controller and 3 brokers:

kubectl apply -f confluent-for-kubernetes/k8s/confluent-platform-reducted.yaml

List pods:

kubectl get pods

NAME                                  READY   STATUS    RESTARTS   AGE
confluent-operator-665db446b7-j52rj   1/1     Running   0          6m35s
kafka-0                               1/1     Running   0          65s
kafka-1                               1/1     Running   0          65s
kafka-2                               1/1     Running   0          65s
kraftcontroller-0                     1/1     Running   0          5m5s

Verify events and pods:

watch -n 5 "kubectl get events --sort-by='.lastTimestamp'"

watch -n 5 "kubectl get pods"

Alternatively, you can deploy additional Confluent components: 1 controller, 3 brokers, 1 Connect, 1 ksqlDB, 1 Schema Registry and 1 REST Proxy:

kubectl apply -f confluent-for-kubernetes/k8s/confluent-platform.yaml

Create a topic:

kubectl exec --stdin --tty kafka-0 -- /bin/bash

kafka-topics --bootstrap-server localhost:9092 --create --topic test-1

exit

List topics:

kubectl exec --stdin --tty kafka-0 -- /bin/bash

kafka-topics --bootstrap-server localhost:9092 --list

exit

Describe a topic:

kubectl exec --stdin --tty kafka-0 -- /bin/bash

kafka-topics --bootstrap-server localhost:9092 --topic test-1 --describe

exit

Produce messages to the topic:

kubectl exec --stdin --tty kafka-0 -- /bin/bash

kafka-producer-perf-test --num-records 1000000 --record-size 1000 --throughput -1 --topic test-1 --producer-props bootstrap.servers=localhost:9092

exit

Consume messages from the topic:

kubectl exec --stdin --tty kafka-0 -- /bin/bash

kafka-console-consumer --bootstrap-server localhost:9092 --topic test-1 --from-beginning

exit

Shut down the Confluent components and delete the data:

kubectl delete -f confluent-for-kubernetes/k8s/topic.yml

kubectl delete -f confluent-for-kubernetes/k8s/producer.yml

kubectl delete -f confluent-for-kubernetes/k8s/confluent-platform.yaml

helm delete confluent-operator

Delete namespace confluent:

kubectl delete namespace confluent

Delete minikube:

minikube delete

Some implementations of Apache Kafka® producers.

Folder kafka-producer/

Execute tests:

cd kafka-producer

mvn clean test

It uses the org.apache.kafka.common.serialization.StringSerializer class for key and value serialization.

Create topic topic1:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic topic1 --replication-factor 1 --partitions 1

Produce on topic topic1:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.serializer.string.Runner"

It uses org.apache.kafka.common.serialization.StringSerializer for key serialization and org.hifly.kafka.demo.producer.serializer.json.CustomDataJsonSerializer for value serialization.

Create topic test_custom_data:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic test_custom_data --replication-factor 1 --partitions 1

Produce on topic test_custom_data:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.serializer.json.Runner"

It uses a custom partitioner for keys.

Messages with key Mark go to partition 1, with key Antony to partition 2 and with key Paul to partition 3.
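In Kafka, a custom partitioner is a class that implements `org.apache.kafka.clients.producer.Partitioner`. Its `partition(...)` method returns the target partition index.

The routing rule above can be sketched without the client library as a key lookup. This minimal illustration assumes a caller-supplied key-to-partition table and a hash fallback for any other key. A few caveats:

- `KeyTablePartitioning` is a hypothetical name, not the repository's class.
- Kafka's default partitioner hashes keys with murmur2, not `String.hashCode`.
- Partition indexes are zero-based, so every mapped index must be lower than the topic's partition count.

```java
import java.util.Map;

// Illustrative key-based routing, independent of the Kafka client library.
// The key->partition table is a hypothetical input; a real custom partitioner would
// implement org.apache.kafka.clients.producer.Partitioner and do this in partition(...).
final class KeyTablePartitioning {
    private final Map<String, Integer> table;

    KeyTablePartitioning(Map<String, Integer> table) {
        this.table = Map.copyOf(table);
    }

    int partitionFor(String key, int numPartitions) {
        Integer fixed = table.get(key);
        if (fixed != null) {
            // Partitions are numbered 0..numPartitions-1.
            if (fixed < 0 || fixed >= numPartitions) {
                throw new IllegalArgumentException("Partition " + fixed + " does not exist for key " + key);
            }
            return fixed;
        }
        // Keys outside the table: spread by hash (floorMod keeps the result non-negative).
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        KeyTablePartitioning partitioning = new KeyTablePartitioning(Map.of("Mark", 1));
        System.out.println(partitioning.partitionFor("Mark", 3));
    }
}
```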

Create topic demo-test with 3 partitions:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic demo-test --replication-factor 1 --partitions 3

Produce on topic demo-test:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.partitioner.custom.Runner"

By default (message.timestamp.type=CreateTime), the record timestamp is the one set by the producer when the record is created. The timestamp is part of the record itself, not a header.
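With print.timestamp=true, the console consumer prefixes each value with the timestamp type and the epoch milliseconds, as in the logs below.

The following minimal sketch turns that prefix into a readable `Instant`, so that CreateTime and LogAppendTime values are easier to compare. It assumes the `<type>:<millis>` prefix is separated from the value by whitespace. `TimestampLine` is a hypothetical helper, not part of the repository.

```java
import java.time.Instant;

// Hypothetical helper, not part of the repository: parses one line printed by
// kafka-console-consumer with --property print.timestamp=true, assuming the
// "<type>:<epoch-millis><whitespace><value>" layout shown in the logs of this section.
record TimestampLine(String type, Instant timestamp, String value) {

    static TimestampLine parse(String line) {
        // Split the "<type>:<millis>" prefix from the value at the first whitespace character.
        String[] parts = line.split("\\s", 2);
        int colon = parts[0].indexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("No timestamp prefix in: " + line);
        }
        String type = parts[0].substring(0, colon); // CreateTime or LogAppendTime
        long millis = Long.parseLong(parts[0].substring(colon + 1));
        String value = parts.length > 1 ? parts[1] : "";
        return new TimestampLine(type, Instant.ofEpochMilli(millis), value);
    }

    public static void main(String[] args) {
        TimestampLine line = parse("CreateTime:1697359570614 YQHHNEBSEPDNSEIF");
        // Prints the timestamp type and the timestamp as an ISO-8601 UTC instant.
        System.out.println(line.type() + " " + line.timestamp());
    }
}
```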

Create topic topic1:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic topic1 --replication-factor 1 --partitions 1

Consume from topic1 and print out the message timestamp:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic topic1 --bootstrap-server broker:9092 --from-beginning --property print.timestamp=true

Produce records on topic1:

docker exec broker /opt/kafka/bin/kafka-producer-perf-test.sh --topic topic1 --num-records 1000 --record-size 100 --throughput -1 --producer-props bootstrap.servers=broker:9092

Check consumer log for message timestamp:

CreateTime:1697359570614 YQHHNEBSEPDNSEIFGAMSUJXKOLTXSPLGHDIOYZJFNIDSPWHZMKVJAXDBZFCOXYKYRJOGYKDESSJMOIIOWVKYUAVWJLXSEPPFEILV

CreateTime:1697359570621 BASHCGRHSYGIFSYLVGRXCDVABWWTRQZTMMPBAXGHEPHTASSORYKGVPFGQYJKINSZUJLXQUUDVALUSBFRSXNQHSDFDBAKQZZNTYXF

CreateTime:1697359570621 HYGDPYGNRETYAXIXXYQKMKURDSJYIZNEDAHVIVHCJAPGOBQLHUZTKIWTVFEHVYPNGHIDSERMARFXCPYFEPQMFDOTDPWNKMYRMFIA

CreateTime:1697359570621 BIQAWWOIFIAKNYFEPTPMIXPQAXFEIKUFFXIDHILBPCBTHWDRMALHFNDCRHAYVLLMRCKJIPNPKGWCIWQCHNHSFSCTYSAKSLVZCCAI

With message.timestamp.type=LogAppendTime, the broker overwrites the producer timestamp with its own current time when it appends the record to the log.

Create topic topic2 with message.timestamp.type=LogAppendTime:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic topic2 --replication-factor 1 --partitions 1 --config message.timestamp.type=LogAppendTime

Consume from topic2 and print out the message timestamp:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic topic2 --bootstrap-server broker:9092 --from-beginning --property print.timestamp=true

Produce records on topic2:

docker exec broker /opt/kafka/bin/kafka-producer-perf-test.sh --topic topic2 --num-records 1000 --record-size 100 --throughput -1 --producer-props bootstrap.servers=broker:9092

Check consumer log for message timestamp:

LogAppendTime:1697359857981 YQHHNEBSEPDNSEIFGAMSUJXKOLTXSPLGHDIOYZJFNIDSPWHZMKVJAXDBZFCOXYKYRJOGYKDESSJMOIIOWVKYUAVWJLXSEPPFEILV

LogAppendTime:1697359857981 BASHCGRHSYGIFSYLVGRXCDVABWWTRQZTMMPBAXGHEPHTASSORYKGVPFGQYJKINSZUJLXQUUDVALUSBFRSXNQHSDFDBAKQZZNTYXF

LogAppendTime:1697359857981 HYGDPYGNRETYAXIXXYQKMKURDSJYIZNEDAHVIVHCJAPGOBQLHUZTKIWTVFEHVYPNGHIDSERMARFXCPYFEPQMFDOTDPWNKMYRMFIA

LogAppendTime:1697359857981 BIQAWWOIFIAKNYFEPTPMIXPQAXFEIKUFFXIDHILBPCBTHWDRMALHFNDCRHAYVLLMRCKJIPNPKGWCIWQCHNHSFSCTYSAKSLVZCCAI

Folder interceptors/

This example shows how to create a custom producer interceptor. The Java class CreditCardProducerInterceptor masks sensitive information (the credit card number) in the producer record.
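A producer interceptor's `onSend(ProducerRecord)` method is called before the key and value are serialized. It returns the record that is actually sent, which is where masking fits.

The masking step itself is plain string processing. This minimal sketch assumes two things:

- Card numbers appear as 13 to 19 digits, optionally separated by spaces or dashes.
- The mask is the fixed XXXXXX seen in the verify step below.

`CardMasker` is hypothetical, not the repository's CreditCardProducerInterceptor.

```java
import java.util.regex.Pattern;

// Hypothetical masking helper; the card-number pattern is an assumption
// (13-19 digits, optionally separated by single spaces or dashes).
final class CardMasker {
    private static final Pattern CARD = Pattern.compile("\\b(?:\\d[ -]?){12,18}\\d\\b");
    private static final String MASK = "XXXXXX";

    static String mask(String value) {
        if (value == null) {
            return null; // records with a null value pass through unchanged
        }
        return CARD.matcher(value).replaceAll(MASK);
    }

    public static void main(String[] args) {
        System.out.println(mask("card=4111 1111 1111 1111"));
    }
}
```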

Compile and package:

cd interceptors

mvn clean package

Run a consumer:

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.interceptor.consumer.Runner"

Run a producer:

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.interceptor.producer.Runner"

Verify output:

record is:XXXXXX

Topic: test_custom_data - Partition: 0 - Offset: 1

Folder kafka-python-producer/

Install the confluent-kafka Python library:

pip install confluent-kafka

or:

python3 -m pip install confluent-kafka

Create topic kafka-topic:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic kafka-topic --replication-factor 1 --partitions 1

Run producer:

cd kafka-python-producer

python producer.py

Implementation of a consumer that can be used with different deserializer classes (for key and value).

Folder kafka-consumer/

Java class ConsumerInstance can be customized with:

- clientId (string)

- groupId (string)

- topics (comma-separated string)

- key deserializer class (string)

- value deserializer class (string)

- partition assignment strategy (org.apache.kafka.clients.consumer.RangeAssignor|org.apache.kafka.clients.consumer.RoundRobinAssignor|org.apache.kafka.clients.consumer.StickyAssignor|org.apache.kafka.clients.consumer.CooperativeStickyAssignor)

- isolation.level (read_uncommitted|read_committed)

- poll timeout (ms)

- consume duration (ms)

- autoCommit (true|false)

- commit sync (true|false)

- subscribe mode (true|false)
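Several of these options correspond to standard consumer configuration keys. The minimal sketch below uses plain `java.util.Properties` and assumes the mapping shown in the code. `ConsumerConfigSketch` is hypothetical and is not the repository's ConsumerInstance API.

Poll timeout, consume duration, commit sync and subscribe mode are not configuration keys. Presumably they control how the application calls `poll`, `commitSync`/`commitAsync` and `subscribe`/`assign`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical helper, not the repository's ConsumerInstance: shows which standard
// consumer configuration keys the options above most likely correspond to.
final class ConsumerConfigSketch {

    static Properties consumerConfig(String bootstrapServers, String clientId, String groupId,
                                     String keyDeserializer, String valueDeserializer,
                                     String assignmentStrategy, String isolationLevel,
                                     boolean autoCommit) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("client.id", clientId);
        props.setProperty("group.id", groupId);
        props.setProperty("key.deserializer", keyDeserializer);
        props.setProperty("value.deserializer", valueDeserializer);
        // Fully qualified assignor class, e.g. org.apache.kafka.clients.consumer.RangeAssignor
        props.setProperty("partition.assignment.strategy", assignmentStrategy);
        // read_uncommitted (the default) or read_committed
        props.setProperty("isolation.level", isolationLevel);
        props.setProperty("enable.auto.commit", Boolean.toString(autoCommit));
        return props;
    }

    // Topics are passed as one comma-separated string, e.g. "users,users_clicks".
    static List<String> parseTopics(String csv) {
        return Arrays.stream(csv.split(","))
                .map(String::trim)
                .filter(topic -> !topic.isEmpty())
                .toList();
    }

    public static void main(String[] args) {
        String deserializer = "org.apache.kafka.common.serialization.StringDeserializer";
        Properties props = consumerConfig("localhost:9092", "client-1", "group-1", deserializer, deserializer,
                "org.apache.kafka.clients.consumer.RoundRobinAssignor", "read_committed", true);
        System.out.println(parseTopics("users,users_clicks") + " " + props);
    }
}
```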

Topics can be passed as argument 1 of the main program:

-Dexec.args="users,users_clicks"

Partition assignment strategy can be passed as argument 2 of the main program:

-Dexec.args="users,users_clicks org.apache.kafka.clients.consumer.RoundRobinAssignor"

Group id can be passed as argument 3 of the main program:

-Dexec.args="users,users_clicks org.apache.kafka.clients.consumer.RoundRobinAssignor group-1"

Execute tests:

cd kafka-consumer

mvn clean test

It uses org.apache.kafka.common.serialization.StringDeserializer for key and value deserialization. The default topic is topic1.

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.core.Runner"

Send messages to topic1:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --property "parse.key=true" --property "key.separator=:"

> Frank:1

Create 2 topics, users and users_clicks, with the same number of partitions:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic users --replication-factor 1 --partitions 3

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic users_clicks --replication-factor 1 --partitions 3

Run 2 consumer instances (in 2 different shells/terminals) belonging to the same consumer group and subscribed to the users and users_clicks topics. The consumers use org.apache.kafka.clients.consumer.RangeAssignor to distribute partition ownership.

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.core.Runner" -Dexec.args="users,users_clicks org.apache.kafka.clients.consumer.RangeAssignor range-group-app"

Send messages to both topics using the same key (Frank):

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic users --property "parse.key=true" --property "key.separator=:"

> Frank:1

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic users_clicks --property "parse.key=true" --property "key.separator=:"

> Frank:1

Verify that the same consumer instance reads both messages.

Group id group-XX - Consumer id: consumer-group-XX-1-421db3e2-6501-45b1-acfd-275ce8d18368 - Topic: users - Partition: 1 - Offset: 0 - Key: frank - Value: 1

Group id group-XX - Consumer id: consumer-group-XX-1-421db3e2-6501-45b1-acfd-275ce8d18368 - Topic: users_clicks - Partition: 1 - Offset: 0 - Key: frank - Value: 1

Create 2 topics, users and users_clicks, with the same number of partitions:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic users --replication-factor 1 --partitions 3

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic users_clicks --replication-factor 1 --partitions 3

Run 2 consumer instances (in 2 different shells/terminals) belonging to the same consumer group and subscribed to the users and users_clicks topics. The consumers use org.apache.kafka.clients.consumer.RoundRobinAssignor to distribute partition ownership.

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.core.Runner" -Dexec.args="users,users_clicks org.apache.kafka.clients.consumer.RoundRobinAssignor rr-group-app"

Send messages to both topics using the same key (Frank):

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic users --property "parse.key=true" --property "key.separator=:"

> Frank:1

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic users_clicks --property "parse.key=true" --property "key.separator=:"

> Frank:1

Verify that the messages are read by different consumer instances.
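The different outcomes of the two experiments can be reproduced offline. The sketch below is a simplified version of both algorithms. It assumes every consumer subscribes to the same topics and that members are ordered by name; the real assignors order by member id and handle more cases.

- RangeAssignor splits each topic's partitions into contiguous ranges, independently per topic.
- RoundRobinAssignor deals all topic-partitions out one at a time.

`AssignorSketch` is hypothetical and is not the Kafka client code.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified illustration of the two assignment strategies; not the Kafka client classes.
// Assumes every consumer subscribes to every topic; consumers are ordered by name.
final class AssignorSketch {

    // RangeAssignor idea: per topic, each consumer gets a contiguous block of partitions.
    static Map<String, List<String>> range(List<String> consumers, Map<String, Integer> topics) {
        List<String> members = consumers.stream().sorted().toList();
        Map<String, List<String>> assignment = emptyAssignment(members);
        for (Map.Entry<String, Integer> topic : new TreeMap<>(topics).entrySet()) {
            int perMember = topic.getValue() / members.size();
            int extra = topic.getValue() % members.size(); // the first `extra` members get one more
            for (int i = 0; i < members.size(); i++) {
                int start = i * perMember + Math.min(i, extra);
                int length = perMember + (i < extra ? 1 : 0);
                for (int p = start; p < start + length; p++) {
                    assignment.get(members.get(i)).add(topic.getKey() + "-" + p);
                }
            }
        }
        return assignment;
    }

    // RoundRobinAssignor idea: list all topic-partitions in order and deal them out one by one.
    static Map<String, List<String>> roundRobin(List<String> consumers, Map<String, Integer> topics) {
        List<String> members = consumers.stream().sorted().toList();
        Map<String, List<String>> assignment = emptyAssignment(members);
        int next = 0;
        for (Map.Entry<String, Integer> topic : new TreeMap<>(topics).entrySet()) {
            for (int p = 0; p < topic.getValue(); p++) {
                assignment.get(members.get(next % members.size())).add(topic.getKey() + "-" + p);
                next++;
            }
        }
        return assignment;
    }

    private static Map<String, List<String>> emptyAssignment(List<String> members) {
        Map<String, List<String>> assignment = new LinkedHashMap<>();
        members.forEach(member -> assignment.put(member, new ArrayList<>()));
        return assignment;
    }

    public static void main(String[] args) {
        List<String> consumers = List.of("consumer-a", "consumer-b");
        Map<String, Integer> topics = Map.of("users", 3, "users_clicks", 3);
        System.out.println("range:       " + range(consumers, topics));
        System.out.println("round robin: " + roundRobin(consumers, topics));
    }
}
```

With two consumers and two 3-partition topics, the range strategy gives consumer-a partition 1 of both topics. Under round robin, users-1 goes to consumer-b and users_clicks-1 goes to consumer-a. This mirrors the two experiments above, where the key Frank landed on partition 1 of both topics.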

This example shows how to configure each consumer instance with a unique group instance id (group.instance.id), which makes it a static member of the consumer group.

When a static member is shut down and restarted within session.timeout.ms, it gets back the same partitions and no rebalance is triggered.

Create topic topic1 with 12 partitions:

docker exec broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic topic1 --replication-factor 1 --partitions 12

Run 3 different consumer instances (from 3 different terminals) belonging to the same consumer group:

member1:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.staticmembership.Runner" -Dexec.args="consumer-member1.properties"

member2:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.staticmembership.Runner" -Dexec.args="consumer-member2.properties"

member3:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.staticmembership.Runner" -Dexec.args="consumer-member3.properties"

Run a producer perf test to send messages to topic1:

docker exec -it broker /opt/kafka/bin/kafka-producer-perf-test.sh --topic topic1 --num-records 10000 --throughput -1 --record-size 2000 --producer-props bootstrap.servers=broker:9092

Consumers will start reading messages from partitions (e.g.):

- member1 (0,1,2,3)

- member2 (4,5,6,7)

- member3 (8,9,10,11)

Shut down the consumer instances (CTRL+C) and restart them. Verify that no rebalance happens and that each consumer keeps reading from the same partitions.
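Static membership is enabled by giving every member of the group a stable, unique `group.instance.id`. While a static member is away, the group coordinator keeps its assignment. It only rebalances if the member does not come back within `session.timeout.ms`.

Below is a minimal sketch of what a per-member configuration could contain. The bootstrap address, group name and timeout are hypothetical values; the repository's consumer-memberN.properties files may differ.

```java
import java.io.IOException;
import java.util.Properties;

// Hypothetical per-member configuration: bootstrap address, group name and timeout are assumptions.
final class StaticMemberConfig {

    static Properties forMember(int memberNumber) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("group.id", "static-group");
        // Stable, unique id per member: this is what turns the member into a static member.
        props.setProperty("group.instance.id", "member" + memberNumber);
        // A restart shorter than this timeout does not trigger a rebalance.
        props.setProperty("session.timeout.ms", "60000");
        props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }

    public static void main(String[] args) throws IOException {
        // Writes the configuration in .properties format to standard output.
        forMember(1).store(System.out, "member1");
    }
}
```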

This example shows how consumers can read messages from the closest replica, even if it is not the partition leader. This fetch-from-follower feature is available since Apache Kafka® 2.4.
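Follower fetching relies on rack ids on both sides:

- Each broker declares its rack with `broker.rack` and enables a rack-aware replica selector through `replica.selector.class`.
- Each consumer declares its own location with `client.rack`.

Below is a minimal sketch of those settings as plain `java.util.Properties`, assuming the rack names dc1 to dc3 used in this example. How scripts/bootstrap-racks.sh and the consumer runner actually apply them is not shown here and may differ.

```java
import java.util.Properties;

// Illustrative settings for fetch-from-follower; rack names are assumptions taken from this example.
final class RackAwareConfig {

    // Broker side: rack id plus the built-in rack-aware selector
    // (without a selector, consumers always fetch from the partition leader).
    static Properties brokerOverrides(String rack) {
        Properties props = new Properties();
        props.setProperty("broker.rack", rack);
        props.setProperty("replica.selector.class", "org.apache.kafka.common.replica.RackAwareReplicaSelector");
        return props;
    }

    // Consumer side: client.rack should match the broker.rack of the replica it should read from.
    static Properties consumerOverrides(String rack) {
        Properties props = new Properties();
        props.setProperty("client.rack", rack);
        return props;
    }

    public static void main(String[] args) {
        System.out.println(brokerOverrides("dc2"));
        System.out.println(consumerOverrides("dc2"));
    }
}
```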

Start a cluster with 3 brokers on 3 different racks, dc1, dc2 and dc3:

scripts/bootstrap-racks.sh

Create topic topic-regional and assign partition leadership only to brokers 1 and 3 (dc1 and dc3):

docker exec broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic topic-regional --replication-factor 3 --partitions 3

docker exec -it broker /opt/kafka/bin/kafka-reassign-partitions.sh --bootstrap-server broker:9092 --reassignment-json-file /tmp/assignment.json --execute

docker exec -it broker /opt/kafka/bin/kafka-leader-election.sh --bootstrap-server broker:9092 --topic topic-regional --election-type PREFERRED --partition 0

docker exec -it broker /opt/kafka/bin/kafka-leader-election.sh --bootstrap-server broker:9092 --topic topic-regional --election-type PREFERRED --partition 1

docker exec -it broker /opt/kafka/bin/kafka-leader-election.sh --bootstrap-server broker:9092 --topic topic-regional --election-type PREFERRED --partition 2

Verify partitions with the topic describe command:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --topic topic-regional --describe

Topic: topic-regional TopicId: p-sy0qiQTtSTLTJSG7s7Ew PartitionCount: 3 ReplicationFactor: 3 Configs:
Topic: topic-regional Partition: 0 Leader: 1 Replicas: 1,2,3 Isr: 2,3,1 Offline:
Topic: topic-regional Partition: 1 Leader: 3 Replicas: 3,2,1 Isr: 3,1,2 Offline:
Topic: topic-regional Partition: 2 Leader: 1 Replicas: 1,3,2 Isr: 1,2,3 Offline:

Run a consumer that reads messages from broker 2 on rack dc2:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.rack.Runner"

Produce 50 messages:

docker exec -it broker /opt/kafka/bin/kafka-producer-perf-test.sh --topic topic-regional --num-records 50 --throughput 10 --record-size 1 --producer-props bootstrap.servers=broker:9092

Teardown:

scripts/tear-down-racks.sh

Folder kafka-consumer-retry-topics/

This pattern can be implemented on the consumer side to handle record-processing errors without blocking consumption of the input topic.

- The consumer processes records and commits offsets automatically (auto-commit).

- If a record cannot be processed, it is sent to a retry topic, as long as the retries are not exhausted. Here the error condition is simply the presence of a message header named ERROR.

- When the retries are exhausted, the record is sent to a DLQ topic.

- The number of retries is set at consumer instance level.
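The routing decision can be isolated from the Kafka plumbing. This minimal sketch assumes a per-key retry budget kept in memory and treats the ERROR header as the only failure signal. `RetryRouter` and its `Route` enum are hypothetical, and the real consumer also has to produce the record to retry-topic or dlq-topic.

With a budget of 3, the first three failures of a key go to the retry topic and the fourth goes to the DLQ. This matches the routing in the consumer log further down.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical routing logic for the retry/DLQ pattern; producing to the topics is left out.
final class RetryRouter {
    enum Route { PROCESSED, RETRY, DLQ }

    private final int maxRetries;
    private final Map<String, Integer> retriesLeft = new HashMap<>();

    RetryRouter(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    // headerKeys: names of the record headers; "ERROR" marks a record that fails processing.
    Route route(String key, Set<String> headerKeys) {
        if (!headerKeys.contains("ERROR")) {
            return Route.PROCESSED;
        }
        int left = retriesLeft.getOrDefault(key, maxRetries);
        if (left > 0) {
            retriesLeft.put(key, left - 1); // consume one retry for this key
            return Route.RETRY;
        }
        return Route.DLQ; // budget exhausted
    }

    public static void main(String[] args) {
        RetryRouter router = new RetryRouter(3);
        System.out.println(router.route("alice", Set.of()));
        for (int i = 0; i < 4; i++) {
            System.out.println(router.route("alice", Set.of("ERROR")));
        }
    }
}
```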

Create topics retry-topic and dlq-topic:

docker exec broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic retry-topic --replication-factor 1 --partitions 1

docker exec broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic dlq-topic --replication-factor 1 --partitions 1

Run the consumer that manages the retry topics:

cd kafka-consumer-retry-topics

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.retry.ConsumerRetries"

Send records:

docker exec kcat bash -c "echo 'alice,{"col_foo":1}'|kcat -b broker:9092 -t input-topic -P -K ,"

docker exec kcat bash -c "echo 'alice,{"col_foo":1}'|kcat -b broker:9092 -t input-topic -P -H ERROR=xxxxx -K ,"

docker exec kcat bash -c "echo 'alice,{"col_foo":1}'|kcat -b broker:9092 -t input-topic -P -H ERROR=xxxxx -K ,"

docker exec kcat bash -c "echo 'alice,{"col_foo":1}'|kcat -b broker:9092 -t input-topic -P -H ERROR=xxxxx -K ,"

docker exec kcat bash -c "echo 'alice,{"col_foo":1}'|kcat -b broker:9092 -t input-topic -P -H ERROR=xxxxx -K ,"

Verify in the consumer log that messages are sent to the retry and DLQ topics:

Group id c9a19a62-0284-4251-be22-5d691243646a - Consumer id: consumer-c9a19a62-0284-4251-be22-5d691243646a-1-86fb972e-b5c8-4621-8464-9c1a747a920b - Topic: input-topic - Partition: 0 - Offset: 0 - Key: alice - Value: {col_foo:1}

Group id c9a19a62-0284-4251-be22-5d691243646a - Consumer id: consumer-c9a19a62-0284-4251-be22-5d691243646a-1-86fb972e-b5c8-4621-8464-9c1a747a920b - Topic: input-topic - Partition: 0 - Offset: 1 - Key: alice - Value: {col_foo:1}

Error message detected: number of retries 3 left for key alice

send to RETRY topic: retry-topic

Group id c9a19a62-0284-4251-be22-5d691243646a - Consumer id: consumer-c9a19a62-0284-4251-be22-5d691243646a-1-86fb972e-b5c8-4621-8464-9c1a747a920b - Topic: input-topic - Partition: 0 - Offset: 2 - Key: alice - Value: {col_foo:1}

Error message detected: number of retries 2 left for key alice

send to RETRY topic: retry-topic

Group id c9a19a62-0284-4251-be22-5d691243646a - Consumer id: consumer-c9a19a62-0284-4251-be22-5d691243646a-1-86fb972e-b5c8-4621-8464-9c1a747a920b - Topic: input-topic - Partition: 0 - Offset: 3 - Key: alice - Value: {col_foo:1}

Error message detected: number of retries 1 left for key alice

send to RETRY topic: retry-topic

Group id c9a19a62-0284-4251-be22-5d691243646a - Consumer id: consumer-c9a19a62-0284-4251-be22-5d691243646a-1-86fb972e-b5c8-4621-8464-9c1a747a920b - Topic: input-topic - Partition: 0 - Offset: 4 - Key: alice - Value: {col_foo:1}

Error message detected: number of retries 0 left for key alice

number of retries exhausted, send to DLQ topic: dlq-topic

Folder interceptors/

This example shows how to create a custom consumer interceptor. The Java class CreditCardConsumerInterceptor intercepts records just before they are returned by poll(), after deserialization, and prints their headers.

Run a consumer:

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.interceptor.consumer.Runner"

Run a producer:

cd interceptors

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.interceptor.producer.Runner"

Verify output:

record headers:RecordHeaders(headers = [], isReadOnly = false)

Group id consumer-interceptor-g2 - Consumer id: consumer-consumer-interceptor-g2-1-0e20b2b6-3269-4bc5-bfdb-ca787cf68aa8 - Topic: test_custom_data - Partition: 0 - Offset: 0 - Key: null - Value: XXXXXX

Consumer 23d06b51-5780-4efc-9c33-a93b3caa3b48 - partition 0 - lastOffset 1

Folder kafka-python-consumer/

Install the confluent-kafka Python library:

pip install confluent-kafka

Create topic kafka-topic:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic kafka-topic --replication-factor 1 --partitions 1

Run producer:

cd kafka-python-producer

python producer.py

Run consumer:

cd kafka-python-consumer

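python consumer.py

The Apache Kafka® CLI tools are located in the $KAFKA_HOME/bin directory.

The provided Docker images already ship with the CLI tools. In the apache/kafka image they are in /opt/kafka/bin and carry the .sh suffix (e.g. kafka-topics.sh).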

- kafka-acls - manage ACLs

- kafka-topics - create, delete, describe, or change a topic

- kafka-configs - create, delete, describe, or change cluster settings

- kafka-consumer-groups - manage consumer groups

- kafka-console-consumer - read data from topics and write it to standard output

- kafka-console-producer - produce data to topics

- kafka-consumer-perf-test - consume high volumes of data through your cluster

- kafka-producer-perf-test - produce high volumes of data through your cluster

- kafka-avro-console-producer - produce Avro data to topics with a schema (Confluent installation only)

- kafka-avro-console-consumer - read Avro data from topics with a schema and write it to standard output (Confluent installation only)

Create a topic cars with retention for old segments set to 5 minutes and size of segments set to 100 KB.

Be aware that log.retention.check.interval.ms defaults to 5 minutes. This is how often, in milliseconds, the broker checks whether any log segment is eligible for deletion.

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic cars --replication-factor 1 --partitions 1 --config segment.bytes=100000 --config segment.ms=604800000 --config retention.ms=300000 --config retention.bytes=-1

Launch a producer performance session:

docker exec -it broker /opt/kafka/bin/kafka-producer-perf-test.sh --topic cars --num-records 99999999999999 --throughput -1 --record-size 1 --producer-props bootstrap.servers=broker:9092

Check the log directory of the cars topic and wait for the old segments to be deleted (5 minutes plus up to one retention check interval):

docker exec -it broker watch ls -ltr /tmp/kraft-combined-logs/cars-0/

Folder admin-client

It uses org.apache.kafka.clients.admin.AdminClient to call the Admin API.

Operations currently implemented:

- list cluster nodes

- list topics

cd admin-client

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.admin.AdminClientWrapper" -Dexec.args="admin.properties"

Folder compression/

This example shows that messages with different compression.type values can be sent to the same topic, and that the same consumer instance can read all of them.

Compression types supported by the producer:

- none (no compression)

- gzip

- snappy

- lz4

- zstd
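The producer compresses whole record batches. With batching disabled, each tiny record is compressed on its own, and the codec overhead can outweigh any savings.

The rough illustration below uses the JDK's GZIP stream as a stand-in for the producer's gzip codec. This is an assumption: Kafka's own batch format and the resulting sizes differ. `BatchCompressionDemo` is a hypothetical name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

// Rough illustration with the JDK's GZIP stream; Kafka's own batch format and codecs are not used here.
final class BatchCompressionDemo {

    // Returns the size in bytes of the gzip-compressed input (header and trailer included).
    static int gzipSize(byte[] data) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(out)) {
            gzip.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected with an in-memory stream
        }
        return out.size(); // read after close(), so the gzip trailer has been written
    }

    public static void main(String[] args) {
        // One tiny record compressed on its own, as with batching disabled.
        byte[] single = "gzip".getBytes(StandardCharsets.UTF_8);
        // Many similar records compressed together, as in a full batch.
        byte[] batch = "{\"model\":\"m1\",\"brand\":\"b1\"}".repeat(1000).getBytes(StandardCharsets.UTF_8);
        System.out.printf("single: %d raw -> %d gzipped%n", single.length, gzipSize(single));
        System.out.printf("batch:  %d raw -> %d gzipped%n", batch.length, gzipSize(batch));
    }
}
```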

Send messages with different compression types and with batching disabled:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --producer.config compression/client-none.properties --property "parse.key=true" --property "key.separator=:"

0:none

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --producer.config compression/client-gzip.properties --property "parse.key=true" --property "key.separator=:"

1:gzip

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --producer.config compression/client-snappy.properties --property "parse.key=true" --property "key.separator=:"

2:snappy

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --producer.config compression/client-lz4.properties --property "parse.key=true" --property "key.separator=:"

3:lz4

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --broker-list broker:9092 --topic topic1 --producer.config compression/client-zstd.properties --property "parse.key=true" --property "key.separator=:"

4:zstd

Run a consumer on the topic1 topic:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic topic1 --bootstrap-server broker:9092 --from-beginning

none

gzip

snappy

lz4

zstd

It uses io.confluent.kafka.serializers.KafkaAvroSerializer as the value serializer, sending an Avro GenericRecord.

Confluent Schema Registry is needed to run the example.

More Info at: https://github.com/confluentinc/schema-registry

Avro schema car.avsc:

{
  "type": "record",
  "name": "Car",
  "namespace": "org.hifly.kafka.demo.producer.serializer.avro",
  "fields": [
    {
      "name": "model",
      "type": "string"
    },
    {
      "name": "brand",
      "type": "string"
    }
  ]
}

Start Confluent Schema Registry:

scripts/bootstrap-cflt-schema-registry.sh

Consume messages:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.deserializer.avro.Runner" -Dexec.args="CONFLUENT"

Produce messages:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.serializer.avro.Runner" -Dexec.args="CONFLUENT"

Teardown:

scripts/tear-down-cflt-schema-registry.sh

It uses io.apicurio.registry.utils.serde.AvroKafkaSerializer as the value serializer, sending an Avro GenericRecord.

Apicurio Schema Registry is needed to run the example.

Avro schema car.avsc:

{
  "type": "record",
  "name": "Car",
  "namespace": "org.hifly.kafka.demo.producer.serializer.avro",
  "fields": [
    {
      "name": "model",
      "type": "string"
    },
    {
      "name": "brand",
      "type": "string"
    }
  ]
}

Start Apicurio:

scripts/bootstrap-apicurio.sh

Consume messages:

cd kafka-consumer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.consumer.deserializer.avro.Runner" -Dexec.args="APICURIO"

Produce messages:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.serializer.avro.Runner" -Dexec.args="APICURIO"

Teardown:

scripts/tear-down-apicurio.sh

It uses com.hortonworks.registries.schemaregistry.serdes.avro.kafka.KafkaAvroSerializer as the value serializer, sending an Avro GenericRecord.

Hortonworks Schema Registry is needed to run the example.

Info at: https://registry-project.readthedocs.io/en/latest/schema-registry.html#running-kafka-example

Avro schema car.avsc:

{
  "type": "record",
  "name": "Car",
  "namespace": "org.hifly.kafka.demo.producer.serializer.avro",
  "fields": [
    {
      "name": "model",
      "type": "string"
    },
    {
      "name": "brand",
      "type": "string"
    }
  ]
}

Start Hortonworks Schema Registry:

scripts/bootstrap-hortonworks-sr.sh

Produce messages:

cd kafka-producer

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.producer.serializer.avro.Runner" -Dexec.args="HORTONWORKS"

Teardown:

scripts/tear-down-hortonworks-sr.sh

Folder: confluent-avro-specific-record

Implementation of a producer and a consumer using Avro SpecificRecord for serializing and deserializing.

Confluent Schema Registry is needed to run the example.

scripts/bootstrap-cflt-schema-registry.sh

Create cars topic:

docker exec -it broker kafka-topics --bootstrap-server broker:9092 --create --topic cars --replication-factor 1 --partitions 1

Avro schema car_v1.avsc:

{"schema": "{\"type\": \"record\",\"name\": \"Car\",\"namespace\": \"org.hifly.kafka.demo.avro\",\"fields\": [{\"name\": \"model\",\"type\": \"string\"},{\"name\": \"brand\",\"type\": \"string\"}]}"}

Register the first version of the schema:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" \
  --data @confluent-avro-specific-record/src/main/resources/car_v1.avsc \
  http://localhost:8081/subjects/cars-value/versions

Run the consumer:

cd confluent-avro-specific-record

mvn clean compile package && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.RunnerConsumer"

Run the producer:

cd confluent-avro-specific-record

mvn clean compile package && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.RunnerProducer"

Teardown:

scripts/tear-down-cflt-schema-registry.sh

For documentation, see the official docs at: https://docs.confluent.io/platform/current/schema-registry/fundamentals/schema-evolution.html

Changes allowed with BACKWARD compatibility:

- Delete fields

- Add optional fields
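Under BACKWARD compatibility, consumers using the new schema must be able to read data written with the previous one. Schema Registry checks BACKWARD against the latest registered version only; BACKWARD_TRANSITIVE checks all previous versions.

Below is a deliberately simplified sketch of the rule for flat records, assuming fields are identified by name only and type changes are ignored:

- A field that exists only in the new schema needs a default value.
- Removing a field is always fine.

The `Field` record and `isBackwardCompatible` are hypothetical; Schema Registry performs the full Avro schema-resolution check.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Simplified BACKWARD check for flat records; real Avro resolution also covers types, aliases, unions, etc.
final class BackwardCheck {
    // Minimal field model: name plus whether the schema declares a default for it.
    record Field(String name, boolean hasDefault) {}

    // Can a reader using newFields decode data written with oldFields?
    static boolean isBackwardCompatible(List<Field> oldFields, List<Field> newFields) {
        Set<String> oldNames = oldFields.stream().map(Field::name).collect(Collectors.toSet());
        // Fields missing from the old data can only be filled in from a default value.
        // Fields that exist only in the old data are simply ignored by the reader.
        return newFields.stream()
                .filter(field -> !oldNames.contains(field.name()))
                .allMatch(Field::hasDefault);
    }

    public static void main(String[] args) {
        List<Field> base = List.of(new Field("model", false), new Field("brand", false));
        List<Field> withDefault = List.of(new Field("engine", true), new Field("model", false), new Field("brand", false));
        List<Field> required = List.of(new Field("engine", false), new Field("model", false), new Field("brand", false));
        System.out.println("add field with default: " + isBackwardCompatible(base, withDefault));
        System.out.println("add required field:     " + isBackwardCompatible(base, required));
    }
}
```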

Confluent Schema Registry is needed to run the example.

scripts/bootstrap-cflt-schema-registry.sh

Create cars topic:

docker exec -it broker kafka-topics --bootstrap-server broker:9092 --create --topic cars --replication-factor 1 --partitions 1

Avro schema car_v1.avsc:

{"schema": "{ \"type\": \"record\", \"name\": \"Car\", \"namespace\": \"org.hifly.kafka.demo.producer.serializer.avro\",\"fields\": [ {\"name\": \"model\",\"type\": \"string\"},{\"name\": \"brand\",\"type\": \"string\"}] }" }

Register the first version of the schema:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" \
--data @avro/car_v1.avsc \
http://localhost:8081/subjects/cars-value/versions

Set the compatibility to BACKWARD:

curl -X PUT -H "Content-Type: application/vnd.schemaregistry.v1+json" \
--data '{"compatibility": "BACKWARD"}' \
http://localhost:8081/config/cars-value

Verify the compatibility for the cars-value subject:

curl -X GET http://localhost:8081/config/cars-value

Run the producer:

cd confluent-avro-specific-record

mvn clean compile package && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.RunnerProducer"

Run the consumer (don’t stop it):

cd confluent-avro-specific-record

mvn clean compile package && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.RunnerConsumer"

View the latest schema for the cars-value subject:

curl -X GET http://localhost:8081/subjects/cars-value/versions/latest | jq .

Avro schema car_v2.avsc:

{"schema": "{ \"type\": \"record\", \"name\": \"Car\", \"namespace\": \"org.hifly.kafka.demo.producer.serializer.avro\",\"fields\": [ {\"name\": \"engine\",\"type\": \"string\", \"default\":\"diesel\"}, {\"name\": \"model\",\"type\": \"string\"},{\"name\": \"brand\",\"type\": \"string\"}] }" }

Register a new version of the schema, adding a field with a default value:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" \
--data @avro/car_v2.avsc \
http://localhost:8081/subjects/cars-value/versions

Produce data using the new schema:

sh produce-avro-records.sh

Verify that the consumer does not break and continues to process messages.

Avro schema car_v3.avsc:

{"schema": "{ \"type\": \"record\", \"name\": \"Car\", \"namespace\": \"org.hifly.kafka.demo.producer.serializer.avro\",\"fields\": [ {\"name\": \"engine\",\"type\": \"string\"}, {\"name\": \"model\",\"type\": \"string\"},{\"name\": \"brand\",\"type\": \"string\"}] }" }

Register a new version of the schema, adding a required field (no default value):

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" \
--data @avro/car_v3.avsc \
http://localhost:8081/subjects/cars-value/versions

You will get an error:

{"error_code":42201,"message":"Invalid schema

Teardown:

scripts/tear-down-cflt-schema-registry.sh

Folder: confluent-avro-multi-event

This example shows how to use Avro unions with schema references.

In this example, a topic named car-telemetry will be configured with the schema car-telemetry.avsc and will store different Avro messages:

- car-info messages, using the schema car-info.avsc

- car-telemetry messages, using the schema car-telemetry-data.avsc
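Producing several record types to one topic whose value schema is a union of references usually requires the Confluent Avro serializer to reuse the latest registered schema (the union) instead of registering each record type as the topic's value schema. The sketch below only builds client properties; whether RunnerProducer and RunnerConsumer use exactly these settings, the String key serializer and the group id are assumptions, and the class name is hypothetical. auto.register.schemas, use.latest.version and specific.avro.reader are Confluent serializer/deserializer settings.

```java
import java.util.Properties;

public class MultiEventClientConfigSketch {

    static final String SCHEMA_REGISTRY_URL = "http://localhost:8081";

    // Producer settings for a topic whose value schema is a union of references
    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer"); // assumed
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        props.put("schema.registry.url", SCHEMA_REGISTRY_URL);
        // Do not register CarInfo / CarTelemetryData as the topic value schema...
        props.put("auto.register.schemas", "false");
        // ...serialize against the latest registered version (the union) instead
        props.put("use.latest.version", "true");
        return props;
    }

    // Consumer settings that return the generated SpecificRecord classes
    static Properties consumerProps(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // assumed
        props.put("value.deserializer", "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        props.put("schema.registry.url", SCHEMA_REGISTRY_URL);
        // Deserialize into CarInfo / CarTelemetryData instead of GenericRecord
        props.put("specific.avro.reader", "true");
        return props;
    }

    public static void main(String[] args) {
        producerProps("localhost:9092").forEach((k, v) -> System.out.println("producer " + k + "=" + v));
        consumerProps("localhost:9092", "car-telemetry-group") // hypothetical group id
                .forEach((k, v) -> System.out.println("consumer " + k + "=" + v));
    }
}
```

With specific.avro.reader enabled, a consumer can branch on the concrete type (for example `value instanceof CarInfo`) to tell the two event kinds apart.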

Schema car-telemetry.avsc:

[

"org.hifly.kafka.demo.avro.references.CarInfo",

"org.hifly.kafka.demo.avro.references.CarTelemetryData"

]

Schema car-telemetry-data.avsc:

{

"type": "record",

"name": "CarTelemetryData",

"namespace": "org.hifly.kafka.demo.avro.references",

"fields": [

{

"name": "speed",

"type": "double"

},

{

"name": "latitude",

"type": "string"

},

{

"name": "longitude",

"type": "string"

}

]

}

Schema car-info.avsc:

{

"type": "record",

"name": "CarInfo",

"namespace": "org.hifly.kafka.demo.avro.references",

"fields": [

{

"name": "model",

"type": "string"

},

{

"name": "brand",

"type": "string"

}

]

}

Confluent Schema Registry is needed to run the example.

scripts/bootstrap-cflt-schema-registry.sh

Register the subjects using the Confluent Schema Registry Maven plugin:

cd confluent-avro-multi-event

mvn schema-registry:register

[INFO] --- kafka-schema-registry-maven-plugin:7.4.0:register (default-cli) @ confluent-avro-references ---

[INFO] Registered subject(car-info) with id 1 version 1

[INFO] Registered subject(car-telemetry-data) with id 2 version 1

[INFO] Registered subject(car-telemetry-value) with id 3 version 1

Verify the subjects:

curl -X GET http://localhost:8081/subjects

["car-info","car-telemetry-data","car-telemetry-value"]

Verify the resulting schema for the car-telemetry-value subject:

curl -X GET http://localhost:8081/subjects/car-telemetry-value/versions/1

{"subject":"car-telemetry-value","version":1,"id":3,"references":[{"name":"io.confluent.examples.avro.references.CarInfo","subject":"car-info","version":1},{"name":"io.confluent.examples.avro.references.CarTelemetryData","subject":"car-telemetry-data","version":1}],"schema":"[\"org.hifly.kafka.demo.avro.references.CarInfo\",\"org.hifly.kafka.demo.avro.references.CarTelemetryData\"]"}

Generate the Java POJOs from the Avro schemas:

cd confluent-avro-multi-event

mvn clean package

Run a consumer:

cd confluent-avro-multi-event

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.references.RunnerConsumer"

In a different shell, run a producer:

cd confluent-avro-multi-event

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.references.RunnerProducer"

Verify the records on the consumer:

Car Info event {"model": "Ferrari", "brand": "F40"} - offset-> 4

Car Telemetry event {"speed": 156.8, "latitude": "42.8", "longitude": "22.6"} - offset-> 5

Teardown:

scripts/tear-down-cflt-schema-registry.sh

Folder: confluent-avro-hierarchy-event

This example shows how to use Avro nested objects.

In this example, a topic named car-telemetry will be configured with the schema car-telemetry-data.avsc, which contains a nested reference to the schema defined in car.avsc.

{

"type": "record",

"name": "CarTelemetryData",

"namespace": "org.hifly.kafka.demo.avro.references",

"fields": [

{

"name": "speed",

"type": "double"

},

{

"name": "latitude",

"type": "string"

},

{

"name": "longitude",

"type": "string"

},

{

"name": "info",

"type": "org.hifly.kafka.demo.avro.references.CarInfo"

}

]

}

{

"type": "record",

"name": "CarInfo",

"namespace": "org.hifly.kafka.demo.avro.references",

"fields": [

{

"name": "model",

"type": "string"

},

{

"name": "brand",

"type": "string"

}

]

}

Confluent Schema Registry is needed to run the example.

scripts/bootstrap-cflt-schema-registry.sh

Register the subjects using the Confluent Schema Registry Maven plugin:

cd confluent-avro-hierarchy-event

mvn schema-registry:register

[INFO] --- kafka-schema-registry-maven-plugin:7.4.0:register (default-cli) @ confluent-avro-hierarchy-event ---

[INFO] Registered subject(car-info) with id 4 version 2

[INFO] Registered subject(car-telemetry-value) with id 5 version 3

Generate the Java POJOs from the Avro schemas:

cd confluent-avro-hierarchy-event

mvn clean package

Run a consumer:

cd confluent-avro-hierarchy-event

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.references.app.RunnerConsumer"

In a different shell, run a producer:

cd confluent-avro-hierarchy-event

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.avro.references.app.RunnerProducer"

Verify the records on the consumer:

Record:{"speed": 156.8, "latitude": "42.8", "longitude": "22.6", "info": {"model": "Ferrari", "brand": "F40"}}

Teardown:

scripts/tear-down-cflt-schema-registry.sh

Folder: kafka-unixcommand-connector

Implementation of a sample Kafka Connect source connector; it executes Unix commands (e.g. fortune, ls -ltr, netstat) and sends their output to a topic.

Important: Unix commands are executed on the Connect worker node.

This connector relies on Confluent Schema Registry to convert messages using an Avro converter: io.confluent.connect.avro.AvroConverter.

Connector source.quickstart.json:

{

"name" : "unixcommandsource",

"config": {

"connector.class" : "org.hifly.kafka.demo.connector.UnixCommandSourceConnector",

"command" : "fortune",

"topic": "unixcommands",

"poll.ms" : 5000,

"tasks.max": 1

}

}

Parameters for the source connector:

- command – Unix command to execute (e.g. ls -ltr, fortune)

- topic – output topic

- poll.ms – interval in milliseconds between two command executions
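As a rough illustration of what one poll cycle involves, the following hypothetical Java sketch runs a command through `sh -c` and captures its output, the way a source task could before wrapping it in a record for the configured topic. It is not the connector's actual code; the class and method names are made up, and it assumes a Unix-like worker with /bin/sh available.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.stream.Collectors;

public class UnixCommandSketch {

    // Runs a shell command and returns its combined stdout/stderr as one string
    static String runCommand(String command) throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder("sh", "-c", command);
        builder.redirectErrorStream(true); // merge stderr into stdout
        Process process = builder.start();
        String output;
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(process.getInputStream(), StandardCharsets.UTF_8))) {
            // Read everything before waiting, so a chatty command cannot block on a full pipe
            output = reader.lines().collect(Collectors.joining("\n"));
        }
        process.waitFor();
        return output;
    }

    public static void main(String[] args) throws Exception {
        // A real task would repeat this every poll.ms and emit the output as a SourceRecord
        System.out.println(runCommand("echo hello from the worker"));
    }
}
```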

Create the connector package:

cd kafka-unixcommand-connector

mvn clean package

Run the Docker container:

scripts/bootstrap-unixcommand-connector.sh

This will create an image based on confluentinc/cp-kafka-connect-base using a custom Dockerfile.

The image build uses the confluent-hub install command to install the plugin into Connect.

Deploy the connector:

curl -X POST -H Accept:application/json -H Content-Type:application/json http://localhost:8083/connectors/ -d @kafka-unixcommand-connector/config/source.quickstart.json

Teardown:

scripts/tear-down-unixcommand-connector.sh

Folder: kafka-smt-custom

Implementation of a custom Single Message Transformation (SMT); it builds the record key by concatenating the values of a list of JSON fields taken from the record value. The fields are configured with the SMT property fields.
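The key-building idea can be sketched in plain Java: concatenate the values of the configured fields, in order, taken from the record value. This hypothetical helper works on an already-parsed Map rather than on a Connect record carrying a JSON string, so it is not the KeyFromFields class itself; how the real SMT treats missing fields is not documented here, and the sketch simply skips them (an assumption).

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class KeyFromFieldsSketch {

    // Concatenates the configured field values in order.
    // Missing fields are skipped: an assumption of this sketch.
    static String buildKey(Map<String, Object> value, List<String> fields) {
        StringBuilder key = new StringBuilder();
        for (String field : fields) {
            Object fieldValue = value.get(field);
            if (fieldValue != null) {
                key.append(fieldValue);
            }
        }
        return key.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> value = new LinkedHashMap<>();
        value.put("FIELD1", "01");
        value.put("FIELD2", "20400");
        value.put("FIELD3", "001");
        value.put("FIELD4", "0006084655017");
        value.put("FIELD5", "20221117");
        value.put("FIELD6", 9000018);

        // Same list as "transforms.createKey.fields": "FIELD1,FIELD2,FIELD3"
        List<String> fields = List.of("FIELD1,FIELD2,FIELD3".split(","));
        System.out.println(buildKey(value, fields)); // 0120400001
    }
}
```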

Example:

Original record:

key: null

value: {"FIELD1": "01","FIELD2": "20400","FIELD3": "001","FIELD4": "0006084655017","FIELD5": "20221117","FIELD6": 9000018}

Result after the SMT:

"transforms.createKey.fields": "FIELD1,FIELD2,FIELD3"

key: 0120400001

value: {"FIELD1": "01","FIELD2": "20400","FIELD3": "001","FIELD4": "0006084655017","FIELD5": "20221117","FIELD6": 9000018}

The example applies the SMT to a MongoDB sink connector.

Run the example:

scripts/bootstrap-smt-connector.sh

A MongoDB sink connector will be created with this config:

{

"name": "mongo-sink",

"config": {

"connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",

"topics": "test",

"connection.uri": "mongodb://admin:password@mongo:27017",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"value.converter": "org.apache.kafka.connect.storage.StringConverter",

"key.converter.schemas.enable": false,

"value.converter.schemas.enable": false,

"database": "Tutorial2",

"collection": "pets",

"transforms": "createKey",

"transforms.createKey.type": "org.hifly.kafka.smt.KeyFromFields",

"transforms.createKey.fields": "FIELD1,FIELD2,FIELD3"

}

}

The original JSON messages will be sent to the test topic.

The sink connector will apply the SMT and store the records, keyed by the SMT-generated key, in the pets collection of the MongoDB Tutorial2 database.

Teardown:

scripts/tear-down-smt-connector.sh

Folder: kafka-smt-aspectj

Usage of a predefined SMT with a MongoDB sink connector.

The apply method of SMT classes in package org.apache.kafka.connect.transforms is intercepted by a Java AOP aspect implemented with the AspectJ framework.

The @Aspect, implemented in class org.hifly.kafka.smt.aspectj.SMTAspect, logs the input argument (a SinkRecord object) to the standard output.

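package org.hifly.kafka.smt.aspectj;

import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.aspectj.lang.annotation.Pointcut;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

@Aspect
public class SMTAspect {

    // Logger declaration assumed (SLF4J); the original snippet does not show it
    private static final Logger LOGGER = LoggerFactory.getLogger(SMTAspect.class);

    // Match apply(..) on classes in org.apache.kafka.connect.transforms,
    // excluding the runtime PredicatedTransformation wrapper
    @Pointcut("execution(* org.apache.kafka.connect.transforms.*.apply(..)) && !execution(* org.apache.kafka.connect.runtime.PredicatedTransformation.apply(..))")
    public void standardMethod() {}

    // Log every argument (the record passed to the SMT) before apply runs
    @Before("standardMethod()")
    public void log(JoinPoint jp) throws Throwable {
        Object[] array = jp.getArgs();
        if (array != null) {
            for (Object tmp : array)
                LOGGER.info(String.valueOf(tmp)); // String.valueOf avoids an NPE on null args
        }
    }
}

The Connect log will show the sink record entries: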

SinkRecord{kafkaOffset=0, timestampType=CreateTime} ConnectRecord{topic='test', kafkaPartition=2, key=null, keySchema=Schema{STRING}, value={"FIELD1": "01","FIELD2": "20400","FIELD3": "001","FIELD4": "0006084655017","FIELD5": "20221117","FIELD6": 9000018}, valueSchema=Schema{STRING}, timestamp=1683701851358, headers=ConnectHeaders(headers=)}

Run the example:

scripts/bootstrap-smt-aspectj.sh

Connect will start with the aspectjweaver Java agent:

-Dorg.aspectj.weaver.showWeaveInfo=true -Daj.weaving.verbose=true -javaagent:/usr/share/java/aspectjweaver-1.9.19.jar

The aspects are packaged as standard JARs and copied to the Kafka Connect classpath: /etc/kafka-connect/jars/kafka-smt-aspectj-1.2.1.jar

A MongoDB sink connector will be created with this config:

{

"name": "mongo-sink",

"config": {

"connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",

"topics": "test",

"connection.uri": "mongodb://admin:password@mongo:27017",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"value.converter": "org.apache.kafka.connect.storage.StringConverter",

"key.converter.schemas.enable": false,

"value.converter.schemas.enable": false,

"database": "Tutorial2",

"collection": "pets",

"transforms": "Filter",

"transforms.Filter.type": "org.apache.kafka.connect.transforms.Filter",

"transforms.Filter.predicate": "IsFoo",

"predicates": "IsFoo",

"predicates.IsFoo.type": "org.apache.kafka.connect.transforms.predicates.TopicNameMatches",

"predicates.IsFoo.pattern": "test"

}

}

The original JSON messages will be sent to the test topic.

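The sink connector will apply the Filter SMT, and the aspect will log each record passed to its apply method. Note that Filter drops the records that match its predicate: since IsFoo matches the test topic, these records are filtered out rather than stored in the pets collection of the MongoDB Tutorial2 database.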

Teardown:

scripts/tear-down-smt-aspectj.sh

In this example, a JDBC source connector and a MongoDB sink connector copy rows from a Postgres table to a MongoDB collection. Rows containing a JSON CLOB that cannot be parsed are sent to a DLQ topic.

Folder: postgres-to-mongo

MongoDB sink connector example configured to send bad messages to a DLQ topic named dlq-mongo-accounts.

The MongoDB sink connector is configured with an id strategy (FullKeyStrategy) that uses the record key to determine the _id value of each document.

The MongoDB sink connector is configured with a delete strategy (DeleteOneDefaultStrategy) that deletes the matching document when it receives a tombstone event.
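The _id values in the query results further down come from the key built by the source connector's SMT chain: ValueToKey copies the id field from the value into the key, ReplaceField$Key renames it to originalId, and FullKeyStrategy then uses the whole key as _id. The Java sketch below mimics the two key steps on plain Maps; it is a simplified illustration, not the Connect transforms themselves, and the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class KeyChainSketch {

    // Mimics ValueToKey: the new key holds only the listed fields of the value
    static Map<String, Object> valueToKey(Map<String, Object> value, List<String> fields) {
        Map<String, Object> key = new LinkedHashMap<>();
        for (String field : fields) {
            key.put(field, value.get(field));
        }
        return key;
    }

    // Mimics ReplaceField$Key with "renames" (oldName -> newName)
    static Map<String, Object> renameKeyFields(Map<String, Object> key, Map<String, String> renames) {
        Map<String, Object> renamed = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : key.entrySet()) {
            renamed.put(renames.getOrDefault(e.getKey(), e.getKey()), e.getValue());
        }
        return renamed;
    }

    public static void main(String[] args) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("id", "1");
        row.put("ssn", "AAAA");

        Map<String, Object> key = valueToKey(row, List.of("id"));  // {id=1}
        Map<String, Object> finalKey = renameKeyFields(key, Map.of("id", "originalId"));
        // With FullKeyStrategy the whole key becomes the document _id
        System.out.println("_id = " + finalKey); // _id = {originalId=1}
    }
}
```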

Run the example:

scripts/bootstrap-postgres-to-mongo.sh

A JDBC source connector will be created with this config:

{

"name": "jdbc-source-connector",

"config": {

"connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",

"tasks.max": "1",

"connection.url": "jdbc:postgresql://postgres:5432/postgres",

"connection.user": "postgres",

"connection.password": "postgres",

"table.whitelist": "accounts",

"mode": "incrementing",

"incrementing.column.name": "seq_id",

"topic.prefix": "jdbc_",

"poll.interval.ms": "5000",

"numeric.mapping": "best_fit",

"value.converter": "io.confluent.connect.avro.AvroConverter",

"key.converter": "org.apache.kafka.connect.json.JsonConverter",

"key.converter.schemas.enable": "false",

"value.converter.schema.registry.url": "http://schema-registry:8081",

"transforms": "createKey,nestKey",

"transforms.createKey.type": "org.apache.kafka.connect.transforms.ValueToKey",

"transforms.createKey.fields": "id",

"transforms.nestKey.type": "org.apache.kafka.connect.transforms.ReplaceField$Key",

"transforms.nestKey.renames": "id:originalId"

}

}

A MongoDB sink connector will be created with this config:

{

"name": "mongo-sink-dlq",

"config": {

"connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",

"errors.tolerance": "all",

"topics": "jdbc_accounts",

"errors.deadletterqueue.topic.name": "dlq-mongo-accounts",

"errors.deadletterqueue.topic.replication.factor": "1",

"errors.deadletterqueue.context.headers.enable": "true",

"connection.uri": "mongodb://admin:password@mongo:27017",

"database": "Employee",

"collection": "account",

"mongo.errors.log.enable":"true",

"delete.on.null.values": "true",

"document.id.strategy.overwrite.existing": "true",

"document.id.strategy": "com.mongodb.kafka.connect.sink.processor.id.strategy.FullKeyStrategy",

"delete.writemodel.strategy": "com.mongodb.kafka.connect.sink.writemodel.strategy.DeleteOneDefaultStrategy",

"publish.full.document.only": "true",

"value.converter": "io.confluent.connect.avro.AvroConverter",

"key.converter": "org.apache.kafka.connect.json.JsonConverter",

"key.converter.schemas.enable": "false",

"value.converter.schema.registry.url": "http://schema-registry:8081"

}

}

Validate the results by querying the documents in the account collection of the Employee database:

docker exec -it mongo mongosh "mongodb://admin:password@localhost:27017" --eval 'db.getSiblingDB("Employee").account.find()'

[

{ _id: { originalId: '1' }, id: '1', ssn: 'AAAA' },

{ _id: { originalId: '2' }, id: '2', ssn: 'BBBB' },

{ _id: { originalId: '3' }, id: '3', ssn: 'CCCC' },

{ _id: { originalId: '4' }, id: '4', ssn: 'DDDD' },

{ _id: { originalId: '5' }, id: '5', ssn: 'EEEE' }

]

Teardown:

scripts/tear-down-postgres-to-mongo.sh

Folder: kafka-connect-sink-http

Example usage of the HTTP Sink Connector.
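To see what the connector sends without the repository's web application, a throwaway endpoint can be written with the JDK's built-in com.sun.net.httpserver package. The sketch below is hypothetical and is not the application started by the bootstrap script: it accepts POST requests on /api/message and prints each body. Port 8010 and the path match the connector config; everything else is an assumption.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

public class MessageEndpointSketch {

    // Bodies received so far, kept so they can be inspected
    static final List<String> received = new CopyOnWriteArrayList<>();

    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/api/message", exchange -> {
            if (!"POST".equals(exchange.getRequestMethod())) {
                exchange.sendResponseHeaders(405, -1); // only POST is accepted, no body
                exchange.close();
                return;
            }
            try (InputStream in = exchange.getRequestBody()) {
                String body = new String(in.readAllBytes(), StandardCharsets.UTF_8);
                received.add(body);
                System.out.println("Received: " + body);
            }
            byte[] response = "OK".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, response.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(response);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        start(8010); // same port targeted by http.api.url
    }
}
```

A 2xx response lets the connector report the request on the success-responses topic configured below.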

Run the example:

scripts/bootstrap-connect-sink-http.sh

A web application exposing REST APIs will start up, listening on port 8010.

An HTTP sink connector will be created with this config:

{

"name": "SimpleHttpSink",

"config":

{

"topics": "topicA",

"tasks.max": "2",

"connector.class": "io.confluent.connect.http.HttpSinkConnector",

"http.api.url": "http://host.docker.internal:8010/api/message",

"value.converter": "org.apache.kafka.connect.storage.StringConverter",

"confluent.topic.bootstrap.servers": "broker:9092",

"confluent.topic.replication.factor": "1",

"reporter.bootstrap.servers": "broker:9092",

"reporter.result.topic.name": "success-responses",

"reporter.result.topic.replication.factor": "1",

"reporter.error.topic.name": "error-responses",

"reporter.error.topic.replication.factor": "1",

"consumer.override.max.poll.interval.ms": "5000"

}

}

Send JSON messages to the topicA topic:

docker exec -it broker kafka-console-producer --broker-list broker:9092 --topic topicA --property "parse.key=true" --property "key.separator=:"

> 1:{"FIELD1": "01","FIELD2": "20400","FIELD3": "001","FIELD4": "0006084655017","FIELD5": "20221117","FIELD6": 9000018}

The sink connector will send an HTTP POST request to the endpoint http://localhost:8010/api/message (reached from the Connect container as http://host.docker.internal:8010/api/message).

Teardown:

scripts/tear-down-connect-sink-http.sh

Folder: kafka-connect-sink-s3

Example usage of the S3 Sink Connector.

Run the example:

scripts/bootstrap-connect-sink-s3.sh

MinIO will start, listening on port 9000 (admin/minioadmin).

An S3 sink connector will be created with this config:

{

"name": "sink-s3",

"config":

{

"topics": "gaming-player-activity",

"tasks.max": "1",

"connector.class": "io.confluent.connect.s3.S3SinkConnector",

"store.url": "http://minio:9000",

"s3.region": "us-west-2",

"s3.bucket.name": "gaming-player-activity-bucket",

"s3.part.size": "5242880",

"flush.size": "100",

"storage.class": "io.confluent.connect.s3.storage.S3Storage",

"format.class": "io.confluent.connect.s3.format.avro.AvroFormat",

"schema.generator.class": "io.confluent.connect.storage.hive.schema.DefaultSchemaGenerator",

"partitioner.class": "io.confluent.connect.storage.partitioner.DefaultPartitioner",

"schema.compatibility": "NONE"

}

}

The sink connector will read messages from the gaming-player-activity topic and store them in the S3 bucket gaming-player-activity-bucket, using io.confluent.connect.s3.format.avro.AvroFormat as the format class.

The sink connector will write a new object to storage every 100 messages (flush.size).
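A quick way to reason about the expected object count: the S3 sink commits an object each time flush.size records have accumulated for a topic partition, so with a single partition 1000 records give 1000 / 100 = 10 objects, and a remainder below flush.size stays uncommitted until more data or a rotation setting triggers a flush. The hypothetical sketch below also lists the object keys you would typically see with DefaultPartitioner, assuming one partition, offsets starting at 0 without gaps, the default topics.dir (topics) and the default 10-digit zero-padded start offset; compare with what MinIO actually shows.

```java
import java.util.ArrayList;
import java.util.List;

public class S3SinkObjectSketch {

    // Objects committed for one partition: only full batches of flushSize are written
    static long committedObjects(long records, long flushSize) {
        return records / flushSize;
    }

    // Expected keys with DefaultPartitioner, assuming topics.dir=topics and
    // 10-digit zero-padded start offsets (assumed defaults of this sketch)
    static List<String> objectKeys(String topic, int partition, long records, long flushSize, String extension) {
        List<String> keys = new ArrayList<>();
        for (long i = 0; i < committedObjects(records, flushSize); i++) {
            long startOffset = i * flushSize;
            keys.add(String.format("topics/%s/partition=%d/%s+%d+%010d.%s",
                    topic, partition, topic, partition, startOffset, extension));
        }
        return keys;
    }

    public static void main(String[] args) {
        String topic = "gaming-player-activity";
        System.out.println(committedObjects(1000, 100)); // 10
        objectKeys(topic, 0, 1000, 100, "avro").forEach(System.out::println);
    }
}
```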

To generate random records for the gaming-player-activity topic, we will use the jr tool.

Send 1000 messages to gaming-player-activity topic using jr:

docker exec -it -w /home/jr/.jr jr jr template run gaming_player_activity -n 1000 -o kafka -t gaming-player-activity -s --serializer avro-genericVerify that 10 entries are stored in MinIO into gaming-player-activity-bucket bucket, connecting to MiniIO web console, http://localhost:9000 (admin/minioadmin):

Teardown:

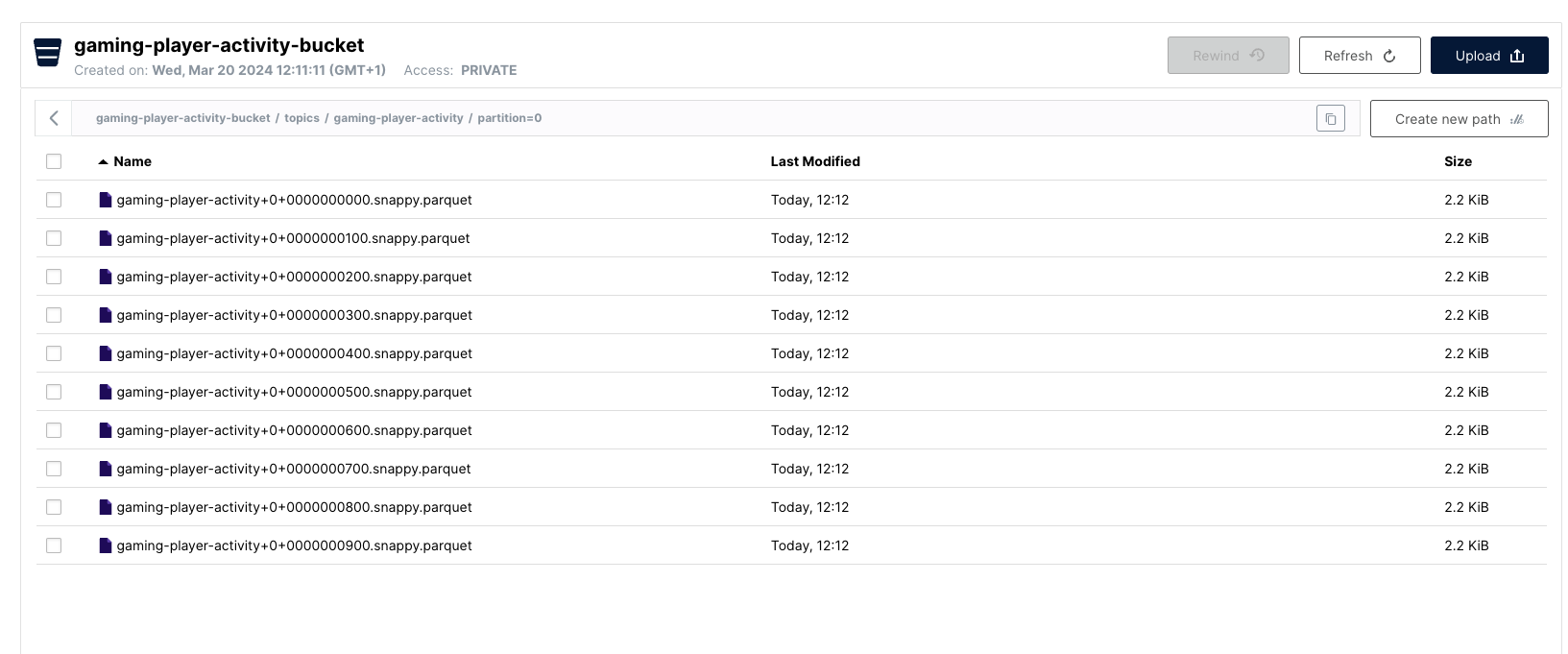

scripts/tear-down-connect-sink-s3.shSame example but Sink connector will read Avro messages from topic gaming-player-activity and store them in a S3 bucket gaming-player-activity-bucket using io.confluent.connect.s3.format.parquet.ParquetFormat as format class.

The format of data stored in MinIO will be Parquet.

Run the example:

scripts/bootstrap-connect-sink-s3-parquet.sh

An S3 sink connector will be created with this config:

{

"name": "sink-parquet-s3",

"config":

{

"topics": "gaming-player-activity",

"tasks.max": "1",

"connector.class": "io.confluent.connect.s3.S3SinkConnector",

"store.url": "http://minio:9000",

"s3.region": "us-west-2",

"s3.bucket.name": "gaming-player-activity-bucket",

"s3.part.size": "5242880",

"flush.size": "100",

"storage.class": "io.confluent.connect.s3.storage.S3Storage",

"partitioner.class": "io.confluent.connect.storage.partitioner.DefaultPartitioner",

"format.class": "io.confluent.connect.s3.format.parquet.ParquetFormat",

"parquet.codec": "snappy",

"schema.registry.url": "http://schema-registry:8081",

"value.converter": "io.confluent.connect.avro.AvroConverter",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"value.converter.schema.registry.url": "http://schema-registry:8081"

}

}

Send 1000 messages to the gaming-player-activity topic using jr:

docker exec -it -w /home/jr/.jr jr jr template run gaming_player_activity -n 1000 -o kafka -t gaming-player-activity -s --serializer avro-generic

Verify that 10 entries are stored in the gaming-player-activity-bucket bucket by connecting to the MinIO web console at http://localhost:9000 (admin/minioadmin).

Teardown:

scripts/tear-down-connect-sink-s3.sh

Folder: kafka-connect-source-sap-hana

Example usage of the SAP HANA Source Connector.

Run the example:

scripts/bootstrap-connect-source-sap-hana.sh

Insert rows into the LOCALDEV.TEST table:

docker exec -i hana /usr/sap/HXE/HDB90/exe/hdbsql -i 90 -d HXE -u LOCALDEV -p Localdev1 > /tmp/result.log 2>&1 <<-EOF
INSERT INTO TEST VALUES (111, 'foo', 100, 50);
INSERT INTO TEST VALUES (222, 'bar', 100, 50);
EOF

An SAP HANA source connector will be created with this config:

{

"name": "sap-hana-source",

"config":

{

"topics": "testtopic",

"tasks.max": "1",

"connector.class": "com.sap.kafka.connect.source.hana.HANASourceConnector",

"connection.url": "jdbc:sap://sap:39041/?databaseName=HXE&reconnect=true&statementCacheSize=512",

"connection.user": "LOCALDEV",

"connection.password" : "Localdev1",

"value.converter.schema.registry.url": "http://schema-registry:8081",

"auto.create": "true",

"testtopic.table.name": "\"LOCALDEV\".\"TEST\"",

"key.converter": "io.confluent.connect.avro.AvroConverter",

"key.converter.schema.registry.url": "http://schema-registry:8081",

"value.converter": "io.confluent.connect.avro.AvroConverter",

"value.converter.schema.registry.url": "http://schema-registry:8081"

}

}

The source connector will read rows from the LOCALDEV.TEST table and write them to the testtopic topic.

Teardown:

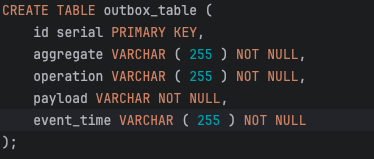

scripts/tear-down-connect-source-sap-hana.shIn this example, some SMT transformations (chained) are used to create an Event Router starting from an input outbox table.

The outbox table contains different operations for the same aggregate (Consumer Loan); each operation is sent to a specific topic according to these routing rules:

- operation: CREATE -> topic: loan

- operation: INSTALLMENT_PAYMENT -> topic: loan_PAYMENT

- operation: EARLY_LOAN_CLOSURE -> topic: loan
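To see how the transform chain in the connector config turns the operation column into a topic name, here is a small hypothetical Java sketch that applies the same regex/replacement pairs in order, assuming each step behaves like RegexRouter (the topic is rewritten only when the regex matches the whole topic name). The starting topic is the operation value that ExtractTopic$Value copies from the row. With only these four steps, EARLY_LOAN_CLOSURE ends up as loanEARLY_LOAN_CLOSURE, because neither removal regex matches it; compare with the topic list produced when running the example.

```java
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class OutboxRoutingSketch {

    // One RegexRouter-like step: regex plus replacement
    record Step(String regex, String replacement) {}

    // Same order as "transforms": addPrefix, removeString1, removeString2
    static final List<Step> STEPS = List.of(
            new Step(".*", "loan$0"),                  // addPrefix
            new Step("(.*)CREATE(.*)", "$1$2"),        // removeString1
            new Step("(.*)INSTALLMENT(.*)", "$1$2"));  // removeString2

    // ExtractTopic$Value first sets the topic to the "operation" value
    static String route(String operation) {
        String topic = operation;
        for (Step step : STEPS) {
            Matcher matcher = Pattern.compile(step.regex()).matcher(topic);
            // Like RegexRouter: rewrite only when the whole topic name matches
            if (matcher.matches()) {
                topic = matcher.replaceFirst(step.replacement());
            }
        }
        return topic;
    }

    public static void main(String[] args) {
        for (String op : List.of("CREATE", "INSTALLMENT_PAYMENT", "EARLY_LOAN_CLOSURE")) {
            System.out.println(op + " -> " + route(op));
        }
    }
}
```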

Records from the outbox table are fetched using a JDBC Source Connector.

Run the example:

scripts/bootstrap-connect-event-router.sh

Outbox table rows:

insert into outbox_table (id, aggregate, operation, payload, event_time) values (1, 'Consumer Loan', 'CREATE', '{\"event\": {\"type\":\"Mortgage Opening\",\"timestamp\":\"2023-11-20T10:00:00\",\"data\":{\"mortgageId\":\"ABC123\",\"customer\":\"John Doe\",\"amount\":200000,\"duration\": 20}}}','2023-11-20 10:00:00');

insert into outbox_table (id, aggregate, operation, payload, event_time) values (2, 'Consumer Loan', 'INSTALLMENT_PAYMENT', '{\"event\": {\"type\":\"Mortgage Opening\",\"timestamp\":\"2023-11-20T10:00:00\",\"data\":{\"mortgageId\":\"ABC123\",\"customer\":\"John Doe\",\"amount\":200000,\"duration\": 20}}}','2023-12-01 09:30:00');

insert into outbox_table (id, aggregate, operation, payload, event_time) values (3, 'Consumer Loan', 'EARLY_LOAN_CLOSURE', '{\"event\":{\"type\":\"Early Loan Closure\",\"timestamp\":\"2023-11-25T14:15:00\",\"data\":{\"mortgageId\":\"ABC12\",\"closureAmount\":150000,\"closureDate\":\"2023-11-25\",\"paymentMethod\":\"Bank Transfer\",\"transactionNumber\":\"PQR456\"}}}','2023-11-25 09:30:00');

A JDBC Source Connector will be created with this config:

{

"name" : "pgsql-sample-source",

"config": {

"connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",

"connection.url": "jdbc:postgresql://postgres:5432/postgres",

"connection.user": "postgres",

"connection.password": "postgres",

"topic.prefix": "",

"poll.interval.ms" : 3600000,

"table.whitelist" : "public.outbox_table",

"mode":"bulk",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"transforms":"valueToTopic,addPrefix,removeString1,removeString2",

"transforms.valueToTopic.type":"io.confluent.connect.transforms.ExtractTopic$Value",

"transforms.valueToTopic.field":"operation",

"transforms.addPrefix.type": "org.apache.kafka.connect.transforms.RegexRouter",

"transforms.addPrefix.regex": ".*",

"transforms.addPrefix.replacement": "loan$0",

"transforms.removeString1.type": "org.apache.kafka.connect.transforms.RegexRouter",

"transforms.removeString1.regex": "(.*)CREATE(.*)",

"transforms.removeString1.replacement": "$1$2",

"transforms.removeString2.type": "org.apache.kafka.connect.transforms.RegexRouter",

"transforms.removeString2.regex": "(.*)INSTALLMENT(.*)",

"transforms.removeString2.replacement": "$1$2",

"topic.creation.default.replication.factor": 1,

"topic.creation.default.partitions": 1

}

}

Verify the topic list:

docker exec -it broker kafka-topics --bootstrap-server broker:9092 --list

__consumer_offsets

_schemas

docker-connect-configs

docker-connect-offsets

docker-connect-status

loan

loan_PAYMENT

Inspect the log segment of the loan topic:

docker exec -it broker /bin/bash

[appuser@broker ~]$ cat /tmp/kraft-combined-logs/loan-0/00000000000000000000.log

�����Wz���Wz�����������������Consumer Loan

CREATE�{\"event\": {\"type\":\"Mortgage Opening\",\"timestamp\":\"2023-11-20T10:00:00\",\"data\":{\"mortgageId\":\"ABC123\",\"customer\":\"John Doe\",\"amount\":200000,\"duration\": 20}}}&2023-11-20 10:00:00k'�z<��Wz���Wz�����������������Consumer Loan$EARLY_LOAN_CLOSURE�{\"event\":{\"type\":\"Early Loan Closure\",\"timestamp\":\"2023-11-25T14:15:00\",\"data\":{\"mortgageId\":\"ABC12\",\"closureAmount\":150000,\"closureDate\":\"2023-11-25\",\"paymentMethod\":\"Bank Transfer\",\"transactionNumber\":\"PQR456\"}}}&2023-11-25 09:30:00

Inspect the log segment of the loan_PAYMENT topic:

docker exec -it broker /bin/bash

[appuser@broker ~]$ cat /tmp/kraft-combined-logs/loan_PAYMENT-0/00000000000000000000.log

,��A��Wz���Wz�����������������Consumer Loan&INSTALLMENT_PAYMENT�{\"event\": {\"type\":\"Mortgage Opening\",\"timestamp\":\"2023-11-20T10:00:00\",\"data\":{\"mortgageId\":\"ABC123\",\"customer\":\"John Doe\",\"amount\":200000,\"duration\": 20}}}&2023-12-01 09:30:00

Teardown:

scripts/tear-down-connect-event-router.sh

Folder: cdc-debezium-postgres

Usage of the Debezium Source Connector for PostgreSQL to send RDBMS table updates to a topic.

The debezium/debezium-connector-postgresql:1.7.1 connector has been installed into the Connect Docker image using Confluent Hub (see the docker-compose.yml file).

More details on the connector are available at: https://docs.confluent.io/debezium-connect-postgres-source/current/overview.html.

Run cluster:

scripts/bootstrap-cdc.sh

The connector uses the pgoutput plugin for replication. This plugin is built into PostgreSQL 10 and later, so nothing extra has to be installed on the server. The Debezium connector converts the raw replication event stream directly into change events.

Verify that the accounts table exists and contains data in PostgreSQL:

docker exec -it postgres psql -h localhost -p 5432 -U postgres -c 'select * from accounts;'

user_id | username | password | email | created_on | last_login

---------+----------+----------+--------------+----------------------------+----------------------------

1 | foo | bar | foo@bar.com | 2023-10-16 10:48:08.595034 | 2023-10-16 10:48:08.595034

2 | foo2 | bar2 | foo2@bar.com | 2023-10-16 10:48:08.596646 | 2023-10-16 10:48:08.596646

3 | foo3 | bar3 | foo3@bar.com | 2023-10-16 10:51:22.671384 | 2023-10-16 10:51:22.671384

4 | foo4 | bar4 | foo4@bar.com | 2024-02-28 12:12:08.665137 | 2024-02-28 12:12:08.665137

Deploy the connector:

curl -v -X POST -H 'Content-Type: application/json' -d @cdc-debezium-postgres/config/debezium-source-pgsql.json http://localhost:8083/connectors

Run a consumer on the postgres.public.accounts topic and see the records:

docker exec -it broker kafka-console-consumer --topic postgres.public.accounts --bootstrap-server broker:9092 --from-beginning --property print.key=true --property print.value=false

Insert a new record into the accounts table:

docker exec -it postgres psql -h localhost -p 5432 -U postgres -c "insert into accounts (user_id, username, password, email, created_on, last_login) values (3, 'foo3', 'bar3', 'foo3@bar.com', current_timestamp, current_timestamp);"

Verify that the consumer log shows 3 records:

Struct{user_id=1}

Struct{user_id=2}

Struct{user_id=3}

Teardown:

scripts/tear-down-cdc.sh

Folder: cdc-debezium-informix

Usage of the Debezium Source Connector for Informix to send RDBMS table updates to a topic.
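The records printed by the consumer further down follow the Debezium change-event envelope (before, after, source and an op field). The hypothetical helper below labels the op codes Debezium uses (c, u, d, r, and t for truncate) and decodes c_date, assuming that column is emitted as a Debezium date, i.e. the number of days since 1970-01-01. It does not parse the Avro records themselves.

```java
import java.time.LocalDate;

public class DebeziumEventSketch {

    // Maps the Debezium "op" field to a readable label
    static String describeOp(String op) {
        switch (op) {
            case "c": return "create";
            case "u": return "update";
            case "d": return "delete";
            case "r": return "read (snapshot)";
            case "t": return "truncate";
            default:  return "unknown: " + op;
        }
    }

    // Assumption: the date column is encoded as days since the Unix epoch
    static LocalDate decodeDate(int daysSinceEpoch) {
        return LocalDate.ofEpochDay(daysSinceEpoch);
    }

    public static void main(String[] args) {
        // Values from the first record of the snapshot ("op":"r", "c_date":19100)
        System.out.println(describeOp("r"));   // read (snapshot)
        System.out.println(decodeDate(19100)); // 2022-04-18
    }
}
```

All six records carry "op":"r", which is consistent with them coming from the initial snapshot rather than from live changes.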

Run environment:

scripts/bootstrap-cdc-informix.sh

Perform the following tasks to prepare the Change Data Capture API and create the tables in the iot database:

docker exec -it ifx /bin/bash

export DBDATE=Y4MD

dbaccess iot /opt/ibm/informix/etc/syscdcv1.sql

dbaccess iot /tmp/informix_ddl_sample.sql

exit

Deploy the connector:

curl -v -X POST -H 'Content-Type: application/json' -d @cdc-debezium-informix/config/debezium-source-informix.json http://localhost:8083/connectors

Run a consumer on the test.informix.cust_db topic and see the records (expect 6 records):

kafka-avro-console-consumer --bootstrap-server localhost:9092 --from-beginning --topic test.informix.cust_db --property schema.registry.url=http://localhost:8081

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\u0004W","c_status":{"string":"Z"},"c_date":{"int":19100}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"first"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939104},"ts_us":{"long":1713272939104761},"ts_ns":{"long":1713272939104761000},"transaction":null}

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\b®","c_status":{"string":"Z"},"c_date":{"int":18735}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"true"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939105},"ts_us":{"long":1713272939105769},"ts_ns":{"long":1713272939105769000},"transaction":null}

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\r\u0005","c_status":{"string":"Z"},"c_date":{"int":18370}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"true"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939105},"ts_us":{"long":1713272939105848},"ts_ns":{"long":1713272939105848000},"transaction":null}

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\u0011\\","c_status":{"string":"Z"},"c_date":{"int":18004}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"true"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939105},"ts_us":{"long":1713272939105931},"ts_ns":{"long":1713272939105931000},"transaction":null}

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\u0015³","c_status":{"string":"Z"},"c_date":{"int":17639}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"true"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939105},"ts_us":{"long":1713272939105984},"ts_ns":{"long":1713272939105984000},"transaction":null}

{"before":null,"after":{"test.informix.cust_db.Value":{"c_key":"\u001A\n","c_status":{"string":"Z"},"c_date":{"int":17274}}},"source":{"version":"2.6.1.Final","connector":"informix","name":"test","ts_ms":1713272938000,"snapshot":{"string":"last"},"db":"iot","sequence":null,"ts_us":1713272938000000,"ts_ns":1713272938000000000,"schema":"informix","table":"cust_db","commit_lsn":{"string":"21484679168"},"change_lsn":null,"txId":null,"begin_lsn":null},"op":"r","ts_ms":{"long":1713272939106},"ts_us":{"long":1713272939106252},"ts_ns":{"long":1713272939106252000},"transaction":null}

Teardown:

scripts/tear-down-cdc-informix.sh

Folder: cdc-debezium-mongo

This example uses the Debezium Source Connector for MongoDB to send document changes to Kafka topics. It also uses the Debezium MongoDB Event Router SMT to implement the Outbox pattern.
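The routing can be made concrete with a minimal plain-Java sketch. It shows how a single outbox document maps to a Kafka record under the connector settings used later in this example: the topic comes from topicName, the key from aggregateId, and the value from payload. The event id and the fields listed in additional.placement are copied into headers. This is an illustration under those assumptions, not Debezium's MongoEventRouter code. The class, record and method names are hypothetical, and every field value, including the payload, is kept as a plain string for simplicity. Because both the event id (aggregateId) and eventId are placed in a header named `id`, the record carries two `id` headers. The consumer output shown further down in this example also has two.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of outbox routing; NOT Debezium's MongoEventRouter implementation.
class OutboxRoutingSketch {

    // Simplified view of the Kafka record produced for one outbox document.
    record RoutedRecord(String topic, String key, String value,
                        List<Map.Entry<String, String>> headers) {}

    static RoutedRecord route(Map<String, String> doc) {
        List<Map.Entry<String, String>> headers = new ArrayList<>();
        // collection.field.event.id = aggregateId -> header "id"
        headers.add(Map.entry("id", doc.get("aggregateId")));
        // collection.fields.additional.placement, as {documentField, headerName} pairs
        String[][] placements = {
            {"aggregateType", "aggregateType"},
            {"eventDate", "eventTime"},
            {"eventType", "type"},
            {"eventId", "id"}
        };
        for (String[] p : placements) {
            headers.add(Map.entry(p[1], doc.get(p[0])));
        }
        return new RoutedRecord(
            doc.get("topicName"),   // route.by.field + ${routedByValue}
            doc.get("aggregateId"), // collection.field.event.key
            doc.get("payload"),     // collection.field.event.payload
            headers);
    }

    public static void main(String[] args) {
        // Same values as the sample document inserted by the bootstrap script.
        Map<String, String> doc = new LinkedHashMap<>();
        doc.put("aggregateId", "012313");
        doc.put("aggregateType", "Consumer Loan");
        doc.put("topicName", "CONSUMER_LOAN");
        doc.put("eventDate", "2024-08-20T09:42:02.665Z");
        doc.put("eventId", "1");
        doc.put("eventType", "INSTALLMENT_PAYMENT");
        doc.put("payload", "{\"amount\":\"200000\"}");

        RoutedRecord r = route(doc);
        System.out.println("topic=" + r.topic() + " key=" + r.key() + " value=" + r.value());
        r.headers().forEach(h -> System.out.println("header " + h.getKey() + ":" + h.getValue()));
    }
}
```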

Run environment:

scripts/bootstrap-cdc-mongo.sh

The script creates a user, data-platform-cdc, with the privileges required to run CDC:

use admin

db.createRole({
  role: "CDCRole",
  privileges: [
    { resource: { cluster: true }, actions: ["find", "changeStream"] },
    { resource: { db: "outbox", collection: "loans" }, actions: [ "find", "changeStream" ] }
  ],
  roles: []
});

db.createUser({
  user: "data-platform-cdc",
  pwd: "password",
  roles: [
    { role: "read", db: "admin" },
    { role: "clusterMonitor", db: "admin" },
    { role: "read", db: "config" },
    { role: "read", db: "outbox" },
    { role: "CDCRole", db: "admin"}
  ]
});

The script also inserts a document into the loans collection of the outbox database:

{
  "aggregateId": "012313",
  "aggregateType": "Consumer Loan",
  "topicName": "CONSUMER_LOAN",
  "eventDate": "2024-08-20T09:42:02.665Z",
  "eventId": 1,
  "eventType": "INSTALLMENT_PAYMENT",
  "payload": {
    "amount": "200000"
  }
}

Finally, the script deploys a source connector. The destination topic is taken from the topicName field of the document, and the message key is set from the aggregateId field:

{
  "name": "mongo-debezium-connector",
  "config": {
    "connector.class": "io.debezium.connector.mongodb.MongoDbConnector",
    "mongodb.connection.string": "mongodb://mongo:27017/?replicaSet=rs0",
    "topic.prefix": "test",
    "database.include.list" : "outbox",
    "collection.include.list" : "outbox.loans",
    "mongodb.user" : "data-platform-cdc",
    "mongodb.password" : "password",
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "true",
    "transforms": "outbox,unwrap",
    "transforms.outbox.type": "io.debezium.connector.mongodb.transforms.outbox.MongoEventRouter",
    "transforms.outbox.tracing.span.context.field": "propagation",
    "transforms.outbox.tracing.with.context.field.only": "false",
    "transforms.outbox.tracing.operation.name": "debezium-read",
    "transforms.outbox.collection.field.event.key": "aggregateId",
    "transforms.outbox.collection.field.event.id": "aggregateId",
    "transforms.outbox.collection.field.event.payload": "payload",
    "transforms.outbox.collection.expand.json.payload": "true",
    "transforms.outbox.collection.fields.additional.placement": "aggregateType:header:aggregateType,eventDate:header:eventTime,eventType:header:type,eventId:header:id",
    "transforms.outbox.route.by.field": "topicName",
    "transforms.outbox.route.topic.replacement": "${routedByValue}",
    "transforms.unwrap.type": "io.debezium.connector.mongodb.transforms.ExtractNewDocumentState",
    "transforms.unwrap.drop.tombstones": "false",
    "transforms.unwrap.operation.header": "false",
    "transforms.unwrap.delete.handling.mode": "drop",
    "transforms.unwrap.array.encoding": "array"
  }
}

Run a consumer on the CONSUMER_LOAN topic to see the records (headers, key and value):

docker exec -it broker kafka-console-consumer --bootstrap-server broker:9092 --topic CONSUMER_LOAN --from-beginning --property print.key=true --property print.headers=true

id:012313,aggregateType:Consumer Loan,eventTime:2024-08-20T09:42:02.665Z,type:INSTALLMENT_PAYMENT,id:1 012313 {"amount":"200000"}

Teardown:

scripts/tear-down-cdc-mongo.sh

Folder: kafka-connect-task-distribution

This example shows how tasks are automatically rebalanced across the running worker nodes.

A Connect cluster is created with 2 workers, connect and connect2. It runs a Datagen Source Connector with 4 tasks that continuously produce data.

After a few seconds, connect2 is stopped and all tasks are reassigned to the connect worker.
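The task placement shown in this example can be approximated by a simple round-robin assignment of task ids to the live workers. The sketch below is a deliberately simplified model under that assumption. It is not Kafka Connect's real assignor, which uses incremental cooperative rebalancing and also tries to minimize task movement. The class and method names are hypothetical. One caveat: with incremental cooperative rebalancing, the tasks of a worker that leaves the group may only be reassigned after `scheduled.rebalance.max.delay.ms` (5 minutes by default). Redistribution may therefore take longer than a few seconds unless that setting is lowered.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified round-robin model of task placement; NOT the real Kafka Connect assignor.
class TaskDistributionSketch {

    // Assigns task ids 0..taskCount-1 to the given workers in round-robin order.
    static Map<Integer, String> assign(List<String> workers, int taskCount) {
        if (workers.isEmpty()) {
            throw new IllegalArgumentException("at least one worker is required");
        }
        Map<Integer, String> assignment = new LinkedHashMap<>();
        for (int task = 0; task < taskCount; task++) {
            assignment.put(task, workers.get(task % workers.size()));
        }
        return assignment;
    }

    public static void main(String[] args) {
        List<String> workers = new ArrayList<>(List.of("connect:8083", "connect2:8083"));
        System.out.println("Before: " + assign(workers, 4));
        // prints: Before: {0=connect:8083, 1=connect2:8083, 2=connect:8083, 3=connect2:8083}

        workers.remove("connect2:8083"); // simulate stopping the connect2 worker
        System.out.println("After: " + assign(workers, 4));
        // prints: After: {0=connect:8083, 1=connect:8083, 2=connect:8083, 3=connect:8083}
    }
}
```

With two workers the model places tasks 0 and 2 on connect and tasks 1 and 3 on connect2, the same placement as the first status output. With one worker, every task lands on connect.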

Run the example:

scripts/bootstrap-connect-tasks.sh

You will first see the tasks distributed across the 2 running workers:

{"datagen-sample":{"status":{"name":"datagen-sample","connector":{"state":"RUNNING","worker_id":"connect:8083"},"tasks":[{"id":0,"state":"RUNNING","worker_id":"connect:8083"},{"id":1,"state":"RUNNING","worker_id":"connect2:8083"},{"id":2,"state":"RUNNING","worker_id":"connect:8083"},{"id":3,"state":"RUNNING","worker_id":"connect2:8083"}],"type":"source"}}}

After connect2 is stopped, all tasks are assigned to the connect worker:

{"datagen-sample":{"status":{"name":"datagen-sample","connector":{"state":"RUNNING","worker_id":"connect:8083"},"tasks":[{"id":0,"state":"RUNNING","worker_id":"connect:8083"},{"id":1,"state":"RUNNING","worker_id":"connect:8083"},{"id":2,"state":"RUNNING","worker_id":"connect:8083"},{"id":3,"state":"RUNNING","worker_id":"connect:8083"}],"type":"source"}}}

Teardown:

scripts/tear-down-connect-tasks.sh

Folder: kafka-streams

Implementation of a series of Apache Kafka® Streams topologies.

Execute tests:

cd kafka-streams

mvn clean test

Count the number of events grouped by key.
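Conceptually, the counter topology turns the input stream into a table of counts keyed by the record key. It emits an update each time a key's count changes. The plain-Java sketch below models only those semantics. It does not use the Kafka Streams API and is not the repository's StreamCounter class. The names are hypothetical, and the keys are written without the surrounding quotes that the console producer sample includes as part of the key. In a real topology, record caching may merge several updates for the same key before they reach counter-output-topic, so fewer intermediate counts can appear.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Models count-by-key semantics in plain Java; hypothetical names, not the repository's code.
class CountByKeySketch {

    record Update(String key, long count) {}

    // Emits one update per input record, like a KTable changelog with caching disabled.
    static List<Update> count(List<String> keys) {
        Map<String, Long> counts = new HashMap<>();
        List<Update> updates = new ArrayList<>();
        for (String key : keys) {
            long current = counts.merge(key, 1L, Long::sum);
            updates.add(new Update(key, current));
        }
        return updates;
    }

    public static void main(String[] args) {
        // Keys of the three sample messages (John, Mark, John).
        count(List.of("John", "Mark", "John"))
            .forEach(u -> System.out.println(u.key() + " : " + u.count()));
        // prints, one per line: John : 1, Mark : 1, John : 2
    }
}
```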

Create topics:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic counter-input-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic counter-output-topic --replication-factor 1 --partitions 2

Run the topology:

cd kafka-streams

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.streams.stream.StreamCounter"

Send messages to the counter-input-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker:9092 --topic counter-input-topic --property "parse.key=true" --property "key.separator=:"

"John":"transaction_1"

"Mark":"transaction_1"

"John":"transaction_2"

Read from the counter-output-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic counter-output-topic --bootstrap-server broker:9092 --from-beginning --property print.key=true --property key.separator=" : " --value-deserializer "org.apache.kafka.common.serialization.LongDeserializer"

Sum values grouped by key.
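The sum topology follows the same grouped-table pattern as the counter, but it adds each value to a running total per key instead of counting records. The plain-Java sketch below models those semantics for the sample input. It assumes the values have already been parsed as integers and ignores serialization. It is not the repository's StreamSum class, and the record and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Models sum-by-key semantics in plain Java; hypothetical names, not the repository's code.
class SumByKeySketch {

    record Event(String key, int value) {}

    record Update(String key, int sum) {}

    // Emits the new running total for a key each time a record for that key arrives.
    static List<Update> sum(List<Event> events) {
        Map<String, Integer> sums = new HashMap<>();
        List<Update> updates = new ArrayList<>();
        for (Event e : events) {
            int total = sums.merge(e.key(), e.value(), Integer::sum);
            updates.add(new Update(e.key(), total));
        }
        return updates;
    }

    public static void main(String[] args) {
        // Same keys and values as the sample messages for sum-input-topic.
        List<Event> input = List.of(
            new Event("John", 1),
            new Event("Mark", 2),
            new Event("John", 5));
        sum(input).forEach(u -> System.out.println(u.key() + " : " + u.sum()));
        // prints, one per line: John : 1, Mark : 2, John : 6
    }
}
```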

Create topics:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic sum-input-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic sum-output-topic --replication-factor 1 --partitions 2

Run the topology:

cd kafka-streams

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.streams.stream.StreamSum"

Send messages to the sum-input-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker:9092 --topic sum-input-topic --property "parse.key=true" --property "key.separator=:"

"John":1

"Mark":2

"John":5

Read from the sum-output-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic sum-output-topic --bootstrap-server broker:9092 --from-beginning --property print.key=true --property key.separator=" : " --value-deserializer "org.apache.kafka.common.serialization.IntegerDeserializer"

The stream filters car sensor records by speed. The speed limit is set to 150 km/h, and only events exceeding it are kept.

A KTable stores the car info data.

A left join between the KStream and the KTable produces a new aggregated object published to an output topic.
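The filter-then-left-join flow can be modelled in plain Java as follows. This sketch only illustrates the semantics described above. The record types, field names and the strict `speed > 150` check are assumptions based on the sample JSON and the stated limit, not the repository's CarSensorStream code. As in a KStream-KTable left join, each sensor event is joined with whatever car info the table holds for its key at processing time. When no car info has arrived yet, the joined car info is null. This is one reason to send the carinfo record before the sensor record.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Models a filter + stream-table left join in plain Java; hypothetical names, not the repository's code.
class CarSensorJoinSketch {

    static final int SPEED_LIMIT_KMH = 150;

    record CarInfo(String id, String brand, String model) {}

    record CarSensor(String id, int speed) {}

    // info is null when the table holds no entry for the key (left-join semantics).
    record CarSpeedAlert(CarSensor sensor, CarInfo info) {}

    // Table side: latest CarInfo per key, as a KTable would hold it.
    private final Map<String, CarInfo> carInfoTable = new HashMap<>();

    void onCarInfo(String key, CarInfo info) {
        carInfoTable.put(key, info);
    }

    // Stream side: keep only events over the limit, then look up the table by key.
    List<CarSpeedAlert> onSensor(String key, CarSensor sensor) {
        List<CarSpeedAlert> out = new ArrayList<>();
        if (sensor.speed() > SPEED_LIMIT_KMH) {
            out.add(new CarSpeedAlert(sensor, carInfoTable.get(key)));
        }
        return out;
    }

    public static void main(String[] args) {
        CarSensorJoinSketch app = new CarSensorJoinSketch();
        app.onCarInfo("1", new CarInfo("1", "Ferrari", "F40"));
        System.out.println(app.onSensor("1", new CarSensor("1", 350))); // joined with the Ferrari F40 info
        System.out.println(app.onSensor("1", new CarSensor("1", 120))); // under the limit: empty list
        System.out.println(app.onSensor("2", new CarSensor("2", 200))); // no car info for key 2: info=null
    }
}
```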

Create carinfo-topic, carsensor-topic and carsensor-output-topic topics:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic carinfo-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic carsensor-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic carsensor-output-topic --replication-factor 1 --partitions 2

Run the topology:

cd kafka-streams

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.streams.stream.CarSensorStream"

Send messages to carinfo-topic and carsensor-topic topics:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker:9092 --topic carinfo-topic --property "parse.key=true" --property "key.separator=:"

1:{"id":"1","brand":"Ferrari","model":"F40"}

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker:9092 --topic carsensor-topic --property "parse.key=true" --property "key.separator=:"

1:{"id":"1","speed":350}

Read from carsensor-output-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-consumer.sh --topic carsensor-output-topic --bootstrap-server broker:9092 --from-beginning --property print.key=true --property key.separator=" : "

The stream splits the original data into 2 different topics, one for Ferrari cars and one for all other car brands.
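The split can be sketched in plain Java as a routing function that picks the destination topic from the brand field. This models the behaviour only. In Kafka Streams the same idea would typically be expressed with `KStream#split()` and its branches, or with two `filter()` calls. The names here are hypothetical, and the exact, case-sensitive match on "Ferrari" is an assumption.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Models brand-based topic routing in plain Java; hypothetical names, not the repository's code.
class CarBrandSplitSketch {

    static final String FERRARI_TOPIC = "ferrari-input-topic";
    static final String OTHER_CARS_TOPIC = "cars-output-topic";

    record Car(String id, String brand, String model) {}

    // Ferrari cars go to one topic, every other brand to the other.
    static String destinationTopic(Car car) {
        return "Ferrari".equals(car.brand()) ? FERRARI_TOPIC : OTHER_CARS_TOPIC;
    }

    // Groups records by destination topic, preserving input order within each topic.
    static Map<String, List<Car>> split(List<Car> cars) {
        Map<String, List<Car>> byTopic = new LinkedHashMap<>();
        for (Car car : cars) {
            byTopic.computeIfAbsent(destinationTopic(car), t -> new ArrayList<>()).add(car);
        }
        return byTopic;
    }

    public static void main(String[] args) {
        // Same records as the sample messages for cars-input-topic.
        List<Car> input = List.of(
            new Car("1", "Ferrari", "F40"),
            new Car("2", "Bugatti", "Chiron"));
        split(input).forEach((topic, cars) -> System.out.println(topic + " -> " + cars));
    }
}
```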

Create cars-input-topic, ferrari-input-topic and cars-output-topic topics:

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic cars-input-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic ferrari-input-topic --replication-factor 1 --partitions 2

docker exec -it broker /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker:9092 --create --topic cars-output-topic --replication-factor 1 --partitions 2

Run the topology:

cd kafka-streams

mvn clean compile && mvn exec:java -Dexec.mainClass="org.hifly.kafka.demo.streams.stream.CarBrandStream"

Send messages to cars-input-topic topic:

docker exec -it broker /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker:9092 --topic cars-input-topic --property "parse.key=true" --property "key.separator=:"

1:{"id":"1","brand":"Ferrari","model":"F40"}

2:{"id":"2","brand":"Bugatti","model":"Chiron"}

Read from ferrari-input-topic and cars-output-topic topics: