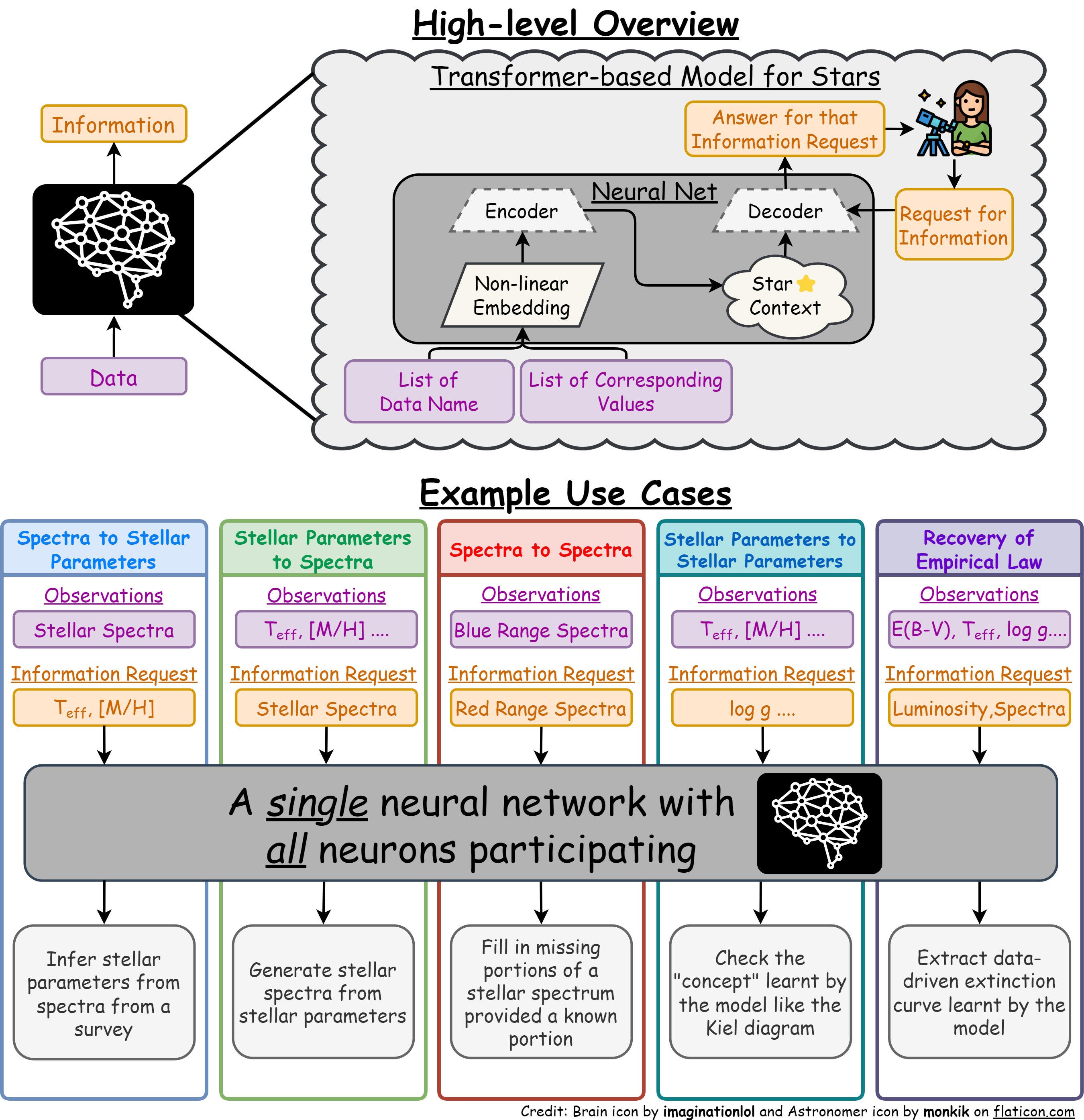

Rapid strides are currently being made in the field of 🤖artificial intelligence🧠 using Transformer-based models like Large Language Models (LLMs). The potential of these methods for creating a single, large, versatile model in astronomy has not yet been explored. In this work, we propose a framework for data-driven astronomy that uses the same core techniques and architecture as used by LLMs. Using a variety of observations and labels of stars as an example, we build a Transformer-based model and train it in a self-supervised manner with cross-survey data sets to perform a variety of inference tasks. In particular, we demonstrate that a single model can perform both discriminative and generative tasks even if the model was not trained or fine-tuned to do any specific task. For example, on the discriminative task of deriving stellar parameters from Gaia XP spectra, we achieve an accuracy of 47 K in T_eff, 0.11 dex in log(g), and 0.07 dex in [M/H], outperforming an expert XGBoost model in the same setting. But the same model can also generate XP spectra from stellar parameters, inpaint unobserved spectral regions, extract empirical stellar loci, and even determine the interstellar extinction curve. Our framework demonstrates that building and training a single foundation model without fine-tuning using data and parameters from multiple surveys to predict unmeasured observations and parameters is well within reach. Such 'Large Astronomy Models' trained on large quantities of observational data will play a large role in the analysis of current and future large surveys.

This repository makes all figures and results in this paper easy for anyone to reproduce🤗.

If GitHub has issues loading the Jupyter Notebooks (or is too slow), you can view them at http://nbviewer.jupyter.org/github/henrysky/astroNN_stars_foundation/tree/main/

This project uses astroNN and MyGaiaDB to manage APOGEE and Gaia data, respectively, and PyTorch as the deep learning framework. mwdust and extinction are used to calculate extinctions. gaiadr3_zeropoint and GaiaXPy>=2.1.0 are used for Gaia data reduction. XGBoost>=2.0.1 is used as a baseline machine learning method for comparison.
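This README states minimum versions only for GaiaXPy and XGBoost. A small standard-library sketch like the following can report whether those two are installed and recent enough. The helper names are ours (hypothetical, not part of this repository), and the simple parser deliberately ignores pre-release tags (so `2.1.0rc1` counts as `2.1.0`); `packaging.version` is the more rigorous choice if it is installed.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Minimum versions stated in this README (hypothetical helper, not part of this repository).
MIN_VERSIONS = {"GaiaXPy": "2.1.0", "xgboost": "2.0.1"}


def parse_release(ver):
    """Leading numeric release of a version string, e.g. '2.1.0rc1' -> (2, 1, 0).

    Pre-release, post-release and local tags are ignored on purpose.
    """
    match = re.match(r"\d+(?:\.\d+)*", ver)
    return tuple(int(part) for part in match.group(0).split(".")) if match else ()


def meets_minimum(installed, minimum):
    a, b = parse_release(installed), parse_release(minimum)
    width = max(len(a), len(b))
    # pad with zeros so that "2.1" compares equal to "2.1.0"
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_environment(min_versions=MIN_VERSIONS):
    report = {}
    for package, minimum in min_versions.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            report[package] = "missing"
            continue
        status = "ok" if meets_minimum(installed, minimum) else f"needs >={minimum}"
        report[package] = f"{installed} ({status})"
    return report


if __name__ == "__main__":
    for package, status in check_environment().items():
        print(f"{package}: {status}")
```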

Some notebooks require the trained model from Zhang et al. 2023 for comparison with our model. You can download it from here. Extract stellar_flux_model.tar.gz to the root directory of this repository and rename the extracted folder to zhanggreenrix2023_stellar_flux_model. Their model requires TensorFlow to run.
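The extract-and-rename step can be scripted. The sketch below assumes the archive unpacks to exactly one top-level folder (we have not verified its internal layout); `install_zhang_model` is a hypothetical helper, not part of this repository, and `archive` should point to wherever you saved the download.

```python
import shutil
import tarfile
import tempfile
from pathlib import Path


def install_zhang_model(archive="stellar_flux_model.tar.gz", repo_root=".",
                        target_name="zhanggreenrix2023_stellar_flux_model"):
    """Hypothetical helper: extract the downloaded archive and rename its folder.

    Assumes the archive contains exactly one top-level folder.
    """
    repo_root = Path(repo_root)
    target = repo_root / target_name
    if target.exists():
        raise FileExistsError(f"{target} already exists")
    # extract next to the target so the final move is a cheap rename
    with tempfile.TemporaryDirectory(dir=repo_root) as tmp:
        with tarfile.open(archive, "r:gz") as tar:
            if hasattr(tarfile, "data_filter"):
                tar.extractall(tmp, filter="data")  # safer extraction where supported
            else:
                tar.extractall(tmp)
        entries = list(Path(tmp).iterdir())
        if len(entries) != 1 or not entries[0].is_dir():
            names = [entry.name for entry in entries]
            raise ValueError(f"expected one top-level folder in {archive}, found {names}")
        shutil.move(str(entries[0]), str(target))
    return target
```

Run it from the repository root as `install_zhang_model("path/to/stellar_flux_model.tar.gz")`; it refuses to overwrite an existing zhanggreenrix2023_stellar_flux_model folder.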

Some notebooks require the Andrae et al. 2023 Gaia DR3 "vetted" RGB catalog, table_2_catwise.fits.gz. You can download it from here. Put the file in a folder named andae2023_catalog at the root directory of this repository.
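Before running the comparison notebooks, a quick check that both external dependencies described above are in place can save a confusing error later. This hypothetical helper (not part of this repository) only tests for the paths given in this README, with folder names copied verbatim; it does not validate file contents.

```python
from pathlib import Path

# Paths copied verbatim from this README, relative to the repository root.
# (Hypothetical helper, not part of this repository.)
EXTERNAL_DATA = {
    "Zhang et al. 2023 model": ("zhanggreenrix2023_stellar_flux_model", "dir"),
    "Andrae et al. 2023 catalog": ("andae2023_catalog/table_2_catwise.fits.gz", "file"),
}


def missing_external_data(repo_root="."):
    """Return a human-readable line for every external file or folder that is absent."""
    root = Path(repo_root)
    missing = []
    for label, (rel_path, kind) in EXTERNAL_DATA.items():
        path = root / rel_path
        present = path.is_dir() if kind == "dir" else path.is_file()
        if not present:
            missing.append(f"{label}: expected a {kind} at {path}")
    return missing
```

For example, `for line in missing_external_data(): print(line)` from the repository root prints nothing when everything is in place.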

- Dataset_Reduction.ipynb

  The notebook contains code to generate the datasets used in this paper. Terabytes of (mostly Gaia) data need to be downloaded to construct the datasets.
- Inference_Spec2Labels.ipynb

  The notebook contains code to run inference on tasks from stellar spectra to stellar parameters.
- Inference_Labels2Spec.ipynb

  The notebook contains code to run inference on tasks from stellar parameters to stellar spectra.
- Inference_Spec2Spec.ipynb

  The notebook contains code to run inference on tasks from stellar spectra to stellar spectra.
- Inference_Labels2Labels.ipynb

  The notebook contains code to run inference on tasks from stellar parameters to stellar parameters.
- Inference_ExternalComparison.ipynb

  The notebook contains code to run inference on tasks from stellar parameters to stellar parameters and compare the results with an external catalog.
- Task_TopKSearch.ipynb

  The notebook contains an example of how our model can act as a foundation model.

  Our trained model is fine-tuned with a contrastive objective to perform a star similarity search task.
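Contrastive objectives come in several forms, and this README does not say which one is used. As an illustration only, here is the symmetric InfoNCE loss popularised by CLIP, written in NumPy for a batch of paired embeddings (for example, one embedding computed from a star's spectrum and one from its parameters). The function names, the temperature value and the pairing of embeddings are assumptions; this is not the training code behind model_torch_search.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)


def log_softmax(logits, axis):
    # subtract the max first for numerical stability
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def symmetric_infonce(spec_emb, label_emb, temperature=0.07):
    """Illustrative CLIP-style loss (assumption, not the authors' objective).

    Row i of spec_emb and row i of label_emb describe the same star (positive pair);
    every other row in the batch acts as a negative.
    """
    a = l2_normalize(spec_emb)
    b = l2_normalize(label_emb)
    logits = a @ b.T / temperature  # (batch, batch) scaled cosine similarities
    idx = np.arange(len(a))
    # spectra -> parameters: each row should pick its own column
    loss_spec_to_label = -log_softmax(logits, axis=1)[idx, idx].mean()
    # parameters -> spectra: each column should pick its own row
    loss_label_to_spec = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_spec_to_label + loss_label_to_spec)
```

Minimising this pulls the two views of the same star together and pushes different stars apart, which is what makes a nearest-neighbour search between spectra and parameters meaningful afterwards.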

If you use this training script to train your own model, note that details of your system are saved automatically in the model folder as training_system_info.txt so that developers can debug should anything go wrong. Delete the file before sharing your model with others if you are concerned about privacy.
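The advice above amounts to leaving training_system_info.txt out of whatever you share. Deleting it with `Path(...).unlink(missing_ok=True)` is the simplest route; if you prefer to keep a local copy, a sketch like the following packs the model folder into a `.tar.gz` while skipping that file. `package_model` is a hypothetical helper, not part of training.py.

```python
import tarfile
from pathlib import Path

# File written by the training script that may contain details of your system.
PRIVATE_FILES = {"training_system_info.txt"}


def package_model(model_dir, archive_path):
    """Hypothetical helper: archive a model folder without the system-info file."""
    model_dir = Path(model_dir)

    def drop_private(info):
        # returning None tells tarfile to leave this member out of the archive
        return None if Path(info.name).name in PRIVATE_FILES else info

    with tarfile.open(str(archive_path), "w:gz") as tar:
        tar.add(str(model_dir), arcname=model_dir.name, filter=drop_private)
    return Path(archive_path)
```

The original folder is left untouched; only the archive omits the file.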

- training.py

Python script to train the model.

- model_torch is a trained PyTorch model

  The model has ~8.8 million parameters trained on ~16 million tokens from ~397k stars, with 118 unique "unit vector" tokens.
- model_torch_search is a trained PyTorch model

  The model is fine-tuned from the main model to perform a star similarity search task between spectra and parameters, as a demonstration of how our model can act as a foundation model.
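Once spectra and parameters are mapped into a shared embedding space, similarity search reduces to ranking cosine similarities. The sketch below shows that ranking step with NumPy on arrays you supply. How to obtain embeddings from model_torch_search is not covered in this README (Task_TopKSearch.ipynb shows the actual workflow), so `top_k_similar` and its inputs are hypothetical.

```python
import numpy as np


def top_k_similar(query_emb, candidate_emb, k=5):
    """Hypothetical helper: indices and cosine similarities of the k most similar candidates.

    query_emb: (n_query, dim) array, candidate_emb: (n_candidates, dim) array.
    Results are sorted from most to least similar for each query.
    """
    # astype makes copies, so the in-place normalisation does not touch the inputs
    q = np.atleast_2d(query_emb).astype(float)
    c = np.atleast_2d(candidate_emb).astype(float)
    q /= np.linalg.norm(q, axis=1, keepdims=True)
    c /= np.linalg.norm(c, axis=1, keepdims=True)
    sims = q @ c.T  # (n_query, n_candidates) cosine similarities
    k = min(k, sims.shape[1])
    # argpartition picks the k largest similarities without sorting everything ...
    top = np.argpartition(-sims, k - 1, axis=1)[:, :k]
    top_sims = np.take_along_axis(sims, top, axis=1)
    # ... then only those k are sorted in descending order
    order = np.argsort(-top_sims, axis=1)
    return np.take_along_axis(top, order, axis=1), np.take_along_axis(top_sims, order, axis=1)
```

Using `argpartition` keeps the cost roughly linear in the catalogue size per query, which matters when searching hundreds of thousands of stars.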

All of these graphics can be opened and edited with draw.io.

- model_overview.drawio

  Source for Figure 1 in the paper.
- model_specs.drawio

  Source for Figure 2 in the paper.
- model_foundation_showcase.drawio

  Source for Figure C1 in the paper.

Here are some examples of basic usage of the model in Python. For the code to work, you need to run it from the root directory of this repository.

Although our model has a context window of 64 tokens, you do not need to fill up the whole context window.
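To make the point concrete: the usage examples pass `perceive` a list of values plus a matching list of token names, and that list can be much shorter than 64. The hypothetical helper below (not part of stellarperceptron) builds those two arguments from per-star dictionaries and refuses contexts longer than the 64-token window. It does not check token names against the model's vocabulary, and because `perceive` takes one list of names for all rows in the examples, it requires every star to share the same keys.

```python
CONTEXT_WINDOW = 64  # context length of the model, as stated in this README


def build_context(stars):
    """Hypothetical helper: turn per-star {token_name: value} dicts into perceive() arguments.

    Returns (values, names) where values has one row per star, in the same order as names.
    Token names are not checked against the model's vocabulary.
    """
    if not stars:
        raise ValueError("give at least one star")
    names = list(stars[0])
    if not 0 < len(names) <= CONTEXT_WINDOW:
        raise ValueError(f"need 1 to {CONTEXT_WINDOW} tokens per star, got {len(names)}")
    for star in stars:
        # perceive() takes a single list of names, so every star must provide the same tokens
        if set(star) != set(names):
            raise ValueError("every star must provide the same token names")
    values = [[float(star[name]) for name in names] for star in stars]
    return values, names
```

For instance, `values, names = build_context([{"teff": 4700.0, "logg": 2.5}, {"teff": 5500.0, "logg": 4.2}])` followed by `nn_model.perceive(values, names)` reproduces the two-star context of the first example with only 2 of the 64 tokens used.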

```python
from stellarperceptron.model import StellarPerceptron

nn_model = StellarPerceptron.load("./model_torch/", device="cpu")

# give context of two stars
# [[star1 teff, star1 logg], [star2 teff, star2 logg]]
nn_model.perceive([[4700., 2.5], [5500., 4.2]], ["teff", "logg"])

# request information about them
print(nn_model.request(["teff"]))
```

To get stellar parameters from a star's Gaia XP spectrum:

```python
import numpy as np

from stellarperceptron.model import StellarPerceptron
from utils.gaia_utils import xp_spec_online

# Gaia DR3 source_id as integer
gdr3_source_id = 2130706307446806144

bprp_coeffs = xp_spec_online(gdr3_source_id, absolute_flux=False)

nn_model = StellarPerceptron.load("./model_torch/", device="cpu")

# give the context of a star: the first 32 BP and first 32 RP coefficients (64 tokens)
xp_coeffs = np.concatenate([bprp_coeffs["bp"][:32], bprp_coeffs["rp"][:32]])
xp_names = [*[f"bp{i}" for i in range(32)], *[f"rp{i}" for i in range(32)]]
nn_model.perceive(xp_coeffs, xp_names)

# request information such as teff, logg, m_h
print(nn_model.request(["teff", "logg", "m_h"]))
```

To generate an XP spectrum from stellar parameters and plot it:

```python
import matplotlib.pyplot as plt

from stellarperceptron.model import StellarPerceptron
from utils.gaia_utils import nn_xp_coeffs_phys, xp_sampling_grid

nn_model = StellarPerceptron.load("./model_torch/", device="cpu")

# generate a spectrum from stellar parameters
# absolute_flux=True gives flux at 10 parsec; absolute_flux=False gives flux normalized by the overall G-band flux
# other keywords are optional; you can specify any quantity that is in the model's vocabulary
# logebv=-7 corresponds to a negligible E(B-V)
spectrum = nn_xp_coeffs_phys(nn_model, absolute_flux=True, teff=4700., logg=2.5, m_h=0.0, logebv=-7)

plt.plot(xp_sampling_grid, spectrum)
plt.xlabel("Wavelength (nm)")
# raw string so that the backslashes in the mathtext label are not treated as escape sequences
plt.ylabel(r"Flux at 10 pc ($\mathrm{W} \, \mathrm{nm}^{-1} \, \mathrm{m}^{-2}$)")
plt.xlim(392, 992)
plt.show()
```

- Henry Leung - henrysky

Department of Astronomy and Astrophysics, University of Toronto

Contact Henry: henrysky.leung [at] utoronto.ca

- Jo Bovy - jobovy

Department of Astronomy and Astrophysics, University of Toronto

Contact Jo: bovy [at] astro.utoronto.ca

This project is licensed under the MIT License - see the LICENSE file for details