The primary use of this repo is to store my attempts at deep reinforcement-learning algorithms, and benchmark them against the implementations in OpenAI's spinup repository. This is my first attempt at deep reinforcement learning, hence the name.

Running this

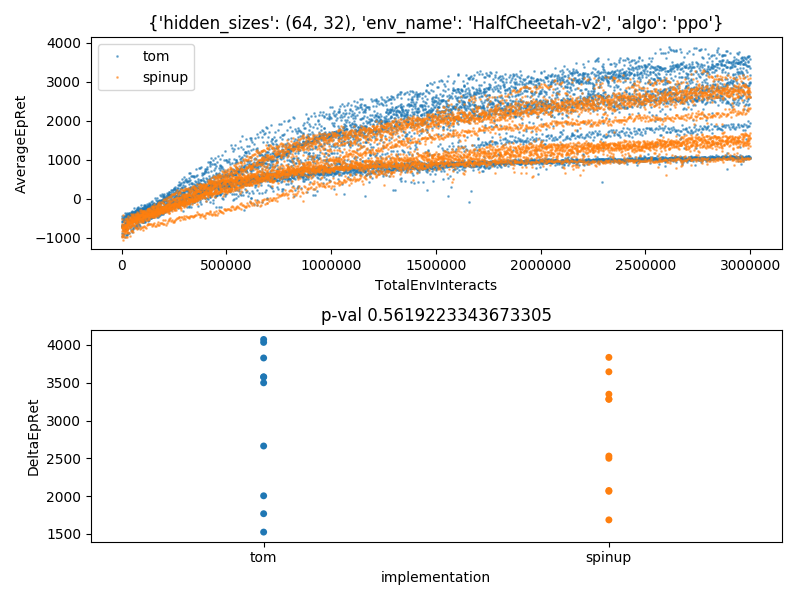

$ deeprl -hid "(64,32)" -n 10 -env 'HalfCheetah-v2' -a ppo --epochs 750 benchmarkand waiting produces this

With the above line, we have run my PPO implementation against OpenAI's for 10 different random seeds. The top plot shows the history of the episode return. The bottom shows the change in the episode return over training for each implementation and each seed. To get a rough idea if the change in the return is meaningfully different between two implementations, I compare the distributions in the bottom plot with student's t-test to calculate the p-value shown.

I used click to make this nifty command-line-tool, which automatically gives you --help flags

$ deeprl --help

Usage: deeprl [OPTIONS] COMMAND [ARGS]...

Main entry point

Options:

-exp, --exp_name TEXT Prefix added to experiment name

-n, --num_runs INTEGER Number of different random seeds to run

[default: 3]

--epochs INTEGER Number of epochs [default: 50]

-steps, --steps_per_epoch INTEGER

Number of steps per epoch [default: 4000]

-env, --env_name TEXT Environment name [default: Swimmer-v2]

-hid, --hidden_sizes TEXT Hidden sizes for actor and critic MLPs

[default: (64,64)]

--activation TEXT Activation to use in actor-critic MLPs

[default: tanh]

-a, --algo [vpg|trpo|ppo|ddpg|td3|sac]

Algorithm (ie agent) to use [default: vpg]

--help Show this message and exit.

Commands:

benchmark Benchmark tom's implementation against spinup and plot

plot plot Logging Results

run Run experiment and plot Episode ReturnIf we then want to know how to use "plot", we can get help on that as well

$ deeprl plot --help

Usage: deeprl plot [OPTIONS]

plot Logging Results

Options:

-imp, --implementation [tom|spinup]

Which implementation to run, spinup's or

Tom's

-v, --value TEXT Value to plot [default: AverageEpRet]

--help Show this message and exit.git clone git@github.com:henighan/deeprl-intro.git

cd deeprl-intro

make install

deeprl --helpmake testIf you want to use the mujoco environments, you will also need to follow the instructions here.

Thus far I have only implemented VPG and PPO for gaussian and categorical policies, (I'm still hunting down a bug in the DDPG agent). I stole spinup's logger, so I could easily plot and compare their and my results side-by-side.