Please consider citing our paper in your publications if the project helps your research.

    @inproceedings{vision-language-transformer,
      title={Vision-Language Transformer and Query Generation for Referring Segmentation},
      author={Ding, Henghui and Liu, Chang and Wang, Suchen and Jiang, Xudong},
      booktitle={Proceedings of the IEEE International Conference on Computer Vision},
      year={2021}
    }

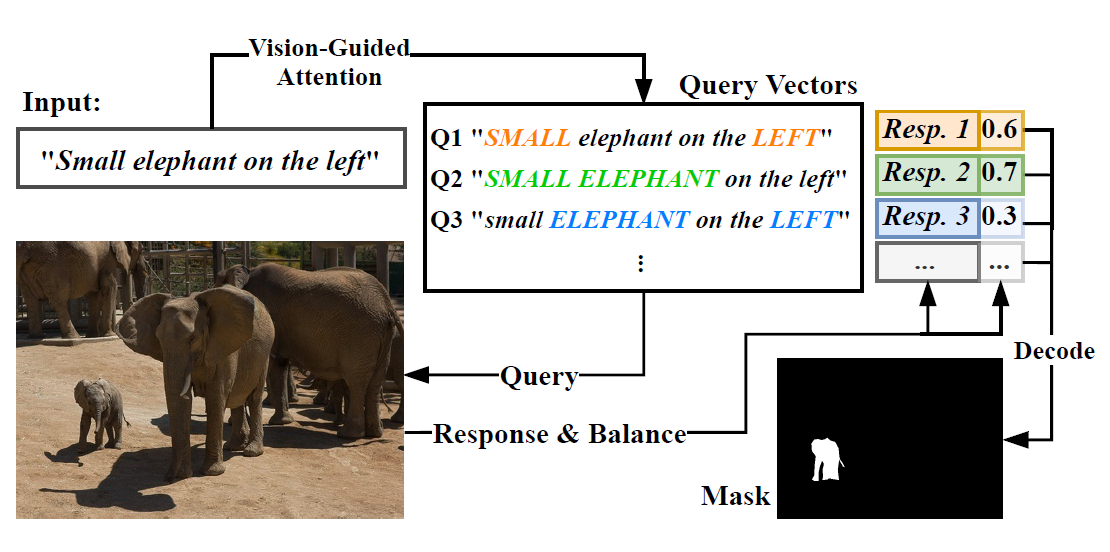

Vision-Language Transformer (VLT) is a framework for the referring segmentation task. Our method produces multiple query vectors for one input language expression and uses each of them to "query" the input image, generating a set of responses. The network then selectively aggregates these responses, emphasizing the queries that provide a better comprehension of the expression.
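The query-and-aggregate idea described above can be illustrated with a small, dependency-free sketch. This is not the VLT implementation: the dot-product attention, the toy 2-D features, and the hand-written `confidences` values are all assumptions made for illustration (in the real network the query vectors and their weights are predicted by learned modules).

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def query_image(query, pixel_features):
    """Attend over the pixel features with one query and return its response vector."""
    weights = softmax([dot(query, feat) for feat in pixel_features])
    dim = len(pixel_features[0])
    return [sum(w * feat[i] for w, feat in zip(weights, pixel_features))
            for i in range(dim)]


def aggregate(responses, confidences):
    """Fuse per-query responses; queries with higher confidence contribute more."""
    weights = softmax(confidences)
    dim = len(responses[0])
    return [sum(w * resp[i] for w, resp in zip(weights, responses))
            for i in range(dim)]


# Toy inputs (hypothetical): three queries for one expression, four image locations.
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pixel_features = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0], [0.0, 0.0]]
confidences = [0.5, 0.1, 2.0]  # assumed values; a network would predict these

responses = [query_image(q, pixel_features) for q in queries]
fused = aggregate(responses, confidences)
print(fused)
```

Each query yields its own response, and the final result is a convex combination of those responses, so a query that "understands" the expression poorly can be down-weighted rather than discarded.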

Environment:

- Python 3.6
- TensorFlow 1.15
- Other dependencies in `requirements.txt`
- SpaCy model for embedding: `python -m spacy download en_vectors_web_lg`
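Before installing the remaining dependencies, it may help to confirm that the interpreter and TensorFlow versions match the ones listed above. The following is a minimal sketch, not part of the repository; the helper names `version_tuple`, `matches`, and `check_environment` are hypothetical, and the check assumes the SpaCy model is installed as an importable package named `en_vectors_web_lg`.

```python
import importlib.util
import re
import sys


def version_tuple(version):
    """'1.15.0rc1' -> (1, 15, 0): keep at most the first three numeric components."""
    return tuple(int(part) for part in re.findall(r"\d+", version)[:3])


def matches(installed, required):
    """True if `installed` starts with the components of `required` (e.g. '1.15')."""
    wanted = version_tuple(required)
    return version_tuple(installed)[:len(wanted)] == wanted


def check_environment():
    """Return a list of problems; an empty list means the versions look right."""
    problems = []
    python_version = "%d.%d.%d" % sys.version_info[:3]
    if not matches(python_version, "3.6"):
        problems.append(f"Python 3.6 expected, found {python_version}")
    try:
        import tensorflow as tf
    except ImportError:
        problems.append("tensorflow is not installed")
    else:
        if not matches(tf.__version__, "1.15"):
            problems.append(f"tensorflow 1.15 expected, found {tf.__version__}")
    # Assumption: the SpaCy vectors are installed as a package of the same name.
    if importlib.util.find_spec("en_vectors_web_lg") is None:
        problems.append("SpaCy model en_vectors_web_lg is not installed")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Other Python or TensorFlow versions may also work; the check only reports deviations from the versions stated here.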

Dataset preparation:

- Put the folder of the COCO training set ("train2014") under `data/images/`.
- Download the RefCOCO dataset from here and extract it to `data/`. Then run the data preparation script under `data/`:

      cd data
      python data_process_v2.py --data_root . --output_dir data_v2 --dataset [refcoco/refcoco+/refcocog] --split [unc/umd/google] --generate_mask
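Not every `--split` value applies to every `--dataset`. The sketch below builds one preparation command per dataset/split pair using only the flags shown above. The pairing table reflects how the RefCOCO family is commonly distributed (an assumption here, since this README does not list the valid pairs), and `build_command` is a hypothetical helper, not part of the repository.

```python
# Assumed dataset/split pairs; verify against the annotations you downloaded.
SPLITS = {
    "refcoco": ["unc", "google"],
    "refcoco+": ["unc"],
    "refcocog": ["umd", "google"],
}


def build_command(dataset, split, data_root=".", output_dir="data_v2"):
    """Return the argv list for data_process_v2.py, rejecting unknown pairs."""
    if dataset not in SPLITS:
        raise ValueError(f"unknown dataset: {dataset}")
    if split not in SPLITS[dataset]:
        raise ValueError(f"split {split!r} is not listed for {dataset}")
    return [
        "python", "data_process_v2.py",
        "--data_root", data_root,
        "--output_dir", output_dir,
        "--dataset", dataset,
        "--split", split,
        "--generate_mask",
    ]


if __name__ == "__main__":
    # Print the commands; run them from inside the data/ directory.
    for dataset, splits in SPLITS.items():
        for split in splits:
            print(" ".join(build_command(dataset, split)))
```

Printing the commands rather than executing them keeps the sketch side-effect free; each printed line can be pasted into a shell after `cd data`.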

Evaluation:

- Download pretrained models & config files from here.
- In the config file, set:
  - `evaluate_model`: path to the pretrained weights
  - `evaluate_set`: path to the dataset for evaluation
- Run `python vlt.py test [PATH_TO_CONFIG_FILE]`
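A quick pre-flight check can catch an unset `evaluate_model` or `evaluate_set` before launching evaluation. The sketch below assumes the config file has already been loaded into a plain `dict` by whatever loader matches its format; `missing_settings` and `evaluation_command` are hypothetical helpers, and the path-existence check is an assumption that may not suit weight paths given as checkpoint prefixes.

```python
import os

REQUIRED_KEYS = ("evaluate_model", "evaluate_set")


def missing_settings(config, exists=os.path.exists):
    """Return a list of problems with the evaluation settings in `config`."""
    problems = []
    for key in REQUIRED_KEYS:
        value = config.get(key)
        if not value:
            problems.append(f"{key} is not set")
        elif not exists(value):
            # Assumption: the value is a path that should already exist on disk.
            problems.append(f"{key} points to a missing path: {value}")
    return problems


def evaluation_command(config_path):
    """Return the argv list for the evaluation run described above."""
    return ["python", "vlt.py", "test", config_path]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    example = {"evaluate_model": "", "evaluate_set": "data/data_v2"}
    for problem in missing_settings(example):
        print("config problem:", problem)
```

Passing `exists` as a parameter makes the check easy to relax or replace, for instance with a function that always returns `True` when the weights are referenced by prefix.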

Training:

- Pretrained Backbones: We use the backbone weights provided by MCN.

  Note: we use the backbone that excludes all images that appear in the val/test splits of RefCOCO, RefCOCO+ and RefCOCOg.
- Specify hyperparameters, dataset path and pretrained weight path in the configuration file. Please refer to the examples under `/config`, or the config file of our pretrained models.
- Run `python vlt.py train [PATH_TO_CONFIG_FILE]`

We borrowed a lot of code from MCN, keras-transformer, RefCOCO API and keras-yolo3. Thanks for their excellent work!