TL;DR: This is the repo for "Building Math Agents with Multi-Turn Iterative Preference Learning".

We consider math problem solving with a Python interpreter: the model can write Python code, ask the external environment to execute it, and receive the execution result before making its next decision.
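The interaction described above can be sketched as a simple loop. This is a minimal illustration, not the repo's implementation: `toy_policy` is a hypothetical stand-in for the LLM, the `<python>...</python>` delimiters are an assumed convention for marking code, and `run_python` uses a bare `exec` with no sandboxing.

```python
import contextlib
import io
import re

# Assumed convention: the model wraps code in <python>...</python> tags.
CODE_BLOCK = re.compile(r"<python>\n(.*?)</python>", re.DOTALL)


def run_python(code: str) -> str:
    """Execute code and return its captured stdout, or the error message."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # illustration only: no sandboxing or timeout
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue().strip()


def toy_policy(history):
    """Hypothetical stand-in for the LLM: write code first, then answer."""
    if not any(turn["role"] == "tool" for turn in history):
        return "Let me compute.\n<python>\nprint(sum(range(1, 101)))\n</python>"
    observation = history[-1]["content"]
    return f"The answer is {observation}."


def solve(question, policy, max_turns=4):
    """Alternate between model turns and interpreter feedback."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = policy(history)
        history.append({"role": "assistant", "content": reply})
        match = CODE_BLOCK.search(reply)
        if match is None:
            break  # no code to run: treat the reply as the final answer
        # Feed the execution result back before the next model decision.
        history.append({"role": "tool", "content": run_python(match.group(1))})
    return history


if __name__ == "__main__":
    for turn in solve("What is 1 + 2 + ... + 100?", toy_policy):
        print(f"[{turn['role']}] {turn['content']}")
```

Each trajectory therefore interleaves model turns with interpreter observations, and the model sees the execution result before deciding what to do next.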

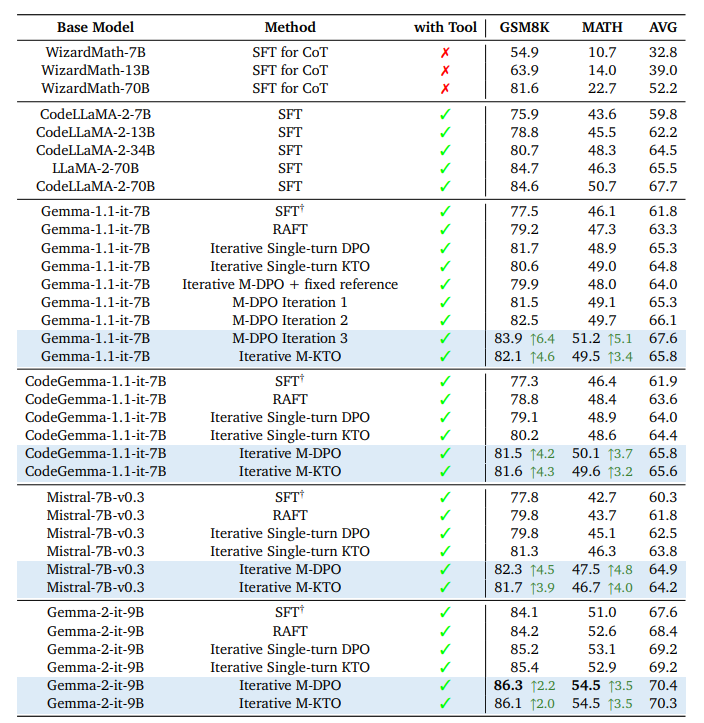

Figure 1: Main evaluation results on the MATH and GSM8K datasets.

The main pipeline is divided into three steps:

- SFT: to train the SFT model.

- Inference: to generate new data and evaluate the model.

- Multi-turn Alignment Algorithms: to conduct the multi-turn DPO/KTO training.

It is recommended to have three separate environments for sft, inference, and alignment_train. Please refer to the corresponding part of this project for detailed installation instructions.

- SFT Dataset: RLHF4MATH/SFT_510K, which is a subset of nvidia/OpenMathInstruct-1

- Prompt: RLHF4MATH/prompt_iter1, RLHF4MATH/prompt_iter2, RLHF4MATH/prompt_iter3

- SFT Model: RLHF4MATH/Gemma-7B-it-SFT3epoch

- Aligned Model: RLHF4MATH/Gemma-7B-it-M-DPO

The authors would like to thank the great open-source communities, including the Hugging Face TRL team, the Axolotl team, and the ToRA project, for sharing their models and code.

If you find the content of this repo useful, please consider citing it as follows:

@article{xiong2024building,

title={Building Math Agents with Multi-Turn Iterative Preference Learning},

author={Xiong, Wei and Shi, Chengshuai and Shen, Jiaming and Rosenberg, Aviv and Qin, Zhen and Calandriello, Daniele and Khalman, Misha and Joshi, Rishabh and Piot, Bilal and Saleh, Mohammad and others},

journal={arXiv preprint arXiv:2409.02392},

year={2024}

}