Tests of L4S

Pete Heist

Jonathan Morton

- Introduction

- Key Findings

- Elaboration on Key Findings

- Unsafety in Shared RFC3168 Queues

- Tunneled Non-L4S Flows Not Protected by FQ

- Network Bias

- RTT Unfairness

- Between-Flow Induced Delay

- Underutilization with Bursty Traffic

- Intra-Flow Latency Spikes from Underreaction to RFC3168 CE

- Underutilization with Bursty Links

- Dropped Packets for Tunnels with Replay Protection Enabled

- Risk Assessment

- Full Results

- Appendix

The Transport Area Working Group (TSVWG) is working on L4S, which proposes to use the available ECT(1) codepoint for two purposes:

- to redefine the existing CE codepoint as a high-fidelity congestion control signal, which is incompatible with the present definition of CE in RFC3168 and RFC8511

- as a PHB (per-hop behavior) to select alternate treatment in bottlenecks

These tests evaluate some aspects of L4S's safety and performance.

Readers wanting a quick background in high-fidelity congestion control may wish to read the Background section, while those already familiar with the topic can proceed to the Key Findings.

- Unsafety in Shared RFC3168 Queues: L4S flows dominate non-L4S flows, whether ECN enabled or not, when they occupy a shared RFC3168 signaling queue.

- Tunneled Non-L4S Flows Not Protected by FQ: Tunnels are a path to the result in Key Finding #1 at FQ bottlenecks like fq_codel.

- The DualPI2 qdisc introduces a network bias for TCP Prague flows over existing CUBIC flows.

- TCP Prague and DualPI2 exhibit a greater level of RTT unfairness than the presently used CUBIC and pfifo.

- Bursty traffic in the L queue, whether from applications (e.g. videoconferencing) or the network layer (e.g. WiFi) can cause between-flow induced delay that far exceeds the sub 1ms ultra-low latency goal.

- Bursty traffic in both the L and C queues can cause underutilization for flows in L.

- L4S transports experience intra-flow latency spikes at RFC3168 bottlenecks, particularly with the widely deployed fq_codel.

- The marking scheme in the DualPI2 qdisc is burst intolerant, causing under-utilization with bursty links (i.e. wireless).

- Tunnels may drop packets that traverse the C queue when anti-replay is enabled, leading to harm to classic traffic and enabling a DoS attack.

When L4S and non-L4S flows are in the same queue signaled by an RFC3168 AQM, the L4S flows can dominate non-L4S flows that use a conventional cwnd/2 response to CE and drop, regardless of whether the non-L4S flows are ECN capable.

The reason is that the L4S and RFC3168 responses to CE are incompatible. L4S flows expect high-frequency CE signaling, while non-L4S flows expect low-frequency CE signaling or drops. Not-ECT flows (flows not supporting ECN) are also dominated by L4S flows, because the AQM similarly drops packets for these flows instead of signaling CE.

See the Risk Assessment section for a discussion on the risks posed by this issue.

Figure 1 shows Prague vs CUBIC(Non-ECN) through a shared fq_codel queue. Note that here we use the parameter flows 1 for fq_codel, which simulates traffic in a shared queue. That happens for tunneled traffic, when a flow hash collision occurs, or may also happen when the qdisc hash has been customized.

Figure 2 shows Prague vs Reno(Non-ECN) through a shared fq_codel queue. Reno seems to be more apt to be driven to minimum cwnd in these tests.

Figure 3 shows Prague vs Reno(ECN) through a shared PIE queue with ECN enabled.

See the Scenario 6 Fairness Table for the steady-state throughput ratios of each run.

See the Scenario 6 results for fq_codel, PIE and RED, each with two different common configurations.

Using a real world test setup, we looked at the harm that TCP Prague can do in shared RFC3168 queues to video streaming services, relative to the harm done by CUBIC. Each test includes two minutes of competition with CUBIC and two minutes of competition with Prague, with one minute of no competition before, after and in between.

Here are the results:

| Service | Protocol | Bottleneck Bandwidth | CUBIC Throughput (Harm) | Prague Throughput (Harm) | Results |

|---|---|---|---|---|---|

| YouTube | QUIC (BBR?) | 30 Mbps | 7.8 Mbps (none apparent) | 19 Mbps (resolution reduction, 2160p to 1440p) | mp4, pcap |

| O2TV | HTTP/TCP (unknown CCA) | 20 Mbps | 16 Mbps (none apparent) | 19 Mbps (two freezes, quality reduction) | mov, pcap |

| SledovaniTV | HTTP/TCP (unknown CCA) | 5 Mbps | 3.9 Mbps (none apparent) | 4.6 Mbps (persistent freezes, quality reduction) | mp4, pcap |

The above results suggest that the harm that L4S flows do in shared RFC3168 queues goes beyond just unfairness towards long-running flows. They also show that YouTube (which appears to use BBR) is significantly less affected than O2TV or SledovaniTV (which appear to use conventional TCP). This may be because BBR's response to CE and drop differs from that of conventional TCP. Thus, it would seem that video streaming services using CUBIC or Reno are likely to be more affected than those using BBR.

Note that the SledovaniTV service uses a lower bitrate for its web-based streams, so a low bottleneck bandwidth was required for an effective comparison. This may not be as common a bottleneck bandwidth anymore, but can still occur in low rate ADSL or WiFi.

Using a standalone test program, FCT (flow completion time) tests were performed. Those results are here.

In the test, a series of short CUBIC flows (with lengths following a lognormal distribution) were run first with no competition, then with a long-running CUBIC flow, then with a long-running Prague flow. FCT Harm was calculated for each result.

Related to the unsafety in shared RFC3168 queues, when traffic passes through a tunnel, its encapsulated packets usually share the same 5-tuple, so inner flows lose the flow isolation provided by FQ bottlenecks. This means that when tunneled L4S and non-L4S traffic traverse the same RFC3168 bottleneck, even when it has FQ, there is no flow isolation to maintain safety between the flows.

In practical terms, the result is that L4S flows dominate non-L4S flows in the same tunnel (e.g. Wireguard or OpenVPN), when the tunneled traffic passes through fq_codel or CAKE qdiscs.
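The loss of flow isolation described above can be illustrated with a minimal sketch. It assumes a hypothetical hash (`queue_index`, built on SHA-256 rather than the kernel's actual flow hash), the fq_codel default of 1024 flow queues, and made-up addresses and ports. It only shows that inner flows carried in one outer flow necessarily land in the same queue.

```python
import hashlib

NUM_QUEUES = 1024  # fq_codel's default number of flow queues


def queue_index(five_tuple, num_queues=NUM_QUEUES):
    """Map a 5-tuple to a queue index (illustrative hash, not the kernel's)."""
    key = "|".join(str(field) for field in five_tuple).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_queues


def encapsulate(inner_flow):
    """Return the outer 5-tuple the bottleneck sees for a tunneled flow.

    Every inner flow is carried in the same outer UDP flow, so the
    result does not depend on inner_flow (hypothetical endpoints).
    """
    return ("192.0.2.1", 51820, "198.51.100.1", 51820, "udp")


# Two inner flows (hypothetical addresses and ports).
inner_prague = ("10.0.0.2", 40001, "10.0.1.2", 443, "tcp")
inner_cubic = ("10.0.0.2", 40002, "10.0.1.2", 443, "tcp")

same_queue = queue_index(encapsulate(inner_prague)) == queue_index(
    encapsulate(inner_cubic)
)
print(same_queue)  # True
```

Without the tunnel, the two inner 5-tuples would be hashed separately and would normally land in different queues, barring a hash collision.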

See the Risk Assessment section for a further discussion on this issue.

Here is a common sample topology:

------------------- ------------ -------------------

| Tunnel Endpoint |----| fq_codel |----| Tunnel Endpoint |

------------------- ------------ -------------------

In Figure 10 below, we can see how an L4S Prague flow (the red trace) dominates a standard CUBIC flow (the blue trace) in the same Wireguard tunnel:

See Unsafety in Shared RFC3168 Queues for more information on why this happens and what the result is.

See Scenario 5 in the Appendix for links to these results, which are expected to be similar with most any tunnel.

Note #1 In testing this scenario, it was discovered that the Foo over UDP tunnel has the ability to use an automatic source port (encap-sport auto), which restores flow isolation by using a different source port for each inner flow. However, this is tunnel dependent, and secure tunnels like VPNs are not likely to support this option, as doing so would be a security risk.

Note #2 Also in testing, we found that when using a netns (network namespaces) environment, the Linux kernel (5.4 at least) tracks a tunnel's inner flows even as their encapsulated packets cross namespace boundaries, making the results not representative of what typically happens in the real world. Flows not only get their own hash, but that hash can actually change across the lifetime of the flow, resulting in an unexpected AQM response. To avoid this problem, make sure the client, middlebox and server all run on different kernels when testing tunnels, as would be expected in the real world.

Measurements show that DualPI2 consistently gives TCP Prague flows a throughput advantage over conventional CUBIC flows, where both flows run over the same path RTT. In Figure 1 above, we compare the typical status quo in the form of a 250ms-sized dumb FIFO (middle) to DualPI2 (left) and an Approximate Fairness AQM (right) which actively considers queue occupancy of each flow. The baseline path RTT for both flows is 20ms, which is in the range expected for CDN to consumer traffic. Both flows start simultaneously and run for 3 minutes, with the throughput figures being taken from the final minute of the run as an approximation of reaching steady-state.

It is well-known that CUBIC outperforms NewReno on high-BDP paths where the polynomial curve grows faster than the linear one; the 250ms queue depth of the dumb FIFO and the relatively high throughput of the link puts the middle chart firmly in that regime. Because no AQM is present at the bottleneck, TCP Prague behaves approximately like NewReno and, as expected, is outperformed by CUBIC. It is difficult, incidentally, to see where L4S' "scalable throughput" claim is justified here, as CUBIC clearly scales up in throughput better in today's typical Internet environment.

L4S assumes that an L4S-aware AQM is present at the bottleneck. The left-hand chart shows what happens when DualPI2, which is claimed to implement L4S in the network, is indeed present there. In a stark reversal from the dumb FIFO scenario, TCP Prague is seen to have a large throughput advantage over CUBIC, in more than a 2:1 ratio. This cannot be explained by CUBIC's sawtooth behaviour, as that would leave much less than 50% of available capacity unused. We believe that several effects, both explicit and accidental, in DualPI2's design are giving TCP Prague an unfair advantage in throughput.

The CodelAF results are presented as an example of what can easily be achieved by actively equalising queue occupancy across flows through differential AQM activity, which compensates for differing congestion control algorithms and path characteristics. CodelAF was initially developed as part of SCE, but the version used here is purely RFC-3168 compliant. On the right side of Figure 2, you can see that CUBIC and TCP Prague are given very nearly equal access to the link, with considerably less queuing than in the dumb FIFO.

These results also hold on 10ms and 80ms paths, with only minor variations; most notably, at 80ms CUBIC loses a bit of throughput in CodelAF due to its sawtooth behaviour, but is still not disadvantaged to the extent that DualPI2 imposes. We also see very similar results to CodelAF when the current state-of-the-art fq_codel and CAKE qdiscs are used. Hence we show that DualPI2 represents a regression in behaviour from both the currently typical deployment and the state of the art, with respect to throughput fairness on a common RTT. We could even hypothesise from this data that a deliberate attempt to introduce a "fast lane" is in evidence here.

One of the so-called "Prague Requirements" adopted by L4S is to reduce the dependence on path RTT for flow throughput. Conventional single-queue AQM tends to result in a consistent average cwnd across flows sharing the bottleneck, and since BDP == cwnd * MTU == throughput * RTT, the throughput of each flow is inversely proportional to the effective RTT experienced by that flow, which in turn is the baseline path RTT plus the queue delay.

However, DualPI2 is designed to perpetuate this equalising of average cwnd, not only between flows in the same queue, but between the two classes of traffic it manages (L4S and conventional). Further, the effective RTT differs between the two classes of traffic due to the different AQM target in each, and the queue depth in the L4S class is limited to a very small value. The result is that the ratio of effective RTTs is not diluted by queue depth, as it would be in a deeper queue, and also not compensated for by differential per-flow AQM action, as it would be in FQ or AF AQMs which are already deployed to some extent.

This can be clearly seen in Figure 3 above, in which a comparatively extreme ratio of path RTTs has been introduced between two flows to illustrate the effect. In the middle, the 250ms dumb FIFO is clearly seen to dilute the effect (the effective RTTs are 260ms and 410ms respectively) to the point where, except for two CUBIC flows competing against each other, other effects dominate the result in terms of steady-state throughput. On the right, the AF AQM clearly reduces the RTT bias effect to almost parity, with the exception of the pair of CUBIC flows which are still slightly improved over the dumb FIFO.

But on the left, when the bottleneck is managed by DualPI2, the shorter-RTT flow has a big throughput advantage in every case - even overcoming the throughput advantage that DualPI2 normally gives to TCP Prague, as shown previously. Indeed the only case where DualPI2 shows better elimination of RTT bias than the dumb FIFO is entirely due to this bias in favour of TCP Prague. Additionally, in the pure-L4S scenario in which both flows are TCP Prague, the ratio of throughput actually exceeds the nominal 16:1 ratio of path RTTs.
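The dilution effect can be worked through numerically. The sketch below assumes equal average cwnd for both flows, so that throughput is inversely proportional to effective RTT. It uses path RTTs of 10ms and 160ms (inferred from the 16:1 ratio and the 260ms and 410ms effective RTTs quoted above), and an assumed queue delay of about 1ms for a shallow queue. The function name is illustrative.

```python
def throughput_ratio(short_rtt_ms, long_rtt_ms, queue_delay_ms):
    """Throughput ratio (short-RTT flow : long-RTT flow) at equal cwnd.

    With equal cwnd, throughput ~ 1 / effective RTT, where
    effective RTT = baseline path RTT + queue delay.
    """
    effective_short = short_rtt_ms + queue_delay_ms
    effective_long = long_rtt_ms + queue_delay_ms
    return effective_long / effective_short


deep_fifo = throughput_ratio(10, 160, 250)  # 410 / 260
shallow = throughput_ratio(10, 160, 1)      # 161 / 11 (assumed ~1 ms queue)

print(f"250 ms FIFO:   {deep_fifo:.2f}:1")  # 1.58:1
print(f"~1 ms queue:   {shallow:.2f}:1")    # 14.64:1
```

Under these assumptions, the deep FIFO reduces the nominal 16:1 bias to under 2:1, while a very shallow queue leaves it almost undiluted. This simple model does not explain measured ratios above 16:1.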

We conclude that DualPI2 does not represent "running code" supporting the L4S specification in respect of the "reduce RTT dependence" element of the Prague Requirements. Observing that the IETF standardisation process is predicated upon "rough consensus and running code", we strongly suggest that this deficiency be remedied before a WGLC process is considered.

Between-flow induced delay refers to the delay induced by one flow on another when they're in the same FIFO queue.

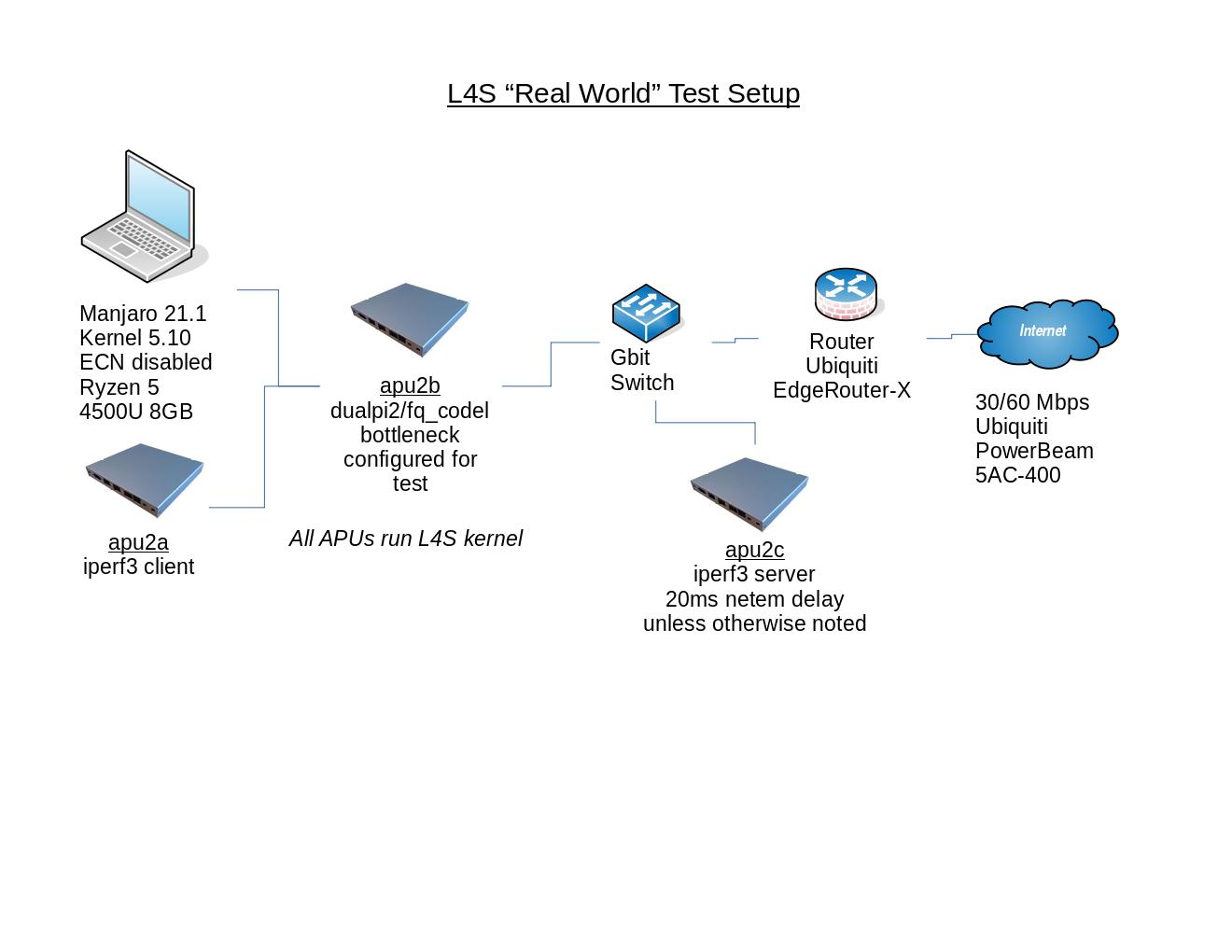

Using a real world test setup, we placed Skype video traffic (~2.5 Mbps bitrate) in the L queue on both egress and ingress dualpi2 instances at three different bottleneck bandwidths, 5 Mbps, 10 Mbps and 20 Mbps. Concurrently, we measured RTT through the bottleneck's L queue using irtt.

The results suggest that the bursty traffic typical of videoconferencing applications, which would seem to be good candidates for placement in the L queue, can induce delays that are far greater than the sub 1ms ultra-low latency goal.

A few notes about the CDF plot in Figure 5 below:

- The percentages are reversed from what may be expected, e.g. a percentile of 2% indicates that 98% of the samples were below the corresponding induced latency value.

- Induced latency is the RTT measurement from irtt, so delay through the L queue in each direction can be expected to be approximately half of that.

- fq_codel was also tested at each bandwidth, which just shows the flow switching times for FQ, since the measurement flow goes through a separate queue.
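The reversed percentages in the notes above correspond to a complementary CDF: for each latency value, the share of samples that exceeded it. Here is a minimal sketch of that calculation, using made-up RTT samples rather than data from these tests, along with the rough halving for one-way delay mentioned above.

```python
def percent_exceeding(samples_ms, threshold_ms):
    """Percentage of samples strictly above threshold_ms."""
    above = sum(1 for sample in samples_ms if sample > threshold_ms)
    return 100.0 * above / len(samples_ms)


def approx_one_way_ms(rtt_ms):
    """Rough one-way delay, assuming symmetric delay in each direction."""
    return rtt_ms / 2


# Hypothetical irtt RTT samples in milliseconds (not measured data).
rtts_ms = [0.4, 0.6, 0.8, 0.9, 1.2, 1.5, 3.0, 7.5, 12.0, 20.0]

print(percent_exceeding(rtts_ms, 1.0))  # 60.0
print(approx_one_way_ms(3.0))           # 1.5
```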

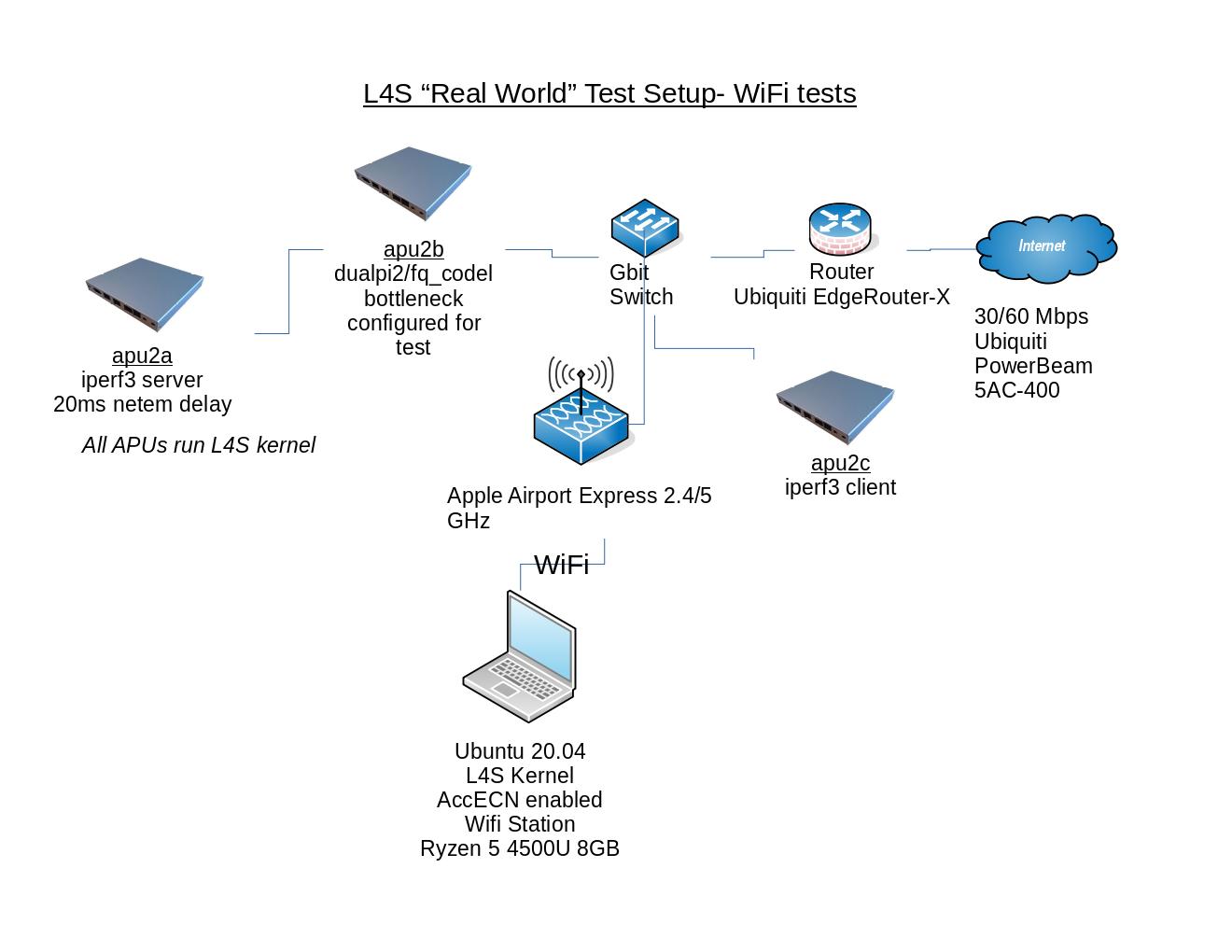

Using a real world test setup for WiFi, we ran two-flow competition between two TCP Prague flows, one wired and one WiFi, at 20Mbps with 20ms RTT. The wired flow starts at T=0 and runs the length of the test. The WiFi flow starts at T=30 and ends at T=90. In Figure 6a below, we can compare the difference in TCP RTT when the WiFi flow is active, and observe that the induced delay in the wired flow exceeds the sub 1ms ultra-low latency goal.

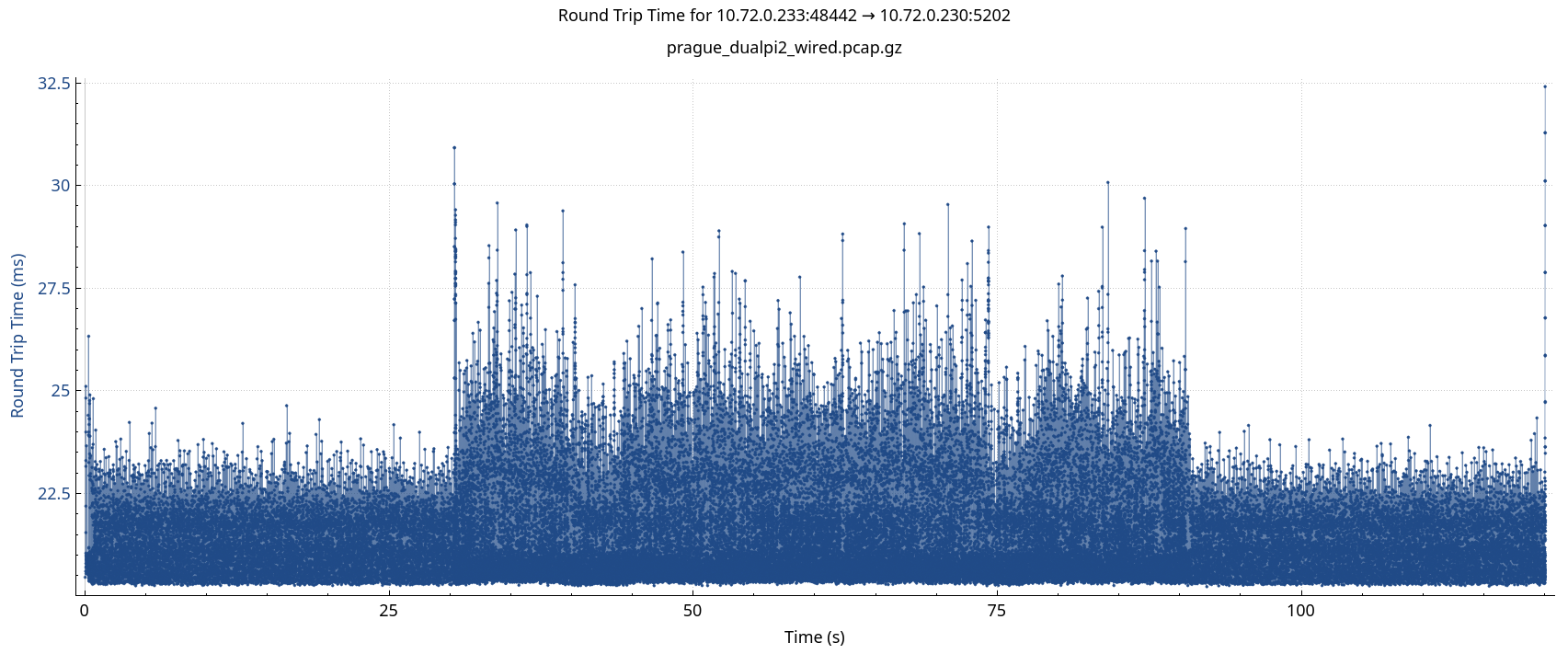

Figure 6a- TCP Prague TCP RTT for wired flow, with competing WiFi flow


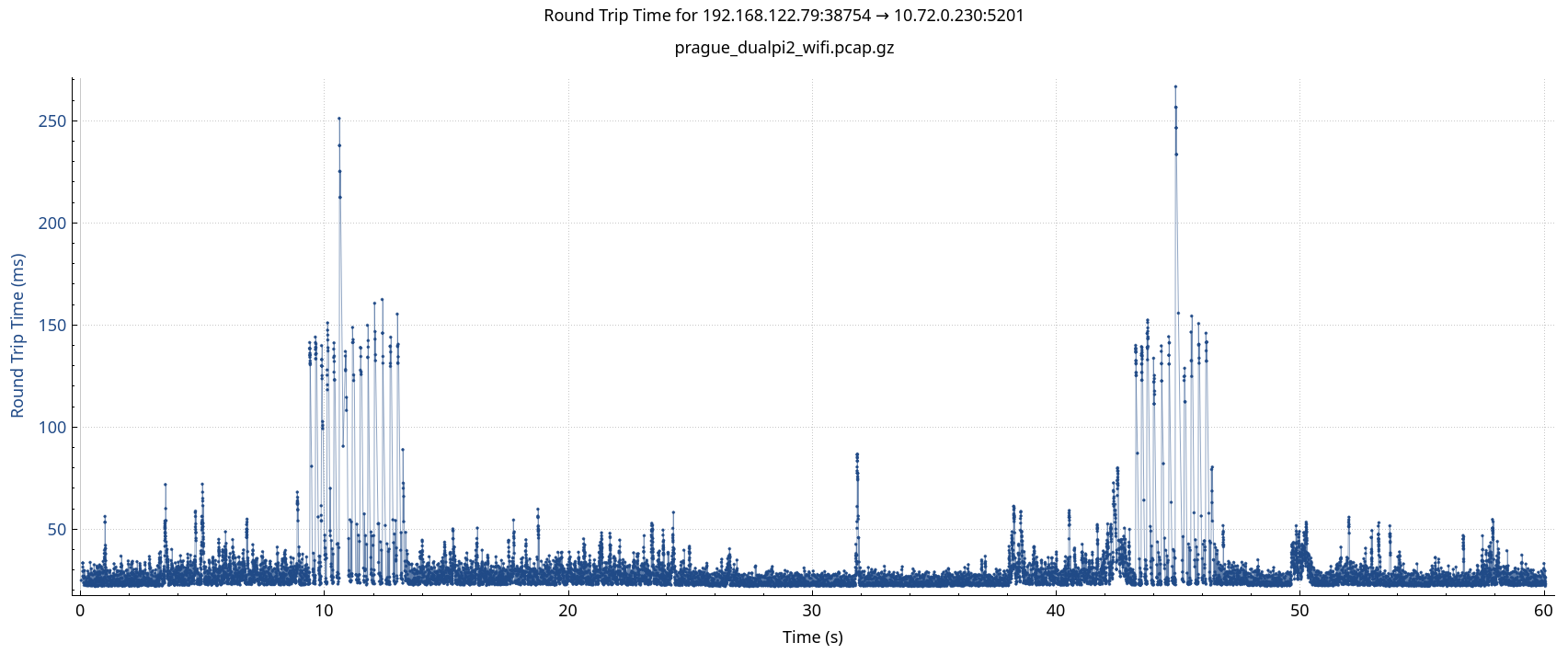

Just to put things in perspective, in Figure 6b below, we can also see that the WiFi flow itself shows delays that far exceed those that come from queueing. Note that T=0 to T=60 here corresponds to T=30 to T=90 in Figure 6a.

Figure 6b- TCP Prague TCP RTT for WiFi flow


It may be argued that the above means "we must fix WiFi". There may be room for improvement with WiFi; however, this is the Internet as it is today, and there is a discussion to be had around whether reliably low queueing delays will ultimately require flow separation.

Using a real world test setup, we placed Skype video traffic in DualPI2(L), DualPI2(C) and Codel queues, and measured the goodput for CUBIC and Prague, with and without Skype competition. While the bursty Skype traffic has little impact on conventional AQMs and CCAs, we see significantly reduced utilization for L4S Prague flows in DualPI2 when Skype is in the L queue, and also, to a lesser extent, when Skype traffic is in the C queue.

Important Note: There was a mistake in the test configuration that placed UDP packets with length > 1024 in the L queue, instead of UDP packets with port > 1024. This affects only the two tests with Skype in the L queue, and means that for those tests, there was less Skype traffic in L than was intended.

Figure 7 below uses data from the subsections that follow. Goodput is measured using relative TCP sequence number differences over time. The theoretical maximum goodput with Skype is the CCA's goodput without competition, minus the Skype bitrate calculated from the pcap (about 2.3 Mbps for the Prague runs and 1.8 Mbps for the CUBIC runs).

Figure 7

With Prague in DualPI2, we see remarkable underutilization with Skype traffic in L, with a 15.97 Mbps backoff in steady-state goodput in response to 2.3 Mbps of Skype traffic. Some would argue that bursty traffic must not be placed in L. We leave it as an exercise for the reader to determine how feasible that is.

We also see significant underutilization with Skype traffic in C, with a 5.63 Mbps backoff in goodput in response to 2.3 Mbps of Skype traffic, when bursts that arrive in C impact L via the queue coupling mechanism.

| CCA | Qdisc | Skype | Tstart | Tend | State | Goodput (Mbps) | Backoff (Mbps) | Links |

|---|---|---|---|---|---|---|---|---|

| Prague | DualPI2 | no | 43.08 | 120 | steady | 18.27 | n/a | (plot, pcap) |

| Prague | DualPI2 | yes, in L | 38.13 | 120 | steady | 2.29 | 15.97 | (plot, pcap) |

| Prague | DualPI2 | yes, in C | 35.08 | 120 | steady | 12.64 | 5.63 | (plot, pcap) |
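The Backoff column in the table above appears to be the goodput without Skype minus the goodput with Skype. The sketch below recomputes it from the rounded table values and compares it with the roughly 2.3 Mbps of Skype traffic, on the assumption that an ideal response would back off by no more than the Skype bitrate. Because it uses rounded inputs, the results can differ from the table in the last digit (for example 15.98 against the table's 15.97).

```python
SKYPE_MBPS = 2.3        # Skype bitrate for the Prague runs, from the pcap
BASELINE_MBPS = 18.27   # Prague goodput in DualPI2 without Skype

# Steady-state Prague goodput with Skype present (from the table above).
goodput_with_skype = {"Skype in L": 2.29, "Skype in C": 12.64}


def backoff_mbps(baseline, with_competition):
    """Goodput given up relative to the no-competition baseline."""
    return baseline - with_competition


def excess_backoff_mbps(baseline, with_competition, competitor_rate):
    """Backoff beyond the capacity the competing traffic actually uses."""
    return backoff_mbps(baseline, with_competition) - competitor_rate


for label, goodput in goodput_with_skype.items():
    backoff = backoff_mbps(BASELINE_MBPS, goodput)
    excess = excess_backoff_mbps(BASELINE_MBPS, goodput, SKYPE_MBPS)
    print(f"{label}: backoff {backoff:.2f} Mbps, excess {excess:.2f} Mbps")
```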

With CUBIC in DualPI2 and Skype traffic in C, CUBIC utilizes the link in full. With Skype traffic in L, the bursts that arrive in L impact C via the queue coupling mechanism, reducing CUBIC's utilization somewhat.

CUBIC in DualPI2 sees two distinct phases of operation during steady-state, so it's divided here into early and late. We also see a pathological drop in throughput after slow-start exit visible in the time series plot, due to an apparent overreaction from PI in combination with the bursty Skype traffic.

| CCA | Qdisc | Skype | Tstart | Tend | State | Goodput (Mbps) | Backoff (Mbps) | Links |

|---|---|---|---|---|---|---|---|---|

| CUBIC | DualPI2 | no | 1.88 | 120 | steady | 16.22 | n/a | (plot, pcap) |

| CUBIC | DualPI2 | yes, in L | 3.42 | 120 | steady | 13.39 | 2.83 | (plot, pcap) |

| CUBIC | DualPI2 | yes, in C | 16.29 | 38.67 | steady (early) | 13.67 | 2.54 | (plot, pcap) |

| CUBIC | DualPI2 | yes, in C | 38.67 | 120 | steady (late) | 14.77 | 1.45 | (plot, pcap) |

| CUBIC | DualPI2 | yes, in C | 16.29 | 120 | steady | 14.53 | 1.69 | (plot, pcap) |

With CUBIC in a single Codel queue, Codel absorbs Skype's bursts with little to no spurious signalling, allowing CUBIC to fully utilize the available link capacity.

| CCA | Qdisc | Skype | Tstart | Tend | State | Goodput (Mbps) | Backoff (Mbps) | Links |

|---|---|---|---|---|---|---|---|---|

| CUBIC | Codel | no | 1.88 | 120 | steady | 16.86 | n/a | (plot, pcap) |

| CUBIC | Codel | yes | 1.98 | 120 | steady | 15.08 | 1.78 | (plot, pcap) |

Intra-flow latency refers to the delay experienced by a single flow. Increases in intra-flow latency lead to:

- longer recovery times on loss or CE, which may be experienced by the user as response time delays

- delays for requests that are multiplexed over a single flow

Because [l4s-id] redefines the CE codepoint in a way that is incompatible with RFC3168, L4S transports underreact to the CE signals sent by existing RFC3168 AQMs, which still take ECT(1) to mean RFC3168 capability and are not aware of its use as an L4S identifier. This causes them to inflate queues where these AQMs are deployed, even when FQ is present. We usually discuss this in the context of safety for non-L4S flows in the same RFC3168 queue, but the added delay that L4S flows can induce on themselves in RFC3168 queues is a performance issue.

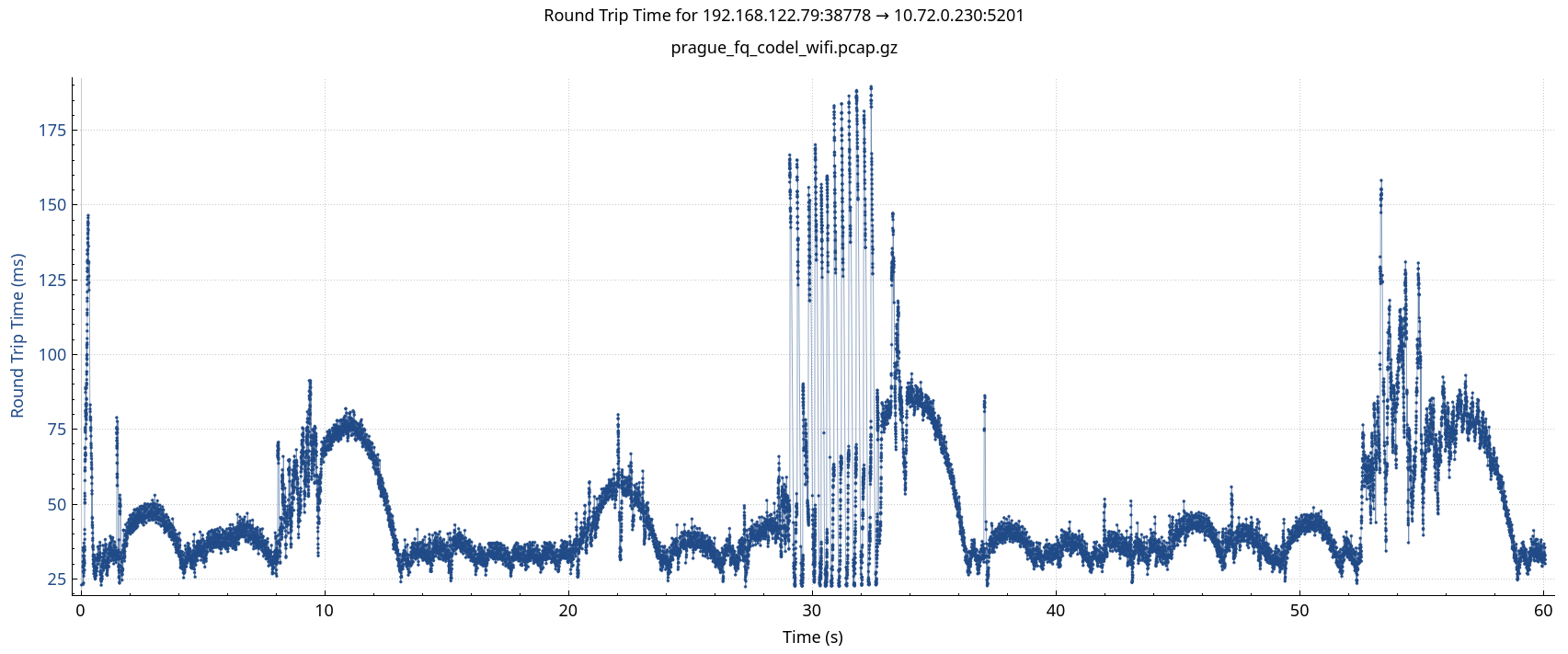

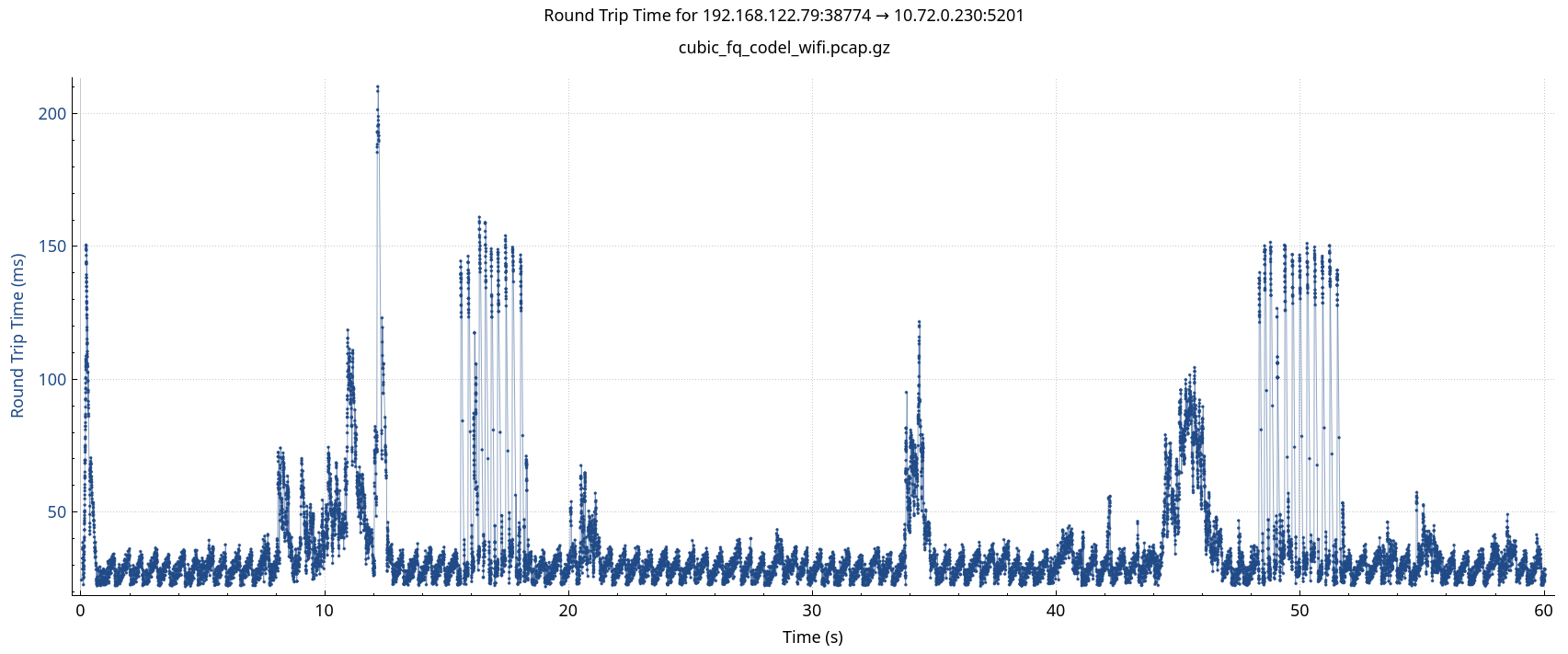

For a practical example, we can look at the TCP RTT of two TCP Prague flows through an fq_codel bottleneck, where one sender is using WiFi (see WiFi Setup), and the other Ethernet. WiFi's naturally varying rates cause varying rates of arrival at the bottleneck, leading to TCP RTT spikes on rate reductions.

First, the TCP RTT for Prague from the WiFi client through fq_codel:

Figure 8a- TCP Prague in fq_codel, WiFi client


and for the corresponding Prague flow from the wired client (also affected from T=30 to T=90 while the WiFi flow is active, as its available capacity in fq_codel changes):

Figure 8b- TCP Prague in fq_codel, wired client


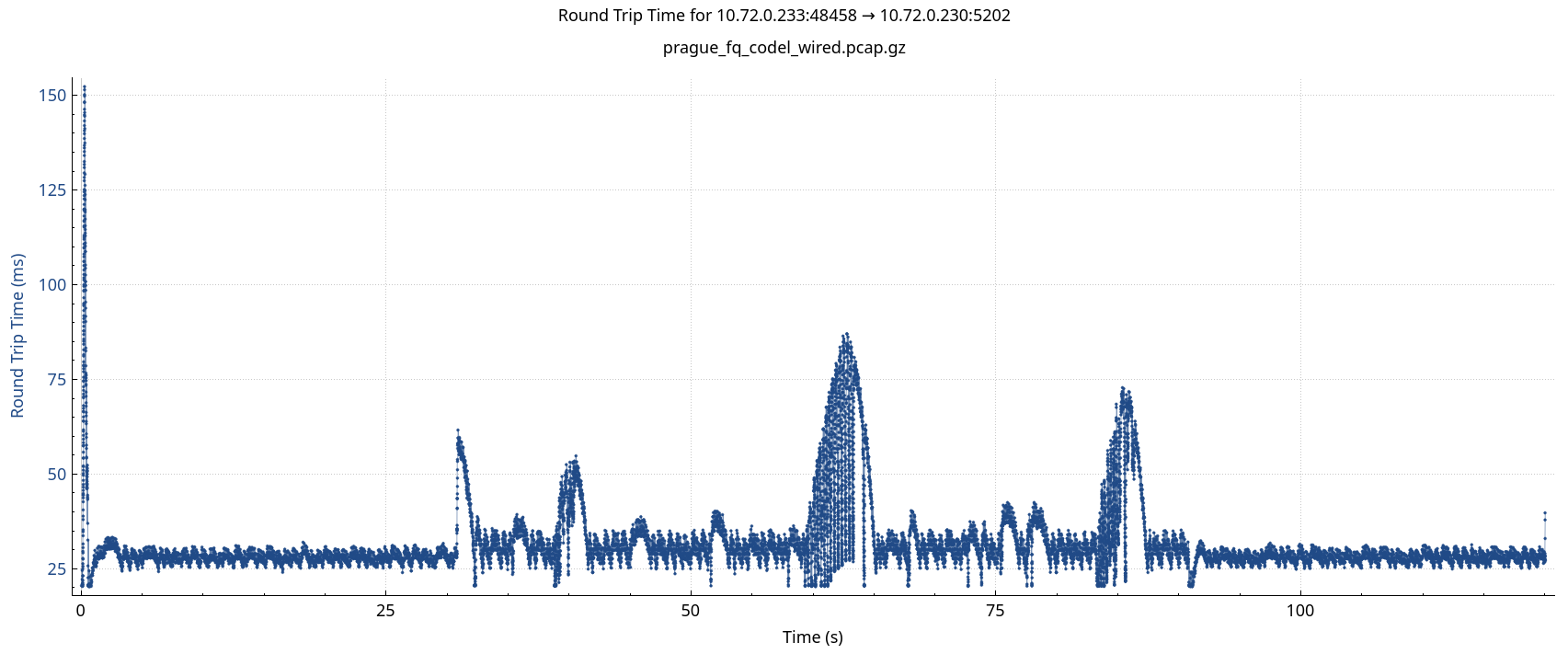

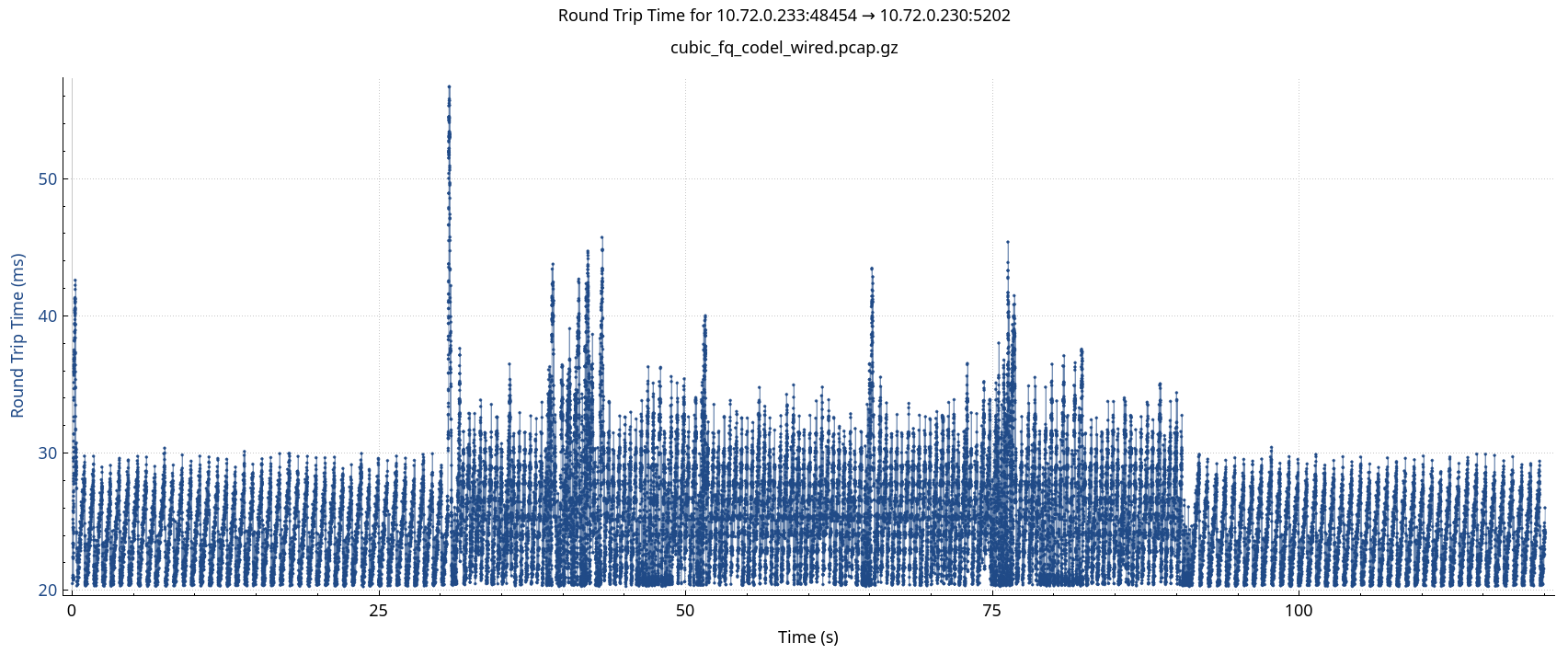

Compare this with how a CUBIC flow from the same WiFi client behaves through fq_codel:

Figure 9a- TCP CUBIC in fq_codel, WiFi client


and the corresponding CUBIC flow from a wired client through fq_codel:

Figure 9b- TCP CUBIC in fq_codel, wired client


For a more controlled example, let's look at what happens when a standard CUBIC flow experiences a 50% rate reduction in an fq_codel queue, from 50Mbps to 25Mbps (see Figure 10).

In Figure 10 above, we can see a brief spike in intra-flow latency (TCP RTT) at around T=30, as Codel's estimator detects the queue, and the flow is signaled to slow down. CUBIC reacts with the expected 50% multiplicative decrease.

Next, let's look at the result when an L4S TCP Prague flow experiences the same 50% rate reduction (see Figure 11 below):

Comparing Figure 10 and Figure 11, we can see that the induced latency spike has a much longer duration for TCP Prague than CUBIC. Note that although the spike may appear small in magnitude due to the plot scale, 100ms is a significant induced delay when targets in the L4S queue are around 1ms, and further, we can see that the spike lasts around 5 seconds. This occurs because TCP Prague misinterprets the CE signal as coming from an L4S queue instead of an RFC3168 queue. Prague reacts with a small linear cwnd reduction instead of the expected multiplicative decrease, building excessive queue until Codel's signaling eventually gets it under control.
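The size of the mismatch can be sketched by comparing the two responses to a single CE mark. This is an illustration only: it assumes a DCTCP-style response (as described in RFC 8257) as a stand-in for an L4S sender, a gain of 1/16, a hypothetical cwnd of 100 packets, and no recent marking. It is not TCP Prague's actual code.

```python
MIN_CWND = 2.0  # packets; floor used in this sketch


def classic_response(cwnd):
    """Conventional response to a CE mark or drop: halve cwnd."""
    return max(cwnd / 2, MIN_CWND)


def update_alpha(alpha, marked, acked, gain=1 / 16):
    """DCTCP-style moving average of the per-RTT fraction of CE marks."""
    fraction = marked / acked
    return (1 - gain) * alpha + gain * fraction


def scalable_response(cwnd, alpha):
    """DCTCP-style reduction, proportional to the marking estimate alpha."""
    return max(cwnd * (1 - alpha / 2), MIN_CWND)


cwnd = 100.0   # hypothetical window, in packets
alpha = 0.0    # assumed: no marks seen recently

# One CE mark in a window of 100 packets, as a sparse RFC3168 AQM might send.
alpha = update_alpha(alpha, marked=1, acked=100)

print(classic_response(cwnd))          # 50.0
print(scalable_response(cwnd, alpha))  # just under 100
```

Under these assumptions, a sender expecting frequent marks barely reduces its window in response to a signal that a conventional sender treats as a request to halve it.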

The consequences of L4S transports underreacting to RFC3168 CE signals can be more severe as the rate reductions get larger. See Figure 12 and Figure 13 below for what happens to TCP Prague flows when reduced from 50Mbps to 5Mbps and 1Mbps, respectively. These larger reductions may be encountered, for example, as wireless devices with fq_codel built into the driver change rates in areas of intermittent AP coverage.

In Figure 13 above, we see a latency spike that has exceeded the fixed scale of our plot. However, a review of the .flent.gz file shows the maximum TCP RTT to be 4346ms, and we can see that the spike lasts for over 30 seconds.

See the Scenario 3 results, in particular for TCP Prague through fq_codel, to look at what happens when rates vary several times over the course of a flow.

The default marking scheme used in the DualPI2 L queue begins at a shallow, sub 1 ms threshold. While intended to keep queues shorter, it can also cause excessive marking upon bursty packet arrivals. This can result in link under-utilization, for example when packets have passed through a wireless link, where they are grouped into aggregates then sent at line rate from the receiver. With WiFi, bursts of up to 4ms may occur. Noting RFC7567, Section 4.3, it is possible to tune DualPI2 using the step_thresh parameter.

Note that burstiness is distinguished from jitter, which is associated with a variance in inter-packet gaps, but does not necessarily consist of well-defined bursts of packets at line rate.

Using a real world test setup, we ran 5 minute single flow tests from the Czech Republic under two different conditions:

- upload tests were done to Portland, USA, with a 160ms baseline RTT

- download tests were done from a server within the same ISP, with a 7ms baseline RTT

This was an upload test from the Czech Republic to Portland. The access link in the Czech Republic uses a PowerBeam 5AC-400 with Ubiquiti's airMAX AC technology. The access link in Portland is 1Gbit fiber. The Qdisc was applied at the receiving server, on ingress.

20 Mbps Bottleneck

| CCA | Qdisc | Queue | GoodputSS | Tstart | Tend | Retr. | Links |

|---|---|---|---|---|---|---|---|

| Prague | DualPI2 | L | 11.00 | 145.55 | 295 | 3 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 13.49 | 2.94 | 295 | 7 | tput, rtt, pcap |

| BBR2 | fq_codel | - | 18.47 | 2.75 | 295 | 11 | tput, rtt, pcap |

| CUBIC | DualPI2 | C | 16.24 | 22.94 | 295 | 5 | tput, rtt, pcap |

| CUBIC | fq_codel | - | 15.78 | 2.54 | 295 | 7 | tput, rtt, pcap |

25 Mbps Bottleneck

| CCA | Qdisc | Queue | GoodputSS | Tstart | Tend | Retr. | Links |

|---|---|---|---|---|---|---|---|

| Prague | DualPI2 | L | 12.58 | 52.22 | 169.03 | 2 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 15.18 | 14.13 | 295 | 8 | tput, rtt, pcap |

| BBR2 | fq_codel | - | 23.10 | 2.74 | 295 | 13 | tput, rtt, pcap |

| CUBIC | DualPI2 | C | 19.75 | 13.44 | 295 | 11 | tput, rtt, pcap |

| CUBIC | fq_codel | - | 18.64 | 13.85 | 295 | 4 | tput, rtt, pcap |

The table columns are as follows:

- CCA: congestion control algorithm

- Qdisc: queueing discipline

- Queue: DualPI2 queue (L or C), where applicable

- GoodputSS: goodput at steady-state

- Tstart: start time of steady-state, relative to start of measurement flow

- Tend: end time of steady-state, relative to start of measurement flow

- Retr.: number of TCP retransmits during test, as determined by Wireshark's tcp.analysis.retransmission

- Links: links to throughput plot, TCP RTT plot, and pcap

Analysis:

It can be seen that the CCAs using L4S signalling (in the L queue) significantly underutilize the link compared to classic CCAs using conventional RFC3168 signalling.

In Figure 14 below, we can see TCP Prague's throughput through the bursty link, to Portland, with a 20 Mbps DualPI2 bottleneck. The reductions in throughput around T=80 to T=130 are responses to dropped packets, and are excluded from the steady-state throughput calculations. There is some amount of "random" loss on the path, so we didn't want that to be the determining factor in the results, but instead wanted to focus primarily on the high-fidelity congestion control response. Even allowing for that, TCP Prague significantly underutilizes the link.

Compare this with Figure 15 below, which shows the throughput of a conventional CUBIC flow through the DualPI2 C queue:

This was a download test from a server within the same ISP, connected with 1Gbit Ethernet. The access link uses a PowerBeam 5AC-400 with Ubiquiti's airMAX AC technology. The Qdisc was applied on a middlebox in the same Ethernet LAN as the receiving server, behind the wireless access link. Here, we also explore the effects of raising the step_thresh parameter from the default of 1ms to 5ms.

20 Mbps Bottleneck

| CCA | Qdisc | Queue | step_thresh | Goodputmean | Links |

|---|---|---|---|---|---|

| Prague | DualPI2 | L | 1ms (default) | 14.3 | tput, rtt, pcap |

| Prague | DualPI2 | L | 5ms | 18.5 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 1ms (default) | 11.3 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 5ms | 18.1 | tput, rtt, pcap |

| BBR2 | fq_codel | - | n/a | 18.5 | tput, rtt, pcap |

| CUBIC | DualPI2 | C | n/a | 18.9 | tput, rtt, pcap |

| CUBIC | fq_codel | - | n/a | 18.8 | tput, rtt, pcap |

40 Mbps Bottleneck

| CCA | Qdisc | Queue | step_thresh | Goodputmean | Links |

|---|---|---|---|---|---|

| Prague | DualPI2 | L | 1ms (default) | 22.0 | tput, rtt, pcap |

| Prague | DualPI2 | L | 5ms | 36.9 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 1ms (default) | 16.9 | tput, rtt, pcap |

| BBR2 | DualPI2 | L | 5ms | 30.6 | tput, rtt, pcap |

| BBR2 | fq_codel | - | n/a | 36.8 | tput, rtt, pcap |

| CUBIC | DualPI2 | C | n/a | 37.5 | tput, rtt, pcap |

| CUBIC | fq_codel | - | n/a | 37.5 | tput, rtt, pcap |

The table columns are as follows:

- CCA: congestion control algorithm

- Qdisc: queueing discipline

- Queue: DualPI2 queue (L or C), where applicable

- step_thresh: DualPI2 step_thresh parameter, where applicable

- Goodputmean: mean goodput (according to iperf3 receiver)

- Links: links to throughput plot, TCP RTT plot, and pcap

Analysis:

The results at local 7ms RTTs mirror those at 160ms, in that we see L4S transports underutilize the link with the default threshold of 1ms. Raising the bottleneck bandwidth from 20 Mbps to 40 Mbps seemed to increase the relative underutilization (compare Prague's goodput of 14.3 Mbps in a 20 Mbps bottleneck with 22 Mbps in a 40 Mbps bottleneck). One explanation for this could be larger aggregate sizes.

Here, we also test raising the threshold to 5ms. This increases Prague's goodput nearly to that of conventional CCAs, but even with the increase, BBR2 still saw a lower goodput through the 40 Mbps bottleneck than conventional CCAs (30.6 Mbps vs 37.5 Mbps).
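The relative underutilization discussed in this analysis can be made explicit by expressing each goodput as a percentage of the bottleneck bandwidth. The sketch below does this for the Prague rows of the two tables above. The helper name is illustrative, and protocol overhead is ignored, so 100% is not reachable in practice.

```python
def utilization_percent(goodput_mbps, bottleneck_mbps):
    """Goodput as a percentage of the configured bottleneck bandwidth."""
    return 100.0 * goodput_mbps / bottleneck_mbps


# (bottleneck Mbps, step_thresh) -> Prague mean goodput in Mbps, from the tables.
prague_goodput = {
    (20, "1ms"): 14.3,
    (20, "5ms"): 18.5,
    (40, "1ms"): 22.0,
    (40, "5ms"): 36.9,
}

for (bottleneck, step_thresh), goodput in prague_goodput.items():
    pct = utilization_percent(goodput, bottleneck)
    print(f"{bottleneck} Mbps, step_thresh {step_thresh}: {pct:.1f}%")
```

With the default threshold, Prague reaches roughly 72% of the 20 Mbps bottleneck but only 55% of the 40 Mbps bottleneck; with a 5ms threshold both are above 90%.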

The difference in goodputs is likely due to the tight AQM marking and short L queue, relative to the bursty packet arrivals. In the TCP sequence diagrams in Figure 16 and Figure 17 taken from tests to Portland, we can compare the difference in burstiness between the paced packets from TCP Prague as they are sent:

and as they are received at a bottleneck:

Scenario 2 and Scenario 3 both include runs with netem simulated bursts of approximately 4ms in duration. In Figure 20, we can see how CUBIC through fq_codel handles such bursts.

Next, in Figure 21 we see how TCP Prague through DualPI2 handles the same bursts:

In Figure 20 and Figure 21 we can see that the lower TCP RTT of TCP Prague comes with a tradeoff of about a 50% reduction in link utilization. While this may be appropriate for low-latency traffic, capacity seeking bulk downloads may prefer increased utilization at the expense of some intra-flow delay. We raise this point merely to help set the expectation that maintaining strictly low delays at bottlenecks comes at the expense of some link utilization for typical Internet traffic.

Two important tsvwg mailing list posts on this issue:

- L4S & VPN anti-replay interaction: Explanation (explaining the exact mechanics)

- L4S dual-queue re-ordering and VPNs (on use of this as a DoS attack vector)

Tunnels that use anti-replay may drop packets that arrive outside the protection window after they traverse the DualPI2 C queue. This can cause reduced performance for tunneled, non-L4S traffic, and is a safety issue from the standpoint that conventional traffic in tunnels with replay protection enabled may be harmed by the deployment of DualPI2, including IPsec tunnels using common defaults. It also enables a DoS attack that can halt C queue traffic (see above).

The plots in Table 1 below show two-flow competition between CUBIC and Prague at a few bandwidths and replay window sizes, as a way to illustrate what the effect is and when it occurs. A replay window of 0 means replay protection is disabled, showing the standard DualPI2 behavior in these conditions. With other replay window sizes, conventional traffic (CUBIC) can show reduced throughput, until the window is high enough such that drops do not occur.

| Bandwidth | Replay Window | RTT | Plot |

|---|---|---|---|

| 10Mbps | 0 | 20ms | SVG |

| 10Mbps | 32 | 20ms | SVG |

| 10Mbps | 64 | 20ms | SVG |

| 20Mbps | 0 | 20ms | SVG |

| 20Mbps | 32 | 20ms | SVG |

| 20Mbps | 64 | 20ms | SVG |

| 100Mbps | 0 | 20ms | SVG |

| 100Mbps | 32 | 20ms | SVG |

| 100Mbps | 64 | 20ms | SVG |

| 100Mbps | 128 | 20ms | SVG |

| 100Mbps | 256 | 20ms | SVG |

Table 1

As an example, Figure 22 below shows the plot for a 20Mbps bottleneck with a 32 packet window. We can see that throughput for CUBIC is much lower than what would be expected under these conditions, due to packet drops by anti-replay.

IPsec tunnels commonly use a 32 or 64 packet replay window as the default (32 for strongswan, as an example). Tunnels using either of these values are affected by this problem at virtually all bottleneck bandwidths.

To avoid this problem, the replay window should be large enough to allow for at least the number of packets that can arrive during the maximum difference between the sojourn times for C and L. Assuming that the sojourn time through L can sometimes be close to 0, the peak sojourn time through C becomes the most significant quantity. Under some network conditions (e.g. lower bandwidths or higher loads), peak C sojourn times can increase to 50ms or higher. As an example, to account for 100ms peak sojourn times in a 20Mbps link with 1500 byte packets, a value of at least 167 packets could be used (20,000,000 / 8 / 1500 / (1000 / 100) ≈ 166.7, rounded up). Note that some tunnels may only be configured with replay window sizes that are a power of 2.
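The sizing arithmetic above can be sketched in a few lines of Python. This is only a rough estimate under the same assumptions as the text (all packets are 1500 bytes, and the L sojourn time is close to 0); the function names `min_replay_window` and `next_power_of_two` are hypothetical helpers, not part of any tunnel's configuration interface.

```python
def min_replay_window(bandwidth_bps, sojourn_ms, packet_bytes=1500):
    """Packets that can arrive during sojourn_ms at bandwidth_bps, rounded up.

    Expects integer arguments so the ceiling division stays exact.
    """
    numerator = bandwidth_bps * sojourn_ms
    denominator = 8 * packet_bytes * 1000
    return -(-numerator // denominator)  # integer ceiling division


def next_power_of_two(n):
    """Smallest power of 2 that is >= n (for n >= 1)."""
    return 1 << (n - 1).bit_length()


# The example from the text: 100ms peak C sojourn time on a 20Mbps link.
packets = min_replay_window(20_000_000, 100)
print(packets, next_power_of_two(packets))
```

For the 20Mbps example this gives 167 packets, or 256 for tunnels restricted to power-of-2 window sizes. Under the same assumptions, a 1Gbps link with a 100ms peak sojourn time would need 8334 packets.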

Modern Linux kernels have a fixed maximum replay window size of 4096 (XFRMA_REPLAY_ESN_MAX in xfrm.h).

Wireguard uses a hardcoded value of 8192 with no option for runtime configuration, increased from 2048 in May 2020 by this commit.

Depending on the maximum limit supported by a particular tunnel implementation, replay protection may need to be disabled for high-speed tunnels, if it's possible to do so.

Thanks to Sebastian Moeller for reporting this issue.

Risk = Severity * Likelihood

To estimate the risk of the reported safety problems, this section looks at both Severity and Likelihood.

The results reported in Unsafety in Shared RFC3168 Queues indicate a high severity when safety problems occur. Competing flows are driven to at or near minimum cwnd. Referring to draft-fairhurst-tsvwg-cc-05, Section 3.2:

Transports MUST avoid inducing flow starvation to other flows that share resources along the path they use.

We have previously defined starvation as "you know it when you see it", a definition that applies here.

The only requirement for the reported unsafety to occur is congestion in a shared queue managed by an RFC3168 AQM. That can happen either in a single queue AQM, or for traffic that ends up in the same queue in an FQ AQM, most commonly by tunneled traffic, but also by hash collision, or when the flow hash has been customized.
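As a rough illustration of the hash collision case only, the following Python sketch estimates how often a given flow shares its queue with at least one other flow. It assumes flows are hashed uniformly and independently into a fixed number of queues, which real traffic and real hash functions may not satisfy; the function name `p_shares_queue` is hypothetical, and the default of 1024 queues is an assumed configuration rather than a measured value.

```python
def p_shares_queue(n_flows, n_queues=1024):
    """Probability that one particular flow shares its queue with another flow.

    Assumes each of the other (n_flows - 1) flows lands in a uniformly random
    queue, independently of the rest.
    """
    if n_flows < 1 or n_queues < 1:
        raise ValueError("n_flows and n_queues must be at least 1")
    return 1.0 - (1.0 - 1.0 / n_queues) ** (n_flows - 1)


for n in (2, 10, 100, 1000):
    print(n, round(p_shares_queue(n), 4))
```

This says nothing about tunneled traffic, where all inner flows share one queue by construction regardless of the number of queues.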

The non-L4S flow being dominated does not need to be ECN capable in order to be affected.

We consider likelihood from the angle of both deployments and observed signaling. While the number of deployments suggests the likelihood, the observed signaling on the Internet may indicate it more directly.

It has been suggested that deployment of single-queue RFC3168 AQMs is minimal. We have no data supporting or denying that.

The fq_codel qdisc has been in the Linux kernel since version 3.6 (late 2012) and ships with commercial products and open source projects. It has been integrated into the ath9k, ath10k, mt76 and iwl WiFi drivers, and is used in Google WiFi and OpenWrt, as well as vendor products that depend on OpenWrt, such as Open Mesh products. OpenWrt uses fq_codel in the supported WiFi drivers by default, and Open Mesh products do as well. The Ubiquiti EdgeMAX and UniFi products use it for their Smart Queueing feature. The Preseem platform uses it for managing queues in ISP backhauls.

An earlier tsvwg thread on fq_codel deployments is here.

Contributions to this section would be useful, including known products, distributions or drivers that mark or do not mark by default.

A previously shared slide deck on observations of AQM marking is here. This reports a "low but growing" level of CE marking.

ECN counter data from a Czech ISP is here. While the stateless nature of the counters makes a complete interpretation challenging, both incoming and outgoing CE marks are observed. However, the possibility that some were due to incorrect usage of the former ToS byte has not been eliminated. Work to improve the quality of that data is ongoing.

On macOS machines, the command sudo netstat -sp tcp may be run to display ECN statistics, which include information on observed ECN signaling.

Contributions to this section from various regions and network positions would be useful.

In a typical risk matrix, high-severity outcomes such as these leave very little tolerance for the probability of occurrence. If the reported outcome is not acceptable, then the tolerance for likelihood is exactly 0. What we can say for sure is that observed AQM signaling on the Internet is greater than 0.

In the following results, the links are named as follows:

- plot: the plot svg

- cli.pcap: the client pcap

- srv.pcap: the server pcap

- teardown: the teardown log, showing qdisc config and stats

| Bandwidth | RTTs | qdisc | vs | L4S |

|---|---|---|---|---|

| 50Mbit | 10ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 10ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-20ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-80ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 20ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 80ms-20ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 50Mbit | 160ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 10ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-20ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-80ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 20ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 80ms-20ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | dualpi2 | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | dualpi2 | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | dualpi2 | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | dualpi2 | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | pfifo | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | pfifo | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | pfifo | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | pfifo | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | fq_codel | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | fq_codel | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | fq_codel | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | cnq_codel_af | cubic-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | cnq_codel_af | prague-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | cnq_codel_af | cubic-vs-prague | plot - cli.pcap - srv.pcap - teardown |

| 10Mbit | 160ms-10ms | cnq_codel_af | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| Burstiness | Bandwidth1 | RTT | qdisc | CC | L4S |

|---|---|---|---|---|---|

| clean | 25Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 25Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 10Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 5Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| clean | 1Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 25Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 10Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 5Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 1Mbit | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| Burstiness | RTT | qdisc | CC | L4S |

|---|---|---|---|---|

| not_bursty | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| not_bursty | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 20 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 20 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 20 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 20 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 80 | dualpi2 | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 80 | dualpi2 | prague | plot - cli.pcap - srv.pcap - teardown |

| bursty | 80 | fq_codel | cubic | plot - cli.pcap - srv.pcap - teardown |

| bursty | 80 | fq_codel | prague | plot - cli.pcap - srv.pcap - teardown |

| Tunnel | RTT | qdisc | vs | L4S |

|---|---|---|---|---|

| wireguard | 20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| wireguard | 20ms | fq_codel | prague-vs-reno | plot - cli.pcap - srv.pcap - teardown |

| ipfou | 20ms | fq_codel | prague-vs-cubic | plot - cli.pcap - srv.pcap - teardown |

| ipfou | 20ms | fq_codel | prague-vs-reno | plot - cli.pcap - srv.pcap - teardown |

| RTT | qdisc | vs | ECN | L4S |

|---|---|---|---|---|

| 20ms | fq_codel_1q_ | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_ | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_ | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_ | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_1ms_20ms_ | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_1ms_20ms_ | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_1ms_20ms_ | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | fq_codel_1q_1ms_20ms_ | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie_100p_5ms_ | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie_100p_5ms_ | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie_100p_5ms_ | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | pie_100p_5ms_ | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_150000b_ | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_150000b_ | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_150000b_ | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_150000b_ | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_400000b_ | prague-vs-cubic | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_400000b_ | prague-vs-cubic | noecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_400000b_ | prague-vs-reno | ecn | plot - cli.pcap - srv.pcap - teardown |

| 20ms | red_400000b_ | prague-vs-reno | noecn | plot - cli.pcap - srv.pcap - teardown |

RTT fairness competition. DSS is the delivery rate (throughput) at steady state, in Mbit/s, taken as the mean over a 60-second window ending 2 seconds before the end of the test.
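The Jain's column in the table that follows is consistent with Jain's fairness index computed over the two steady-state delivery rates. The sketch below assumes the standard definition, (sum of rates)^2 / (n * sum of squared rates). The function name `jain_index` is illustrative and not taken from the test harness.

```python
def jain_index(rates):
    """Jain's fairness index: (sum x)^2 / (n * sum x^2).

    Assumes the standard definition; `rates` is any iterable of
    non-negative throughputs in the same unit.
    """
    rates = list(rates)
    if not rates:
        raise ValueError("need at least one rate")
    total = sum(rates)
    squares = sum(r * r for r in rates)
    if squares == 0:
        raise ValueError("at least one rate must be non-zero")
    return total * total / (len(rates) * squares)


# First dualpi2 row at 50Mbit: cubic(10ms) 12.65 vs prague(10ms) 34.57
print(round(jain_index([12.65, 34.57]), 3))  # 0.823
```

With two flows the index ranges from 0.5 (one flow takes everything) to 1.0 (an equal split), so values near 0.5 in the table indicate that one flow is close to starved.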

| Rate | qdisc | CC1 (RTT) | DSS1 | CC2 (RTT) | DSS2 | Jain's index |

|---|---|---|---|---|---|---|

| 50Mbit | dualpi2 | cubic(10ms) | 12.65 | prague(10ms) | 34.57 | 0.823 |

| 50Mbit | dualpi2 | prague(10ms) | 34.09 | cubic(10ms) | 13.04 | 0.834 |

| 50Mbit | dualpi2 | cubic(10ms) | 23.11 | cubic(10ms) | 24.18 | 0.999 |

| 50Mbit | dualpi2 | prague(10ms) | 23.51 | prague(10ms) | 22.75 | 1.000 |

| 50Mbit | dualpi2 | prague(20ms) | 28.40 | cubic(10ms) | 18.84 | 0.961 |

| 50Mbit | dualpi2 | cubic(20ms) | 9.21 | prague(10ms) | 37.86 | 0.730 |

| 50Mbit | dualpi2 | prague(20ms) | 15.84 | prague(10ms) | 30.77 | 0.907 |

| 50Mbit | dualpi2 | cubic(20ms) | 20.61 | cubic(10ms) | 26.76 | 0.983 |

| 50Mbit | dualpi2 | cubic(20ms) | 14.94 | prague(20ms) | 32.39 | 0.880 |

| 50Mbit | dualpi2 | prague(20ms) | 23.50 | prague(20ms) | 23.36 | 1.000 |

| 50Mbit | dualpi2 | prague(20ms) | 31.85 | cubic(20ms) | 15.51 | 0.894 |

| 50Mbit | dualpi2 | cubic(20ms) | 24.80 | cubic(20ms) | 22.67 | 0.998 |

| 50Mbit | dualpi2 | cubic(80ms) | 6.43 | prague(20ms) | 40.69 | 0.654 |

| 50Mbit | dualpi2 | prague(80ms) | 5.80 | prague(20ms) | 41.48 | 0.637 |

| 50Mbit | dualpi2 | prague(80ms) | 22.43 | cubic(20ms) | 24.89 | 0.997 |

| 50Mbit | dualpi2 | cubic(80ms) | 14.75 | cubic(20ms) | 32.73 | 0.875 |

| 50Mbit | dualpi2 | cubic(80ms) | 17.85 | prague(80ms) | 29.58 | 0.942 |

| 50Mbit | dualpi2 | prague(80ms) | 29.92 | cubic(80ms) | 17.48 | 0.936 |

| 50Mbit | dualpi2 | cubic(80ms) | 23.88 | cubic(80ms) | 23.47 | 1.000 |

| 50Mbit | dualpi2 | prague(80ms) | 23.45 | prague(80ms) | 23.60 | 1.000 |

| 50Mbit | dualpi2 | cubic(160ms) | 4.87 | prague(10ms) | 41.67 | 0.615 |

| 50Mbit | dualpi2 | prague(160ms) | 1.56 | prague(10ms) | 45.46 | 0.534 |

| 50Mbit | dualpi2 | prague(160ms) | 10.13 | cubic(10ms) | 37.28 | 0.753 |

| 50Mbit | dualpi2 | cubic(160ms) | 10.74 | cubic(10ms) | 36.50 | 0.771 |

| 50Mbit | pfifo(1000) | cubic(10ms) | 22.22 | cubic(10ms) | 25.26 | 0.996 |

| 50Mbit | pfifo(1000) | prague(10ms) | 9.78 | cubic(10ms) | 37.77 | 0.743 |

| 50Mbit | pfifo(1000) | cubic(10ms) | 39.98 | prague(10ms) | 7.55 | 0.682 |

| 50Mbit | pfifo(1000) | prague(10ms) | 26.04 | prague(10ms) | 21.69 | 0.992 |

| 50Mbit | pfifo(1000) | prague(20ms) | 6.32 | cubic(10ms) | 41.25 | 0.650 |

| 50Mbit | pfifo(1000) | cubic(20ms) | 18.89 | cubic(10ms) | 28.60 | 0.960 |

| 50Mbit | pfifo(1000) | cubic(20ms) | 39.98 | prague(10ms) | 7.55 | 0.682 |

| 50Mbit | pfifo(1000) | prague(20ms) | 21.75 | prague(10ms) | 25.92 | 0.992 |

| 50Mbit | pfifo(1000) | cubic(20ms) | 23.46 | cubic(20ms) | 24.07 | 1.000 |

| 50Mbit | pfifo(1000) | prague(20ms) | 24.06 | prague(20ms) | 23.65 | 1.000 |

| 50Mbit | pfifo(1000) | prague(20ms) | 5.88 | cubic(20ms) | 41.63 | 0.638 |

| 50Mbit | pfifo(1000) | cubic(20ms) | 35.44 | prague(20ms) | 12.24 | 0.809 |

| 50Mbit | pfifo(1000) | prague(80ms) | 6.96 | cubic(20ms) | 40.45 | 0.667 |

| 50Mbit | pfifo(1000) | cubic(80ms) | 22.32 | cubic(20ms) | 25.14 | 0.996 |

| 50Mbit | pfifo(1000) | cubic(80ms) | 42.33 | prague(20ms) | 5.14 | 0.620 |

| 50Mbit | pfifo(1000) | prague(80ms) | 14.50 | prague(20ms) | 33.16 | 0.867 |

| 50Mbit | pfifo(1000) | prague(80ms) | 3.95 | cubic(80ms) | 43.59 | 0.590 |

| 50Mbit | pfifo(1000) | cubic(80ms) | 43.71 | prague(80ms) | 3.74 | 0.585 |

| 50Mbit | pfifo(1000) | prague(80ms) | 24.38 | prague(80ms) | 23.36 | 1.000 |

| 50Mbit | pfifo(1000) | cubic(80ms) | 24.14 | cubic(80ms) | 23.27 | 1.000 |

| 50Mbit | pfifo(1000) | cubic(160ms) | 35.71 | prague(10ms) | 11.80 | 0.798 |

| 50Mbit | pfifo(1000) | prague(160ms) | 10.35 | prague(10ms) | 37.36 | 0.757 |

| 50Mbit | pfifo(1000) | prague(160ms) | 4.54 | cubic(10ms) | 43.00 | 0.605 |

| 50Mbit | pfifo(1000) | cubic(160ms) | 17.22 | cubic(10ms) | 30.27 | 0.930 |

| 50Mbit | cnq_codel_af | cubic(10ms) | 23.34 | prague(10ms) | 23.84 | 1.000 |

| 50Mbit | cnq_codel_af | prague(10ms) | 23.87 | cubic(10ms) | 23.34 | 1.000 |

| 50Mbit | cnq_codel_af | prague(10ms) | 23.64 | prague(10ms) | 23.64 | 1.000 |

| 50Mbit | cnq_codel_af | cubic(10ms) | 23.30 | cubic(10ms) | 23.81 | 1.000 |

| 50Mbit | cnq_codel_af | prague(20ms) | 23.51 | prague(10ms) | 23.86 | 1.000 |

| 50Mbit | cnq_codel_af | cubic(20ms) | 22.57 | cubic(10ms) | 24.58 | 0.998 |

| 50Mbit | cnq_codel_af | prague(20ms) | 23.87 | cubic(10ms) | 23.36 | 1.000 |

| 50Mbit | cnq_codel_af | cubic(20ms) | 22.42 | prague(10ms) | 24.87 | 0.997 |

| 50Mbit | cnq_codel_af | prague(20ms) | 23.74 | prague(20ms) | 23.75 | 1.000 |

| 50Mbit | cnq_codel_af | cubic(20ms) | 22.66 | prague(20ms) | 24.68 | 0.998 |

| 50Mbit | cnq_codel_af | cubic(20ms) | 24.00 | cubic(20ms) | 23.37 | 1.000 |

| 50Mbit | cnq_codel_af | prague(20ms) | 24.80 | cubic(20ms) | 22.60 | 0.998 |

| 50Mbit | cnq_codel_af | cubic(80ms) | 22.04 | prague(20ms) | 25.43 | 0.995 |

| 50Mbit | cnq_codel_af | cubic(80ms) | 21.36 | cubic(20ms) | 25.43 | 0.992 |

| 50Mbit | cnq_codel_af | prague(80ms) | 24.09 | prague(20ms) | 23.44 | 1.000 |

| 50Mbit | cnq_codel_af | prague(80ms) | 27.53 | cubic(20ms) | 19.87 | 0.975 |

| 50Mbit | cnq_codel_af | cubic(80ms) | 23.63 | cubic(80ms) | 23.03 | 1.000 |

| 50Mbit | cnq_codel_af | prague(80ms) | 27.10 | cubic(80ms) | 20.13 | 0.979 |

| 50Mbit | cnq_codel_af | cubic(80ms) | 20.70 | prague(80ms) | 26.57 | 0.985 |

| 50Mbit | cnq_codel_af | prague(80ms) | 23.87 | prague(80ms) | 23.83 | 1.000 |

| 50Mbit | cnq_codel_af | cubic(160ms) | 20.80 | prague(10ms) | 26.18 | 0.987 |

| 50Mbit | cnq_codel_af | cubic(160ms) | 18.20 | cubic(10ms) | 28.99 | 0.950 |

| 50Mbit | cnq_codel_af | prague(160ms) | 24.83 | cubic(10ms) | 22.49 | 0.998 |

| 50Mbit | cnq_codel_af | prague(160ms) | 23.57 | prague(10ms) | 23.88 | 1.000 |

| 50Mbit | fq_codel | cubic(10ms) | 23.49 | prague(10ms) | 23.82 | 1.000 |

| 50Mbit | fq_codel | prague(10ms) | 23.83 | cubic(10ms) | 23.53 | 1.000 |

| 50Mbit | fq_codel | cubic(10ms) | 23.46 | cubic(10ms) | 23.56 | 1.000 |

| 50Mbit | fq_codel | prague(10ms) | 23.82 | prague(10ms) | 23.81 | 1.000 |

| 50Mbit | fq_codel | prague(20ms) | 23.84 | cubic(10ms) | 23.51 | 1.000 |

| 50Mbit | fq_codel | cubic(20ms) | 23.32 | prague(10ms) | 24.02 | 1.000 |

| 50Mbit | fq_codel | cubic(20ms) | 23.30 | cubic(10ms) | 23.72 | 1.000 |

| 50Mbit | fq_codel | prague(20ms) | 23.84 | prague(10ms) | 23.81 | 1.000 |

| 50Mbit | fq_codel | cubic(20ms) | 23.58 | cubic(20ms) | 23.59 | 1.000 |

| 50Mbit | fq_codel | prague(20ms) | 23.84 | prague(20ms) | 23.84 | 1.000 |

| 50Mbit | fq_codel | cubic(20ms) | 23.31 | prague(20ms) | 24.02 | 1.000 |

| 50Mbit | fq_codel | prague(20ms) | 24.03 | cubic(20ms) | 23.32 | 1.000 |

| 50Mbit | fq_codel | prague(80ms) | 23.86 | prague(20ms) | 23.83 | 1.000 |

| 50Mbit | fq_codel | cubic(80ms) | 22.02 | prague(20ms) | 24.81 | 0.996 |

| 50Mbit | fq_codel | prague(80ms) | 23.97 | cubic(20ms) | 23.31 | 1.000 |

| 50Mbit | fq_codel | cubic(80ms) | 22.24 | cubic(20ms) | 24.54 | 0.998 |

| 50Mbit | fq_codel | prague(80ms) | 25.29 | cubic(80ms) | 22.03 | 0.995 |

| 50Mbit | fq_codel | prague(80ms) | 23.86 | prague(80ms) | 23.86 | 1.000 |

| 50Mbit | fq_codel | cubic(80ms) | 22.91 | cubic(80ms) | 23.35 | 1.000 |

| 50Mbit | fq_codel | cubic(80ms) | 22.03 | prague(80ms) | 25.27 | 0.995 |

| 50Mbit | fq_codel | cubic(160ms) | 21.35 | prague(10ms) | 25.55 | 0.992 |

| 50Mbit | fq_codel | prague(160ms) | 23.87 | cubic(10ms) | 23.49 | 1.000 |

| 50Mbit | fq_codel | cubic(160ms) | 21.32 | cubic(10ms) | 25.36 | 0.993 |

| 50Mbit | fq_codel | prague(160ms) | 23.87 | prague(10ms) | 23.83 | 1.000 |

| 10Mbit | dualpi2 | cubic(10ms) | 2.93 | prague(10ms) | 6.22 | 0.886 |

| 10Mbit | dualpi2 | prague(10ms) | 4.31 | prague(10ms) | 4.27 | 1.000 |

| 10Mbit | dualpi2 | prague(10ms) | 6.25 | cubic(10ms) | 2.93 | 0.884 |

| 10Mbit | dualpi2 | cubic(10ms) | 4.82 | cubic(10ms) | 4.47 | 0.999 |

| 10Mbit | dualpi2 | cubic(20ms) | 3.52 | cubic(10ms) | 5.75 | 0.945 |

| 10Mbit | dualpi2 | prague(20ms) | 2.48 | prague(10ms) | 6.17 | 0.846 |

| 10Mbit | dualpi2 | cubic(20ms) | 2.28 | prague(10ms) | 6.87 | 0.799 |

| 10Mbit | dualpi2 | prague(20ms) | 5.00 | cubic(10ms) | 4.22 | 0.993 |

| 10Mbit | dualpi2 | prague(20ms) | 4.33 | prague(20ms) | 4.43 | 1.000 |

| 10Mbit | dualpi2 | cubic(20ms) | 4.58 | cubic(20ms) | 4.75 | 1.000 |

| 10Mbit | dualpi2 | cubic(20ms) | 3.28 | prague(20ms) | 5.93 | 0.924 |

| 10Mbit | dualpi2 | prague(20ms) | 6.01 | cubic(20ms) | 3.23 | 0.917 |

| 10Mbit | dualpi2 | cubic(80ms) | 2.71 | cubic(20ms) | 6.56 | 0.853 |

| 10Mbit | dualpi2 | prague(80ms) | 3.64 | cubic(20ms) | 5.67 | 0.955 |

| 10Mbit | dualpi2 | cubic(80ms) | 1.22 | prague(20ms) | 7.88 | 0.651 |

| 10Mbit | dualpi2 | prague(80ms) | 1.61 | prague(20ms) | 7.36 | 0.709 |

| 10Mbit | dualpi2 | cubic(80ms) | 4.26 | cubic(80ms) | 4.97 | 0.994 |

| 10Mbit | dualpi2 | cubic(80ms) | 3.48 | prague(80ms) | 5.90 | 0.938 |

| 10Mbit | dualpi2 | prague(80ms) | 4.57 | prague(80ms) | 4.70 | 1.000 |

| 10Mbit | dualpi2 | prague(80ms) | 5.94 | cubic(80ms) | 3.42 | 0.933 |

| 10Mbit | dualpi2 | cubic(160ms) | 1.21 | cubic(10ms) | 8.04 | 0.647 |

| 10Mbit | dualpi2 | cubic(160ms) | 1.18 | prague(10ms) | 7.89 | 0.646 |

| 10Mbit | dualpi2 | prague(160ms) | 1.22 | cubic(10ms) | 8.10 | 0.648 |

| 10Mbit | dualpi2 | prague(160ms) | 0.20 | prague(10ms) | 8.66 | 0.523 |

| 10Mbit | pfifo(200) | cubic(10ms) | 6.51 | prague(10ms) | 2.92 | 0.874 |

| 10Mbit | pfifo(200) | prague(10ms) | 5.09 | prague(10ms) | 4.38 | 0.994 |

| 10Mbit | pfifo(200) | cubic(10ms) | 4.56 | cubic(10ms) | 4.87 | 0.999 |

| 10Mbit | pfifo(200) | prague(10ms) | 2.48 | cubic(10ms) | 6.97 | 0.816 |

| 10Mbit | pfifo(200) | prague(20ms) | 3.76 | prague(10ms) | 5.71 | 0.959 |

| 10Mbit | pfifo(200) | cubic(20ms) | 3.10 | cubic(10ms) | 6.34 | 0.895 |

| 10Mbit | pfifo(200) | cubic(20ms) | 6.77 | prague(10ms) | 2.64 | 0.838 |

| 10Mbit | pfifo(200) | prague(20ms) | 3.40 | cubic(10ms) | 6.06 | 0.927 |

| 10Mbit | pfifo(200) | cubic(20ms) | 5.50 | prague(20ms) | 3.95 | 0.974 |

| 10Mbit | pfifo(200) | prague(20ms) | 4.36 | prague(20ms) | 5.13 | 0.993 |

| 10Mbit | pfifo(200) | cubic(20ms) | 4.65 | cubic(20ms) | 4.80 | 1.000 |

| 10Mbit | pfifo(200) | prague(20ms) | 2.96 | cubic(20ms) | 6.48 | 0.878 |

| 10Mbit | pfifo(200) | prague(80ms) | 4.13 | prague(20ms) | 5.34 | 0.984 |

| 10Mbit | pfifo(200) | prague(80ms) | 3.05 | cubic(20ms) | 6.39 | 0.889 |

| 10Mbit | pfifo(200) | cubic(80ms) | 5.78 | cubic(20ms) | 3.65 | 0.951 |

| 10Mbit | pfifo(200) | cubic(80ms) | 7.90 | prague(20ms) | 1.53 | 0.687 |

| 10Mbit | pfifo(200) | prague(80ms) | 1.79 | cubic(80ms) | 7.64 | 0.722 |

| 10Mbit | pfifo(200) | prague(80ms) | 4.72 | prague(80ms) | 4.77 | 1.000 |

| 10Mbit | pfifo(200) | cubic(80ms) | 7.76 | prague(80ms) | 1.69 | 0.708 |

| 10Mbit | pfifo(200) | cubic(80ms) | 4.82 | cubic(80ms) | 4.61 | 1.000 |

| 10Mbit | pfifo(200) | cubic(160ms) | 4.80 | cubic(10ms) | 4.63 | 1.000 |

| 10Mbit | pfifo(200) | prague(160ms) | 1.33 | cubic(10ms) | 8.13 | 0.659 |

| 10Mbit | pfifo(200) | prague(160ms) | 2.40 | prague(10ms) | 7.08 | 0.804 |

| 10Mbit | pfifo(200) | cubic(160ms) | 6.58 | prague(10ms) | 2.85 | 0.864 |

| 10Mbit | cnq_codel_af | prague(10ms) | 4.44 | prague(10ms) | 4.41 | 1.000 |

| 10Mbit | cnq_codel_af | cubic(10ms) | 4.53 | cubic(10ms) | 4.53 | 1.000 |

| 10Mbit | cnq_codel_af | prague(10ms) | 4.46 | cubic(10ms) | 4.59 | 1.000 |

| 10Mbit | cnq_codel_af | cubic(10ms) | 4.53 | prague(10ms) | 4.52 | 1.000 |

| 10Mbit | cnq_codel_af | prague(20ms) | 4.29 | prague(10ms) | 4.64 | 0.998 |

| 10Mbit | cnq_codel_af | cubic(20ms) | 4.21 | cubic(10ms) | 4.85 | 0.995 |

| 10Mbit | cnq_codel_af | cubic(20ms) | 4.29 | prague(10ms) | 4.71 | 0.998 |

| 10Mbit | cnq_codel_af | prague(20ms) | 4.47 | cubic(10ms) | 4.65 | 1.000 |

| 10Mbit | cnq_codel_af | cubic(20ms) | 4.68 | prague(20ms) | 4.47 | 0.999 |

| 10Mbit | cnq_codel_af | prague(20ms) | 4.55 | prague(20ms) | 4.52 | 1.000 |