litehub for onnxruntime/ncnn/mnn/tnn. This library integrates some interesting models, which I used as practice cases while learning mobile inference engines.

Most of the models come from the ONNX Model Zoo, PyTorch Hub and other open source projects. All models used will be cited. Many thanks to these contributors. What you see is what you get, and hopefully you get something out of it.

- Mac OS.

Install the `opencv` and `onnxruntime` libraries using Homebrew:

```shell
brew update
brew install opencv
brew install onnxruntime
```

Alternatively, you can download the prebuilt dependencies from this repo. See third_party and build-docs [1].

- Linux & Windows.
  - TODO

- Inference Engine Plans:
  - Doing:
    - onnxruntime c++
  - TODO:
    - NCNN
    - MNN
    - TNN

Disclaimer: the following test pictures were found through Internet searches. If any of them affects you, please contact me and I will remove it immediately.

More examples can be found in ortcv-examples.

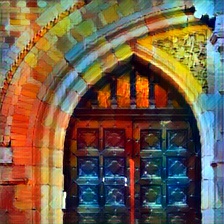

3.1.1 Style transfer using FastStyleTransfer. Download the model from Model-Zoo [2].

#include <iostream>

#include <vector>

#include "ort/cv/fast_style_transfer.h"

#include "ort/core/ort_utils.h"

static void test_ortcv_fast_style_transfer()

{

std::string candy_onnx_path = "../../../hub/onnx/cv/style-candy-8.onnx";

std::string mosaic_onnx_path = "../../../hub/onnx/cv/style-mosaic-8.onnx";

/**skip other onnx models ... **/

std::string test_img_path = "../../../examples/ort/resources/test_ortcv_fast_style_transfer.jpg";

std::string save_candy_path = "../../../logs/test_ortcv_fast_style_transfer_candy.jpg";

std::string save_mosaic_path = "../../../logs/test_ortcv_fast_style_transfer_mosaic.jpg";

/**skip other saved images ... **/

ortcv::FastStyleTransfer *candy_fast_style_transfer = new ortcv::FastStyleTransfer(candy_onnx_path);

ortcv::FastStyleTransfer *mosaic_fast_style_transfer = new ortcv::FastStyleTransfer(mosaic_onnx_path);

ortcv::types::StyleContent candy_style_content, mosaic_style_content; /** skip other contents ... **/

cv::Mat img_bgr = cv::imread(test_img_path);

candy_fast_style_transfer->detect(img_bgr, candy_style_content);

mosaic_fast_style_transfer->detect(img_bgr, mosaic_style_content); /** skip other transferring ... **/

if (candy_style_content.flag) cv::imwrite(save_candy_path, candy_style_content.mat);

if (mosaic_style_content.flag) cv::imwrite(save_mosaic_path, mosaic_style_content.mat);

/** ... **/

std::cout << "Style Transfer Done." << std::endl;

delete candy_fast_style_transfer; delete mosaic_fast_style_transfer;

/** ... **/

}

int main(__unused int argc, __unused char *argv[])

{

test_ortcv_fast_style_transfer();

return 0;

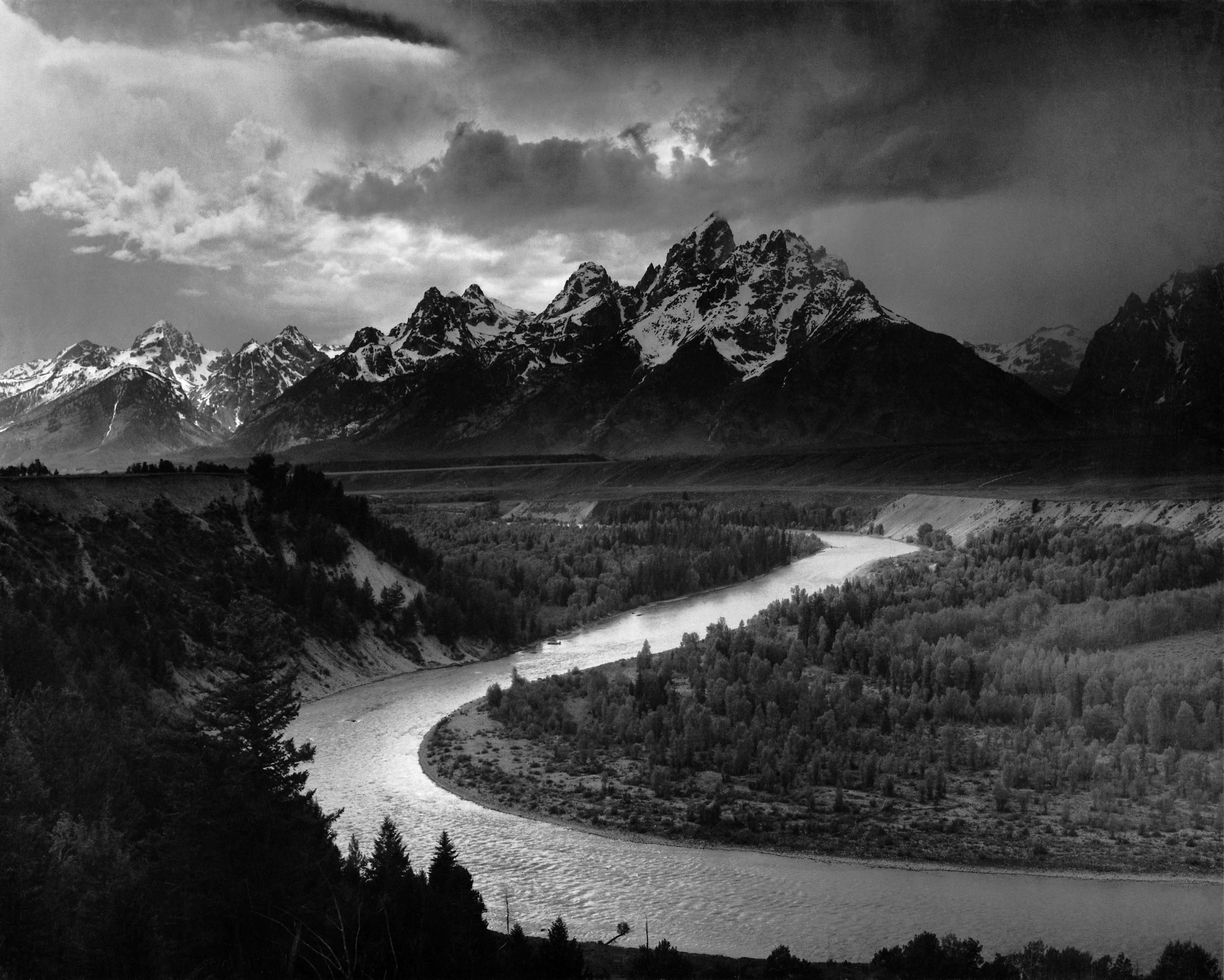

}The output is:
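For reference, the ONNX Model Zoo's fast neural style models return a 1×3×H×W float tensor, and the Model Zoo's post-processing clips it to [0, 255] and transposes it to H×W×3 before saving. Below is a minimal stdlib-only sketch of that step. The helper name `chw_to_hwc_bgr_u8` is hypothetical (it is not part of litehub's API), and it assumes the tensor channels are in RGB order; it flips them to BGR because `cv::imwrite` expects BGR.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: converts a planar (CHW) RGB float tensor, as produced
// by a style-transfer model, into an interleaved (HWC) BGR uint8 buffer.
// Values are clipped to [0, 255] and rounded to the nearest integer.
std::vector<std::uint8_t> chw_to_hwc_bgr_u8(const std::vector<float>& chw,
                                            std::size_t height, std::size_t width) {
  assert(chw.size() == 3 * height * width);
  const std::size_t plane = height * width;
  std::vector<std::uint8_t> hwc(3 * plane);
  for (std::size_t i = 0; i < plane; ++i) {
    for (std::size_t c = 0; c < 3; ++c) {
      // Clip to the valid pixel range, as the Model Zoo post-processing does.
      const float v = std::clamp(chw[c * plane + i], 0.0f, 255.0f);
      // Source channel c (R=0, G=1, B=2) goes to BGR slot 2 - c.
      hwc[i * 3 + (2 - c)] = static_cast<std::uint8_t>(std::lround(v));
    }
  }
  return hwc;
}
```

With OpenCV available, the returned buffer can be wrapped as `cv::Mat(height, width, CV_8UC3, buffer.data())` and passed to `cv::imwrite`. Note that this sketch rounds, whereas a plain `astype("uint8")` in NumPy truncates.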

3.1.2 Colorization using colorization. Download the model from Model-Zoo [2].

#include <iostream>

#include <vector>

#include "ort/cv/colorizer.h"

#include "ort/core/ort_utils.h"

static void test_ortcv_colorizer()

{

std::string eccv16_onnx_path = "../../../hub/onnx/cv/eccv16-colorizer.onnx";

std::string siggraph17_onnx_path = "../../../hub/onnx/cv/siggraph17-colorizer.onnx";

std::string test_img_path1 = "../../../examples/ort/resources/test_ortcv_colorizer_1.jpg";

std::string test_img_path2 = "../../../examples/ort/resources/test_ortcv_colorizer_2.jpg";

std::string test_img_path3 = "../../../examples/ort/resources/test_ortcv_colorizer_3.jpg";

std::string save_eccv_img_path1 = "../../../logs/test_ortcv_eccv16_colorizer_1.jpg";

std::string save_eccv_img_path2 = "../../../logs/test_ortcv_eccv16_colorizer_2.jpg";

std::string save_eccv_img_path3 = "../../../logs/test_ortcv_eccv16_colorizer_3.jpg";

std::string save_siggraph_img_path1 = "../../../logs/test_ortcv_siggraph17_colorizer_1.jpg";

std::string save_siggraph_img_path2 = "../../../logs/test_ortcv_siggraph17_colorizer_2.jpg";

std::string save_siggraph_img_path3 = "../../../logs/test_ortcv_siggraph17_colorizer_3.jpg";

ortcv::Colorizer *eccv16_colorizer = new ortcv::Colorizer(eccv16_onnx_path);

ortcv::Colorizer *siggraph17_colorizer = new ortcv::Colorizer(siggraph17_onnx_path);

cv::Mat img_bgr1 = cv::imread(test_img_path1);

cv::Mat img_bgr2 = cv::imread(test_img_path2);

cv::Mat img_bgr3 = cv::imread(test_img_path3);

ortcv::types::ColorizeContent eccv16_colorize_content1;

ortcv::types::ColorizeContent eccv16_colorize_content2;

ortcv::types::ColorizeContent eccv16_colorize_content3;

ortcv::types::ColorizeContent siggraph17_colorize_content1;

ortcv::types::ColorizeContent siggraph17_colorize_content2;

ortcv::types::ColorizeContent siggraph17_colorize_content3;

eccv16_colorizer->detect(img_bgr1, eccv16_colorize_content1);

eccv16_colorizer->detect(img_bgr2, eccv16_colorize_content2);

eccv16_colorizer->detect(img_bgr3, eccv16_colorize_content3);

siggraph17_colorizer->detect(img_bgr1, siggraph17_colorize_content1);

siggraph17_colorizer->detect(img_bgr2, siggraph17_colorize_content2);

siggraph17_colorizer->detect(img_bgr3, siggraph17_colorize_content3);

if (eccv16_colorize_content1.flag) cv::imwrite(save_eccv_img_path1, eccv16_colorize_content1.mat);

if (eccv16_colorize_content2.flag) cv::imwrite(save_eccv_img_path2, eccv16_colorize_content2.mat);

if (eccv16_colorize_content3.flag) cv::imwrite(save_eccv_img_path3, eccv16_colorize_content3.mat);

if (siggraph17_colorize_content1.flag) cv::imwrite(save_siggraph_img_path1, siggraph17_colorize_content1.mat);

if (siggraph17_colorize_content2.flag) cv::imwrite(save_siggraph_img_path2, siggraph17_colorize_content2.mat);

if (siggraph17_colorize_content3.flag) cv::imwrite(save_siggraph_img_path3, siggraph17_colorize_content3.mat);

std::cout << "Colorization Done." << std::endl;

delete eccv16_colorizer;

delete siggraph17_colorizer;

}

int main(__unused int argc, __unused char *argv[])

{

test_ortcv_colorizer();

return 0;

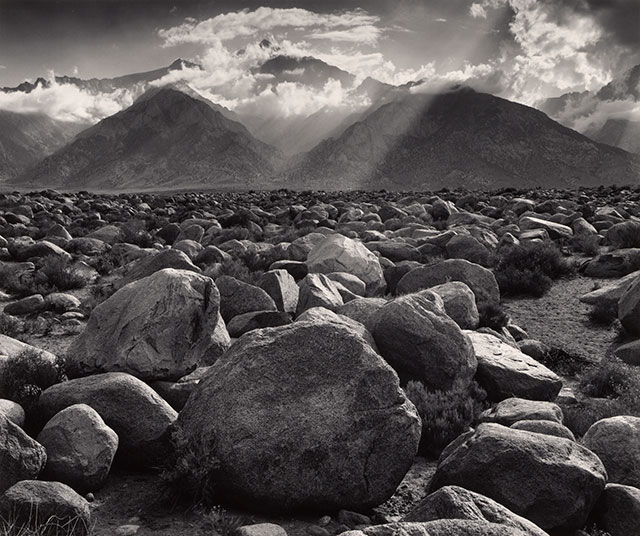

}The output is:
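The colorization models referenced here (richzhang/colorization) predict the a and b channels of CIE Lab from the L channel; the original L is then recombined with the predicted ab and converted back to RGB. The sketch below shows only that last conversion for a single pixel, assuming the D65 white point and standard sRGB gamma. `lab_to_srgb` is a hypothetical helper, and litehub itself may well delegate this step to OpenCV's `cv::cvtColor`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: converts one CIE L*a*b* pixel (D65 white point) to
// 8-bit sRGB. L is expected in [0, 100].
std::array<std::uint8_t, 3> lab_to_srgb(double L, double a, double b) {
  assert(L >= 0.0 && L <= 100.0);
  // Lab -> XYZ, relative to the D65 reference white.
  const double fy = (L + 16.0) / 116.0;
  const double fx = fy + a / 500.0;
  const double fz = fy - b / 200.0;
  const double delta = 6.0 / 29.0;
  auto f_inv = [delta](double t) {
    return t > delta ? t * t * t : 3.0 * delta * delta * (t - 4.0 / 29.0);
  };
  const double X = 0.95047 * f_inv(fx);
  const double Y = 1.00000 * f_inv(fy);
  const double Z = 1.08883 * f_inv(fz);
  // XYZ -> linear sRGB.
  const double lin[3] = {
       3.2404542 * X - 1.5371385 * Y - 0.4985314 * Z,
      -0.9692660 * X + 1.8760108 * Y + 0.0415560 * Z,
       0.0556434 * X - 0.2040259 * Y + 1.0572252 * Z};
  std::array<std::uint8_t, 3> rgb{};
  for (int i = 0; i < 3; ++i) {
    // Clip to the displayable range, then apply the sRGB transfer curve.
    double c = std::clamp(lin[i], 0.0, 1.0);
    c = c <= 0.0031308 ? 12.92 * c : 1.055 * std::pow(c, 1.0 / 2.4) - 0.055;
    rgb[i] = static_cast<std::uint8_t>(std::lround(c * 255.0));
  }
  return rgb;  // in R, G, B order
}
```

For example, `lab_to_srgb(100.0, 0.0, 0.0)` gives white and `lab_to_srgb(0.0, 0.0, 0.0)` gives black. A real pipeline would run this per pixel after upsampling the predicted ab map to the original resolution.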

3.1.3 Age estimation using AgeGoogleNet.

```cpp
#include <iostream>
#include <string>
#include <vector>
#include "ort/cv/age_googlenet.h"
#include "ort/core/ort_utils.h"

static void test_ortcv_age_googlenet() {
  std::string onnx_path = "../../../hub/onnx/cv/age_googlenet.onnx";
  std::string test_img_path = "../../../examples/ort/resources/test_ortcv_age_googlenet.jpg";
  std::string save_img_path = "../../../logs/test_ortcv_age_googlenet.jpg";

  ortcv::AgeGoogleNet *age_googlenet = new ortcv::AgeGoogleNet(onnx_path);
  ortcv::types::Age age;

  cv::Mat img_bgr = cv::imread(test_img_path);
  age_googlenet->detect(img_bgr, age);

  // Draw the estimated age onto the image, then save it.
  ortcv::utils::draw_age_inplace(img_bgr, age);
  cv::imwrite(save_img_path, img_bgr);

  std::cout << "Detected Age: " << age.age << std::endl;

  delete age_googlenet;
}

int main(__unused int argc, __unused char *argv[]) {
  test_ortcv_age_googlenet();
  return 0;
}
```

The output is:

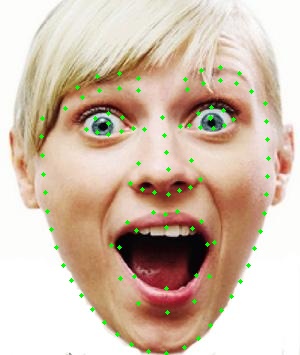

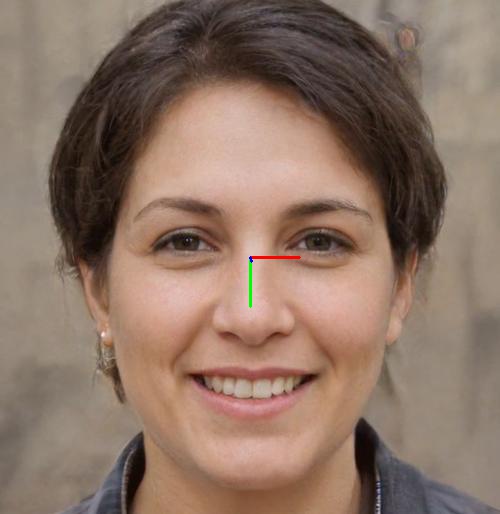
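The age_gender GoogleNet model in the ONNX Model Zoo classifies a face into eight age intervals. The sketch below shows one way to turn its eight scores into an interval label and a confidence using a numerically stable softmax followed by an argmax. The interval table follows the Model Zoo's age list; `classify_age` and `AgeResult` are hypothetical names and do not mirror `ortcv::types::Age`. If the exported model already ends in a softmax, the argmax is unaffected but the confidence computed here would differ, so check the model's output first.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>

// Hypothetical result type for this sketch (not ortcv::types::Age).
struct AgeResult {
  std::string interval;  // e.g. "(25-32)"
  float confidence;      // softmax probability of the chosen interval
};

// Age intervals of the ONNX Model Zoo age_gender classifiers.
constexpr std::array<const char*, 8> kAgeIntervals = {
    "(0-2)", "(4-6)", "(8-12)", "(15-20)",
    "(25-32)", "(38-43)", "(48-53)", "(60-100)"};

AgeResult classify_age(const std::array<float, 8>& logits) {
  // Subtract the maximum before exponentiating to avoid overflow.
  const float max_logit = *std::max_element(logits.begin(), logits.end());
  std::array<float, 8> probs{};
  float sum = 0.0f;
  for (std::size_t i = 0; i < logits.size(); ++i) {
    probs[i] = std::exp(logits[i] - max_logit);
    sum += probs[i];
  }
  assert(sum > 0.0f);
  // The first maximum wins on ties.
  const auto best = static_cast<std::size_t>(
      std::max_element(probs.begin(), probs.end()) - probs.begin());
  return {kAgeIntervals[best], probs[best] / sum};
}
```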

3.1.4 Facial landmark detection using PFLD.

```cpp
#include <iostream>
#include <string>
#include <vector>
#include "ort/cv/pfld.h"
#include "ort/core/ort_utils.h"

static void test_ortcv_pfld() {
  std::string onnx_path = "../../../hub/onnx/cv/pfld-106-v3.onnx";
  std::string test_img_path = "../../../examples/ort/resources/test_ortcv_pfld.jpg";
  std::string save_img_path = "../../../logs/test_ortcv_pfld.jpg";

  ortcv::PFLD *pfld = new ortcv::PFLD(onnx_path);
  ortcv::types::Landmarks landmarks;

  cv::Mat img_bgr = cv::imread(test_img_path);
  pfld->detect(img_bgr, landmarks);

  // Draw the detected landmarks onto the image, then save it.
  ortcv::utils::draw_landmarks_inplace(img_bgr, landmarks);
  cv::imwrite(save_img_path, img_bgr);

  std::cout << "Detected Landmarks Num: " << landmarks.points.size() << std::endl;

  delete pfld;
}

int main(__unused int argc, __unused char *argv[]) {
  test_ortcv_pfld();
  return 0;
}
```

The output is:

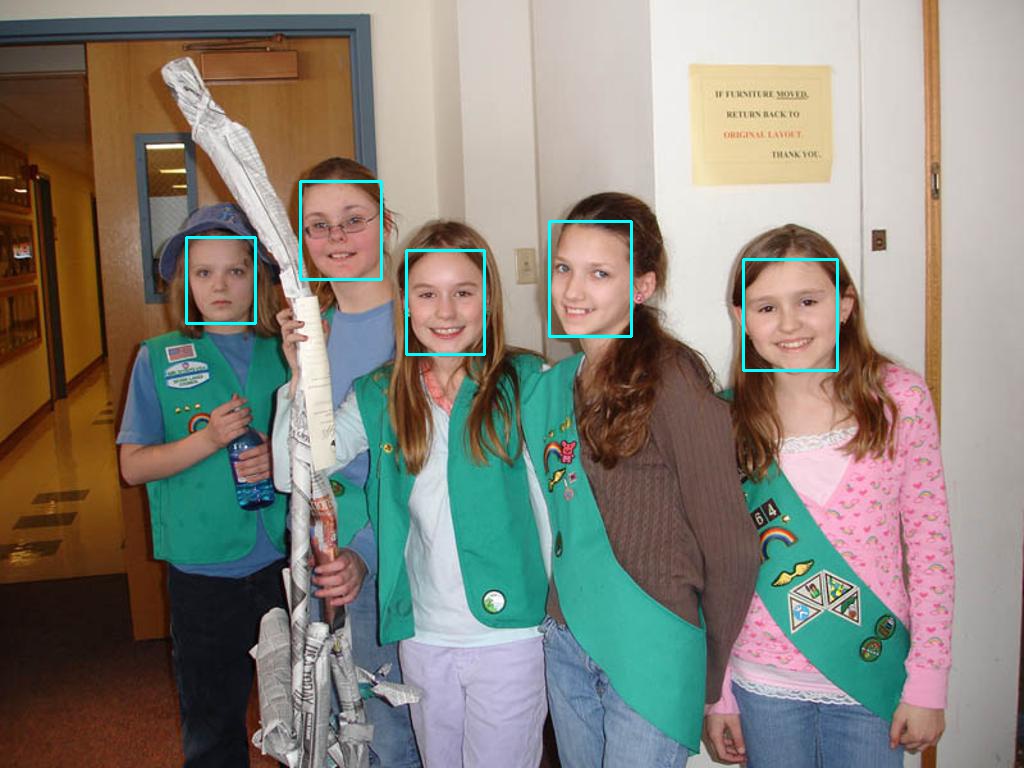
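PFLD-106 regresses 106 facial landmarks, i.e. 212 values. Assuming the values are x/y coordinates normalized to [0, 1] relative to the input face crop (my reading of the referenced pfld_106_face_landmarks project, not something litehub documents here), mapping them back to pixels is a per-point scale. `denormalize_landmarks` and `Point2f` are hypothetical names for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 2-D point type for this sketch.
struct Point2f {
  float x;
  float y;
};

// Converts a flat [x0, y0, x1, y1, ...] array of normalized landmark
// coordinates into pixel coordinates of a width x height image.
std::vector<Point2f> denormalize_landmarks(const std::vector<float>& flat,
                                           float width, float height) {
  assert(flat.size() % 2 == 0);
  std::vector<Point2f> points;
  points.reserve(flat.size() / 2);
  for (std::size_t i = 0; i + 1 < flat.size(); i += 2) {
    points.push_back({flat[i] * width, flat[i + 1] * height});
  }
  return points;
}
```

If the landmarks were predicted on a crop rather than the full image, the crop's top-left corner would also have to be added to each point.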

3.1.5 Face detection using UltraFace.

```cpp
#include <iostream>
#include <string>
#include <vector>
#include "ort/cv/ultraface.h"
#include "ort/core/ort_utils.h"

static void test_ortcv_ultraface() {
  std::string onnx_path = "../../../hub/onnx/cv/ultraface-rfb-640.onnx";
  std::string test_img_path = "../../../examples/ort/resources/test_ortcv_ultraface.jpg";
  std::string save_img_path = "../../../logs/test_ortcv_ultraface.jpg";

  ortcv::UltraFace *ultraface = new ortcv::UltraFace(onnx_path);
  std::vector<ortcv::types::Boxf> detected_boxes;

  cv::Mat img_bgr = cv::imread(test_img_path);
  ultraface->detect(img_bgr, detected_boxes);

  // Draw the detected face boxes onto the image, then save it.
  ortcv::utils::draw_boxes_inplace(img_bgr, detected_boxes);
  cv::imwrite(save_img_path, img_bgr);

  std::cout << "Detected Face Num: " << detected_boxes.size() << std::endl;

  delete ultraface;
}

int main(__unused int argc, __unused char *argv[]) {
  test_ortcv_ultraface();
  return 0;
}
```

The output is:
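UltraFace scores a large set of prior boxes, so overlapping detections of the same face have to be suppressed before drawing. Here is a minimal greedy (hard) non-maximum suppression sketch. The `Box` struct and `hard_nms` name are hypothetical, the IoU threshold is left to the caller rather than taken from litehub, and other variants such as soft or blending NMS also exist.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical box type for this sketch: corner coordinates plus a score.
struct Box {
  float x1, y1, x2, y2;
  float score;
};

// Intersection-over-union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
  const float ix = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
  const float iy = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
  const float inter = ix * iy;
  const float area_a = (a.x2 - a.x1) * (a.y2 - a.y1);
  const float area_b = (b.x2 - b.x1) * (b.y2 - b.y1);
  const float uni = area_a + area_b - inter;
  return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy hard NMS: keep the highest-scoring remaining box, drop every later
// box whose IoU with it exceeds iou_threshold, and repeat.
std::vector<Box> hard_nms(std::vector<Box> boxes, float iou_threshold) {
  assert(iou_threshold >= 0.0f && iou_threshold <= 1.0f);
  std::sort(boxes.begin(), boxes.end(),
            [](const Box& a, const Box& b) { return a.score > b.score; });
  std::vector<Box> kept;
  std::vector<bool> suppressed(boxes.size(), false);
  for (std::size_t i = 0; i < boxes.size(); ++i) {
    if (suppressed[i]) continue;
    kept.push_back(boxes[i]);
    for (std::size_t j = i + 1; j < boxes.size(); ++j) {
      if (!suppressed[j] && iou(boxes[i], boxes[j]) > iou_threshold) {
        suppressed[j] = true;
      }
    }
  }
  return kept;
}
```

For instance, two boxes `{0, 0, 10, 10}` and `{1, 1, 11, 11}` overlap with an IoU of about 0.68, so a threshold of 0.5 keeps only the higher-scoring one.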

3.1.6 Emotion detection using EmotionFerPlus. Download the model from Model-Zoo [2].

```cpp
#include <iostream>
#include <string>
#include <vector>
#include "ort/cv/emotion_ferplus.h"
#include "ort/core/ort_utils.h"

static void test_ortcv_emotion_ferplus() {
  std::string onnx_path = "../../../hub/onnx/cv/emotion-ferplus-8.onnx";
  std::string test_img_path = "../../../examples/ort/resources/test_ortcv_emotion_ferplus.jpg";
  std::string save_img_path = "../../../logs/test_ortcv_emotion_ferplus.jpg";

  ortcv::EmotionFerPlus *emotion_ferplus = new ortcv::EmotionFerPlus(onnx_path);
  ortcv::types::Emotions emotions;

  cv::Mat img_bgr = cv::imread(test_img_path);
  emotion_ferplus->detect(img_bgr, emotions);

  // Draw the predicted emotion onto the image, then save it.
  ortcv::utils::draw_emotion_inplace(img_bgr, emotions);
  cv::imwrite(save_img_path, img_bgr);

  std::cout << "Detected Emotion: " << emotions.text << std::endl;

  delete emotion_ferplus;
}

int main(__unused int argc, __unused char *argv[]) {
  test_ortcv_emotion_ferplus();
  return 0;
}
```

The output is:
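The FER+ model in the ONNX Model Zoo takes a 1×1×64×64 grayscale input with pixel values in [0, 255]. The sketch below shows that preprocessing on a raw interleaved BGR buffer. `to_ferplus_input` is a hypothetical helper, it uses BT.601 luma weights (the same weights OpenCV uses for BGR to gray), and it swaps the Model Zoo's antialiased resize for simple index-scaling resampling to stay short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: turns an interleaved BGR uint8 image (height x width)
// into the 64x64 single-channel float buffer (values in [0, 255]) that the
// FER+ model reads as a 1x1x64x64 tensor.
std::vector<float> to_ferplus_input(const std::vector<std::uint8_t>& bgr,
                                    std::size_t height, std::size_t width) {
  assert(height > 0 && width > 0 && bgr.size() == height * width * 3);
  constexpr std::size_t kSide = 64;
  std::vector<float> out(kSide * kSide);
  for (std::size_t oy = 0; oy < kSide; ++oy) {
    // Map the output row to a source row by scaling and flooring the index.
    const std::size_t sy = oy * height / kSide;
    for (std::size_t ox = 0; ox < kSide; ++ox) {
      const std::size_t sx = ox * width / kSide;
      const std::uint8_t* px = &bgr[(sy * width + sx) * 3];
      // px[0] = B, px[1] = G, px[2] = R; BT.601 luma.
      out[oy * kSide + ox] = 0.114f * px[0] + 0.587f * px[1] + 0.299f * px[2];
    }
  }
  return out;
}
```

The model then produces eight emotion scores; picking the label is a softmax plus argmax over them, as in the age example.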

- TODO.

- TODO.

- TODO.

The `*` symbol indicates that the C++ inference interface for the model has been implemented. More models may be added, but I can't guarantee that there will be more.

```cpp
namespace ortcv
{
  class FSANet; // [0] * reference: https://github.com/omasaht/headpose-fsanet-pytorch
  class PFLD; // [1] * reference: https://github.com/Hsintao/pfld_106_face_landmarks
  class UltraFace; // [2] * reference: https://github.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB
  class AgeGoogleNet; // [3] * reference: https://github.com/onnx/models/tree/master/vision/body_analysis/age_gender
  class GenderGoogleNet; // [4] * reference: https://github.com/onnx/models/tree/master/vision/body_analysis/age_gender
  class EmotionFerPlus; // [5] * reference: https://github.com/onnx/models/blob/master/vision/body_analysis/emotion_ferplus
  class VGG16Age; // [6] * reference: https://github.com/onnx/models/tree/master/vision/body_analysis/age_gender
  class VGG16Gender; // [7] * reference: https://github.com/onnx/models/tree/master/vision/body_analysis/age_gender
  class SSRNet; // [8] * reference: https://github.com/oukohou/SSR_Net_Pytorch
  class FastStyleTransfer; // [9] * reference: https://github.com/onnx/models/blob/master/vision/style_transfer/fast_neural_style
  class ArcFaceResNet; // [10] * reference: https://github.com/onnx/models/blob/master/vision/body_analysis/arcface
  class Colorizer; // [11] * reference: https://github.com/richzhang/colorization
  class SubPixelCNN; // [12] * reference: https://github.com/niazwazir/SUB_PIXEL_CNN
  class ChineseOCR; // [13] reference: https://github.com/DayBreak-u/chineseocr_lite
  class ChineseOCRAngleNet; // [14] reference: https://github.com/DayBreak-u/chineseocr_lite
  class ChineseOCRLiteLSTM; // [15] reference: https://github.com/DayBreak-u/chineseocr_lite
  class ChineseOCRDBNet; // [16] reference: https://github.com/DayBreak-u/chineseocr_lite
  class YoloV4; // [17] reference: https://github.com/AllanYiin/YoloV4
  class YoloV5; // [18] reference: https://github.com/ultralytics/yolov5
  class YoloV3; // [19] reference: https://github.com/onnx/models/blob/master/vision/object_detection_segmentation/yolov3
  class EfficientNetLite4; // [20] reference: https://github.com/onnx/models/blob/master/vision/classification/efficientnet-lite4
  class ShuffleNetV2; // [21] reference: https://github.com/onnx/models/blob/master/vision/classification/shufflenet
  class TinyYoloV3; // [22] reference: https://github.com/onnx/models/blob/master/vision/object_detection_segmentation/tiny-yolov3
  class SSD; // [23] reference: https://github.com/onnx/models/blob/master/vision/object_detection_segmentation/ssd
  class SSDMobileNetV1; // [24] reference: https://github.com/onnx/models/blob/master/vision/object_detection_segmentation/ssd-mobilenetv1
  class DeepLabV3ResNet101; // [25] reference: https://pytorch.org/hub/pytorch_vision_deeplabv3_resnet101/
  class DenseNet; // [26] reference: https://pytorch.org/hub/pytorch_vision_densenet/
  class FCNResNet101; // [27] reference: https://pytorch.org/hub/pytorch_vision_fcn_resnet101/
  class GhostNet; // [28] reference: https://pytorch.org/hub/pytorch_vision_ghostnet/
  class HdrDNet; // [29] reference: https://pytorch.org/hub/pytorch_vision_hardnet/
  class IBNNet; // [30] reference: https://pytorch.org/hub/pytorch_vision_ibnnet/
  class MobileNetV2; // [31] reference: https://pytorch.org/hub/pytorch_vision_mobilenet_v2/
  class ResNet; // [32] reference: https://pytorch.org/hub/pytorch_vision_resnet/
  class ResNeXt; // [33] reference: https://pytorch.org/hub/pytorch_vision_resnext/
  class UNet; // [34] reference: https://github.com/milesial/Pytorch-UNet
}
```

See ort-core for more details.

- TODO.

- TODO.

- TODO.

- Rapid implementation of your inference using BasicOrtHandler
- Some very useful interfaces in onnxruntime C++
- How to compile a single model you need from this library
- How to convert SubPixelCNN to ONNX and implement it with onnxruntime C++
- How to convert Colorizer to ONNX and implement it with onnxruntime C++
- How to convert SSRNet to ONNX and implement it with onnxruntime C++

- TODO.

- TODO.

- TODO.

5.5 How to build third_party.

Other build documents for different engines and different targets will be added later.

| Library | Target | Docs |
|---|---|---|
| OpenCV | MacOS-x86_64 | opencv-mac-x86_64-build-cn.md |
| onnxruntime | MacOS-x86_64 | onnxruntime-mac-x86_64-build-cn.md |
| onnxruntime | Android-arm | TODO |
| NCNN | MacOS-x86_64 | TODO |
| MNN | MacOS-x86_64 | TODO |
| TNN | MacOS-x86_64 | TODO |

Some of the models were converted to ONNX by this repo; the others come from third-party projects.

| Model | Size | Download | From | Docs |
|---|---|---|---|---|
| FSANet | 1.2MB | Baidu Drive (code: 1dsc); Google Drive: TODO | FSANet | - |
| PFLD | 1.0MB~5.5MB | Baidu Drive (code: 1dsc); Google Drive: TODO | PFLD | - |
| UltraFace | 1.1MB~1.5MB | Baidu Drive (code: 1dsc); Google Drive: TODO | UltraFace | - |
| AgeGoogleNet | 23MB | Baidu Drive (code: 1dsc); Google Drive: TODO | AgeGoogleNet | - |
| GenderGoogleNet | 23MB | Baidu Drive (code: 1dsc); Google Drive: TODO | GenderGoogleNet | - |
| EmotionFerPlus | 33MB | Baidu Drive (code: 1dsc); Google Drive: TODO | EmotionFerPlus | - |
| VGG16Age | 514MB | Baidu Drive (code: 1dsc); Google Drive: TODO | VGG16Age | - |
| VGG16Gender | 512MB | Baidu Drive (code: 1dsc); Google Drive: TODO | VGG16Gender | - |
| SSRNet | 190KB | Baidu Drive (code: 1dsc); Google Drive: TODO | litehub | ort_ssrnet-cn.md |
| FastStyleTransfer | 6.4MB | Baidu Drive (code: 1dsc); Google Drive: TODO | FastStyleTransfer | - |
| ArcFaceResNet | 249MB | Baidu Drive (code: 1dsc); Google Drive: TODO | ArcFaceResNet | - |
| Colorizer | 123MB~130MB | Baidu Drive (code: 1dsc); Google Drive: TODO | litehub | ort_colorizer-cn.md |
| SubPixelCNN | 234KB | Baidu Drive (code: 1dsc); Google Drive: TODO | litehub | ort_subpixel_cnn-cn.md |

- TODO

- TODO

- TODO