This repository contains the code and data for our paper:

Meta-Learning Triplet Network with Adaptive Margins for Few-Shot Named Entity Recognition

If you find this work useful in your own research, please cite our paper:

    @article{han2023meta,
      title={Meta-Learning Triplet Network with Adaptive Margins for Few-Shot Named Entity Recognition},
      author={Chengcheng Han and Renyu Zhu and Jun Kuang and FengJiao Chen and Xiang Li and Ming Gao and Xuezhi Cao and Wei Wu},
      journal={arXiv preprint arXiv:2302.07739},
      year={2023}
    }

We propose an improved triplet network with adaptive margins (MeTNet) and a new inference procedure for few-shot NER.
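The basic idea behind a triplet loss with type-dependent margins can be sketched in plain Python. This is a hypothetical illustration, not the loss implemented in `utils/tripletloss.py`. It assumes Euclidean distance, a standard hinge, and a fixed lookup table `margins` that maps each entity type to its own margin. In MeTNet the margins are adaptive, so see the paper for the exact formulation. The helper names below are our own.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def adaptive_triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                          entity_type: str, margins: Dict[str, float]) -> float:
    """Hinge triplet loss whose margin depends on the anchor's entity type.

    Assumption: `margins` is a hypothetical per-type table of constants;
    it stands in for whatever adaptive margins the real model uses.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    # Penalize unless the negative is farther than the positive by at least the margin.
    return max(0.0, d_pos - d_neg + margins[entity_type])


def batch_loss(triplets: List[Tuple[Vector, Vector, Vector, str]],
               margins: Dict[str, float]) -> float:
    """Mean loss over (anchor, positive, negative, entity_type) tuples."""
    if not triplets:
        return 0.0
    total = sum(adaptive_triplet_loss(a, p, n, t, margins) for a, p, n, t in triplets)
    return total / len(triplets)
```

With `margins = {"color": 2.0, "material": 7.0}`, the triplet `([0, 0], [3, 4], [6, 8])` has a positive distance of 5 and a negative distance of 10. It therefore incurs zero loss for `color` and a loss of 2.0 for `material`, because a larger margin demands a larger gap between the two distances.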

We also release FEW-COMM, the first Chinese few-shot NER dataset.

The datasets used in our experiments are in the `data/` folder, including FEW-COMM, FEW-NERD, WNUT17, Restaurant and Multiwoz.

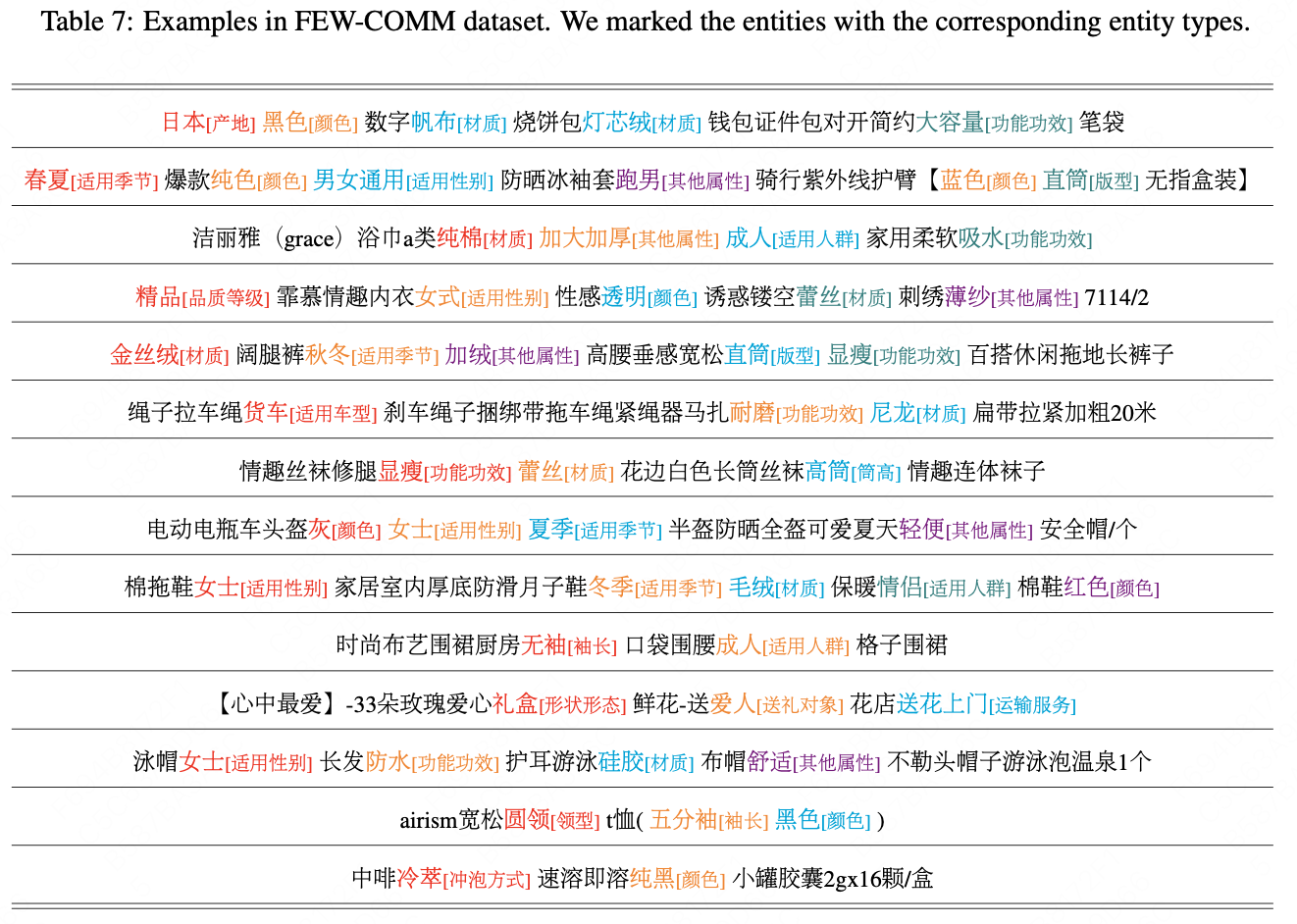

FEW-COMM is the Chinese few-shot NER dataset we released. It consists of 66,165 product descriptions that merchants display on a large e-commerce platform, containing 140,936 entities of 92 pre-defined entity types. These entity types are commodity attributes manually defined by domain experts, such as "material", "color" and "origin". Please see Appendix C of our paper for more details on the dataset.

This implementation is based on Python 3.7. To run the code, you need the following dependencies:

- nltk>=3.6.4

- numpy==1.21.0

- pandas==1.3.5

- torch==1.7.1

- transformers==4.0.1

- apex==0.9.10dev

- scikit_learn==0.24.1

- seqeval

You can install them all with `pip install -r requirements.txt`.

The most important files are described below:

    |-- data                   # the five datasets used in our experiments
        |-- Few-COMM/          # the Chinese few-shot NER dataset we released
    |-- model                  # all model implementations
    |-- transformer_model      # pre-trained BERT checkpoints
        |-- bert-base-chinese
        |-- bert-base-uncased
    |-- utils
        |-- config.py          # configuration
        |-- data_loader.py     # data loading
        |-- fewshotsampler.py  # meta-task construction
        |-- framework.py       # training, evaluation and test procedures
        |-- tripletloss.py     # an improved triplet loss
    |-- main.py
    |-- run.sh
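To make the N-way K-shot meta-task setting concrete, here is a simplified greedy support-set sampler in plain Python. It is a hypothetical sketch, not the logic of `utils/fewshotsampler.py`. We assume each sentence is a `(tokens, tags)` pair with IO-style tags (`"O"` for non-entity tokens, otherwise the entity type name). The helpers `entity_counts` and `sample_support` are our own names.

```python
import random
from collections import Counter
from typing import List, Tuple

# Hypothetical format: (tokens, tags) with IO-style tags; "O" marks non-entities.
Sentence = Tuple[List[str], List[str]]


def entity_counts(tags: List[str]) -> Counter:
    """Count mentions per type; a run of identical non-"O" tags is one mention.

    Note: with IO tags, two adjacent mentions of the same type are merged.
    """
    counts: Counter = Counter()
    prev = "O"
    for tag in tags:
        if tag != "O" and tag != prev:
            counts[tag] += 1
        prev = tag
    return counts


def sample_support(sentences: List[Sentence], n_way: int, k_shot: int, seed: int = 0):
    """Greedily build a support set covering n_way types with >= k_shot mentions each."""
    rng = random.Random(seed)
    all_types = sorted({t for _, tags in sentences for t in tags if t != "O"})
    types = set(rng.sample(all_types, n_way))  # ValueError if n_way > number of types
    order = list(range(len(sentences)))
    rng.shuffle(order)

    support: List[Sentence] = []
    have: Counter = Counter()
    for i in order:
        counts = entity_counts(sentences[i][1])
        # Skip entity-free sentences and sentences mentioning types outside the episode.
        if not counts or not set(counts) <= types:
            continue
        # Keep the sentence only if it helps a type that is still below k_shot.
        if any(have[t] < k_shot for t in counts):
            support.append(sentences[i])
            have.update(counts)
            if all(have[t] >= k_shot for t in types):
                return sorted(types), support
    raise ValueError("not enough sentences to fill this episode")


if __name__ == "__main__":
    data = [
        (["red", "cotton", "shirt"], ["color", "material", "O"]),
        (["blue", "bag"], ["color", "O"]),
        (["silk", "scarf"], ["material", "O"]),
        (["made", "in", "Italy"], ["O", "O", "origin"]),
    ]
    episode_types, support_set = sample_support(data, n_way=2, k_shot=1)
    print(episode_types, support_set)
```

Skipping sentences that mention out-of-episode types keeps every support label inside the N sampled types. A query set could be drawn the same way from the sentences not used in the support set.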

Then follow these steps:

- Unzip our processed data file `data.zip` and put the data files under the `data/` folder.
- Download the pre-trained BERT checkpoints bert-base-chinese and bert-base-uncased and put them under the `transformer_model/` folder.
- Run `sh run.sh`.

You can also adjust the model by modifying the parameters in the `run.sh` file.
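Before running `sh run.sh`, a quick check can confirm that the BERT checkpoints sit where the directory layout expects them. This is a hypothetical helper, not part of the repository. The expected file names (`config.json`, `vocab.txt`, `pytorch_model.bin`) are an assumption based on the usual Hugging Face checkpoint layout of the transformers 4.x era, so adjust them if your download differs.

```python
import os
from typing import Dict, List

# Assumption: standard Hugging Face BERT checkpoint files (PyTorch weights).
EXPECTED_FILES = ("config.json", "vocab.txt", "pytorch_model.bin")
MODELS = ("bert-base-chinese", "bert-base-uncased")


def missing_checkpoint_files(root: str = "transformer_model") -> Dict[str, List[str]]:
    """Map each model folder under `root` to the expected files it lacks."""
    missing: Dict[str, List[str]] = {}
    for model in MODELS:
        model_dir = os.path.join(root, model)
        absent = [f for f in EXPECTED_FILES
                  if not os.path.isfile(os.path.join(model_dir, f))]
        if absent:
            missing[model] = absent
    return missing


if __name__ == "__main__":
    problems = missing_checkpoint_files()
    if problems:
        for name, files in problems.items():
            print(f"{name}: missing {', '.join(files)}")
    else:
        print("All BERT checkpoint files found.")
```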

The current benchmark results on the FEW-COMM dataset are as follows:

| Model | 5-way 1-shot | 5-way 5-shot | 10-way 1-shot | 10-way 5-shot |
|---|---|---|---|---|
| MAML | 28.16 | 54.38 | 26.23 | 44.66 |
| NNShot | 48.40 | 71.55 | 41.75 | 67.91 |
| StructShot | 48.61 | 70.62 | 47.77 | 65.09 |
| PROTO | 22.73 | 53.95 | 22.17 | 45.81 |
| CONTaiNER | 57.13 | 63.38 | 51.87 | 60.98 |
| ESD | 65.37 | 73.29 | 58.32 | 70.93 |
| DecomMETA | 68.01 | 72.89 | 62.13 | 72.14 |
| SpanProto | 70.97 | 76.59 | 63.94 | 74.67 |
| MeTNet | 71.89 | 78.14 | 65.11 | 77.58 |
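To read the FEW-COMM table at a glance, the gap between MeTNet and the strongest baseline in each setting can be computed directly from the reported numbers. This only re-reads the table and does not reproduce any experiment. The helper `gains_over_best_baseline` is a hypothetical name.

```python
from typing import Dict, Tuple

# Scores copied from the FEW-COMM benchmark table (baselines only).
SETTINGS = ["5-way 1-shot", "5-way 5-shot", "10-way 1-shot", "10-way 5-shot"]
BASELINES = {
    "MAML": [28.16, 54.38, 26.23, 44.66],
    "NNShot": [48.40, 71.55, 41.75, 67.91],
    "StructShot": [48.61, 70.62, 47.77, 65.09],
    "PROTO": [22.73, 53.95, 22.17, 45.81],
    "CONTaiNER": [57.13, 63.38, 51.87, 60.98],
    "ESD": [65.37, 73.29, 58.32, 70.93],
    "DecomMETA": [68.01, 72.89, 62.13, 72.14],
    "SpanProto": [70.97, 76.59, 63.94, 74.67],
}
METNET = [71.89, 78.14, 65.11, 77.58]


def gains_over_best_baseline() -> Dict[str, Tuple[str, float]]:
    """For each setting, return (best baseline name, MeTNet minus that baseline)."""
    result = {}
    for i, setting in enumerate(SETTINGS):
        best = max(BASELINES, key=lambda name: BASELINES[name][i])
        result[setting] = (best, round(METNET[i] - BASELINES[best][i], 2))
    return result


if __name__ == "__main__":
    for setting, (best, gain) in gains_over_best_baseline().items():
        print(f"{setting}: +{gain} over {best}")
```

In all four settings the strongest baseline is SpanProto, and MeTNet's gain ranges from 0.92 points (5-way 1-shot) to 2.91 points (10-way 5-shot).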

If you have new experimental results on the FEW-COMM dataset, please contact us so that we can update the benchmark.

For the FEW-NERD dataset, please download it from its official website.

Parts of this code are based on the following repositories: