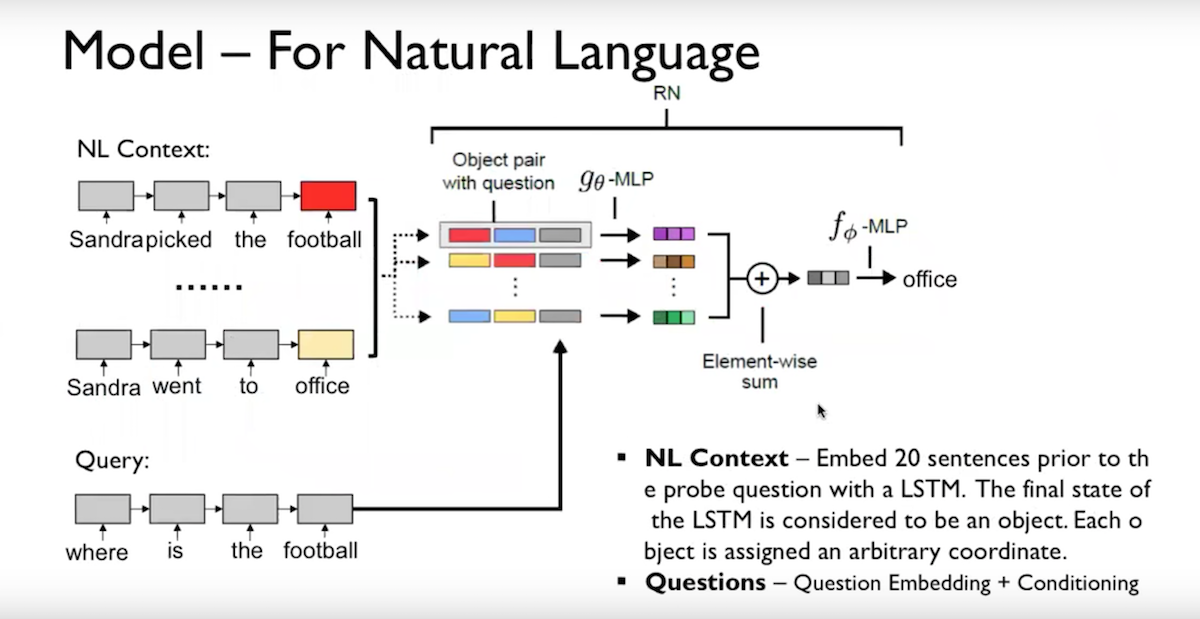

Relation Network

TensorFlow implementation of "A simple neural network module for relational reasoning" for the bAbI task.

- image: A Simple Neural Network Module for Relational Reasoning Slides by Xiadong Gu

Requirements

- Python 3.6

- TensorFlow >= 1.4

- hb-config (Singleton Config)

- requests

- tqdm (progress bar)

- Slack Incoming Webhook URL

Project Structure

Project initialized with hb-base.

    .
    ├── config                  # Config files (.yml, .json) used with hb-config
    ├── data                    # Dataset path
    ├── notebooks               # Prototyping with numpy or tf.InteractiveSession
    ├── relation_network        # Relation network architecture graphs (from input to logits)
    │   ├── __init__.py         # Graph logic
    │   ├── encoder.py          # Encoder
    │   └── relation.py         # RN module
    ├── data_loader.py          # raw_data -> preprocessed_data -> generate_batch (using Dataset)
    ├── hook.py                 # Training or test hook features (e.g. print_variables)
    ├── main.py                 # Defines experiment_fn
    └── model.py                # Defines EstimatorSpec

Reference: hb-config, Dataset, experiment_fn, EstimatorSpec
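The RN module listed above presumably follows the formulation from the paper, RN(O) = f(sum over all pairs (i, j) of g(o_i, o_j, q)), where the objects o_i are encoded story sentences and q is the encoded question. The following is a minimal plain-Python sketch of that computation, not the TensorFlow code in relation.py. The function name `relation_network` and the toy stand-ins for the g and f networks are hypothetical and exist only for illustration.

```python
from itertools import product


def relation_network(objects, question, g, f):
    """Compute f(sum over all ordered pairs (i, j) of g([o_i; o_j; q])).

    objects:  non-empty list of object vectors (lists of floats)
    question: question vector (list of floats)
    g, f:     callables standing in for the g and f MLPs (assumption)
    """
    pair_sum = None
    for o_i, o_j in product(objects, repeat=2):
        # Concatenate both objects with the question, then score the pair.
        relation = g(o_i + o_j + question)
        if pair_sum is None:
            pair_sum = list(relation)
        else:
            # Element-wise sum of the pair representations.
            pair_sum = [a + b for a, b in zip(pair_sum, relation)]
    return f(pair_sum)


# Toy stand-ins for the MLPs, chosen only to keep the arithmetic easy to follow.
def toy_g(v):
    return [sum(v)]


def toy_f(v):
    return v[0]


objects = [[1.0], [2.0]]  # hypothetical encoded sentences
question = [10.0]         # hypothetical encoded question
result = relation_network(objects, question, toy_g, toy_f)
```

In the real model, g and f would be multi-layer perceptrons whose layer sizes come from `g_units` and `f_units` in the config.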

Todo

- Train the model on the joint version of bAbI (all 20 tasks simultaneously), using the full dataset of 10K examples per task, as in the paper's experiments.

Config

The config file controls the entire experimental environment.

Example: bAbi_task1.yml

    data:
      base_path: 'data/'
      task_path: 'en-10k/'
      task_id: 1
      PAD_ID: 0

    model:
      batch_size: 64
      use_pretrained: false  # (true or false)
      embed_dim: 32          # if use_pretrained: only available 50, 100, 200, 300
      encoder_type: uni      # uni, bi
      cell_type: lstm        # lstm, gru, layer_norm_lstm, nas
      num_layers: 1
      num_units: 32
      dropout: 0.5

      g_units:
        - 64
        - 64
        - 64
        - 64
      f_units:
        - 64
        - 128

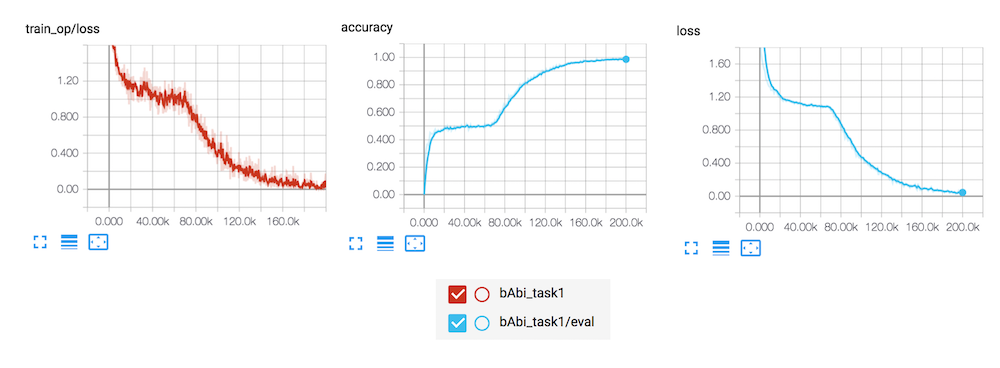

    train:
      learning_rate: 0.00003
      optimizer: 'Adam'  # Adagrad, Adam, Ftrl, Momentum, RMSProp, SGD
      train_steps: 200000
      model_dir: 'logs/bAbi_task1'
      save_checkpoints_steps: 1000
      check_hook_n_iter: 1000
      min_eval_frequency: 1
      print_verbose: False
      debug: False

    slack:
      webhook_url: ""  # notifies you via slack-webhook after training

- debug mode: uses tfdbg
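The `webhook_url` setting points at a Slack incoming webhook, which accepts a JSON body with a `text` field sent via HTTP POST. The sketch below shows one way such a notification could be sent. It uses only the standard library to stay self-contained, whereas this project lists `requests` as a requirement and likely uses that instead. The function names `build_slack_request` and `notify` are hypothetical.

```python
import json
import urllib.request


def build_slack_request(webhook_url, text):
    """Build (but do not send) a POST request for a Slack incoming webhook."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def notify(webhook_url, text):
    """Send the message; do nothing when no webhook URL is configured."""
    if not webhook_url:
        # Mirrors the empty default webhook_url: "" in the config.
        return False
    request = build_slack_request(webhook_url, text)
    with urllib.request.urlopen(request) as response:
        return response.status == 200
```

With the default empty `webhook_url`, `notify` returns `False` without making a network call, so training can run without Slack configured.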

Usage

Install requirements.

pip install -r requirements.txt

Then, prepare the dataset.

sh scripts/fetch_babi_data.sh
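For orientation, bAbI task files are plain text: every line starts with a sentence number that restarts at 1 for each new story, and question lines carry the question, the answer, and the supporting fact numbers separated by tabs. The sketch below parses that layout into (story, question, answer) examples. It is an illustrative assumption about the preprocessing step, not the code in data_loader.py, and `parse_babi` is a hypothetical name.

```python
def parse_babi(lines):
    """Group bAbI lines into (story, question, answer) examples."""
    examples, story = [], []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        idx, text = line.split(" ", 1)
        if int(idx) == 1:
            story = []  # numbering restarts at 1 for each new story
        if "\t" in text:
            # Question line: question <TAB> answer <TAB> supporting fact ids
            parts = text.split("\t")
            question, answer = parts[0].strip(), parts[1]
            examples.append((list(story), question, answer))
        else:
            story.append(text)
    return examples


# Sample lines in the task 1 layout, used here for illustration only.
sample = [
    "1 Mary moved to the bathroom.",
    "2 John went to the hallway.",
    "3 Where is Mary? \tbathroom\t1",
    "4 Daniel went back to the hallway.",
    "5 Sandra moved to the garden.",
    "6 Where is Daniel? \thallway\t4",
]
examples = parse_babi(sample)
```

Each example keeps a copy of the story as it stood when the question was asked, so later sentences do not leak into earlier questions.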

Finally, train and evaluate the model.

python main.py --config bAbi_task1 --mode train_and_evaluate
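The command above passes a config name and an experiment mode. A minimal sketch of how such flags could be parsed is shown below. The defaults, the help strings, and the mapping from `--config` to a file under `config/` are assumptions, and the actual main.py may differ.

```python
import argparse

# Mode names as listed under the experiment modes in this README.
MODES = [
    "evaluate", "extend_train_hooks", "reset_export_strategies",
    "run_std_server", "test", "train", "train_and_evaluate",
]


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Relation Network on bAbI")
    parser.add_argument("--config", type=str, default="config",
                        help="config name, without file extension (assumed)")
    parser.add_argument("--mode", type=str, default="train",
                        choices=MODES, help="experiment mode to run")
    return parser.parse_args(argv)


args = parse_args(["--config", "bAbi_task1", "--mode", "train_and_evaluate"])
# Assumed mapping from the config name to the YAML file in the config directory.
config_path = "config/" + args.config + ".yml"
```

Restricting `--mode` with `choices` makes a mistyped mode fail at startup rather than partway through a run.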

Experiment modes

✅ : Working

◽ : Not tested yet.

- ✅ evaluate: Evaluate on the evaluation data.

- ◽ extend_train_hooks: Extends the hooks for training.

- ◽ reset_export_strategies: Resets the export strategies with the new_export_strategies.

- ◽ run_std_server: Starts a TensorFlow server and joins the serving thread.

- ◽ test: Tests training, evaluating and exporting the estimator for a single step.

- ✅ train: Fit the estimator using the training data.

- ✅ train_and_evaluate: Interleaves training and evaluation.

Tensorboard

tensorboard --logdir logs

- bAbi_task1

Reference

- hb-research/notes - A simple neural network module for relational reasoning

- arXiv - A simple neural network module for relational reasoning