This is a submission for Grab - AIforSEA. I chose the Computer Vision Challenge - Recognizing Car Details.

- This is the folder layout for the whole repository.

- Raw data from the dataset is organized in the data folder. The processed (cropped) versions of the raw images are stored under data/crop_images.

- All metadata, such as mat files and csv files, is under the dataframe folder.

- Weights saved during training, and the weights loaded by the model during inference, are inside the snapshots folder.

- Data Processing

- Training

- Testing / Evaluation

- Extra (Using Object Detection)

root
.
├── src
│   ├── mat_to_csv.py
│   ├── data_preprocessing.py
│   ├── train_utils.py
│   ├── train_densenet.py
│   └── evaluate.py
│
├── data
│   ├── cars_train
│   │   ├── 00001.jpg
│   │   ├── ....
│   │   └── 08144.jpg
│   ├── cars_test
│   │   ├── 00001.jpg
│   │   ├── ....
│   │   └── 08041.jpg
│   └── crop_images (created after running data_preprocessing.py)
│       ├── train
│       └── test
│
├── dataframe
│   ├── csv_files (created after running mat_to_csv.py)
│   └── mat_files
│
├── snapshots
│   └── DenseNet169-epochs-47-0.26.h5 (place the pre-trained model weights here)
│
├── jupyter_notebook
│   ├── Evaluate_Test.ipynb
│   └── test_image
│
├── extra
│   ├── Grab_object_detection.ipynb
│   └── images
│
└── README.md

- Install the Python modules (change tensorflow-gpu to tensorflow if an NVIDIA GPU is not available)

pip3 install -r requirements.txt

- Download the pre-trained weights and move them to the snapshots folder

Exploratory Data Analysis (EDA) of the training dataset can be viewed in this Jupyter Notebook

First we need to convert the mat file car_train_annos.mat to csv.

python3 src/mat_to_csv.py

The output file is cars_train.csv in dataframe/csv_files.

Then we need to process the raw images.

This script crops the car images in data/cars_train according to their bounding boxes and saves them in data/crop_images.

It reads the file names and bounding boxes from the csv file created above (cars_train.csv).

It then creates the csv file cars_train_crop.csv, with the structure "file_name, car_id", in dataframe/csv_files.
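The cropping step can be sketched as follows. This is a minimal illustration, not the code in data_preprocessing.py: the function names clamp_box and crop_car are hypothetical, the box is assumed to be given as (left, top, right, bottom) pixel coordinates, and clipping the box to the image bounds is an added safety step that the original script may or may not perform. Pillow is assumed for the actual image I/O.

```python
def clamp_box(left, top, right, bottom, width, height):
    """Clip a bounding box to the image bounds.

    Returns (left, top, right, bottom), the order Pillow's Image.crop expects.
    """
    left = max(0, min(left, width))
    right = max(0, min(right, width))
    top = max(0, min(top, height))
    bottom = max(0, min(bottom, height))
    if right <= left or bottom <= top:
        raise ValueError("bounding box is empty after clipping")
    return left, top, right, bottom


def crop_car(src_path, dst_path, box):
    """Crop one image to its bounding box and save the result (needs Pillow)."""
    from PIL import Image  # imported lazily so clamp_box works without Pillow

    with Image.open(src_path) as img:
        safe_box = clamp_box(*box, img.width, img.height)
        img.crop(safe_box).save(dst_path)
```

A call such as crop_car("data/cars_train/00066.jpg", "data/crop_images/train/00066.jpg", (30, 29, 221, 125)) would then write the cropped file, provided the column order in your CSV really matches the assumed (left, top, right, bottom) layout.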

python3 src/data_preprocessing.py

Before and after processing

- I chose to use CSV files as the input format for training and evaluation.

- CSV files are much easier to create, access, manipulate, and convert to a Pandas DataFrame, and are in general a more compatible format than Matlab files.

- This is why I separated converting the mat file to csv from the data clean-up (cropping the images).

Class mapping

- CSV that maps each car make and model name to its class ID

car_make_and_model, car_class_id

e.g.

Buick Verano Sedan 2012,49

Buick Enclave SUV 2012,50

Cadillac CTS-V Sedan 2012,51

Annotation mapping

- CSV mapping created from the mat file

file_path, x1, x2, y1, y2, car_class_id

e.g.

00066.jpg,30,29,221,125,017

00067.jpg,129,89,594,432,111

After crop mapping

- CSV mapping produced after cropping each image to its bounding box; used for training and evaluation

file_path, car_class_id

e.g.

data/crop_images/train/00066.jpg,017

data/crop_images/train/00067.jpg,111
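Because the class IDs are zero-padded, they need to stay strings when the CSV is read back, otherwise 017 silently becomes 17. A minimal sketch using only the standard library, assuming the file has no header row and exactly two columns; the function name read_crop_csv is hypothetical and not part of this repo.

```python
import csv
import io


def read_crop_csv(handle):
    """Read (file_path, car_class_id) pairs, keeping the class ID as a string."""
    rows = []
    for record in csv.reader(handle):
        if len(record) != 2:
            continue  # skip blank or malformed lines
        file_path, class_id = (field.strip() for field in record)
        rows.append((file_path, class_id))
    return rows


# In-memory stand-in for dataframe/csv_files/cars_train_crop.csv
sample = io.StringIO(
    "data/crop_images/train/00066.jpg,017\n"
    "data/crop_images/train/00067.jpg,111\n"
)
rows = read_crop_csv(sample)
```

When loading the same file with pandas, passing dtype=str to read_csv should preserve the padding in the same way.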

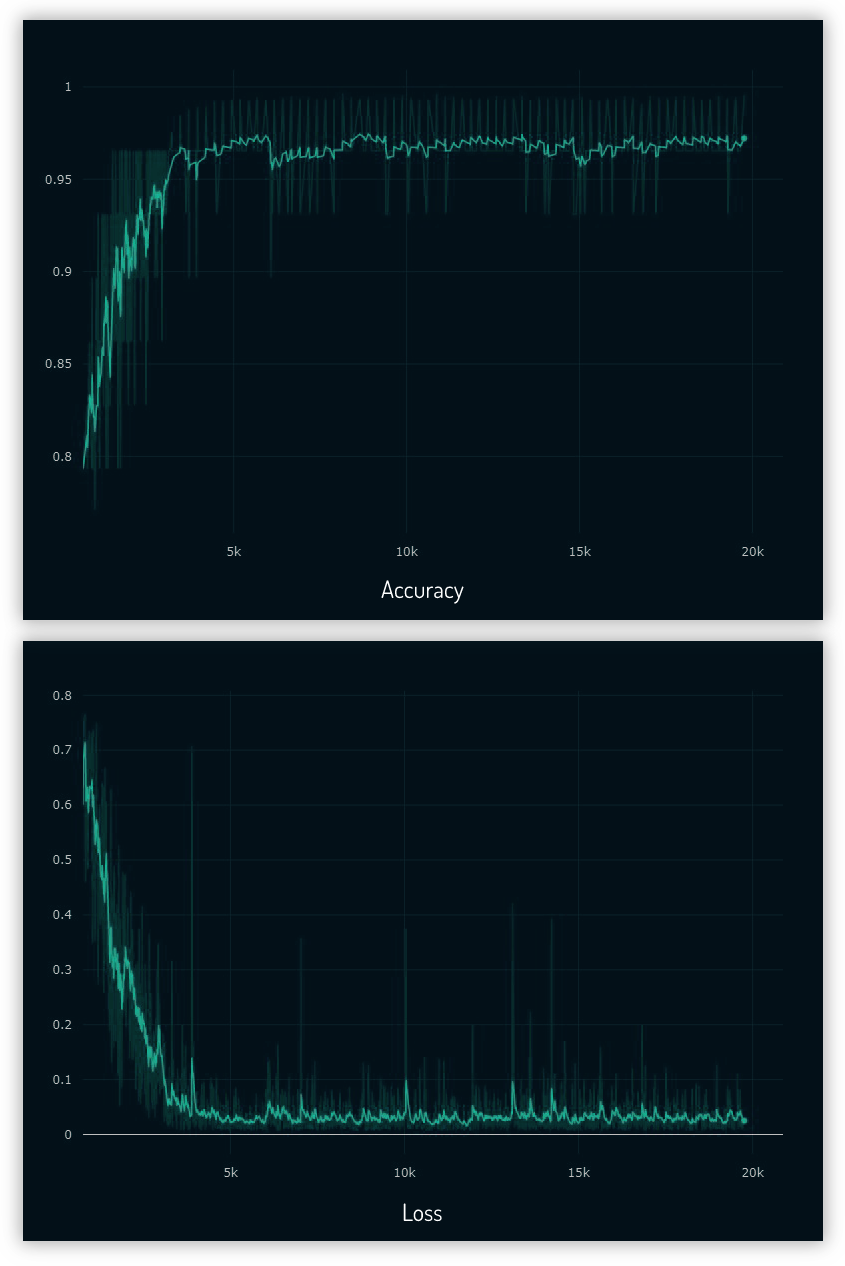

- I tried training with various backbone architectures such as ResNet, ResNetV2 and InceptionResNetV2. In the end, DenseNet169 gave the lowest validation error. In addition, DenseNet has far fewer trainable parameters (12M) than a ResNet with a comparable number of layers (60M).

python3 src/train_densenet.py

- Using model.fit to fit the whole training dataset in memory is not viable, since we have more than 8000 images, and it would not scale to much larger datasets in the future.

- Since I already have the data listed in CSV, I just need to convert it to a Pandas DataFrame and pass it to the image generator via flow_from_dataframe. This way, the images are generated on the fly, batch by batch, as the training process runs.

- Initially, using flow_from_dataframe, the output classes were totally out of order, since it sorts the classes as 1,10,100,101.. instead of 1,2,3,4.. I overcame this by padding the class numbers with zeros (001,002,003) when converting from the mat file to csv (mat_to_csv.py).

- flow_from_dataframe is also a better fit for this dataset than flow_from_directory, since we don't have to rearrange the images into one folder per class.
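The class-ordering problem described above comes from sorting class labels as strings. A small standard-library sketch of the effect and of the zero-padding fix (the sample IDs are made up for illustration):

```python
class_ids = [1, 2, 10, 100, 101]

# Sorting the labels as plain strings compares them character by character,
# so "10" and "100" land before "2".
unpadded = sorted(str(class_id) for class_id in class_ids)

# Zero-padding to a fixed width makes string order match numeric order.
padded = sorted(f"{class_id:03d}" for class_id in class_ids)

print(unpadded)  # ['1', '10', '100', '101', '2']
print(padded)    # ['001', '002', '010', '100', '101']
```

With padded labels, the position of a label in the sorted list lines up with its numeric order, which keeps the model's output index consistent with the class ID.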

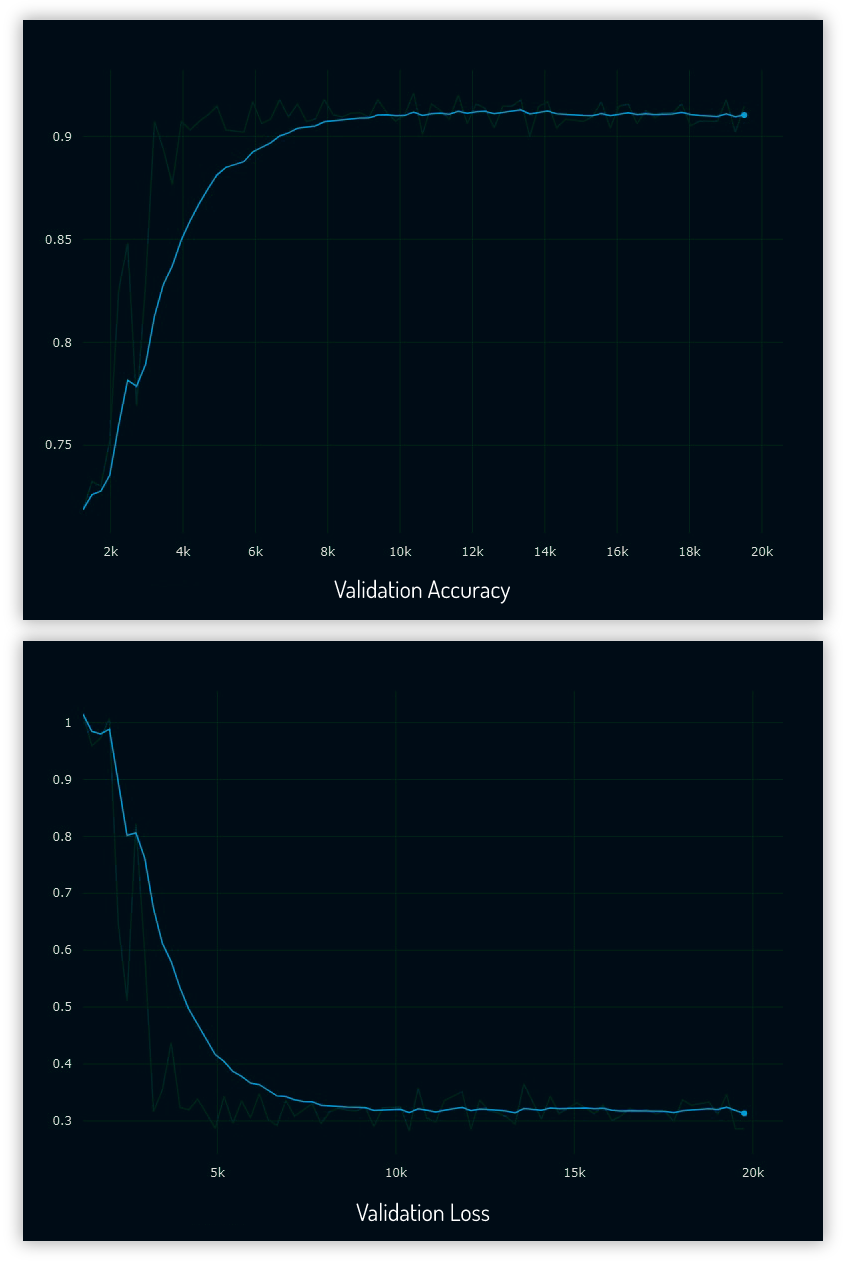

After 80 epochs, with image augmentation (translation and scaling), I got a validation loss of 0.26.

Validation Accuracy and Loss Graph


- After training is complete, we can evaluate using the test dataset (8041 images). First we need to extract the test images from the tar.gzip archive into data/cars_test, and copy cars_test_annos_withlabels.mat to dataframe/mat_files. Then we need to convert the mat file to csv.

python3 src/mat_to_csv.py --test

The output will be cars_test.csv in the dataframe/csv_files folder.

- After that, we need to crop the test images according to their respective bounding boxes.

python3 src/data_preprocessing.py --test

The output will be a list of file names and car classes saved in cars_test_crop.csv in the dataframe/csv_files folder. The actual cropped images are saved in data/crop_images/test.

- Run the test evaluation

The pre-trained weights can be downloaded here

python3 src/evaluate.py --model snapshots/DenseNet169-epochs-47-0.26.h5 --testcsv dataframe/csv_files/cars_test_crop.csv --classcsv dataframe/csv_files/class.csv

I got 91.1% accuracy (7326 correct predictions out of 8041 images).

The Jupyter Notebook Evaluate_Test.ipynb has the same content as evaluate.py, but with a sample of processed images displayed in the notebook.
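The reported figure is plain top-1 accuracy: the number of images whose predicted class matches the label, divided by the number of test images. A minimal sketch of that calculation follows; the function name top1_accuracy is hypothetical and this is not the code in evaluate.py.

```python
def top1_accuracy(true_ids, predicted_ids):
    """Return (number correct, fraction correct) for two equal-length label lists."""
    if len(true_ids) != len(predicted_ids):
        raise ValueError("label lists must have the same length")
    if not true_ids:
        raise ValueError("label lists must not be empty")
    correct = sum(t == p for t, p in zip(true_ids, predicted_ids))
    return correct, correct / len(true_ids)


# Checking the figure quoted above: 7326 correct out of 8041 images.
reported_percent = round(7326 / 8041 * 100, 1)
print(reported_percent)  # 91.1
```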

- To accurately predict the car make and model, we need to crop the images, since our model was trained on cropped images. So far we have processed car images that already have their bounding boxes defined. This annotation is usually done manually by human annotators.

- But what if, in real life, we need to process images from a camera/dashcam that do not come with car bounding boxes? Manually annotating the bounding boxes would be labour intensive. We can use an object detection algorithm to get an accurate estimate of the car bounding box. The new processing pipeline would then be:

Raw image → Object Detection (to get bounding box coordinates) → Crop car according to its bounding box → Load model and predict

- There are many object detection algorithms out there, such as Yolo, Faster-RCNN and SSD, but for this demonstration I use the single-shot detector Retinanet by fizyr, trained on the cars dataset, as a proof of concept for getting the bounding box automatically from images of cars the model has never seen before.

The code flow example is shown in this Jupyter Notebook; however, the full code to run Retinanet is not included in this repo, since it would add extra complexity. Retinanet uses its own custom backbone; to load a Retinanet model, we need to install / compile Cython code from its GitHub page.
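The pipeline above can be sketched as a small function that wires the stages together. Everything here is hypothetical glue code: detect, crop and classify stand in for the Retinanet detector, the cropping step and the DenseNet classifier, and the min_score threshold of 0.5 is an assumed value rather than one taken from the notebook.

```python
def predict_cars(image, detect, crop, classify, min_score=0.5):
    """Run detection, crop each confident box, and classify each crop.

    detect(image)     -> iterable of (box, score) pairs
    crop(image, box)  -> the image region inside box
    classify(patch)   -> predicted car class for that region
    """
    predictions = []
    for box, score in detect(image):
        if score < min_score:
            continue  # ignore low-confidence detections
        predictions.append((box, classify(crop(image, box))))
    return predictions
```

Passing the stages in as callables keeps the sketch independent of any particular detector, so a different object detection model could be swapped in without touching the classifier.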