Explorations on handling data in Python and R

Pandas and R both struggle with medium sized data. You may be asking what specifications differentiate small, medium, and big data. The lines are blurry as the size of the data increases and compute abilities improve. Here are my defintions.

- Small: The data that comes embeded in R or Python packages like vega-datasets.

- Medium: Any dataset that puts a stress on the users memory and compute constraints.

- Big: Any data that requires compute, storage, and memory beyond that availabe to standard local compute capabilities. This Mac Pro is not standard yet.

The latest technology advances in data handling have blurred the lines between each of these groups. Python and R can handle many other file formats and have their own formats (.rds and .pkl) for storing data more efficiently and effectively. When users ask for data or when many institutions share data the files are usually some type of text file (.txt, .csv, .tsv) or an Excel file. The most ubiquitous format is .csv.

We aim to reduce compute time and file sizes when we move into medium and big data applications. The Apache Arrow project facilitates the goal with the .parquet and .feather formats and their respective packages for leveraging those formats.

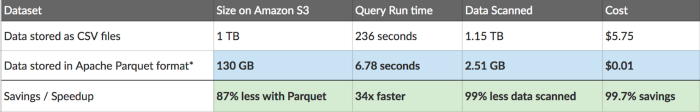

The following table from the openbridge blog provides a strong example of the benefits of these new formats.

Apache Arrow is a software development platform for building high performance applications that process and transport large data sets. A critical component of Apache Arrow is its in-memory columnar format, a standardized, language-agnostic specification for representing structured, table-like datasets in-memory. Apache Arrow

You can read much more about the in-memory Arrow format under their format documentation. The key aspect of Arrow is 'in-memory' and 'columnar' storage. Once we save those files for latter use they are stored outside of memory. For data scientists, the two most used formats are .parquet and .feather.

These new file format allows Python and R to wrangle data that is larger than memory in a performant manner. The Python package pyarrow and R arrow packages provide access to each of these file formats.

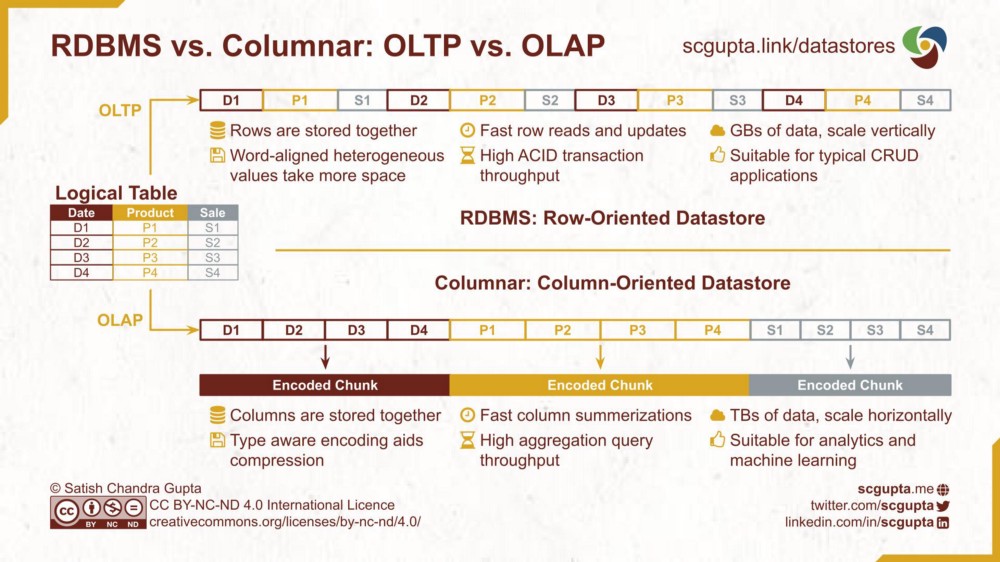

The image from a recent Towards Data Science article highlights what columnar storage means.

Cloudera's blog helps us understand what the concept of 'in-memory' is referencing.

Two processes utilizing Arrow as their in-memory data representation can “relocate” the data from one process to the other without serialization or deserialization. For example, Spark could send Arrow data to a Python process for evaluating a user-defined function.

Apache Parquet is designed for efficient as well as performant flat columnar storage format of data compared to row based files like CSV or TSV files. databricks

Apache also maintains the .parquet format. You can read more details about the development on their webpage. The .parquet format performs well with Arrow in memory objects as it is a columnar format as well.

Wes McKinney and Hadley Wickham developed the Feather file format around 2016 to facilitate data collaboration between Python and R. A short time after Wes incorporated the Feather format into the Apache Arrow project (reference). In 2020, the Apache Arrow team standardized a more robust Feather format called Feather V2 that provides a rich format that can compete with .parquet on most fronts (reference. In comparing .parquet to .feather, Wes MicKinney's April 23, 2020 blog post concludes;

Parquet format has become one of the “gold standard” binary file formats for data warehousing. We highly recommend it as a portable format that can be used in many different data processing systems. It also generally (but not always) produces very small files. Feather V2 has some attributes that can make it attractive:

- Accessible by any Arrow implementation. R and Python use the Arrow C++ library internally, which is a well-supported reference implementation.

- Generally faster read and write performance when used with solid state drives, due to simpler compression scheme. When read over the network, we expect Parquet will outperform.

- Internal structure supports random access and slicing from the middle. This also means that you can read a large file chunk by chunk without having to pull the whole thing into memory.

- Complete support for all Arrow data types. Parquet has a smaller type system and while most of Arrow can be stored in Parquet, there are some things that cannot (like unions). Support for reading nested Parquet data is not fully implemented yet, but should be in 2020 (see ARROW-1644).

These file formats and in-memory constructs are only helpful for medium data projects if we can leverage them using the tools on our local machines - R, Python, and SQL. Arrow is making progress on the available wrangling functions available before pulling the entire dataset into memory. Our goal is to leverage the Arrow methods before we run .to_pandas() in Python and collect() in R.

The pyarrow.parquet.read_table() returns a pyarrow.Table which facilitates data wrangling methods similar to pandas.DataFrame

Some of the most pertinent methods follow.

.slice(): Much likehead()orlimit()..add_column(): Add column to Table at position.append_column(): Append column at end of columns..remove_column(): Create new Table with the indicated column removed..set_column(): Replace column in Table at position..select(): Select columns of the Table..drop(): Drop one or more columns and return a new table..filter(): Select records from a Table..to_pandas(): Converts to a pandas DataFrame.

Arrow's documentation on leveraging dplyr. Importantly they highlight which dplyr verbs work using Arrow.

Arrow supports the dplyr verbs

mutate(),transmute(),select(),rename(),relocate(),filter(), andarrange(). Aggregation is not yet supported, so before you callsummarise()or other verbs with aggregate functions, usecollect()to pull the selected subset of the data into an in-memory R data frame.

They also help us understand how to handle a single file that is too big for memory.

For example, you have a single CSV file that is too big to read into memory. You could pass the file path to

open_dataset(), usegroup_by()to partition the Dataset into manageable chunks, then usewrite_dataset()to write each chunk to a separate Parquet file—all without needing to read the full CSV file into R.

The arrow package has open_dataset() and varied read_ functions for importing data. We want to leverage the options that keep the data in the Arrow Table format.

DuckDB is an in-process SQL OLAP database management system.

DuckDB provides and R package, SQL interface, and Python package that allows us to directly interact with the .parquet files. Their overview post in December 2021 provides clean examples.

We can leverage additional dplyr manipulations beyond what is available in the arrow R package. Notice in the following example that mutate() and summarise() are available in group_by().

library(duckdb)

library(arrow)

library(dplyr)

# Open dataset using year,month folder partition

ds <- arrow::open_dataset("nyc-taxi", partitioning = c("year", "month"))

ds %>%

# Look only at 2015 on, where the number of passenger is positive, the trip distance is

# greater than a quarter mile, and where the fare amount is positive

filter(year > 2014 & passenger_count > 0 & trip_distance > 0.25 & fare_amount > 0) %>%

# Pass off to DuckDB

to_duckdb() %>%

group_by(passenger_count) %>%

mutate(tip_pct = tip_amount / fare_amount) %>%

summarise(

fare_amount = mean(fare_amount, na.rm = TRUE),

tip_amount = mean(tip_amount, na.rm = TRUE),

tip_pct = mean(tip_pct, na.rm = TRUE)

) %>%

arrange(passenger_count) %>%

collect()

DuckDB has a short script that provides example for the Python methods.

We can make direct SQL queries using the .execute() method.

# Reads Parquet File to an Arrow Table

arrow_table = pq.read_table('integers.parquet')

# Gets Database Connection

con = duckdb.connect()

(con.execute('''

SELECT SUM(data)

FROM arrow_table

WHERE data > 50

''')

.fetch_arrow_table()

.to_pandas())You can review the full SQL Functionality of DuckDB.