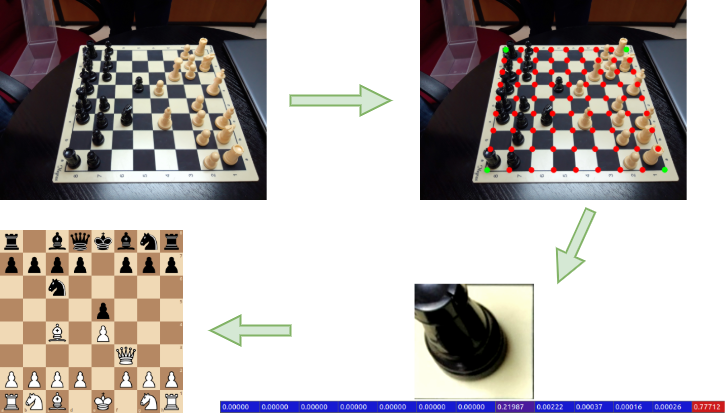

LiveChess2FEN is a fully functional framework that automatically digitizes the configuration of a chessboard. It is optimized for execution on an Nvidia Jetson Nano.

This repository contains the code used in our paper. If you find this useful, please consider citing us.

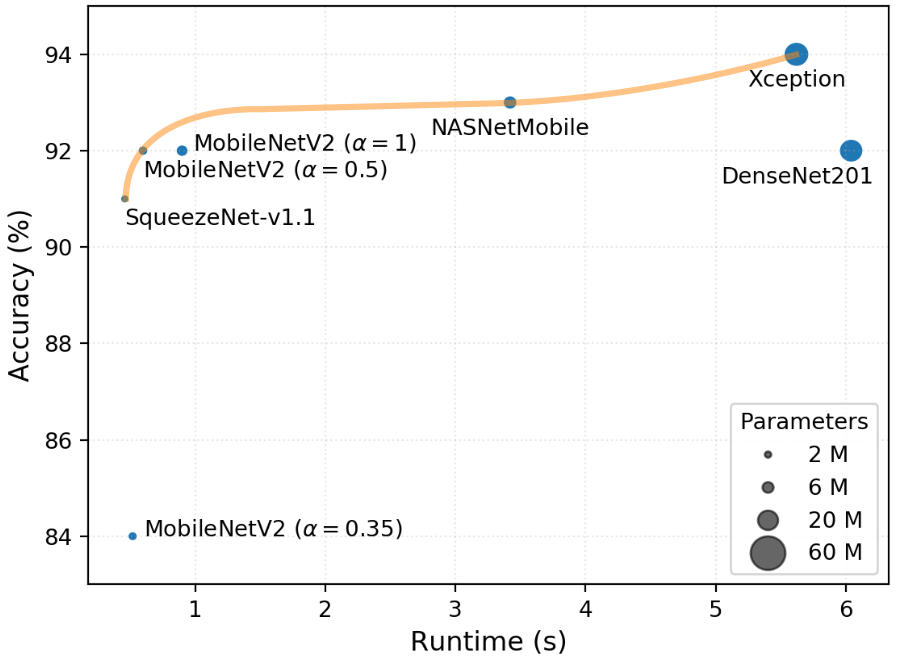

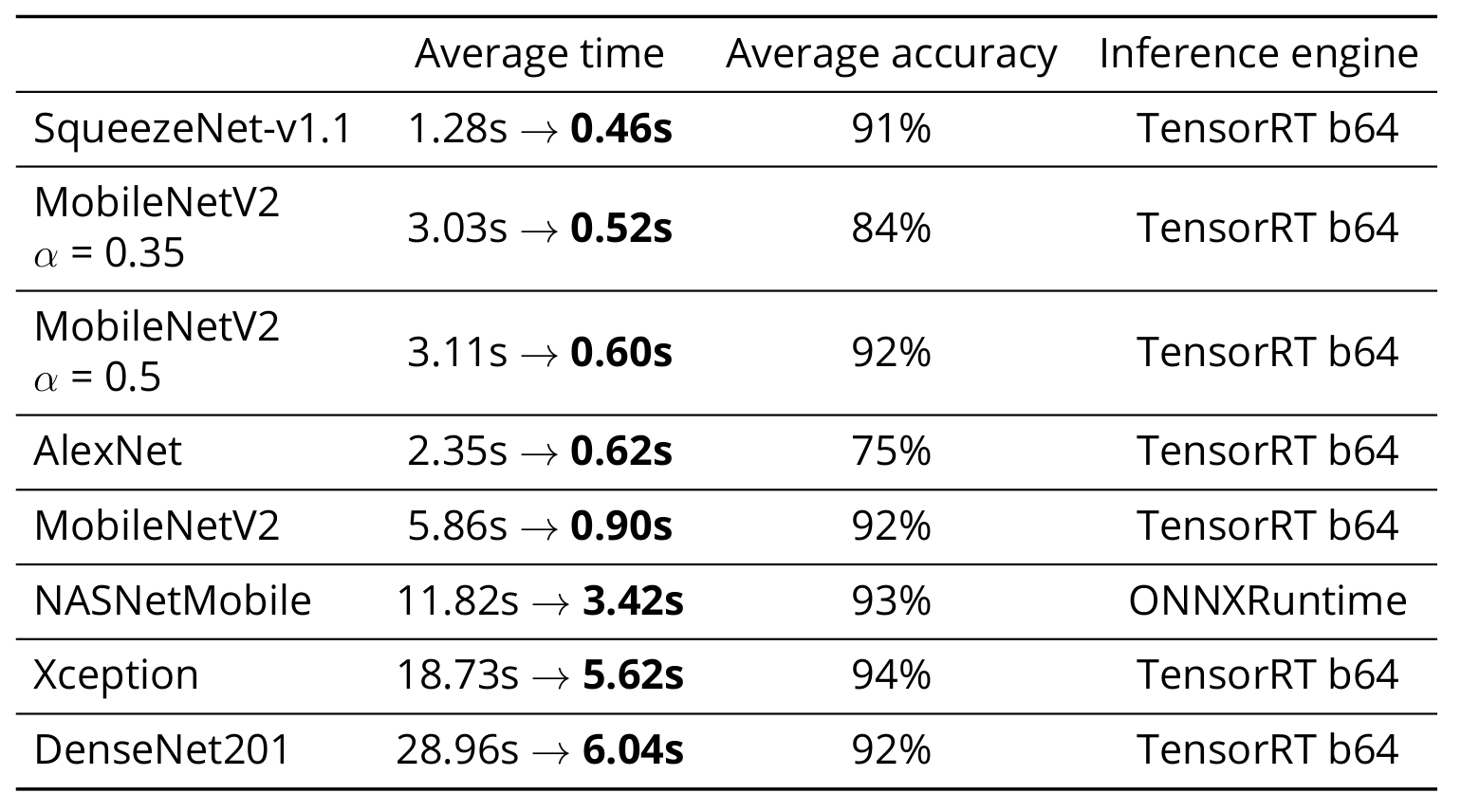

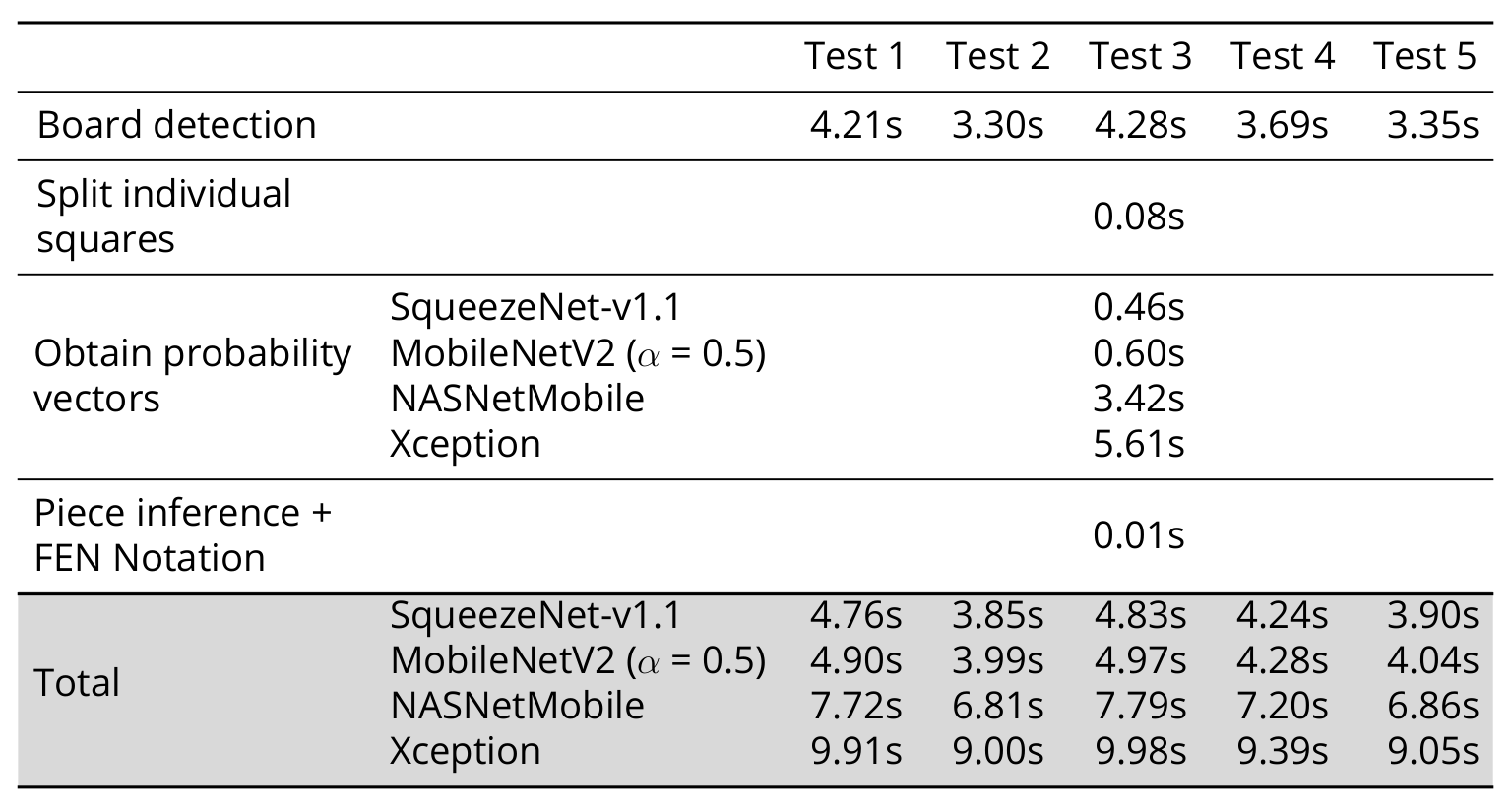

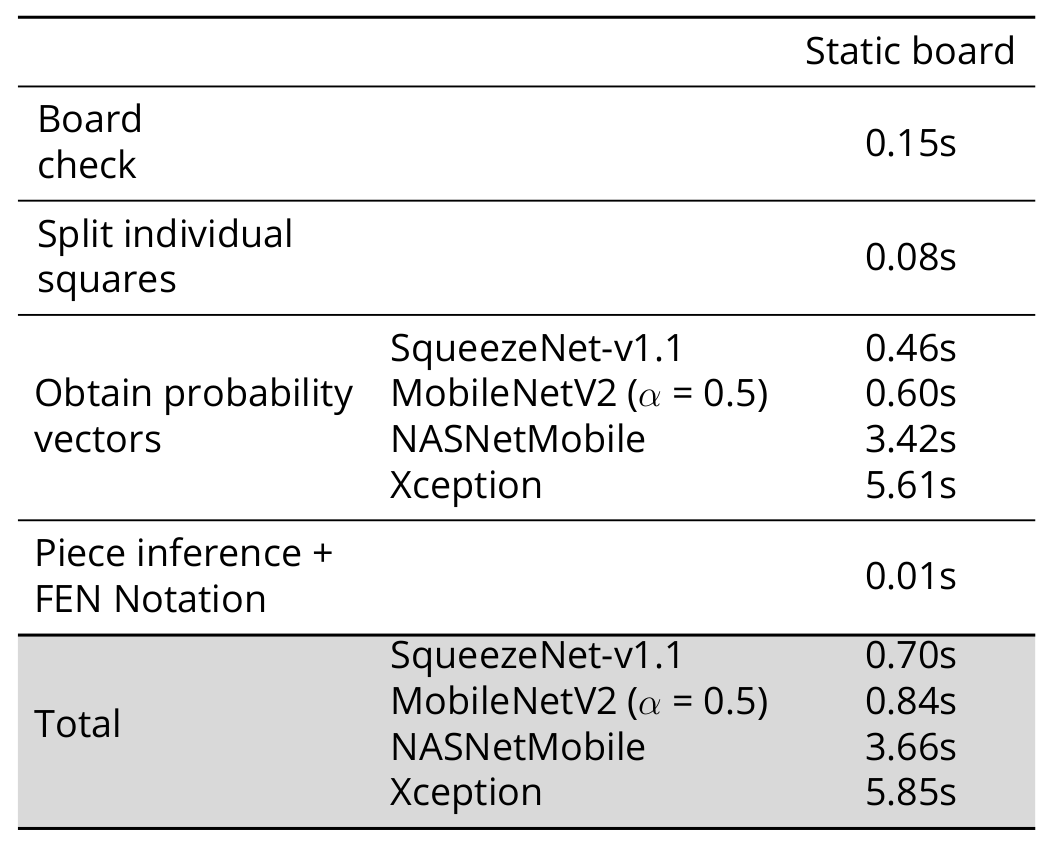

The following times are measured on the Nvidia Jetson Nano 4GB. Each time value is given per chessboard.

See `check_board_position()` in `lc2fen/detectboard/laps.py`.

These instructions are for JetPack 4.6. If you run into any problems, see the Troubleshooting section below.

- From the Jetson Zoo install:

  - TensorFlow

        sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev zlib1g-dev zip libjpeg8-dev liblapack-dev libblas-dev gfortran
        sudo apt-get install python3-pip
        sudo pip3 install -U pip testresources setuptools==49.6.0
        sudo pip3 install -U numpy==1.19.4 future==0.18.2 mock==3.0.5 h5py==2.10.0 keras_preprocessing==1.1.1 keras_applications==1.0.8 gast==0.2.2 futures protobuf pybind11
        sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v46 tensorflow

  - Keras

        sudo apt-get install -y build-essential libatlas-base-dev gfortran
        sudo pip install keras

  - ONNX Runtime. Download the .whl file from here and run:

        pip3 install onnxruntime_gpu-1.8.0-cp36-cp36m-linux_aarch64.whl

- Install OpenCV 4.5 with CUDA enabled. To do so, download and execute the script found here. Warning: this process will take a few hours and you will need at least 4 GB of swap space.

- Install onnx-tensorrt.

      git clone --recursive https://github.com/onnx/onnx-tensorrt.git
      cd onnx-tensorrt
      mkdir build && cd build
      cmake .. -DCUDA_INCLUDE_DIRS=/usr/local/cuda/include -DTENSORRT_ROOT=/usr/src/tensorrt -DGPU_ARCHS="53"
      make
      sudo make install
      export LD_LIBRARY_PATH=$PWD:$LD_LIBRARY_PATH

- jtop helps to monitor the usage of the Jetson Nano. To install:

      sudo -H pip install -U jetson-stats

  Then reboot the Jetson Nano. You can execute it by running `jtop`.
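The OpenCV build step above needs at least 4 GB of swap space. As a quick pre-flight check, the following sketch reads `/proc/meminfo` (available on Linux) and reports how much swap is configured. The names `swap_total_gib` and `REQUIRED_SWAP_GIB` are illustrative and not part of LiveChess2FEN, and the sketch assumes the 4 GB figure can be read as roughly 4 GiB.

```python
from pathlib import Path

# Taken from the warning in the OpenCV step; the constant name is illustrative.
REQUIRED_SWAP_GIB = 4.0


def swap_total_gib(meminfo_text: str) -> float:
    """Return the SwapTotal value found in /proc/meminfo contents, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            # The line looks like "SwapTotal:  4194300 kB"; the unit is KiB.
            kib = int(line.split()[1])
            return kib / (1024 * 1024)
    return 0.0  # no SwapTotal entry found


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        swap = swap_total_gib(meminfo.read_text())
        # Small tolerance: a swap file can report slightly less than its nominal size.
        enough = swap >= REQUIRED_SWAP_GIB - 0.01
        status = "OK" if enough else "too small for the OpenCV build"
        print(f"Swap: {swap:.2f} GiB ({status})")
    else:
        print("/proc/meminfo not found; this check only works on Linux.")
```

Running the script before starting the build only reads system information and does not change the swap configuration.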

- To upgrade CMake download CMake 3.14.7 and run:

      tar -zxvf cmake-3.14.7.tar.gz
      cd cmake-3.14.7
      sudo ./bootstrap
      sudo make
      sudo make install
      cmake --version

- To install protobuf download protobuf 3.17.3 and run:

      tar -zxvf protobuf-cpp-3.17.3.tar.gz
      cd protobuf-3.17.3
      ./configure
      make
      sudo make install
      sudo ldconfig

- If you get the error message `ImportError: /usr/lib/aarch64-linux-gnu/libgomp.so.1: cannot allocate memory in static TLS block` simply run: `export LD_PRELOAD=/usr/lib/aarch64-linux-gnu/libgomp.so.1`. To fix the error permanently, add that line to the end of your `~/.bashrc` file.

- If you get the error `Illegal instruction (core dumped)` run: `export OPENBLAS_CORETYPE=ARMV8`. To fix the error permanently, add that line to the end of your `~/.bashrc` file.
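Adding an `export` line to `~/.bashrc` by hand works fine; if you script the setup, it helps to avoid appending the same line twice. The sketch below is one way to do that. The helper `ensure_line` is a hypothetical name, not part of LiveChess2FEN.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Append `line` to the file at `path` unless it is already present.

    Returns True if the file was modified, False otherwise.
    """
    existing = path.read_text() if path.exists() else ""
    if line in existing.splitlines():
        return False  # already there; nothing to do
    # Start on a fresh line if the file does not end with a newline.
    prefix = "" if existing == "" or existing.endswith("\n") else "\n"
    with path.open("a") as f:
        f.write(f"{prefix}{line}\n")
    return True
```

For example, `ensure_line(Path.home() / ".bashrc", "export OPENBLAS_CORETYPE=ARMV8")` adds the second workaround once and does nothing on later runs. Only add the line for the error you actually hit, and open a new shell afterwards so the change takes effect.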

Note: You can find a list of version numbers for the Python packages that have been tested to work in the requirements.txt file.

- Install Python 3.6.9 or later and the following dependencies:

  - NumPy

  - OpenCV4

  - Matplotlib

  - scikit-learn

  - pillow

  - pyclipper

  - tqdm

- Depending on the inference engine, install the following dependencies:

  - Keras with TensorFlow backend. Slower than ONNX.

  - ONNX Runtime.

  - (Optional) TensorRT. Fastest available, although trickier to set up.
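To confirm that the dependencies above are in place, a small check like the following can help. It is a sketch, not part of LiveChess2FEN: the import names (`cv2`, `sklearn`, `PIL`, `tensorrt`, and so on) are the usual module names for these packages, the helper names are made up for illustration, and the engine order simply follows the speed remarks in the list. A module being importable does not guarantee that it is correctly configured for your GPU.

```python
import sys
from importlib.util import find_spec

# Display name -> import name (the import name differs from the package name for some).
REQUIRED_MODULES = {
    "NumPy": "numpy",
    "OpenCV4": "cv2",
    "Matplotlib": "matplotlib",
    "scikit-learn": "sklearn",
    "pillow": "PIL",
    "pyclipper": "pyclipper",
    "tqdm": "tqdm",
}

# Inference engines, fastest first according to the list above.
# Each engine needs all of its modules to be importable.
ENGINE_MODULES = [
    ("TensorRT", ("tensorrt",)),
    ("ONNX Runtime", ("onnxruntime",)),
    ("Keras", ("keras", "tensorflow")),
]


def missing_dependencies(modules=REQUIRED_MODULES):
    """Return the display names of dependencies that cannot be found."""
    return [name for name, module in modules.items() if find_spec(module) is None]


def fastest_available_engine(engines=ENGINE_MODULES):
    """Return the name of the first engine whose modules are all found, or None."""
    for name, modules in engines:
        if all(find_spec(m) is not None for m in modules):
            return name
    return None


if __name__ == "__main__":
    if sys.version_info < (3, 6, 9):
        print("Python 3.6.9 or later is required.")
    print("Missing dependencies:", missing_dependencies() or "none")
    print("Fastest available engine:", fastest_available_engine() or "none installed")
```

`find_spec` only looks the module up without importing it, so the check stays fast even when TensorFlow is installed.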

- Create a `selected_models` and a `predictions` folder in the project root.

- Download the prediction models from the releases and save them to the `selected_models` folder.

- Download the contents of `TestImages.zip->FullDetection` from the releases into the `predictions` folder. You should have 5 test images and a `boards.fen` file.

- Edit `test_lc2fen.py` and set the `ACTIVATE_*`, `MODEL_PATH_*`, `IMG_SIZE_*` and `PRE_INPUT_*` constants.

- Run the `test_lc2fen.py` script.
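Before running the test script, it can be worth checking that the folder layout from the steps above is in place. The sketch below creates the two folders if they are missing and summarizes their contents. The function name `prepare_project` and the set of image extensions are assumptions made for illustration; adjust the extensions to match the files you downloaded.

```python
from pathlib import Path

# Assumption: the test images use one of these common extensions.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def prepare_project(root: Path) -> dict:
    """Create selected_models/ and predictions/ under root and summarize them."""
    models = root / "selected_models"
    predictions = root / "predictions"
    models.mkdir(parents=True, exist_ok=True)
    predictions.mkdir(parents=True, exist_ok=True)

    # Count files in predictions/ that look like images.
    images = [p for p in predictions.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    return {
        "model_files": sorted(p.name for p in models.iterdir() if p.is_file()),
        "test_images": len(images),
        "has_boards_fen": (predictions / "boards.fen").is_file(),
    }
```

Calling `prepare_project(Path("."))` from the project root should report 5 test images, `has_boards_fen` set to `True`, and at least one entry in `model_files` once the downloads are complete.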

Contributions are very welcome! Please check the CONTRIBUTING file for more information on how to contribute to LiveChess2FEN.

You can find a non-legal quick summary here: tldrlegal AGPL

Copyright (c) 2020 David Mallasén Quintana

This program is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License along with this program. If not, see http://www.gnu.org/licenses/.