Colorization of low-resolution grayscale images using a Conditional Deep Convolutional Generative Adversarial Network (DCGAN). This is a PyTorch implementation of the conditional DCGAN.

- Python 3.6

- PyTorch

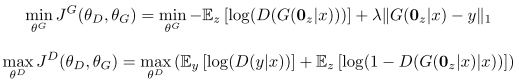

In a traditional GAN, the input of the generator is randomly generated noise data z. This approach does not suit automatic colorization, because the input there is a grayscale image rather than noise, so the generator must be modified to accept grayscale images as inputs. This problem was addressed by using a variant of GAN called the Conditional Generative Adversarial Network. Since no noise is introduced, the input of the generator is treated as zero noise with the grayscale input as a prior. The equations describing the cost functions used to train the conditional DCGAN are as follows:

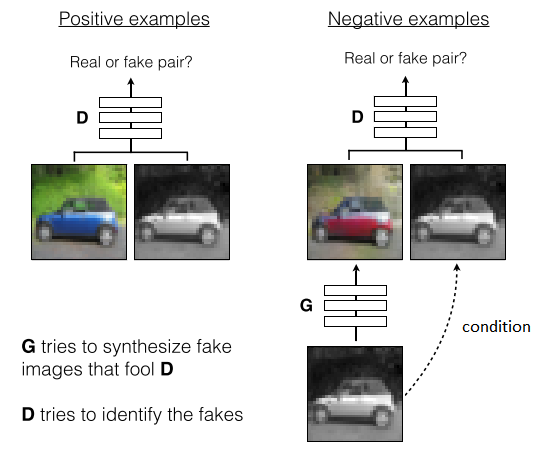
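As a rough illustration of how such cost functions are usually evaluated, the sketch below computes standard conditional GAN losses from discriminator probabilities. Writing x for the grayscale input, y for the true colors and G(0_z | x) for the generator output from zero noise, the discriminator maximizes log D(y | x) + log(1 - D(G(0_z | x) | x)), and the generator minimizes the non-saturating loss -log D(G(0_z | x) | x) plus a weighted L1 distance to the true colors. The L1 term and its weight `l1_weight` (default 100 here is only a placeholder) are assumptions based on common colorization setups; the exact equations used by this repository are the ones in the figure above. Plain Python is used so the arithmetic is easy to follow; a PyTorch version would compute the same quantities on tensors, for example with `torch.nn.BCELoss`.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """Negated discriminator objective, averaged over the batch.

    d_real: D(y | x) for real (grayscale, color) pairs, values in (0, 1).
    d_fake: D(G(0_z | x) | x) for generated pairs, values in (0, 1).
    Minimizing this maximizes log D(y|x) + log(1 - D(G(0_z|x)|x)).
    """
    terms = [
        -(math.log(r + EPS) + math.log(1.0 - f + EPS))
        for r, f in zip(d_real, d_fake)
    ]
    return sum(terms) / len(terms)


def generator_loss(d_fake, fake_ab, real_ab, l1_weight=100.0):
    """Non-saturating adversarial loss plus an (assumed) weighted L1 term.

    d_fake:  discriminator outputs for generated pairs.
    fake_ab: flattened generated color values.
    real_ab: flattened ground-truth color values.
    """
    adv = sum(-math.log(f + EPS) for f in d_fake) / len(d_fake)
    l1 = sum(abs(p - t) for p, t in zip(fake_ab, real_ab)) / len(real_ab)
    return adv + l1_weight * l1
```

A discriminator that outputs 0.5 for every pair cannot tell real from fake, and its loss is 2 ln 2 (about 1.386), the value it would be pushed away from during training.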

The discriminator receives colorized images from both the generator and the original data, each paired with the grayscale input as the condition, and tries to tell which pair contains the true colored image. This is illustrated by the figure below:

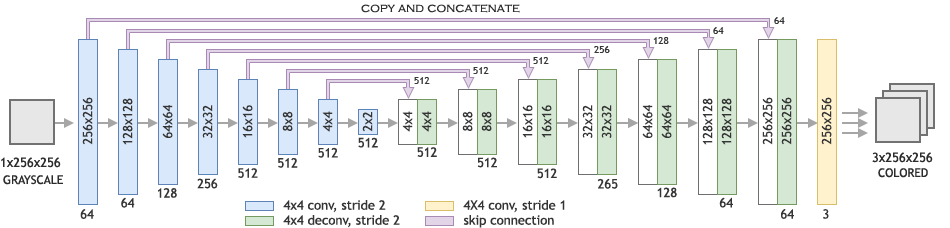
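The pairing described above can be sketched as follows. This is a minimal illustration, assuming channel-first images stored as nested lists and the labels 1.0 for real and 0.0 for fake; the helper names `make_pair` and `discriminator_examples` are hypothetical. In PyTorch the same stacking is typically a `torch.cat` along the channel dimension.

```python
def make_pair(gray, ab):
    """Stack a 1-channel grayscale condition with a 2-channel colorization.

    gray: H x W nested list (the condition).
    ab:   two H x W nested lists (real or generated color channels).
    Returns a 3-channel image in channel-first order.
    """
    if len(ab) != 2:
        raise ValueError("expected exactly two color channels")
    h, w = len(gray), len(gray[0])
    for channel in ab:
        if len(channel) != h or any(len(row) != w for row in channel):
            raise ValueError("color channels must match the grayscale size")
    return [gray, ab[0], ab[1]]


def discriminator_examples(gray, real_ab, fake_ab):
    """Build the two labelled inputs the discriminator sees for one image."""
    return [
        (make_pair(gray, real_ab), 1.0),  # true colors -> label "real"
        (make_pair(gray, fake_ab), 0.0),  # generator output -> label "fake"
    ]
```

Both examples share the same grayscale channel, so the discriminator can only separate them by judging whether the colors are plausible for that particular grayscale image.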

The architecture of the generator is inspired by U-Net: the model is symmetric, with n encoding units and n decoding units. The architecture of the generator is shown below:

For the discriminator, we use an architecture similar to the baseline's contracting path.
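To make the symmetric encoder/decoder structure concrete, the sketch below computes the (input channels, output channels, output size) of each unit for a U-Net-style generator on 32x32 inputs. It is a planning aid under stated assumptions, not the repository's model: each encoder unit is assumed to halve the spatial size and double the channels (capped at `max_channels`), each decoder unit doubles the size, and every decoder unit after the first receives the previous decoder output concatenated with the mirrored encoder output (the skip connection). The function name `unet_plan` and all default values are hypothetical.

```python
def unet_plan(image_size=32, n_units=5, in_channels=1, out_channels=2,
              base_channels=64, max_channels=512):
    """Return (encoder, decoder) lists of (in_ch, out_ch, out_size) tuples."""
    if image_size % (2 ** n_units) != 0:
        raise ValueError("image_size must be divisible by 2**n_units")

    encoder = []
    ch_in, size = in_channels, image_size
    for i in range(n_units):
        ch_out = min(base_channels * 2 ** i, max_channels)
        size //= 2  # e.g. a stride-2 convolution
        encoder.append((ch_in, ch_out, size))
        ch_in = ch_out

    decoder = []
    for j in range(n_units):
        if j == 0:
            ch_in = encoder[-1][1]  # bottleneck output
        else:
            # previous decoder output concatenated with the mirrored encoder output
            ch_in = decoder[-1][1] + encoder[n_units - 1 - j][1]
        # the last unit emits the color channels; others mirror the encoder
        ch_out = out_channels if j == n_units - 1 else encoder[n_units - 2 - j][1]
        size *= 2  # e.g. a stride-2 transposed convolution
        decoder.append((ch_in, ch_out, size))
    return encoder, decoder
```

With the defaults, the encoder shrinks 32x32 down to 1x1 and the decoder restores 32x32 with two output channels. Under the same assumptions, the encoder half alone with `in_channels=3` (the grayscale condition stacked with two color channels) is one way to read "similar to the contracting path" for the discriminator.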

- Clone the source code and run `python3 train.py`.

- It begins by downloading the CIFAR-10 train dataset into the `/data` directory. The CIFAR-10 train dataset consists of 50,000 images of resolution 32x32, distributed equally across 10 classes such as plane, bird, cat, ship, and truck. The images are PIL images in the range [0, 1]. The color space of the images is changed from RGB to CIELAB. Unlike RGB, CIELAB consists of a lightness channel, which contains all of the brightness information, and two chrominance channels, which contain all of the color information. As a result, small perturbations in intensity values do not cause the sudden, simultaneous variations in both color and brightness that they cause in RGB. Finally, a data loader is made over the transformed dataset.

- Training then begins. It runs for 100 epochs, and the generator and discriminator models are saved periodically in the directories `/cifar10_train_generator` and `/cifar10_train_discriminator`, respectively.
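The RGB-to-CIELAB step can be sketched for a single pixel as below, assuming sRGB input in [0, 1] and the D65 reference white; the function name `rgb_to_lab` is hypothetical, and in practice a library routine such as `skimage.color.rgb2lab` converts whole images at once. Presumably the L channel (0 to 100) serves as the generator's grayscale input while a and b are the color targets, though that split is an assumption here.

```python
def _srgb_to_linear(c):
    # Undo the sRGB gamma curve for one channel value in [0, 1].
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    # CIELAB companding function, with a linear segment near zero.
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def rgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in [0, 1]) to CIELAB (D65)."""
    r, g, b = (_srgb_to_linear(c) for c in (r, g, b))
    # Linear sRGB -> CIE XYZ under the D65 illuminant.
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    # Normalize by the D65 reference white, then compand.
    fx, fy, fz = _f(x / 0.95047), _f(y / 1.0), _f(z / 1.08883)
    lightness = 116 * fy - 16      # brightness only
    a = 500 * (fx - fy)            # green (-) to red (+)
    b_star = 200 * (fy - fz)       # blue (-) to yellow (+)
    return lightness, a, b_star
```

Any neutral gray maps to a and b close to zero, which is why the lightness channel alone carries the grayscale image and the two chrominance channels carry only color.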

- To evaluate the model on the CIFAR-10 test dataset, run `python3 eval.py`. It performs the evaluation on the entire test dataset and prints the mean absolute error (MAE: the pixel-wise image distance between the source and the generated images) at the end.
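The MAE metric can be illustrated with a small helper, assuming each image is flattened to an equal-length sequence of pixel values. Which color space and value range `eval.py` uses is not stated here, so the helper is agnostic; the names `mean_absolute_error` and `dataset_mae` are hypothetical.

```python
def mean_absolute_error(source, generated):
    """Average absolute pixel difference between two flattened images."""
    if not source or len(source) != len(generated):
        raise ValueError("images must be non-empty and the same size")
    return sum(abs(s - g) for s, g in zip(source, generated)) / len(source)


def dataset_mae(pairs):
    """Average per-image MAE over (source, generated) pairs.

    When every image has the same number of pixels, as with 32x32 CIFAR-10,
    this equals the MAE computed over all pixels of the test set at once.
    """
    errors = [mean_absolute_error(s, g) for s, g in pairs]
    return sum(errors) / len(errors)
```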

CIFAR-10 is used as the dataset for training. To train the model on the full dataset, download the dataset here.

Suggestions and pull requests are actively welcome.