Work in progress

This repository contains implementations of the following Relational Q-Learning algorithms:

- Relational Regression Tree (RRT) by Džeroski et al. 2001.

- Relational Gradient Boosted Q-Learning (GBQL) as proposed by Das et al. 2020.

- Create a Python virtual environment

      python3 -m venv rql-venv
      source rql-venv/bin/activate

- Get the debug branch of the srlearn fork

      git clone git@github.com:harshakokel/srlearn.git
      cd srlearn
      git checkout --track origin/debug
      pip install -e .
      cd ..

- Get this code

      git clone git@github.com:harshakokel/Relational-Q-Learning.git
      cd Relational-Q-Learning
      pip install -e .

- Run the experiments

      python experiments/rrt_stack.py
      python experiments/gbql_stack.py

Viskit is the recommended way to visualize all the experiment.

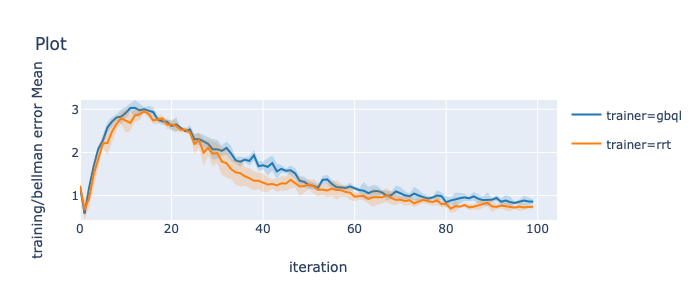

python viskit/frontend.py Relational-Q-learning/data/<exp_prefix>/Here is a sample plot generated by viskit comparing the bellman error of RRT and GBQL for the blocksworld stack environment.
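For readers unfamiliar with the quantity compared in the sample plot, the following is a minimal sketch of a one-step Bellman error computed over a batch of transitions. It assumes a plain dictionary Q-table and a greedy bootstrap target; the names `mean_bellman_error`, `Transition`, and `QTable` are hypothetical and are not taken from this repository, whose RRT and GBQL trainers represent Q-functions relationally and may compute the metric differently.

```python
from typing import Dict, Hashable, List, Tuple

# One transition: (state, action, reward, next_state, done). Hypothetical layout.
Transition = Tuple[Hashable, Hashable, float, Hashable, bool]
QTable = Dict[Tuple[Hashable, Hashable], float]


def mean_bellman_error(q: QTable, batch: List[Transition],
                       actions: List[Hashable], gamma: float = 0.9) -> float:
    """Mean absolute one-step Bellman error of `q` over `batch`."""
    if not batch:
        raise ValueError("batch must contain at least one transition")
    total = 0.0
    for state, action, reward, next_state, done in batch:
        # Bootstrap from the greedy next-state value unless the episode ended.
        next_value = 0.0 if done else max(
            q.get((next_state, a), 0.0) for a in actions
        )
        target = reward + gamma * next_value
        # Unseen (state, action) pairs default to a Q-value of 0.
        total += abs(target - q.get((state, action), 0.0))
    return total / len(batch)


# Toy data, made up for illustration only.
q_table = {("s0", "stack"): 0.5, ("s1", "stack"): 1.0}
transitions = [
    ("s0", "stack", 0.0, "s1", False),
    ("s1", "stack", 1.0, "s2", True),
]
error = mean_bellman_error(q_table, transitions, actions=["stack", "unstack"])
print(f"mean Bellman error: {error:.3f}")
```

A lower value means the Q-estimates are closer to being consistent with their own one-step lookahead targets on the sampled transitions.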

    Relational-Q-Learning
    ├── README.md                       # this file
    ├── core/                           # Relational Q-Learning core code
    |   ├── data_management/            # contains buffer implementation for managing SARS
    |   ├── exploration_strategy/       # strategies for changing epsilon for exploration
    |   ├── learning_rate_strategy/     # strategies for changing learning rate while training
    |   ├── srl/                        # wrapper for BoostSRL call, currently only enables caching
    |   ├── trainer/                    # RRT and GBQL learning algorithms
    |   └── util/                       # logging utility code
    ├── data/                           # results from experiments
    ├── environments/                   # symbolic environments
    |   ├── blocksworld_stack.py        # Blocksworld stack environment
    |   └── ...
    └── experiments/                    # runner files for different experiments

The relational learning part uses the srlearn library by Alex Hayes, which internally uses BoostSRL by Dr. Sriraam Natarajan and his lab. Some of the utility code is taken from Vitchyr Pong's RLkit code. The visualization kit is originally from RL Lab and was extracted by Vitchyr Pong.
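To give a feel for the kind of components that `data_management/` and `exploration_strategy/` are described as holding in the tree above, here is a minimal, generic sketch of a SARS replay buffer and a linear epsilon schedule. The class `ReplayBuffer` and the function `linear_epsilon` are hypothetical illustrations and do not reflect the actual classes or signatures in this repository.

```python
import random
from collections import deque
from typing import Deque, Hashable, List, Tuple

# (state, action, reward, next_state). Hypothetical layout.
Transition = Tuple[Hashable, Hashable, float, Hashable]


class ReplayBuffer:
    """Fixed-capacity store of SARS transitions; oldest entries are evicted first."""

    def __init__(self, capacity: int) -> None:
        self._storage: Deque[Transition] = deque(maxlen=capacity)

    def add(self, transition: Transition) -> None:
        self._storage.append(transition)

    def sample(self, batch_size: int, rng: random.Random) -> List[Transition]:
        # Sample without replacement, capped at the number of stored transitions.
        k = min(batch_size, len(self._storage))
        return rng.sample(list(self._storage), k)

    def __len__(self) -> int:
        return len(self._storage)


def linear_epsilon(step: int, start: float = 1.0, end: float = 0.1,
                   decay_steps: int = 100) -> float:
    """Linearly anneal epsilon from `start` to `end` over `decay_steps` steps."""
    fraction = min(max(step, 0), decay_steps) / decay_steps
    return start + fraction * (end - start)


rng = random.Random(0)
buffer = ReplayBuffer(capacity=2)
for i in range(3):
    # The third add evicts the first transition because capacity is 2.
    buffer.add((f"s{i}", "stack", 0.0, f"s{i + 1}"))
batch = buffer.sample(5, rng)
print(len(buffer), len(batch), linear_epsilon(50))
```

With a schedule like this, an agent explores almost uniformly at the start of training and gradually shifts toward exploiting its learned Q-values.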

[1] Džeroski, S., De Raedt, L., & Driessens, K. "Relational Reinforcement Learning." Machine Learning, 2001.

[2] Das, S., Natarajan, S., Roy, K., Parr, R., & Kersting, K. "Fitted Q-Learning for Relational Domains." International Conference on Principles of Knowledge Representation and Reasoning (KR), 2020.