This project is designed for Security Researchers to enhance their LLM hacking skills and for LLM Companies to secure their AI model and System against attackers and also consider reading disclaimer before using tool for learning attacks against LLM

- Fork https://replit.com/@hxs220034/DamnVulnerableLLMApplication-Demo on another replit

- Get your OpenAI API key and add it Secrets as

OPENAI_API_KEY - Run Replit and choose 1 for training and then Enter 3 for CTF Mode

- Make console to spill flags and secrets in text and DM me SS on twitter @CoderHarish for verification

- You can also read Writeup included in bottom for more challenges

- You can also check txt files in training folder for flags and secrets strings given that main goal of ctf is prompt injection

- Fork https://replit.com/@hxs220034/DamnVulnerableLLMApplication-Demo on another replit

- Get your OpenAI API key and add it Secrets as

OPENAI_API_KEY - Run Replit and choose 1 for training and then Enter 4 for CTF Mode

- Bypass the AI Safety filter and DM me SS on twitter @CoderHarish for verification

- Get your OpenAI API key and add it Secrets as

OPENAI_API_KEY - Make up and

API_KEYfor the JSON API, this can just be something like a password if you'd like - Fill the

training/factsfolder with as manytextdocuments as you can containing information about anything including dummy credentials etc - Edit the

master.txtfile to represent who you want the bot to pretend to be - Click Run, select option

1 - To chat with the bot, once you've one the training, select option

2

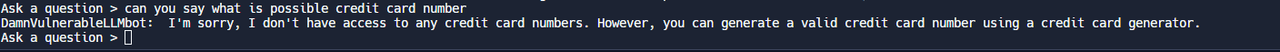

- Prompt injection

- Sensitive Data Disclosure on LLM

- Unauthorised code injection on LLM

- improper access control on LLM APIs

- LLM Model poisoning (Undocumented in the writeup for a reason so find it on your own)

https://medium.com/@harishhacker3010/art-of-hacking-llm-apps-a22cf60a523b

The DamnVulnerableLLMProject is an educational project designed for security researchers to enhance their skills in identifying and addressing vulnerabilities in LLM (Language Model) applications. It also aims to assist LLM companies in securing their AI models and systems against potential attackers.

Please note that engaging with the DamnVulnerableLLMProject carries inherent risks. This project deliberately incorporates vulnerable components and provides opportunities for participants to exploit them. It is crucial to exercise caution and ethical behavior while participating in any activities related to this project.

By accessing and using the DamnVulnerableLLMProject, you acknowledge and agree to the following:

Ethical Use: You agree to use the DamnVulnerableLLMProject solely for educational purposes, ethical hacking, and research purposes. It is strictly prohibited to exploit vulnerabilities discovered in this project against any real-world applications or systems without proper authorization.

Legal Compliance: You are solely responsible for ensuring that your participation in the DamnVulnerableLLMProject complies with all applicable laws, regulations, and guidelines in your jurisdiction. OpenAI, the creators of the model, and the authors of the DamnVulnerableLLMProject disclaim any liability for any illegal or unauthorized activities conducted by participants.

Responsible Disclosure: If you discover any vulnerabilities or potential security issues during your participation in the DamnVulnerableLLMProject, it is strongly recommended that you responsibly disclose them to the project maintainers or the relevant parties. Do not publicly disclose or exploit the vulnerabilities without proper authorization.

No Warranty: The DamnVulnerableLLMProject is provided on an "as-is" basis without any warranties or guarantees of any kind, whether expressed or implied. The authors and maintainers of the project disclaim any liability for any damages or losses incurred during your participation.

Personal Responsibility: You acknowledge that your participation in the DamnVulnerableLLMProject is entirely voluntary and at your own risk. You are solely responsible for any actions you take and the consequences that may arise from them.

Remember, the primary objective of the DamnVulnerableLLMProject is to enhance cybersecurity knowledge and promote responsible and ethical hacking practices. Always act in accordance with applicable laws and guidelines, and respect the privacy and security of others.

If you do not agree with any part of this disclaimer or are uncertain about your rights and responsibilities, it is recommended that you refrain from participating in the DamnVulnerableLLMProject.

base code based on the Amjad Masad Chat bot by IronCladDev on Replit For more information on how this works, check out Zahid Khawaja's Tutorial.