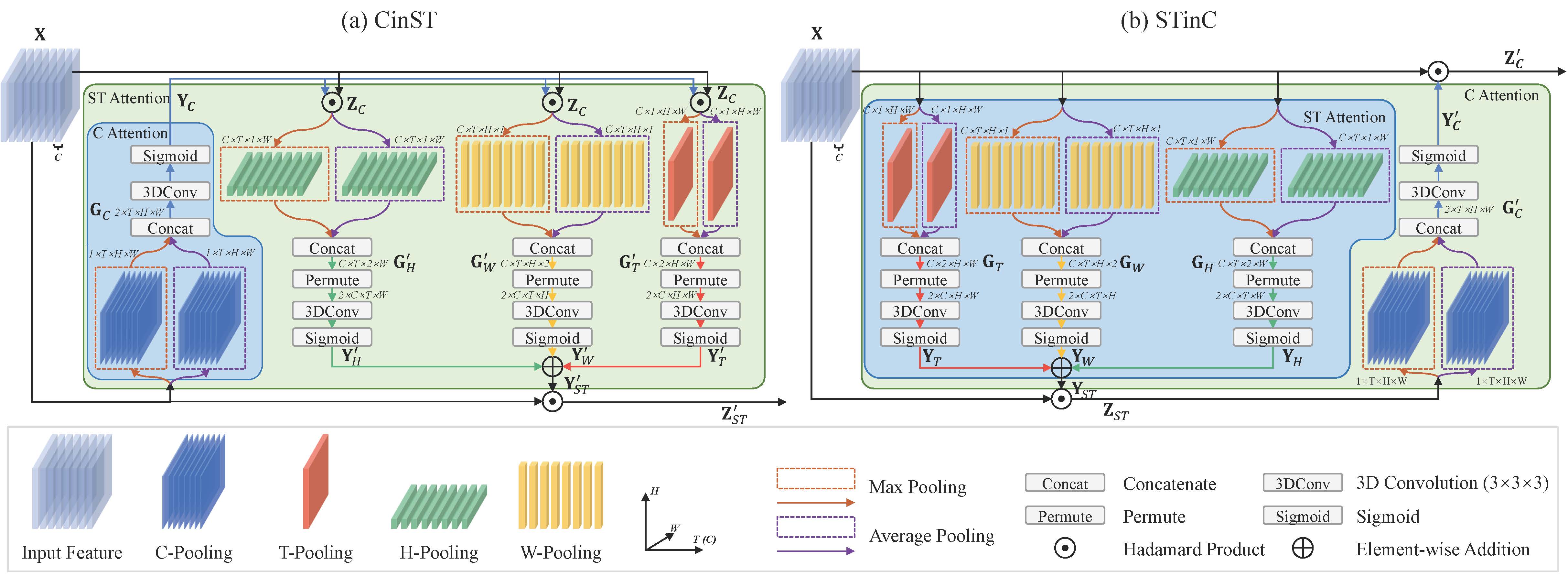

Attention in Attention: Modeling Context Correlation for Efficient Video Classification (IEEE TCSVT 2022)

This is the official implementation of the paper "Attention in Attention: Modeling Context Correlation for Efficient Video Classification", which has been accepted by IEEE TCSVT 2022. Paper link

- The V1 version (the version used in the paper) has been released to the public. The complete code and models will be released soon.

The code is built with the following libraries:

- PyTorch >= 1.7, torchvision

- tensorboardX
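As a quick sanity check, the requirements above can be verified from Python before running anything else. The sketch below is not part of this repository: the helper names `parse_version` and `check_environment` are hypothetical, and it assumes each package exposes a `__version__` attribute.

```python
import importlib
import re


def parse_version(text):
    """Return (major, minor) from a version string such as '1.7.1+cu110'."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def check_environment(min_torch=(1, 7)):
    """Report installed versions and whether PyTorch meets the minimum."""
    versions = {}
    for name in ("torch", "torchvision", "tensorboardX"):
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None  # package is not installed
        else:
            # Assumption: the package exposes __version__.
            versions[name] = getattr(module, "__version__", "unknown")
    torch_version = versions["torch"]
    torch_ok = False
    if torch_version not in (None, "unknown"):
        torch_ok = parse_version(torch_version) >= min_torch
    return versions, torch_ok
```

Calling `check_environment()` returns a dictionary of detected versions (with `None` for a missing package) and a boolean that says whether the installed PyTorch satisfies the `>= 1.7` requirement.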

For video data pre-processing, you may need ffmpeg.
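Frame extraction with ffmpeg can be scripted along the lines of the sketch below. It is an illustration and not this repository's own pipeline: the function names, the output pattern `img_%05d.jpg`, and the JPEG quality value are assumptions.

```python
import shutil
import subprocess
from pathlib import Path


def build_extract_command(video_path, out_dir, quality=2):
    """Build an ffmpeg command that dumps the frames of a video as JPEGs."""
    # Hypothetical naming scheme: img_00001.jpg, img_00002.jpg, ...
    pattern = str(Path(out_dir) / "img_%05d.jpg")
    return [
        "ffmpeg",
        "-i", str(video_path),   # input video
        "-q:v", str(quality),    # JPEG quality scale (lower means better)
        pattern,
    ]


def extract_frames(video_path, out_dir, quality=2):
    """Run ffmpeg to extract frames; raises if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_extract_command(video_path, out_dir, quality), check=True)
```

For example, `extract_frames("video.mp4", "frames/video")` would write the frames into `frames/video/`; both paths are placeholders.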

Here we provide some of the pretrained models.

| Model | Frame * view * clip | Top-1 Acc. | Top-5 Acc. | Checkpoint |
|---|---|---|---|---|
| AIA(TSN) ResNet50 | 8 * 1 * 1 | 48.5% | 77.2% | link |

| Model | Frame * view * clip | Top-1 Acc. | Top-5 Acc. | Checkpoint |
|---|---|---|---|---|
| AIA(TSN) ResNet50 | 8 * 1 * 1 | 60.3% | 86.4% | link |

| Model | Frame * view * clip | Top-1 Acc. | Checkpoint |
|---|---|---|---|
| AIA(TSN) ResNet50 | 8 * 1 * 1 | 79.3% | link |
| AIA(TSM) ResNet50 | 8 * 1 * 1 | 79.4% | link |

| Model | Frame * view * clip | Split1 | Split2 | Split3 |
|---|---|---|---|---|
| AIA(TSN) ResNet50 | 8 * 1 * 1 | 63.7% | 62.1% | 61.5% |
| AIA(TSN) ResNet50 | 8 * 1 * 1 | 64.7% | 63.3% | 62.2% |

GC codes are jointly written and owned by Dr. Yanbin Hao and Dr. Shuo Wang.

Thanks to the following GitHub projects: