This repository contains the code and implementation details for the research paper "Neural Network Diffusion." The paper shows that diffusion models, best known for image and video generation, can also generate high-performing neural network parameters.

- Kai Wang<sup>1</sup>, Zhaopan Xu<sup>1</sup>, Yukun Zhou, Zelin Zang<sup>1</sup>, Trevor Darrell<sup>3</sup>, Zhuang Liu<sup>2*</sup>, and Yang You<sup>1*</sup> (* equal advising)

- <sup>1</sup>National University of Singapore, <sup>2</sup>Meta AI, and <sup>3</sup>University of California, Berkeley

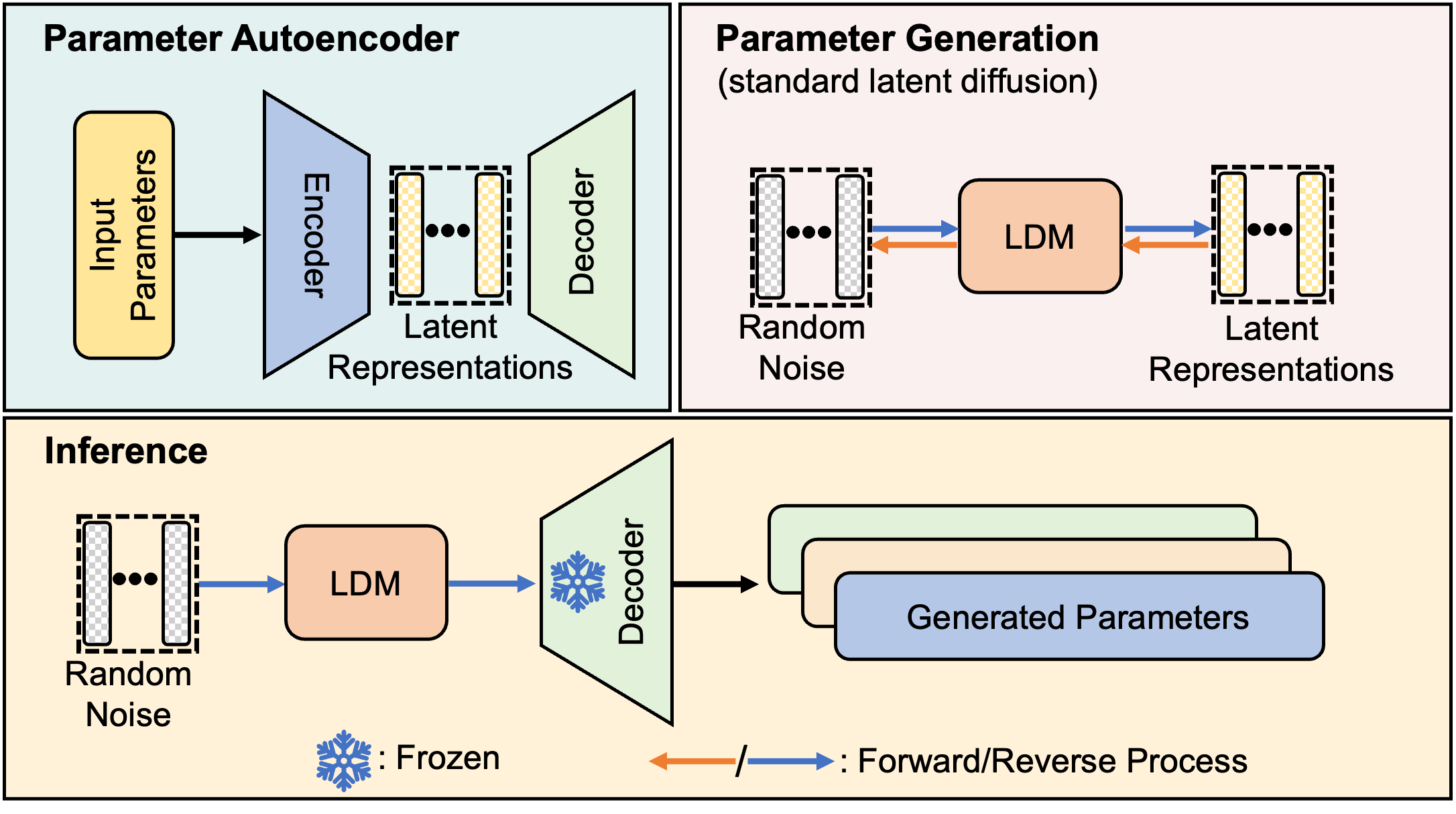

Abstract: Diffusion models have achieved remarkable success in image and video generation. In this work, we demonstrate that diffusion models can also generate high-performing neural network parameters. Our approach is simple, utilizing an autoencoder and a standard latent diffusion model. The autoencoder extracts latent representations of the trained network parameters. A diffusion model is then trained to synthesize these latent parameter representations from random noise. It then generates new representations that are passed through the autoencoder's decoder, whose outputs are ready to use as new sets of network parameters. Across various architectures and datasets, our diffusion process consistently generates models of comparable or improved performance over SGD-trained models, with minimal additional cost. Our results encourage more exploration on the versatile use of diffusion models.
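The "standard latent diffusion model" in the abstract is trained by corrupting latent codes with Gaussian noise and learning to undo it. A minimal, dependency-free sketch of the standard DDPM forward (noising) process, z_t = sqrt(ᾱ_t) z_0 + sqrt(1 − ᾱ_t) ε, applied to one latent vector is shown below. It assumes a linear β schedule from 1e-4 to 2e-2 over 1,000 steps; the schedule, step count, helper names and latent values are illustrative assumptions, not this repository's configuration.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(z0, t, alpha_bars, rng):
    """Draw z_t ~ q(z_t | z_0) = N(sqrt(alpha_bar_t) * z_0, (1 - alpha_bar_t) * I)."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(z0, noise)]
    # A denoising network would be trained to predict `noise` from (zt, t).
    return zt, noise


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
z0 = [0.5, -1.2, 0.3, 0.8]  # hypothetical stand-in for an autoencoder latent of one parameter set
zt, eps = q_sample(z0, t=500, alpha_bars=alpha_bars, rng=rng)
```

At generation time the trained denoiser runs this process in reverse, starting from pure noise, and the resulting latent is passed through the autoencoder's decoder to obtain a new parameter set.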

- Clone the repository:

```bash
git clone https://github.com/NUS-HPC-AI-Lab/Neural-Network-Diffusion.git
cd Neural-Network-Diffusion
```

- Create a new Conda environment and activate it:

```bash
conda env create -f environment.yml
conda activate pdiff
```

Alternatively, install the required packages with pip:

```bash
pip install -r requirements.txt
```

To generate ResNet-18 parameters for CIFAR-100, run the script:

```bash
bash ./cifar100_resnet18_k200.sh
```

The script runs in two stages: the first trains the task model and collects its parameters, and the second trains and tests the generative model on those collected parameters.
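The first stage produces the generative model's training data: a collection of trained parameter sets, each treated as one flat vector. The sketch below assumes parameters are stored as plain Python lists keyed by name with known shapes; the helpers `flatten_params` and `unflatten_params` and the toy `bn.*` entries are hypothetical illustrations, not this repository's API. It shows how K saved checkpoints could be turned into vectors and mapped back to named parameters.

```python
from typing import Dict, List, Tuple

Shape = Tuple[int, ...]


def numel(shape: Shape) -> int:
    """Number of scalar entries in a tensor of the given shape."""
    n = 1
    for d in shape:
        n *= d
    return n


def flatten_params(params: Dict[str, List[float]], shapes: Dict[str, Shape]) -> List[float]:
    """Concatenate named parameter buffers, in sorted-name order, into one vector."""
    vec: List[float] = []
    for name in sorted(params):
        values = params[name]
        if len(values) != numel(shapes[name]):
            raise ValueError(f"{name}: expected {numel(shapes[name])} values, got {len(values)}")
        vec.extend(values)
    return vec


def unflatten_params(vec: List[float], shapes: Dict[str, Shape]) -> Dict[str, List[float]]:
    """Split a vector back into named buffers using the same sorted-name order."""
    out: Dict[str, List[float]] = {}
    offset = 0
    for name in sorted(shapes):
        n = numel(shapes[name])
        out[name] = vec[offset:offset + n]
        offset += n
    if offset != len(vec):
        raise ValueError("vector length does not match the parameter shapes")
    return out


# Hypothetical toy example: K = 2 checkpoints of a single normalization layer.
shapes = {"bn.weight": (3,), "bn.bias": (3,)}
checkpoints = [
    {"bn.weight": [1.0, 1.0, 1.0], "bn.bias": [0.0, 0.1, 0.2]},
    {"bn.weight": [0.9, 1.1, 1.0], "bn.bias": [0.0, 0.1, 0.3]},
]
train_set = [flatten_params(ckpt, shapes) for ckpt in checkpoints]
```

Sorting the names fixes one canonical ordering, so a generated vector can be decoded back into the same named layout it was flattened from.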

We use Hydra for configuration management. Settings can be changed either by editing the config files or by overriding them on the command line.

For example, to train ResNet-18 on CIFAR-10 instead, change the `data` section of the config file configs/task/cifar100.yaml from:

```yaml
data:
  root: cifar100 path
  dataset: cifar100
  batch_size: 64
  num_workers: 1
```

to:

```yaml
data:
  root: cifar10 path
  dataset: cifar10
  batch_size: 64
  num_workers: 1
```

where `root` points to the local dataset directory.

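Alternatively, you can override settings on the command line. For example, to change K, the number of trained models whose parameters serve as training data, run (replacing xxx with the desired value):

```bash
python train_p_diff.py task.param.k=xxx
```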
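The command-line form uses Hydra's dotted-key override syntax: `task.param.k=xxx` addresses the key `k` nested under `param` in the `task` config. The sketch below only illustrates that addressing on a plain nested dictionary; `apply_override` and the sample config values are hypothetical and are neither Hydra's implementation nor this repository's actual defaults.

```python
import copy
from typing import Any, Dict


def apply_override(cfg: Dict[str, Any], override: str) -> Dict[str, Any]:
    """Return a copy of cfg with one dotted `key.path=value` override applied.

    Illustrative only: Hydra's real override parser also handles floats,
    booleans, lists, `+`/`~` prefixes and config-group selection,
    none of which is modeled here.
    """
    key_path, raw_value = override.split("=", 1)
    keys = key_path.split(".")
    new_cfg = copy.deepcopy(cfg)  # leave the caller's config untouched
    node = new_cfg
    for key in keys[:-1]:
        node = node[key]  # raises KeyError if the path does not already exist
    # Minimal type coercion: integers stay integers, everything else is a string.
    try:
        value: Any = int(raw_value)
    except ValueError:
        value = raw_value
    node[keys[-1]] = value
    return new_cfg


# Hypothetical config mirroring the keys mentioned in this README.
cfg = {"task": {"param": {"k": 200}, "data": {"dataset": "cifar100", "batch_size": 64}}}
updated = apply_override(cfg, "task.param.k=50")
```

Copying before mutation mirrors the fact that a command-line override affects only that run, not the config file on disk.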

If you find our work useful, please consider citing it:

```bibtex
@misc{wang2024neural,
  title={Neural Network Diffusion},
  author={Kai Wang and Zhaopan Xu and Yukun Zhou and Zelin Zang and Trevor Darrell and Zhuang Liu and Yang You},
  year={2024},
  eprint={2402.13144},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
```

We thank Kaiming He, Dianbo Liu, Mingjia Shi, Zheng Zhu, Bo Zhao, Jiawei Liu, Yong Liu, Ziheng Qin, Zangwei Zheng, Yifan Zhang, Xiangyu Peng, Hongyan Chang, Zirui Zhu, David Yin, Dave Zhenyu Chen, Ahmad Sajedi and George Cazenavette for valuable discussions and feedback.