[Project Page] [Data] [Model Zoo]

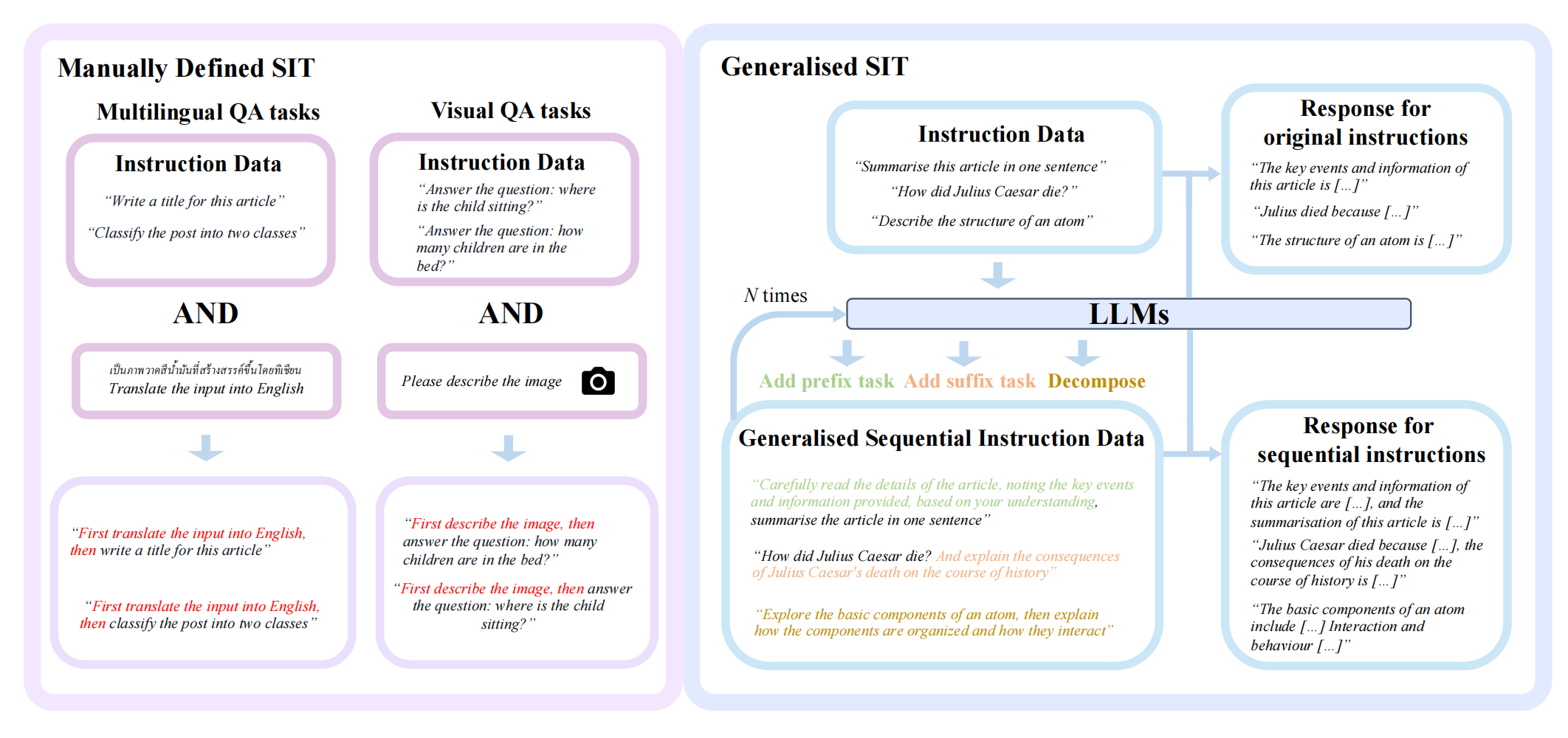

This repository contains the code to replicate the sequential instruction tuning experiments in the paper "SIT: Fine-tuning Large Language Models with Sequential Instructions" (arXiv:2403.07794).

Our implementation is based on the Open-Instruct and LAVIS repositories.

For text-only experiments:

```bash
# Prepare environment
conda create -n seq_ins python=3.8
conda activate seq_ins
bash setup.sh
```

For vision-language experiments:

```bash
cd LAVIS
conda create -n seq_ins_vl python=3.8
conda activate seq_ins_vl
pip install -e .
```

Next, prepare the training and evaluation data:

Download the sequential instruction data for training here, then move it to `self-seq/data/`.
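The move step can be scripted. The sketch below is a hypothetical helper, not part of this repository; it assumes the downloaded files sit flat in a single directory and that the training scripts read from `self-seq/data/` as described above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper (not part of this repo): move downloaded training files
# into the directory the training scripts read from.
#   $1: directory holding the downloaded files (assumption: wherever you saved them)
#   $2: target directory, defaulting to self-seq/data
stage_training_data() {
  local download_dir="$1"
  local target_dir="${2:-self-seq/data}"
  mkdir -p "$target_dir"
  local f
  for f in "$download_dir"/*; do
    # Skip subdirectories and the unexpanded glob left by an empty directory.
    if [ -f "$f" ]; then
      mv "$f" "$target_dir"/
    fi
  done
  # Fail loudly if nothing ended up in the target directory.
  if [ -z "$(ls -A "$target_dir")" ]; then
    echo "no training files found in $target_dir" >&2
    return 1
  fi
}
```

For example, `stage_training_data ~/Downloads/sit_data` (the download path is only an illustration) moves the files into `self-seq/data/` and returns a non-zero status if that directory is still empty afterwards.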

Download the evaluation data by running:

```bash
bash scripts/prepare_eval_data.sh
```

For vision-language data:

```bash
cd LAVIS
bash download_vqa.sh
```

From the repository root, convert the original instruction tuning dataset to its sequential version:

```bash
bash self-seq/scripts/generation_flancot_llama_70b.sh
```

To train on both the sequential and the original instruction data, specify your preferred LLM and the path to the training dataset in `scripts/alpaca/finetune_accelerate_sit_llama_70b.sh`, then run:

```bash
bash scripts/alpaca/finetune_accelerate_sit_llama_70b.sh
```

To train on vision-language data, first specify the pre-trained checkpoint in `./LAVIS/lavis/configs/models/blip2`.
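To find the lines that hold checkpoint paths, one option is to search the BLIP-2 model configs. This is a hypothetical helper; the key names `pretrained` and `llm_model` are assumptions based on LAVIS's InstructBLIP configs and may not match every file in that directory.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print file, line number, and value of checkpoint-related
# keys in the BLIP-2 model configs. The key names `pretrained` and `llm_model`
# are assumptions; adjust the regex if your configs use different names.
show_checkpoint_fields() {
  local config_dir="${1:-./LAVIS/lavis/configs/models/blip2}"
  # -r recurse, -n show line numbers, -E extended regex, --include only YAML files
  grep -rnE --include='*.yaml' '^[[:space:]]*(pretrained|llm_model):' "$config_dir"
}
```

Running `show_checkpoint_fields` from the repository root lists candidate lines to edit; grep exits with a non-zero status when no key matches, which usually means the key names differ from the assumed ones.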

Then specify the output model path in `./LAVIS/lavis/projects/instructblip/caption_coco_vicuna7b_train.yaml` and run, from the `LAVIS` directory:

```bash
bash run_scripts/blip2/train/eval_instruct_caption_coco.sh
```

For evaluation, first prepare the evaluation datasets if you have not already done so:

```bash
bash scripts/prepare_eval_data.sh
```

Then run evaluation on all general and sequential tasks, replacing `YOUR_MODEL_NAME` with the path to your trained model:

```bash
bash scripts/evaluation.sh YOUR_MODEL_NAME
```

Please consider citing us if you use our materials.

```bibtex
@article{hu2024fine,
  title={Fine-tuning Large Language Models with Sequential Instructions},
  author={Hu, Hanxu and Yu, Simon and Chen, Pinzhen and Ponti, Edoardo M},
  journal={arXiv preprint arXiv:2403.07794},
  year={2024}
}
```