ask_openai.py – call the OpenAI API (fill in your API key first)

ask_ollama.py – call the local Ollama API (localhost:11434)

Required Python packages:

- prompt_toolkit (input)

- openai (ask_openai.py)

- requests (ask_ollama.py)

- rich (output)
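Both scripts share the same pattern: read a question with prompt_toolkit, send it to a model, and print the answer with rich. Below is a minimal sketch of that loop. It assumes the answer is Markdown text, and `run_chat` is a hypothetical name, not necessarily how the scripts are organized. The input and output functions can be swapped out, and prompt_toolkit and rich are only imported when the defaults are used.

```python
from typing import Callable, Optional


def run_chat(
    ask: Callable[[str], str],
    read_line: Optional[Callable[[str], str]] = None,
    show: Optional[Callable[[str], None]] = None,
) -> None:
    """Hypothetical chat loop: ask questions until Ctrl-D or Ctrl-C."""
    if read_line is None:
        # prompt_toolkit provides line editing for the input prompt
        from prompt_toolkit import prompt as read_line
    if show is None:
        # rich renders the (assumed Markdown) answer in the terminal
        from rich.console import Console
        from rich.markdown import Markdown

        console = Console()

        def show(text: str) -> None:
            console.print(Markdown(text))

    while True:
        try:
            question = read_line("> ")
        except (EOFError, KeyboardInterrupt):
            break  # Ctrl-D or Ctrl-C ends the session
        if question.strip():  # skip empty input
            show(ask(question))
```

Call it as `run_chat(ask)`, where `ask` is any function that takes a question string and returns the answer text.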

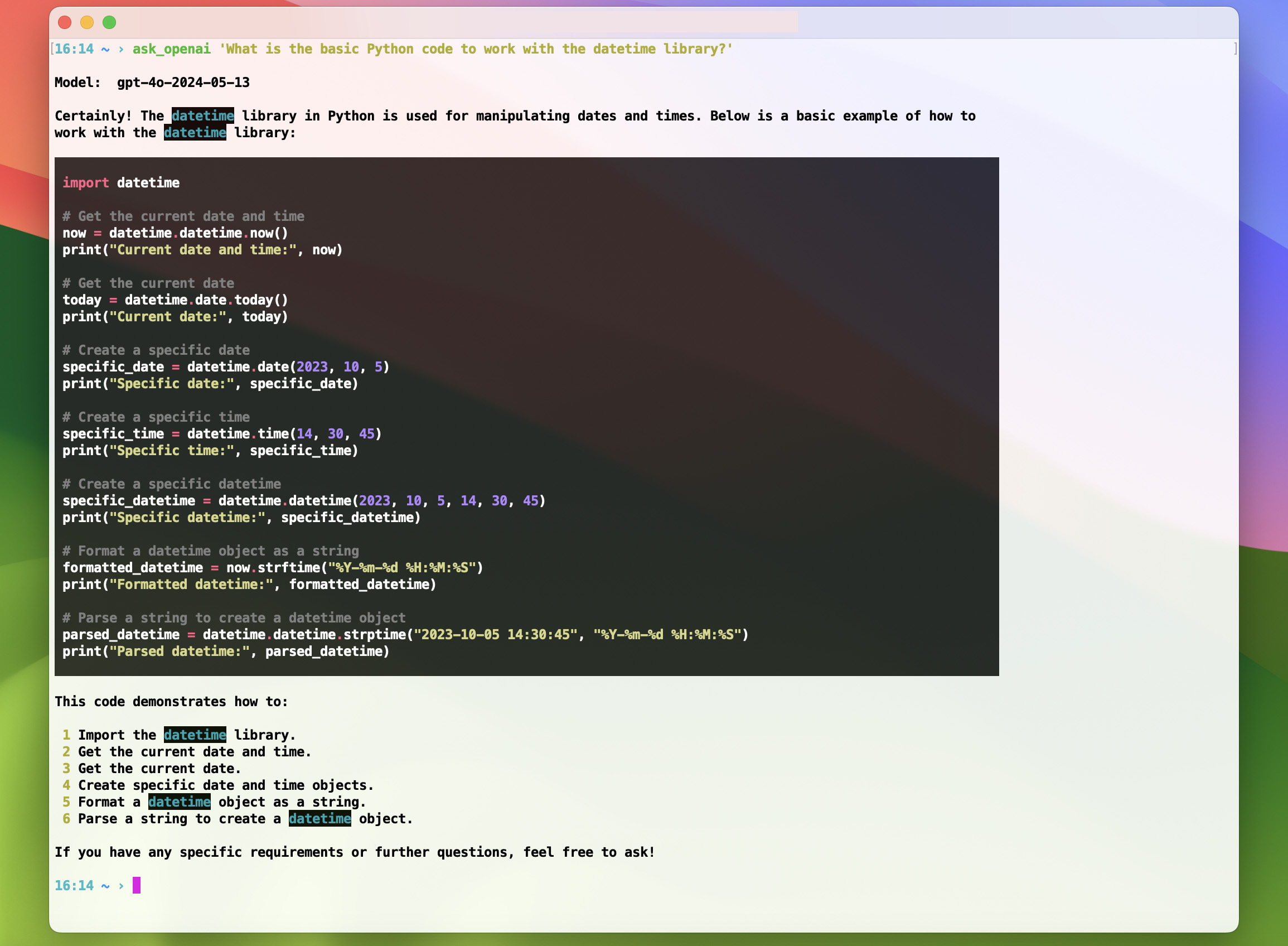

ask_openai.py uses the openai Python library to call the OpenAI API, so an internet connection is required. Before running the script, insert your API key by editing the `my_api_key = 'sk-***'` line.

To use a different OpenAI model, edit the `gpt_model = 'gpt-4o'` line. See also: platform.openai.com/docs/models.
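Below is a minimal sketch of the kind of request ask_openai.py sends. It assumes the openai 1.x client interface (`OpenAI(...).chat.completions.create`). The names `build_messages` and `ask_openai` are hypothetical and may not match the script. The `my_api_key` and `gpt_model` lines are the two settings described above.

```python
my_api_key = 'sk-***'  # placeholder: insert your own API key here
gpt_model = 'gpt-4o'   # see platform.openai.com/docs/models


def build_messages(question: str) -> list:
    """Hypothetical helper: a chat history with a single user turn."""
    return [{"role": "user", "content": question}]


def ask_openai(question: str) -> str:
    """Send one question to the OpenAI chat API and return the reply text."""
    # Imported here so build_messages works even without openai installed
    from openai import OpenAI

    client = OpenAI(api_key=my_api_key)
    response = client.chat.completions.create(
        model=gpt_model,
        messages=build_messages(question),
    )
    # The reply text is in the first choice's message
    return response.choices[0].message.content
```

With a valid key in place, `print(ask_openai("Why is the sky blue?"))` prints the model's answer.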

ask_ollama.py uses the requests Python library to call the local Ollama API (Ollama is based on llama.cpp). Once a model has been downloaded, no internet connection is required. Install Ollama first and start the local server (localhost:11434). To download a model, use the `ollama run <model>` command, which fetches the model on first use.
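Below is a minimal sketch of a non-streaming call to Ollama's `/api/generate` endpoint with requests. It assumes the server is running on localhost:11434 and that the model (here `llama3.1`) has already been downloaded. The names `build_payload` and `ask_ollama` are hypothetical, and the real script may use a different endpoint or stream the reply.

```python
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> dict:
    # "stream": False asks Ollama for a single JSON reply instead of chunks
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, model: str = "llama3.1") -> str:
    """Send one prompt to the local Ollama server and return the reply text."""
    # Imported here so build_payload works even without requests installed
    import requests

    response = requests.post(
        OLLAMA_URL, json=build_payload(model, prompt), timeout=300
    )
    response.raise_for_status()
    # The generated text is in the "response" field of the JSON reply
    return response.json()["response"]
```

A generous timeout is used because a local model can take a while to load and answer on modest hardware.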

As of July 2024, the best general-purpose LLMs for computers with 8–16 GB of RAM are gemma2 by Google (9B parameters, 5.4 GB), llama3.1 by Meta (8B, 4.7 GB), phi3 by Microsoft (4B, 2.2 GB), and mistral by Mistral AI (7B, 4.1 GB). See also: ollama.com/models.
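To see which of these models are already installed, you can query the local server's `/api/tags` endpoint, which lists downloaded models with names like `llama3.1:latest`. Below is a minimal sketch. `RECOMMENDED`, `installed_base_names`, `missing_models` and `fetch_tags` are hypothetical helpers, and comparing models by the name before the `:` tag is a simplifying assumption.

```python
RECOMMENDED = ["gemma2", "llama3.1", "phi3", "mistral"]


def installed_base_names(tags: dict) -> set:
    """Strip the ':tag' suffix, e.g. 'llama3.1:latest' -> 'llama3.1'."""
    return {m["name"].split(":", 1)[0] for m in tags.get("models", [])}


def missing_models(tags: dict, wanted=RECOMMENDED) -> list:
    """Return the wanted model names that are not installed yet."""
    have = installed_base_names(tags)
    return [name for name in wanted if name not in have]


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Ask the local Ollama server which models are installed."""
    import requests  # imported here so the helpers above need no network

    response = requests.get(f"{base_url}/api/tags", timeout=10)
    response.raise_for_status()
    return response.json()
```

For example, `for name in missing_models(fetch_tags()): print(f"ollama run {name}")` prints the command for each recommended model that is not downloaded yet.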

Example: