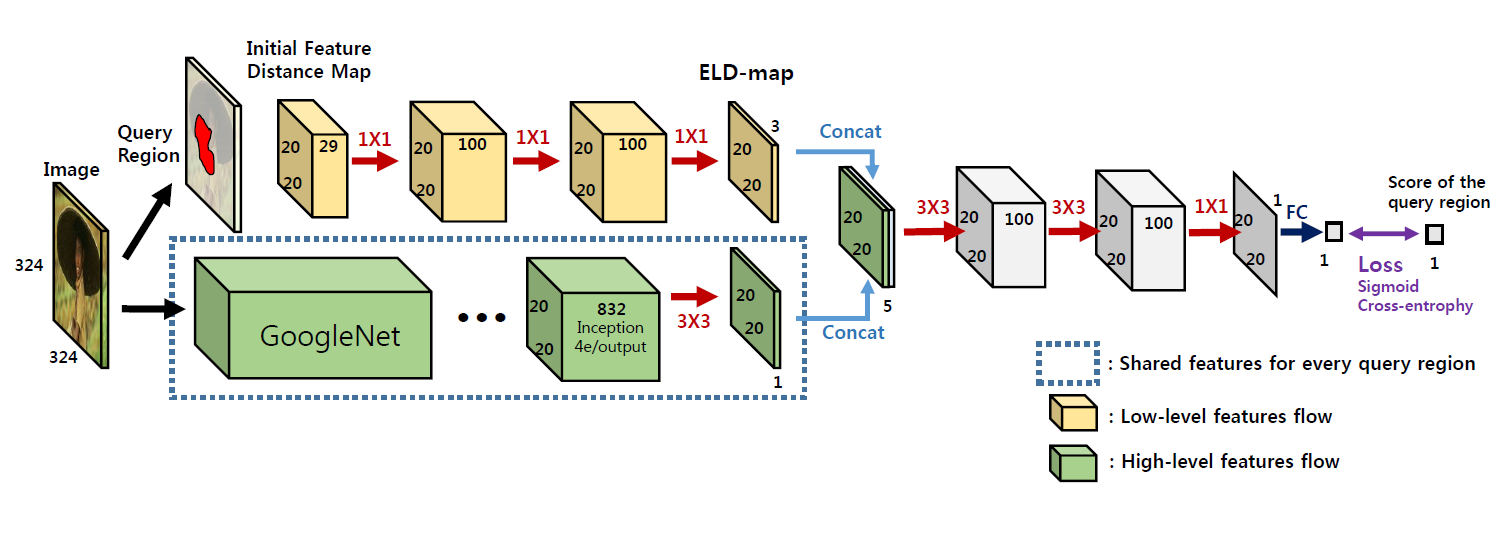

Source code for our journal submission "ELD-Net: An efficient deep learning architecture for accurate saliency detection", which is an extended version of our CVPR 2016 paper "Deep Saliency with Encoded Low level Distance Map and High Level Features" by Gayoung Lee, Yu-Wing Tai and Junmo Kim ([arXiv link for the CVPR paper](http://arxiv.org/abs/1604.05495)).

Acknowledgement: Our code uses several libraries: Caffe, OpenCV and Boost. We use gSLICr with minor modifications for superpixel generation.


Dependencies

0. OS: Our code was tested on Ubuntu 14.04.
0. CMake: Tested with CMake 2.8.12.
0. Caffe: The version of Caffe we used is included in this repository.
0. OpenCV 3.0: We used OpenCV 3.0, but the code may work with OpenCV 2.4.x.
0. g++: Our code was tested with g++ 4.9.2.
0. Boost: Tested with Boost 1.46.
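To compare a local toolchain against the tested versions listed above, a small check along the following lines may help. This is only a sketch: `version_ge` and `report_tool` are hypothetical helpers that are not part of this repository, the comparison relies on the `-V` option of GNU `sort`, and a newer version than the tested one is not guaranteed to work.

```shell
#!/usr/bin/env bash
# Sketch: report the build tools found on this machine so they can be
# compared with the tested versions. The helpers below are hypothetical
# and not part of the repository.

# Succeeds when version $1 is greater than or equal to version $2.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Print the first line of "<tool> --version", or a note if the tool is missing.
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: $("$1" --version 2>&1 | head -n 1)"
    else
        echo "$1: not found"
    fi
}

report_tool cmake
report_tool g++

# Example comparison against the CMake version the code was tested with.
if command -v cmake >/dev/null 2>&1; then
    found="$(cmake --version | head -n 1 | awk '{print $3}')"
    if version_ge "$found" 2.8.12; then
        echo "CMake $found is at least the tested version 2.8.12"
    else
        echo "CMake $found is older than the tested version 2.8.12"
    fi
fi
```

OpenCV and Boost are libraries rather than command-line tools, so how to query their versions depends on how they were installed; that part is left out here.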


Installation

0. Download our pretrained model from (Link1) or (Link2)


Build Caffe in the project folder using CMake:

    cd $(PROJECT_ROOT)/caffe/
    mkdir build
    cd build/
    cmake ..
    make -j4


Change library paths in $(PROJECT_ROOT)/CMakeLists.txt for your custom environment and build our test code:

    cd $(PROJECT_ROOT)
    # edit CMakeLists.txt to set the library paths
    cmake .
    make


Run the executable with four arguments (arg1: prototxt path, arg2: model binary path, arg3: images directory, arg4: results directory):

    ./get_results ELDNet_test.prototxt ELDNet_80K.caffemodel test_images/ results/
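Because the executable takes four positional paths, it can be convenient to validate them before starting a run. The sketch below shows one way to do that. `check_inputs` is a hypothetical helper, not part of this repository, and creating the results directory in advance is an assumption made as a precaution: this README does not say whether `get_results` creates it.

```shell
#!/usr/bin/env bash
# Sketch of a pre-flight check for the four arguments of ./get_results.
# check_inputs is a hypothetical helper, not part of the repository.

# Usage: check_inputs <prototxt> <model> <images_dir> <results_dir>
# Returns 0 when the inputs look usable, 1 otherwise.
check_inputs() {
    if [ "$#" -ne 4 ]; then
        echo "expected 4 arguments, got $#" >&2
        return 1
    fi
    [ -f "$1" ] || { echo "prototxt not found: $1" >&2; return 1; }
    [ -f "$2" ] || { echo "model binary not found: $2" >&2; return 1; }
    [ -d "$3" ] || { echo "images directory not found: $3" >&2; return 1; }
    # Assumption: creating the results directory up front is harmless; the
    # README does not state whether get_results creates it on its own.
    mkdir -p "$4"
}

# Example use (left commented out so that sourcing this file runs nothing):
# check_inputs ELDNet_test.prototxt ELDNet_80K.caffemodel test_images/ results/ \
#     && ./get_results ELDNet_test.prototxt ELDNet_80K.caffemodel test_images/ results/
```

Chaining with `&&` means the executable only starts when every path has been found.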


The results will be written to the results directory given as the fourth argument.


For convenience, we provide our results on the benchmark datasets used in the paper. Link1 is hosted on Dropbox and link2 on Baidu.

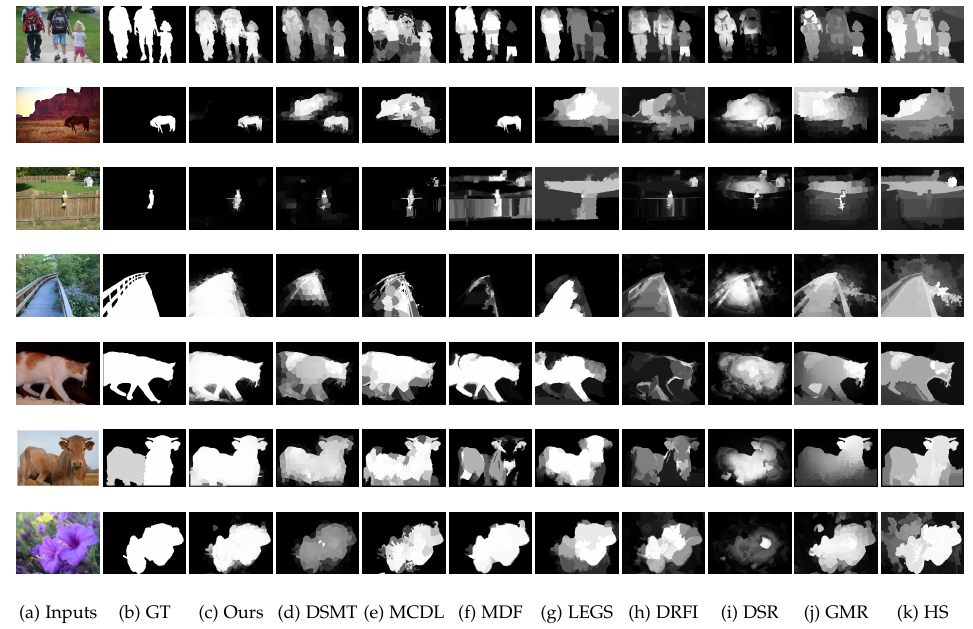

ECSSD results (link1) (link2) (ECSSD dataset site)

PASCAL-S results (link1) (link2) (PASCAL-S dataset site)

DUT-OMRON results (link1) (link2) (DUT-OMRON dataset site)

THUR15K results (link1) (link2) (THUR15K dataset site)

HKU-IS results (link1) (link2) (ASD dataset site)

Please kindly cite our work if it helps your research:

    @inproceedings{lee2016saliency,
      title = {Deep Saliency with Encoded Low level Distance Map and High Level Features},
      author = {Lee, Gayoung and Tai, Yu-Wing and Kim, Junmo},
      booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year = {2016}
    }