Yuzhang Shang, Dan Xu, Ziliang Zong, Liqiang Nie, and Yan Yan

Code for the paper Network Binarization via Contrastive Learning, which has been accepted to ECCV 2022.

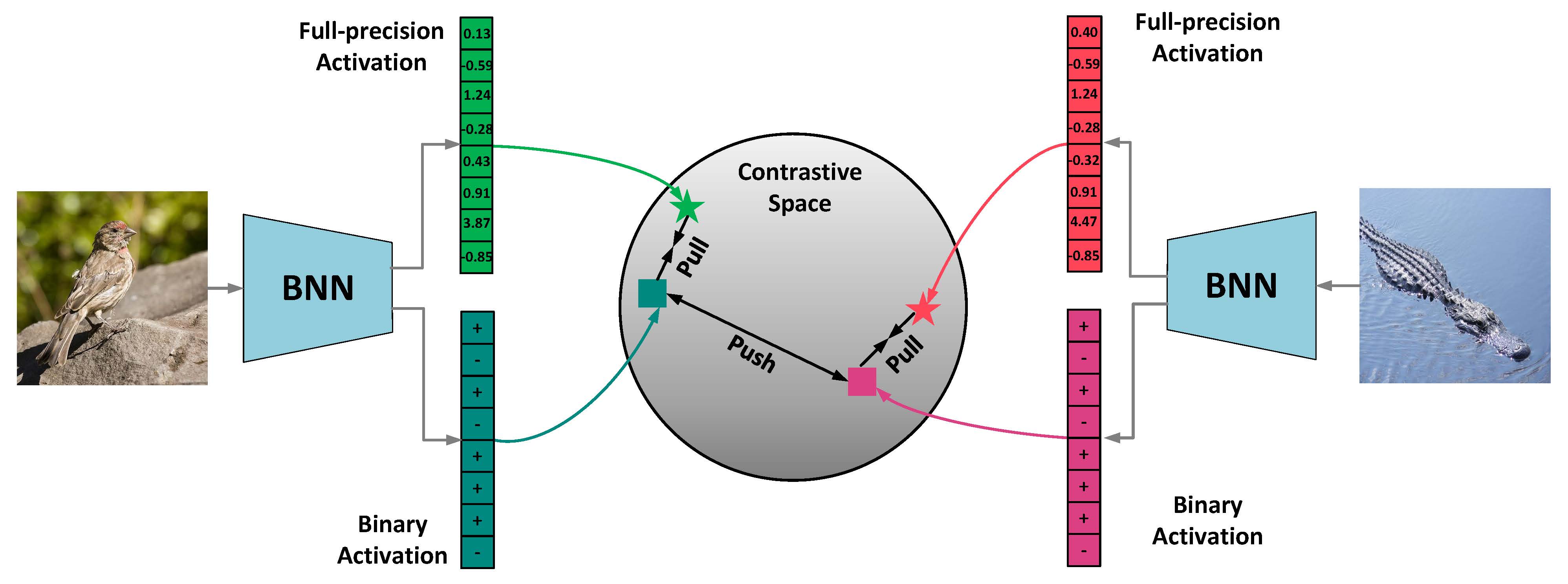

General idea of CMIM: we feed two images into a BNN and obtain three pairs of binary and full-precision activations. Our goal is to embed the activations into a contrastive space, then learn from the pair correlations with the contrastive learning task in Eq. 13.

First, download our repo:

git clone https://github.com/42Shawn/CMIM.git

cd CMIM/cifar100

Then, run our repo:

python main.py --save='v0' --data_path='path-to-dataset' --gpus='gpu-id' --alpha=3.2

Note that alpha can be changed to conduct ablation studies; alpha=0 is equivalent to RBNN itself.
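The alpha ablation can be scripted with a small wrapper. This is only a sketch: it reuses the flags from the command above, while the helper names `build_command` and `sweep`, the intermediate value 1.6, the GPU id `"0"`, and the `alpha_<value>` save tags are assumptions made for illustration and are not part of the repo. By default it only prints the commands. Pass `dry_run=False` from inside CMIM/cifar100 to launch them.

```python
import shlex
import subprocess


def build_command(alpha, data_path, gpus, tag=None):
    """Return the argv list for one training run with the given alpha."""
    # Hypothetical naming scheme for --save; any tag works.
    tag = tag or f"alpha_{alpha}"
    return [
        "python", "main.py",
        f"--save={tag}",
        f"--data_path={data_path}",
        f"--gpus={gpus}",
        f"--alpha={alpha}",
    ]


def sweep(alphas, data_path, gpus, dry_run=True):
    """Print (and optionally run) one training command per alpha value."""
    commands = [build_command(a, data_path, gpus) for a in alphas]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # Runs sequentially; stops at the first failing run.
            subprocess.run(cmd, check=True)
    return commands


# alpha=0 reproduces the RBNN baseline, 3.2 is the setting used above;
# 1.6 is an arbitrary intermediate point chosen for this example.
commands = sweep([0, 1.6, 3.2], data_path="path-to-dataset", gpus="0")
```

Keeping the runs sequential avoids contention on a single GPU. To use several GPUs, one could call `build_command` directly and assign a different `gpus` value per run.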

Demo Result

RBNN (alpha = 0) => CMIM (alpha = 3.2)

65.4 => 71.2

If you find our code useful for your research, please cite our paper.

@inproceedings{shang2022cmim,
  title={Network Binarization via Contrastive Learning},
  author={Yuzhang Shang and Dan Xu and Ziliang Zong and Liqiang Nie and Yan Yan},
  booktitle={ECCV},
  year={2022}
}

Related Work

Our repo is adapted from the PyTorch implementations of Forward and Backward Information Retention for Accurate Binary Neural Networks (IR-Net, CVPR 2020) and Rotated Binary Neural Network (RBNN, NeurIPS 2020). Thanks to the authors for releasing their codebases!