Quick Links: [Paper] [Project Page] [dataset-tiktok] [dataset-reddit] [Models]

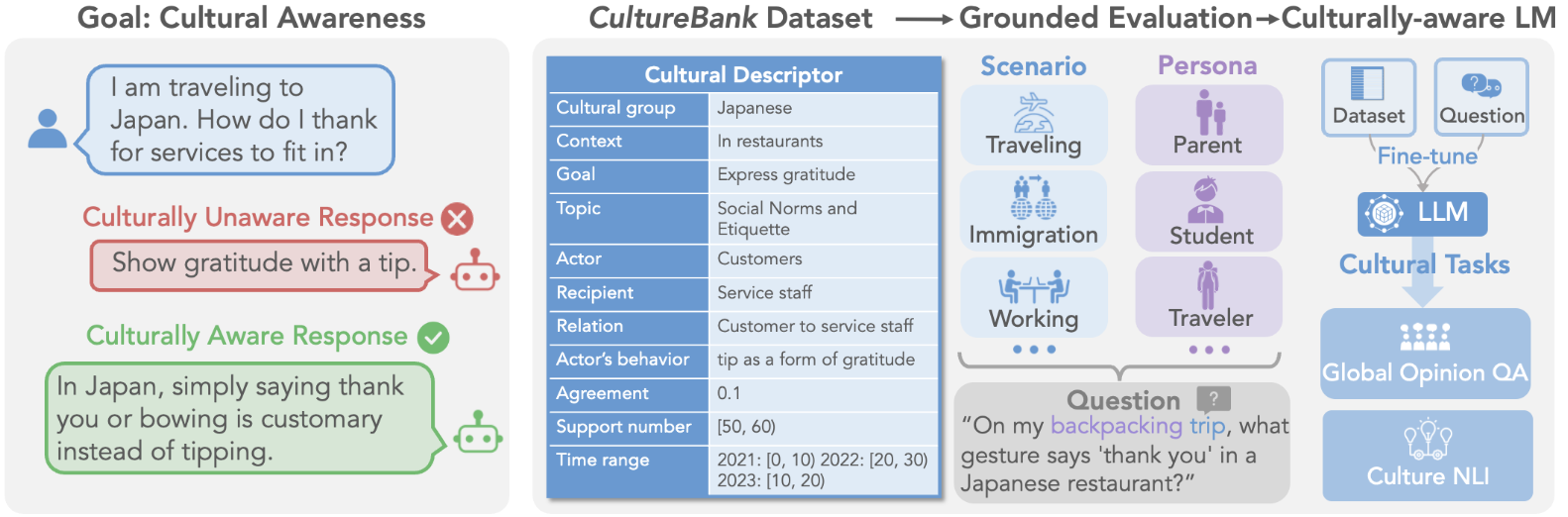

We provide:

- an easy-to-use and generalizable pipeline to construct a cultural knowledge bank from online communities

- two cultural knowledge datasets, [CultureBank-TikTok] and [CultureBank-Reddit]

- grounded cultural evaluation and fine-tuning scripts

- Set up the environment:

```bash
conda env create -f environment.yml
```

- Set up the API keys, which are read from environment variables:

  - OpenAI: `os.getenv("OPENAI_API_KEY")`
  - Perspective API: `os.getenv("PERSPECTIVE_API")`
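Because both keys come from environment variables, a quick check before a long pipeline run can catch a missing key early. This is a minimal sketch; `missing_api_keys` is a hypothetical helper, not part of the repository.

```python
import os

# Environment variable names taken from the setup instructions above.
REQUIRED_KEYS = ("OPENAI_API_KEY", "PERSPECTIVE_API")


def missing_api_keys(env=None):
    """Return the names of required API keys that are unset or empty.

    `env` defaults to the real process environment; pass a dict to test.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]
```

After exporting both variables in your shell, `missing_api_keys()` should return an empty list.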

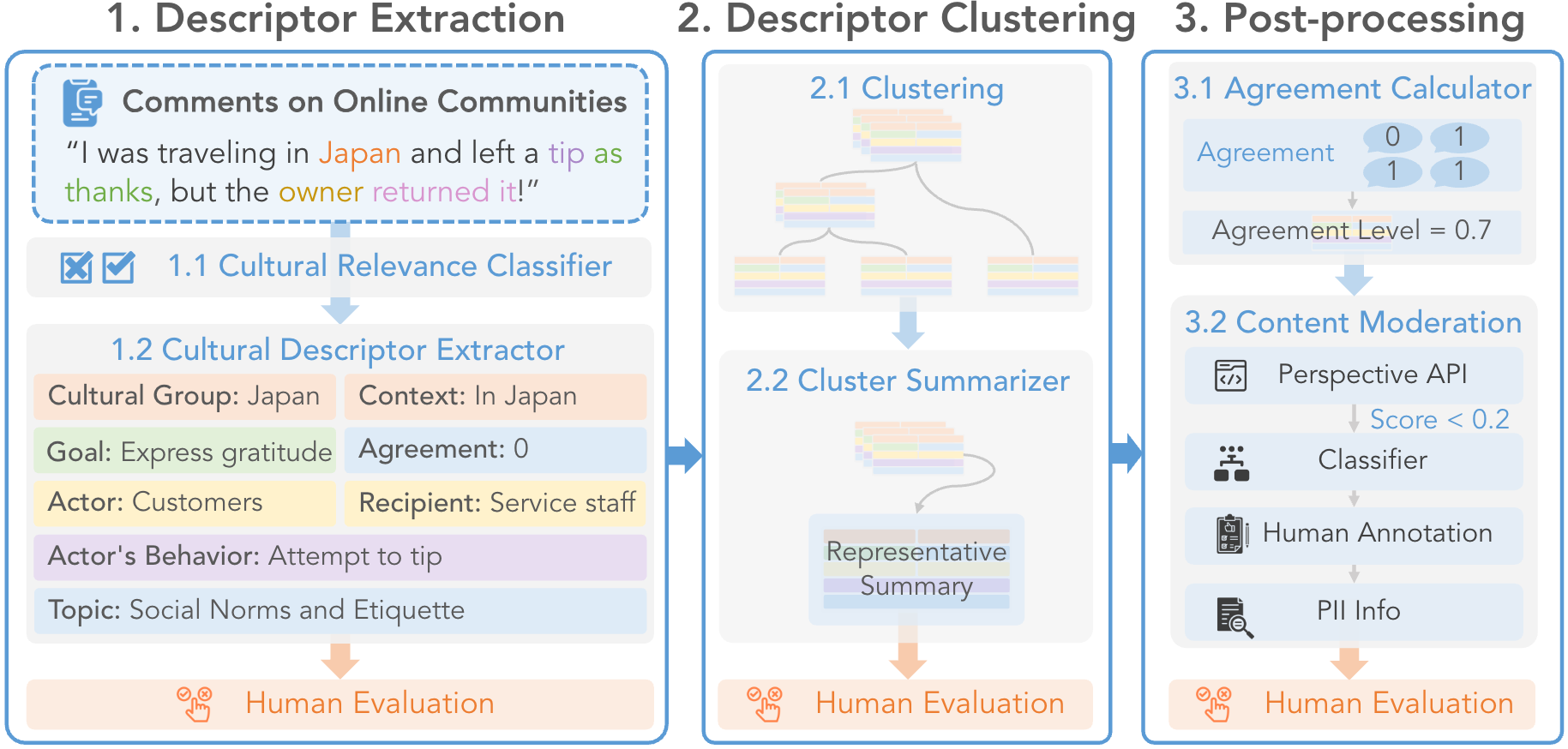

The pipeline contains 9 components (see data_process_pipeline/pipeline/main_pipeline.py).

- `data_process_pipeline/pipeline/component_0_culture_relevance_classifier.py`: classifies whether a comment is related to culture
- `data_process_pipeline/pipeline/component_1_knowledge_extractor.py`: extracts cultural information from the comment
- `data_process_pipeline/pipeline/component_2_negation_converter.py`: converts positive sentences to negative forms
- `data_process_pipeline/pipeline/component_3_clustering.py`: performs clustering
- `data_process_pipeline/pipeline/component_4_cluster_summarizer.py`: summarizes the clusters
- `data_process_pipeline/pipeline/component_5_topic_normalization.py`: normalizes the cultural groups and topics
- `data_process_pipeline/pipeline/component_6_agreement_calculator.py`: calculates the agreement values
- `data_process_pipeline/pipeline/component_7_content_moderation.py`: identifies potentially controversial and PII data for annotation
- `data_process_pipeline/pipeline/component_8_final_formatter.py`: formats the final data
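The numbered file names make it easy to map a component index to its implementation. The sketch below is hypothetical: `component_path` and `run_in_order` are not part of the repository, and the real pipeline appears to pass data between components through files named in the config rather than in memory. It only illustrates the idea that each selected component consumes the previous one's output, in the order given.

```python
from typing import Any, Callable, Dict, List

# Index -> component name, mirroring the nine files listed above.
COMPONENTS: Dict[int, str] = {
    0: "culture_relevance_classifier",
    1: "knowledge_extractor",
    2: "negation_converter",
    3: "clustering",
    4: "cluster_summarizer",
    5: "topic_normalization",
    6: "agreement_calculator",
    7: "content_moderation",
    8: "final_formatter",
}


def component_path(index: int) -> str:
    """Return the file that implements component `index`."""
    return f"data_process_pipeline/pipeline/component_{index}_{COMPONENTS[index]}.py"


def run_in_order(indices: List[int], stages: Dict[int, Callable[[Any], Any]], data: Any) -> Any:
    """Hypothetical in-memory stand-in: feed `data` through the selected stages,
    each stage receiving the previous stage's output, in the order given."""
    for i in indices:
        data = stages[i](data)
    return data
```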

- Prepare a data file, e.g., the provided dummy data file.

- Set up the paths in the config, e.g., the provided `config_dummy_data_vanilla_mistral.yaml`.

- Run this command to run components 0, 1, 3, 4, 5, 6, 7, and 8 in order with that config (component 2, the negation converter, is skipped):

```bash
python data_process_pipeline/main.py -i 0,1,3,4,5,6,7,8 -c ./data_process_pipeline/configs/config_dummy_data_vanilla_mistral.yaml
```

- The final output will be at `data_process_pipeline/results/8_final_formatter/output.csv`, as specified in `config_dummy_data_vanilla_mistral.yaml`.
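The `-i` flag takes a comma-separated list of component indices. How `main.py` parses it is not described here, so the following is a minimal sketch under stated assumptions: indices must lie between 0 and 8, order is preserved, and duplicates are rejected. `parse_component_indices` is a hypothetical helper.

```python
from typing import List

NUM_COMPONENTS = 9  # components 0 to 8, per the pipeline description


def parse_component_indices(arg: str) -> List[int]:
    """Parse a string such as "0,1,3" into a list of component indices.

    Order is preserved; out-of-range or duplicate indices raise ValueError.
    """
    indices = [int(part) for part in arg.split(",") if part.strip()]
    for i in indices:
        if not 0 <= i < NUM_COMPONENTS:
            raise ValueError(f"component index out of range: {i}")
    if len(set(indices)) != len(indices):
        raise ValueError(f"duplicate component index in {arg!r}")
    return indices
```

For the command above, `parse_component_indices("0,1,3,4,5,6,7,8")` yields every index except 2.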

How to run individual components

Individual components can also be run on their own, as long as their input file already exists.

```bash
# run component 0, the relevance classifier
python data_process_pipeline/main.py -i 0 -c ./data_process_pipeline/configs/config_dummy_data_vanilla_mistral.yaml
```
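Since a component run on its own fails if its input file is missing, a small wrapper can check for the file before launching `main.py`. This is a hypothetical helper, not part of the repository; `input_file` stands for whichever input path your config assigns to the first selected component.

```python
from pathlib import Path
from typing import List, Sequence


def build_component_command(indices: Sequence[int], config_path: str, input_file: str) -> List[str]:
    """Return the main.py command for the given components, failing early
    if the expected input file does not exist."""
    if not Path(input_file).is_file():
        raise FileNotFoundError(f"input file not found: {input_file}")
    return [
        "python", "data_process_pipeline/main.py",
        "-i", ",".join(str(i) for i in indices),
        "-c", str(config_path),
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)`.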

Some notes

- The pipeline also generates a file of potentially controversial data for human annotation, `output_file_for_manual_annotation`. Annotate it and place the annotated file at `controversial_annotation_file`.

- We provide two sample configs:
  - `config_dummy_data_vanilla_mistral.yaml`: uses vanilla Mistral models as the extractor and summarizer; lightweight.
  - `config_dummy_data_finetuned_mixtral.yaml`: uses vanilla Mixtral models plus our adapters fine-tuned on Reddit as the extractor and summarizer; requires more GPU memory (at least ~27 GB).
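The choice between the two sample configs mostly comes down to available GPU memory. A minimal sketch, assuming both configs live in `./data_process_pipeline/configs` (only the Mistral one's path is shown above) and treating the ~27 GB figure as a hard threshold; `choose_sample_config` is hypothetical.

```python
CONFIG_DIR = "./data_process_pipeline/configs"  # assumed location of both sample configs
FINETUNED_MIXTRAL_MIN_GB = 27.0  # "at least ~27GB", per the note above


def choose_sample_config(gpu_memory_gb: float) -> str:
    """Pick the heavier fine-tuned Mixtral config only if the GPU has room for it."""
    if gpu_memory_gb >= FINETUNED_MIXTRAL_MIN_GB:
        name = "config_dummy_data_finetuned_mixtral.yaml"
    else:
        name = "config_dummy_data_vanilla_mistral.yaml"
    return f"{CONFIG_DIR}/{name}"
```

With PyTorch installed, total device memory in GB is `torch.cuda.get_device_properties(0).total_memory / 1024**3`. Total memory is not the same as free memory, so leave some headroom.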

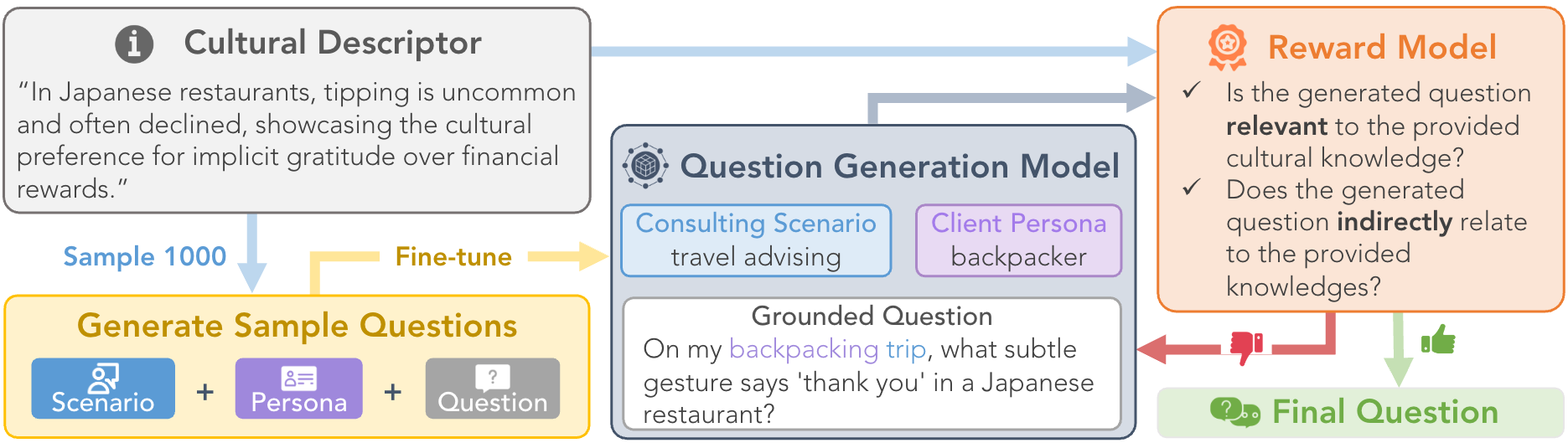

- `evaluation/convert_to_desc.py`: concatenates the fields in CultureBank data and translates them into free-text paragraphs of cultural descriptors.
- `evaluation/generate_questions.py`: generates questions for grounded evaluation based on the cultural descriptors. The released adapter is here.
- `evaluation/generate_questions_aug.py`: generates questions for grounded evaluation based on the cultural descriptors with a self-refinement method. It is very similar to `evaluation/generate_questions.py`; the only difference is that GPT-4 scores each generated question, and generation is repeated until the result is good or the maximum number of trials is reached. The released adapter is here.
- `evaluation/grounded_eval.py`: performs grounded evaluation of language models on the generated cultural questions. If `-aug` (augmentation) is turned on, the golden cultural descriptor is included in the input for the evaluation, and the golden-knowledge-augmented responses from GPT models can be used for further SFT training.
- `evaluation/knowledge_entailment.py`: computes the knowledge entailment scores of models' generated responses in the grounded evaluations.
- `evaluation/direct_eval.py`: performs direct evaluation of language models on CultureBank data.
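The self-refinement loop in `generate_questions_aug.py` follows a generate, score, retry pattern. The sketch below shows that pattern generically and is not the script's actual code: `generate` and `score` are placeholder callables standing in for the question generator and the GPT-4 judge, and passing the previous attempt back to the generator as feedback is an assumption.

```python
from typing import Callable, Optional, Tuple

Attempt = Tuple[str, float]  # (question, score)


def generate_with_self_refinement(
    descriptor: str,
    generate: Callable[[str, Optional[Attempt]], str],
    score: Callable[[str, str], float],
    threshold: float,
    max_trials: int,
) -> Attempt:
    """Generate, score, and regenerate until a score reaches `threshold`
    or `max_trials` attempts have been made; return the best attempt."""
    if max_trials < 1:
        raise ValueError("max_trials must be at least 1")
    best: Optional[Attempt] = None
    previous: Optional[Attempt] = None
    for _ in range(max_trials):
        question = generate(descriptor, previous)  # previous attempt serves as feedback
        s = score(descriptor, question)            # e.g., a GPT-4 judge
        previous = (question, s)
        if best is None or s > best[1]:
            best = previous
        if s >= threshold:                         # good enough: stop early
            break
    assert best is not None
    return best
```

Keeping the best attempt, rather than the last one, means a run that never clears the threshold still returns its highest-scoring question.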

Evaluation on two downstream tasks

- `evaluation/downstream_tasks/cultural_nli.py`: evaluates on cultural NLI.
- `evaluation/downstream_tasks/world_value_survey.py`: evaluates on the World Values Survey, based on the methods in this paper.
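How `cultural_nli.py` scores predictions is not described here. As a hedged sketch of one common way to score a classification task like NLI, the function below computes exact-match accuracy after normalizing label strings. The label names are whatever the dataset uses, and `label_accuracy` is hypothetical.

```python
from typing import Sequence


def label_accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Exact-match accuracy after normalizing case and surrounding whitespace."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must have the same length")
    if not gold:
        raise ValueError("no examples to score")
    correct = sum(p.strip().lower() == g.strip().lower() for p, g in zip(predictions, gold))
    return correct / len(gold)
```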

- `finetuning/sft_mixtral.py`: a sample script to supervised-fine-tune a Mixtral model on various tasks (extractor, summarizer, culturally aware model, etc.), given properly prepared data.
- `finetuning/dpo_mixtral.py`: a sample script to train a Mixtral model with DPO on various tasks (culturally aware model, etc.), given properly prepared data.
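Both scripts depend on data in the right shape. The expected format is not specified here, so this is a hedged sketch that assumes the Mixtral-Instruct `[INST] ... [/INST]` chat template and the column names TRL's trainers conventionally use (`text` for SFT; `prompt`, `chosen`, `rejected` for DPO). The helper names are hypothetical; check `sft_mixtral.py` and `dpo_mixtral.py` for the format they actually expect.

```python
from typing import Dict


def mixtral_prompt(instruction: str) -> str:
    """Wrap a user instruction in the Mixtral-Instruct template.
    The BOS token `<s>` is usually added by the tokenizer, so it is omitted here."""
    return f"[INST] {instruction.strip()} [/INST]"


def sft_example(instruction: str, response: str) -> Dict[str, str]:
    """One supervised fine-tuning record: prompt and target response in a single text field."""
    return {"text": f"{mixtral_prompt(instruction)} {response.strip()}"}


def dpo_example(instruction: str, chosen: str, rejected: str) -> Dict[str, str]:
    """One DPO preference record: a shared prompt with a preferred and a dispreferred response."""
    return {
        "prompt": mixtral_prompt(instruction),
        "chosen": chosen.strip(),
        "rejected": rejected.strip(),
    }
```

For a culturally aware model, `chosen` could be a golden-knowledge-augmented response and `rejected` a weaker baseline response to the same question.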

- Knowledge extractor

- Cluster summarizer

- Evaluation question generator

- Llama2-7B SFT fine-tuned on CultureBank-TikTok

- Mixtral-8X7B SFT fine-tuned on CultureBank-TikTok

- Mixtral-8X7B DPO fine-tuned on CultureBank-TikTok

The codebase is adapted from Candle (paper), which is released under this license. Thanks for the amazing work!

If you find our work helpful, please consider citing our paper:

```bibtex
@misc{shi2024culturebank,
  title={CultureBank: An Online Community-Driven Knowledge Base Towards Culturally Aware Language Technologies},
  author={Weiyan Shi and Ryan Li and Yutong Zhang and Caleb Ziems and Chunhua Yu and Raya Horesh and Rogério Abreu de Paula and Diyi Yang},
  year={2024},
  eprint={2404.15238},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

We welcome all kinds of contributions. If you have any questions, feel free to leave issues or email us.