- External Links

- Installation and usage

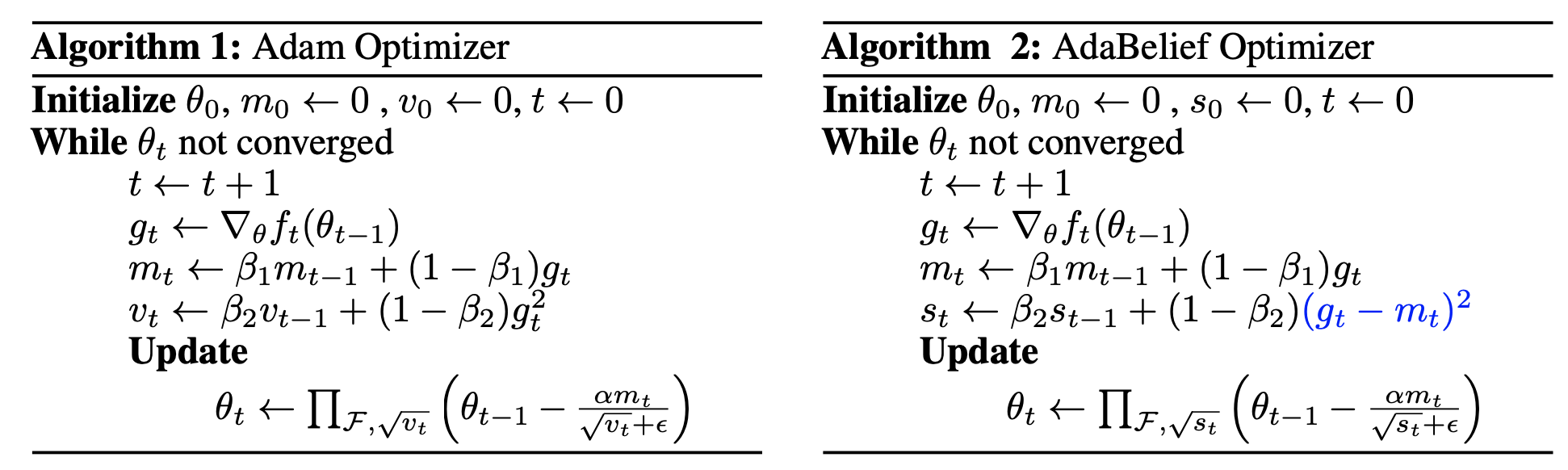

- A quick look at the algorithm

- Discussions (Important: please read the discussion; it contains important information on hyperparameters.)

- Reproduce results in the paper

- Citation

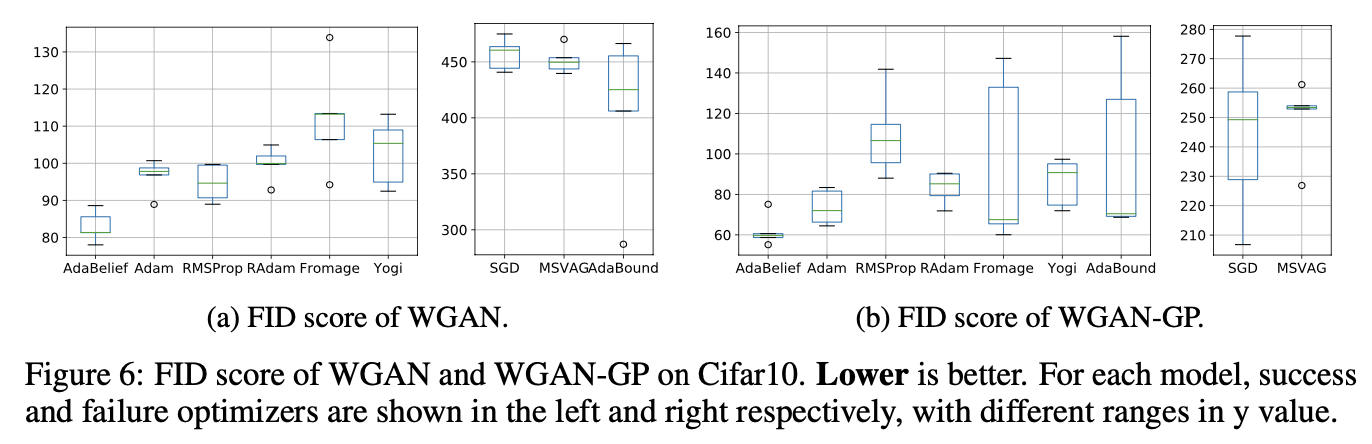

Someone (in the WeChat group Jiqizhixin) pointed out that the results on GAN are bad. This might be due to the choice of GAN model (we picked the simplest code example from the PyTorch docs without adding more tricks); we did not cherry-pick or intentionally worsen the baseline performance. We will update results on new GANs (e.g. SN-GAN) and release code later.

- Updated results on an SN-GAN are at https://github.com/juntang-zhuang/SNGAN-AdaBelief. AdaBelief achieves 12.87 FID (lower is better) on Cifar10, while Adam achieves 13.25 (number taken from the log of the official repository PyTorch-studioGAN).
- Upload code for LSTM experiments.

Project Page, arXiv, Reddit, Twitter

(Results in the paper are all generated using the PyTorch implementation in the `adabelief-pytorch` package, which is the ONLY package that I have extensively tested for now.)

pip install adabelief-pytorch==0.0.5

from adabelief_pytorch import AdaBelief

optimizer = AdaBelief(model.parameters(), lr=1e-3, eps=1e-12, betas=(0.9,0.999))

pip install ranger-adabelief==0.0.9

from ranger_adabelief import RangerAdaBelief

optimizer = RangerAdaBelief(model.parameters(), lr=1e-3, eps=1e-12, betas=(0.9,0.999))

pip install adabelief-tf==0.0.1

from adabelief_tf import AdaBeliefOptimizer

optimizer = AdaBeliefOptimizer(learning_rate, epsilon=1e-12)

See the folder PyTorch_Experiments. In each subfolder, execute `sh run.sh`. For visualization, see readme.txt in each subfolder or refer to the Jupyter notebook.

Please install the latest version from pip; old versions might suffer from bugs. Source code for the up-to-date package is available in the folder pypi_packages.

- Decoupling (argument `weight_decouple` (default: False) appears in `AdaBelief` and `RangerAdaBelief`):

  Currently there are two ways to perform weight decay for adaptive optimizers: apply it directly to the gradient (Adam), or decouple weight decay from gradient descent (AdamW). This is passed to the optimizer by the argument `weight_decouple` (default: False).

- Fixed ratio (argument `fixed_decay` (default: False) appears in `AdaBelief`):

  (1) If `weight_decouple == False`, then this argument does not affect optimization.

  (2) If `weight_decouple == True`:

  - If `fixed_decay == False`, the weight is multiplied by `1 - lr x weight_decay`.
  - If `fixed_decay == True`, the weight is multiplied by `1 - weight_decay`. This is implemented as an option but not used to produce results in the paper.

- What is the actual weight decay we are using?

  This is seldom discussed in the literature, but personally I think it's very important. When we set `weight_decay=1e-4` for SGD, the weight is scaled by `1 - lr x weight_decay`. Two points need to be emphasized: (1) `lr` in SGD is typically larger than in Adam (0.1 vs 0.001), so the weight decay in Adam needs to be set to a larger number to compensate. (2) `lr` decays, which means we typically use a larger weight decay in early phases and a smaller weight decay in late phases.
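The weight-decay options above can be illustrated with a small plain-Python sketch. This is not the package's code: the helper name `decay_multiplier` is hypothetical, and it only models the per-step factor applied to a weight, using the learning rates mentioned in the text (0.1 and 0.001).

```python
def decay_multiplier(lr, weight_decay, weight_decouple=False, fixed_decay=False):
    """Per-step factor that decoupled weight decay applies to a weight.

    Hypothetical helper for illustration. Returns 1.0 when weight_decouple is
    False, because in that mode the decay is added to the gradient instead of
    scaling the weight directly.
    """
    if not weight_decouple:
        return 1.0
    if fixed_decay:
        return 1.0 - weight_decay
    return 1.0 - lr * weight_decay


# SGD-style numbers from the text: lr=0.1, weight_decay=1e-4 gives 1 - lr x weight_decay.
sgd_like = decay_multiplier(lr=0.1, weight_decay=1e-4, weight_decouple=True)
# Same weight_decay with an Adam-style lr shrinks the weight 100x less per step.
adam_same_wd = decay_multiplier(lr=1e-3, weight_decay=1e-4, weight_decouple=True)
# A larger weight_decay (1e-2) compensates for the smaller learning rate.
adam_larger_wd = decay_multiplier(lr=1e-3, weight_decay=1e-2, weight_decouple=True)

print(1 - sgd_like, 1 - adam_same_wd, 1 - adam_larger_wd)
```

Because the factor depends on `lr`, any learning rate decay also reduces the effective weight decay, which is point (2) above.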

AdaBelief seems to require a different epsilon from Adam. In the CV tasks in this paper, epsilon is set to 1e-8. For GAN training and LSTM, it is set to 1e-12. We recommend trying different epsilon values in practice and sweeping through a large range, e.g. 1e-8, 1e-10, 1e-12, 1e-14, 1e-16, 1e-18. Typically a smaller epsilon makes it more adaptive.
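One way to organize such a sweep is sketched below. The helper `sweep_epsilon` and the callback `toy_validation_loss` are hypothetical names; the toy callback is only a placeholder for "train with this epsilon and return a validation loss" and does not represent any real result.

```python
import math


def sweep_epsilon(evaluate, candidates=(1e-8, 1e-10, 1e-12, 1e-14, 1e-16, 1e-18)):
    """Call evaluate(eps) for each candidate; return (best_eps, scores).

    Lower scores are treated as better (e.g. a validation loss).
    """
    scores = {eps: evaluate(eps) for eps in candidates}
    best_eps = min(scores, key=scores.get)
    return best_eps, scores


def toy_validation_loss(eps):
    # Placeholder objective so the sketch runs without a training loop.
    # In practice this would build the optimizer with the given eps,
    # train the model, and return the validation metric.
    return abs(math.log10(eps) + 12)


best_eps, scores = sweep_epsilon(toy_validation_loss)
print("best epsilon in this toy sweep:", best_eps)
```

In a real run, the value of `eps` would be passed to the optimizer constructor, as in the usage lines in the installation section.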

Whether to turn on the rectification as in RAdam. The rectification basically uses SGD in early phases for warmup, then switches to Adam. Rectification is implemented as an option, but is never used to produce results in the paper.

Whether to take the max (over history) of the denominator, same as AMSGrad. It is set to False for all experiments.

- Results in the paper are generated using the PyTorch implementation in the `adabelief-pytorch` package. This is the ONLY package that I have extensively tested for now.

| Task | beta1 | beta2 | epsilon | weight_decay | weight_decouple | fixed_decay | rectify | amsgrad |

|---|---|---|---|---|---|---|---|---|

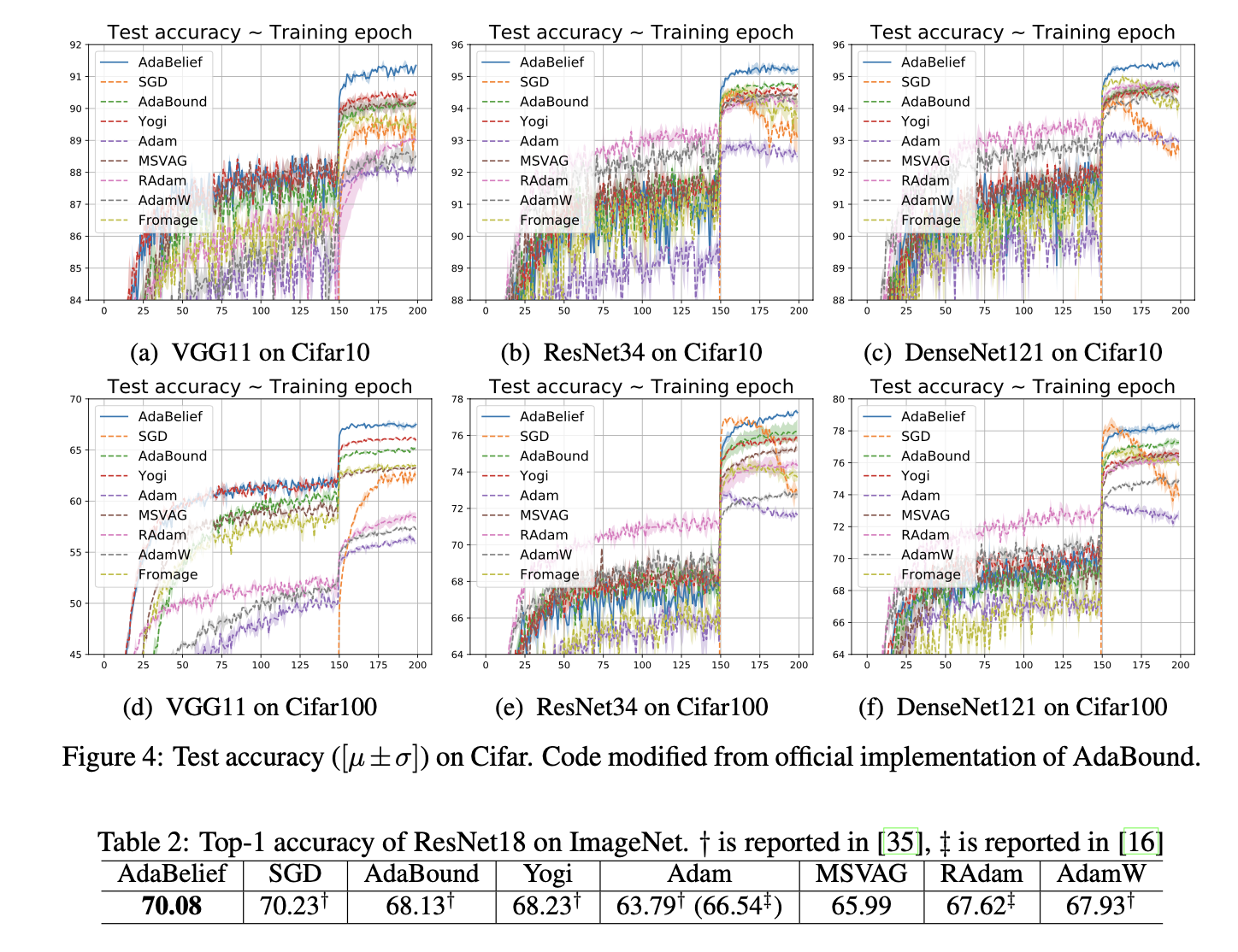

| Cifar | 0.9 | 0.999 | 1e-8 | 5e-4 | False | False | False | False |

| ImageNet | 0.9 | 0.999 | 1e-8 | 1e-2 | True | False | False | False |

| GAN | 0.5 | 0.999 | 1e-12 | 0 | False | False | False | False |

- We also provide a modification of the `ranger` optimizer in `ranger-adabelief`, which combines `RAdam + LookAhead + Gradient Centralization + AdaBelief`, but this is not used in the paper and is not extensively tested.
- The `adabelief-tf` package is a naive implementation in Tensorflow. It lacks many features such as `decoupled weight decay`, and is not extensively tested. Currently I don't have plans to improve it since I seldom use Tensorflow; please contact me if you want to collaborate and improve it.

The experiments on Cifar are the same as the demo in AdaBound; the only difference is the optimizer. The ImageNet experiment uses a different learning rate schedule: typically the learning rate is decayed by 1/10 at epochs 30 and 60, and training ends at epoch 90. For reasons I have not extensively investigated, AdaBelief performs well when the learning rate is decayed at epochs 70 and 80 and training ends at epoch 90; using the default lr schedule produces a slightly worse result. If you have any ideas on this, please open an issue here or email me.
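The two ImageNet schedules described above can be compared with a short sketch. The function `step_lr` is a hypothetical helper, and `BASE_LR` is an assumed value chosen only for illustration; the text does not state the base learning rate.

```python
def step_lr(epoch, base_lr, milestones, gamma=0.1):
    """Learning rate at a 0-indexed epoch: multiply by gamma at each milestone reached."""
    reached = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** reached


BASE_LR = 1e-3      # assumed value, for illustration only
TOTAL_EPOCHS = 90   # both schedules end at epoch 90

# Typical schedule: decay by 1/10 at epochs 30 and 60.
default_schedule = [step_lr(e, BASE_LR, (30, 60)) for e in range(TOTAL_EPOCHS)]
# Schedule reported above to work better with AdaBelief: decay at epochs 70 and 80.
late_schedule = [step_lr(e, BASE_LR, (70, 80)) for e in range(TOTAL_EPOCHS)]

for epoch in (0, 30, 60, 70, 80, 89):
    print(epoch, default_schedule[epoch], late_schedule[epoch])
```

In PyTorch training code, `torch.optim.lr_scheduler.MultiStepLR` with `milestones` and `gamma` provides this kind of step schedule.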

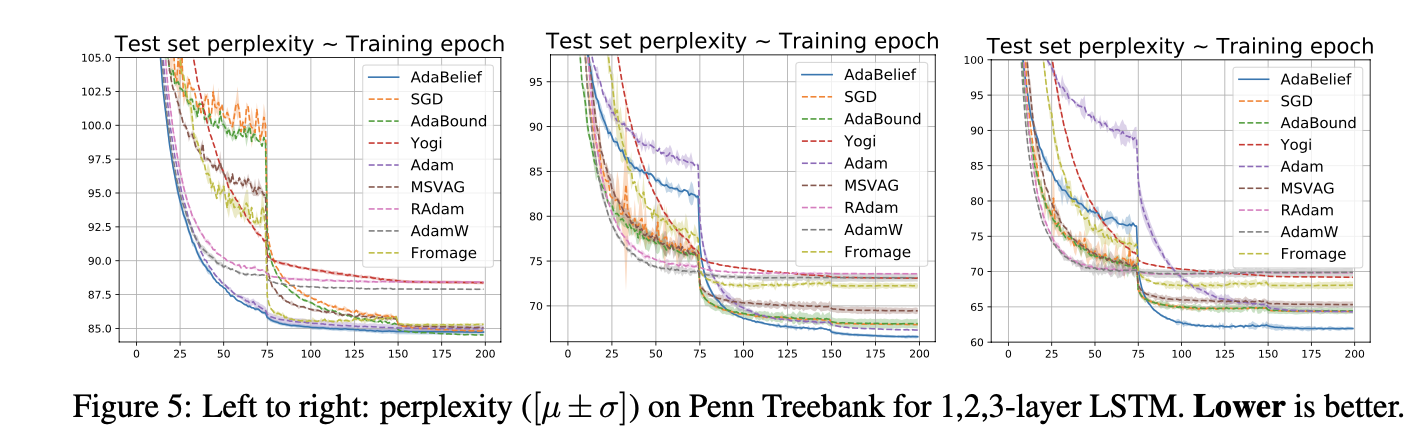

I got some feedback on RNNs in the Reddit discussion; here are a few tips:

- For RNNs, epsilon is suggested to be set to a smaller value (e.g. 1e-12, 1e-14, 1e-16), though the default is 1e-8. Please try different epsilon values; the best value varies from task to task.

- Use gradient clipping carefully. If the gradient is too large, clipping to the SAME value will cause serious problems: because the denominator is roughly |g_t - m_t|, observing the same gradient throughout training produces a denominator very close to 0, which causes the update to explode.
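The clipping issue can be reproduced on a single scalar parameter. The sketch below is a plain-Python illustration of the update rule described in the paper (an EMA of gradients `m` and an EMA of `(g - m)^2` with epsilon added each step), not the package's implementation; the function name `adabelief_step_ratios` is hypothetical.

```python
import math


def adabelief_step_ratios(grads, beta1=0.9, beta2=0.999, eps=1e-12):
    """Return update / lr at each step for one scalar parameter."""
    m, s = 0.0, 0.0
    ratios = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g                   # EMA of gradients
        s = beta2 * s + (1 - beta2) * (g - m) ** 2 + eps  # EMA of (g - m)^2
        m_hat = m / (1 - beta1 ** t)                      # bias correction
        s_hat = s / (1 - beta2 ** t)
        ratios.append(m_hat / (math.sqrt(s_hat) + eps))
    return ratios


# Every gradient clipped to the same value: g - m shrinks toward 0,
# so the denominator shrinks and the step keeps growing.
clipped = adabelief_step_ratios([1.0] * 1000)
# Gradients that keep varying: the denominator stays well away from 0.
varying = adabelief_step_ratios([1.0 if t % 2 else 0.2 for t in range(1000)])

print(f"constant gradient: first {clipped[0]:.2f}, last {clipped[-1]:.2f}")
print(f"varying gradient:  last {varying[-1]:.2f}")
```

In this toy setting the step for the constant gradient keeps growing as training continues, while the step for the varying gradient stays of order one.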

Please contact me at j.zhuang@yale.edu or open an issue here if you would like to help improve it (especially the Tensorflow version), discuss the theory, or explore combinations with other methods to create a better optimizer. Any thoughts are welcome!

@article{zhuang2020adabelief,

title={AdaBelief Optimizer: Adapting Stepsizes by the Belief in Observed Gradients},

author={Zhuang, Juntang and Tang, Tommy and Tatikonda, Sekhar and Dvornek, Nicha and Ding, Yifan and Papademetris, Xenophon and Duncan, James},

journal={Conference on Neural Information Processing Systems},

year={2020}

}