Project Website https://graspnet.net/publications.html

git clone https://github.com/GouMinghao/rgb_matters

cd rgb_matters

# virtualenv, conda or docker are strongly suggested.

python3 -m pip install -r requirements.txt

Download the dataset and set it up following the official website.

- Modify the values in constant.py to your own.

- Run

gen_labels.py

# Around 260G space is needed if all the labels are generated.

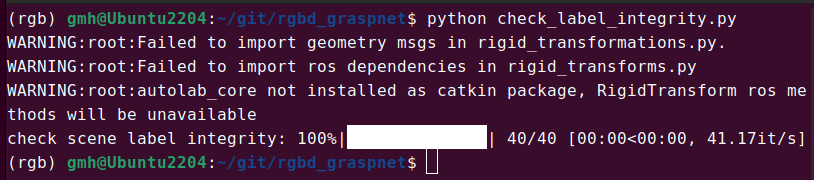

python3 gen_labels.py- Check if all labels are generated.

python3 check_label_integrity.py

No errors will be reported if all labels exist.

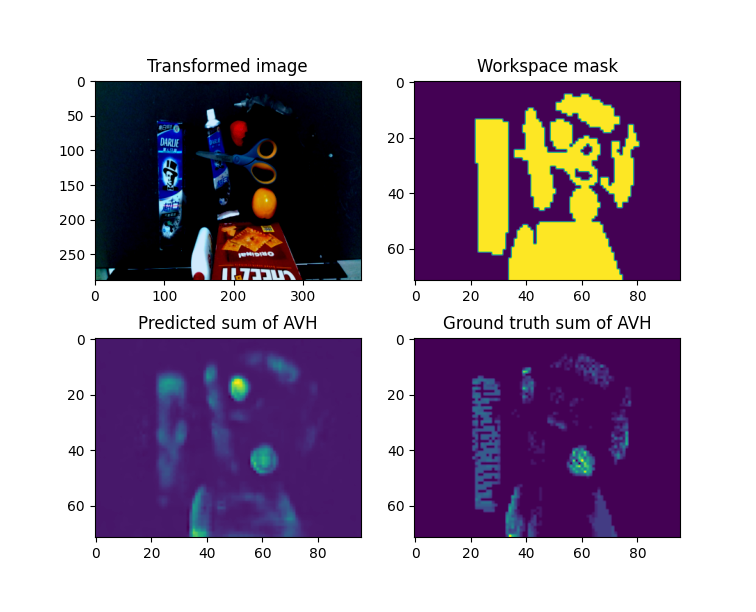
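Because label generation needs around 260G of space (and normal generation around 130G more), it can be worth confirming free disk space before starting either script. The following is a minimal sketch using only the Python standard library. It is not part of this repo: the helper name `has_enough_space` and the target path `"."` are hypothetical, and reading "G" as GiB is an assumption.

```python
import shutil

# Space figures quoted in this README. Treating "G" as GiB is an assumption.
LABELS_GIB = 260
NORMALS_GIB = 130


def has_enough_space(path, required_gib):
    """Return True if the filesystem containing `path` has at least `required_gib` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gib * 1024 ** 3


if __name__ == "__main__":
    # Hypothetical target: replace with the directory where labels and normals are written.
    target = "."
    for name, need in (("labels", LABELS_GIB), ("normals", NORMALS_GIB)):
        status = "ok" if has_enough_space(target, need) else "NOT enough free space"
        print(f"{name}: need ~{need} GiB -> {status}")
```

Point `target` at the drive that will actually hold the generated files; checking the current directory is only meaningful if the data is written there.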

- Visualize the generated labels.

python3 vis_label.py

If you want to use the normal modality, generate the normals by running the following script.

# Around 130G of disk space is needed if all the normals are generated.

python3 gen_normals.py

cd rgb_matters

# bash train.sh [GPU Ids] [config name] [ddp port](default=12345)

bash train.sh 0 rs_rs_no_norm 12345 # training with rs_rs_no_norm.yaml config with GPU 0.

cd rgb_matters

# bash train.sh [GPU Ids] [config name] [ddp port](default=12345)

bash train.sh 1,2 rs_rs_no_norm # training with rs_rs_no_norm.yaml config with GPU 1 and 2.

Q1: Can I train on CPU?

A1: Training on CPU is not supported. You can modify the code to do so, but it is not recommended.

Q2: CUDA out of memory error.

A2: Try reducing the batch size in the config file.

- Download the pretrained weights from Google Drive.

- Create a directory named `weights` and put the downloaded files into it as below.

rgbd_graspnet/
├── check_label_integrity.py
├── train.py
├── train.sh
├── vis_label.py
...
└── weights
    ├── kn_jitter_79200.pth
    ├── kn_no_norm_76800.pth
    ├── kn_norm_63200.pth
    ├── kn_norm_only_73600.pth
    └── rs_norm_56400.pth
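To confirm the layout before running the demo, a small check like the one below can list any weight files that are missing. This is a sketch, not a script shipped with the repo. The file names are copied from the tree above, while the helper name `missing_weights` and the default directory `weights` (relative to the current working directory) are assumptions.

```python
from pathlib import Path

# File names copied from the directory tree in this README.
EXPECTED_WEIGHTS = [
    "kn_jitter_79200.pth",
    "kn_no_norm_76800.pth",
    "kn_norm_63200.pth",
    "kn_norm_only_73600.pth",
    "rs_norm_56400.pth",
]


def missing_weights(weights_dir="weights"):
    """Return the expected checkpoint names that are not regular files in `weights_dir`."""
    root = Path(weights_dir)
    return [name for name in EXPECTED_WEIGHTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing weight files:", ", ".join(missing))
    else:
        print("All listed weight files are present.")
```

Run it from the repo root so that the relative `weights` path resolves to the directory created above. If you only downloaded some of the checkpoints, trim `EXPECTED_WEIGHTS` accordingly.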

Change resume in demo.sh and run it.

bash demo.sh

This repo is released under the MIT license.

If you find this repo useful in your research, please cite the following two papers:

@inproceedings{gou2021RGB,

title={RGB Matters: Learning 7-DoF Grasp Poses on Monocular RGBD Images},

author={Gou, Minghao and Fang, Hao-Shu and Zhu, Zhanda and Xu, Sheng and Wang, Chenxi and Lu, Cewu},

booktitle={Proceedings of the International Conference on Robotics and Automation (ICRA)},

year={2021}

}

@inproceedings{fang2020graspnet,

title={GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping},

author={Fang, Hao-Shu and Wang, Chenxi and Gou, Minghao and Lu, Cewu},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages={11444--11453},

year={2020}

}