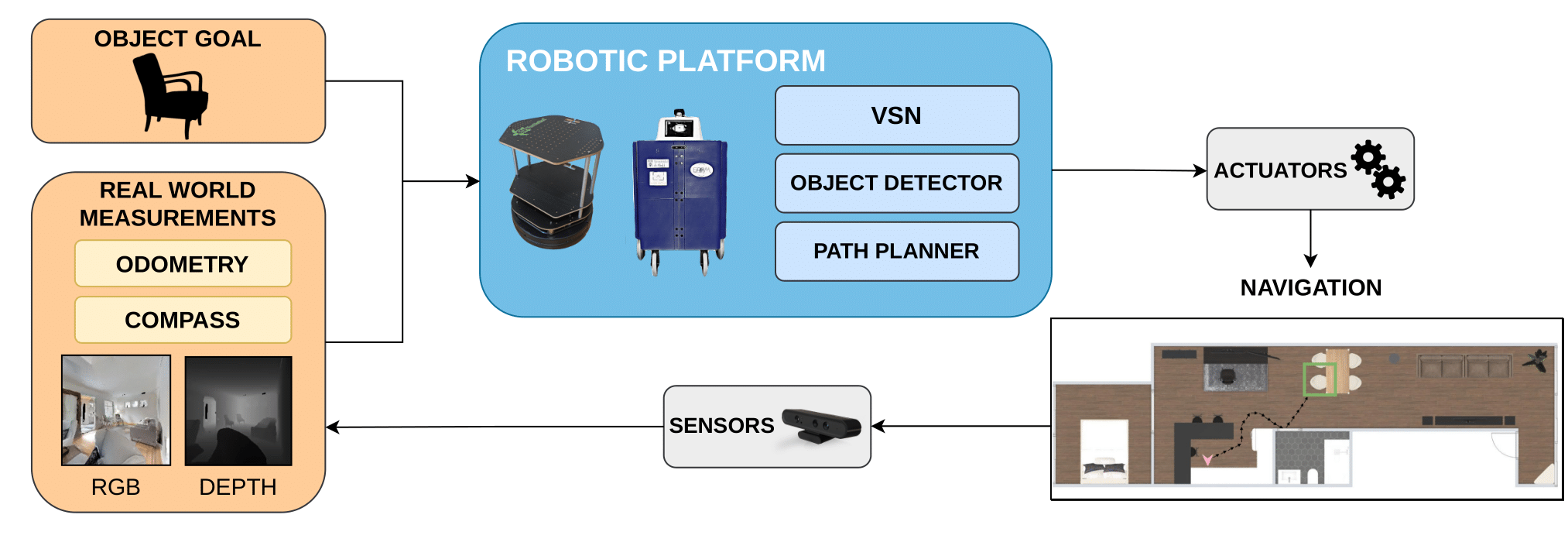

We are glad to introduce a novel ROS-based framework for Visual Semantic Navigation (VSN), designed to simplify the deployment of VSN models on any ROS-compatible robot and tested in the real world. The next figure shows how these VSN models work when deployed on our robotic platforms.

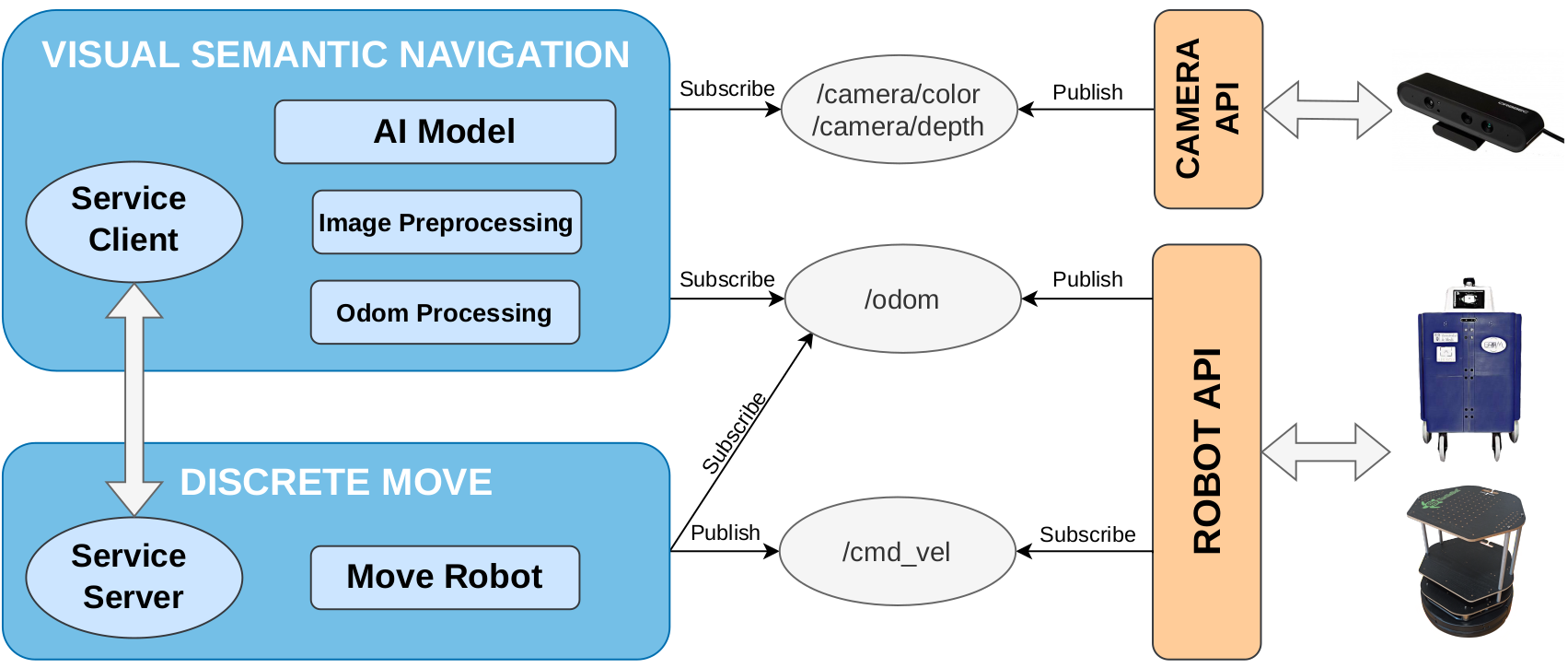

The ROS framework we present is composed of two main packages:

- visual_semantic_navigation (vsn)

- discrete_move

The following image shows the architecture presented in the paper:

The vsn package is designed to deploy artificial intelligence models capable of autonomous navigation. It acts as a client of the discrete_move package, which is responsible for performing the discrete movements inferred by the AI model.

Additionally, this package contains two submodules:

- Image_preprocessing, which connects to the camera via a ROS topic and returns an RGB or RGB+D image as a NumPy array.

- Odometry, which returns the robot's odometry value in case the AI model needs it.

In summary, the vsn package is designed as a template that can be easily customized to deploy any AI model. By using the discrete_move service, the robot can execute the movements generated by the model in a precise and controlled manner.
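As an illustration of what the Odometry submodule might hand to a model, the sketch below reduces an odometry reading to a planar pose. It assumes the standard `nav_msgs/Odometry` layout, where position and a quaternion orientation sit under `pose.pose`. The names `Pose2D`, `yaw_from_quaternion`, and `pose_from_odometry` are hypothetical and are not taken from the vsn package.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar pose (hypothetical helper type, not part of the vsn package)."""
    x: float    # metres
    y: float    # metres
    yaw: float  # radians, counter-clockwise about the z axis


def yaw_from_quaternion(qx: float, qy: float, qz: float, qw: float) -> float:
    """Return the rotation about the z axis encoded by a unit quaternion."""
    siny_cosp = 2.0 * (qw * qz + qx * qy)
    cosy_cosp = 1.0 - 2.0 * (qy * qy + qz * qz)
    return math.atan2(siny_cosp, cosy_cosp)


def pose_from_odometry(px, py, qx, qy, qz, qw) -> Pose2D:
    """Build a planar pose from the position and orientation fields
    that a nav_msgs/Odometry message carries under pose.pose."""
    return Pose2D(px, py, yaw_from_quaternion(qx, qy, qz, qw))


# Example: a robot at (1, 2) rotated a quarter turn to the left.
half_angle = math.pi / 4
pose = pose_from_odometry(1.0, 2.0, 0.0, 0.0,
                          math.sin(half_angle), math.cos(half_angle))
print(pose)  # yaw is approximately pi / 2
```

In a real ROS node these values would come from a subscriber callback on the odometry topic. The sketch leaves that part out so it runs without a ROS installation.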

The discrete_move package is designed to control the robot through parameterized movements, allowing the user to specify the distance and angle of each movement. The package supports four possible movements:

- Forward

- Backward

- Turn left

- Turn right

Furthermore, a ROS server has been designed to enable other ROS packages to connect as clients and execute parameterized discrete movements. The forward and backward movements can be controlled by specifying the distance that the robot needs to travel, while the turn left and turn right movements can be parameterized with the exact angle of movement required.

In summary, the discrete_move package lets other ROS packages control the Lola Robot through precise, parameterized discrete movements.
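To make the parameterization concrete, here is a minimal sketch of how a discrete movement could be turned into a constant-velocity command with a duration. It is not the discrete_move implementation, which may rely on odometry feedback rather than timing. The function name, the speed defaults, and the choice of metres and degrees as units are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass

# The four movements listed above; the identifiers are hypothetical.
MOVEMENTS = ("forward", "backward", "turn_left", "turn_right")


@dataclass(frozen=True)
class VelocityPlan:
    linear: float    # m/s along the robot's x axis
    angular: float   # rad/s about z (positive is counter-clockwise)
    duration: float  # seconds to hold the command


def plan_movement(movement: str, value: float,
                  linear_speed: float = 0.2,
                  angular_speed: float = 0.5) -> VelocityPlan:
    """Map a discrete movement to an open-loop velocity plan.

    `value` is assumed to be metres for forward/backward and
    degrees for turns; the speed defaults are illustrative only.
    """
    if movement not in MOVEMENTS:
        raise ValueError(f"unknown movement: {movement!r}")
    if value < 0 or linear_speed <= 0 or angular_speed <= 0:
        raise ValueError("value must be non-negative and speeds positive")

    if movement == "forward":
        return VelocityPlan(linear_speed, 0.0, value / linear_speed)
    if movement == "backward":
        return VelocityPlan(-linear_speed, 0.0, value / linear_speed)

    angle = math.radians(value)
    sign = 1.0 if movement == "turn_left" else -1.0
    return VelocityPlan(0.0, sign * angular_speed, angle / angular_speed)


print(plan_movement("forward", 1.0))
print(plan_movement("turn_right", 90.0))
```

Timing-based execution drifts on a real robot, so a server of this kind would typically close the loop on odometry. The sketch only shows how distance and angle parameters relate to velocity commands.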

- Ubuntu 20.04

- ROS Noetic

- Turtlebot2 Robot

- Camera compatible with ROS

- Turtlebot2 Package Installation on ROS Noetic: To begin, install the Turtlebot2 package on ROS Noetic. We've created a script for your convenience.

- Making our Packages Compatible with Turtlebot: To ensure compatibility with Turtlebot, add the following line to the beginning of the minimal.launch file within the ROS package named turtlebot:

  `<remap from="mobile_base/commands/velocity" to="cmd_vel"/>`

- Camera ROS Compatibility Installation: Ensure your camera is ROS-compatible; in our case, we used an Orbbec Astra camera. If needed, we've also prepared a script to assist with the installation.

- Compiling the Project: Once the previous steps have been completed, compile the project using the following commands:

cd catkin_ws

catkin_make

This project can be launched with random movements to test the functionality of the discrete_move server. These random movements substitute for the AI model and are generated by the ia.py Python script.
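The idea of replacing the model with random movements can be sketched as follows. This is not the content of ia.py. The action names, the step sizes, and the helper functions are assumptions chosen only to show the pattern of a stand-in policy that emits one of the four discrete movements at each step.

```python
import random

# Hypothetical action table: movement name -> parameter value.
# Distances are assumed to be metres and angles degrees.
ACTIONS = {
    "forward": 0.25,
    "backward": 0.25,
    "turn_left": 30.0,
    "turn_right": 30.0,
}


def random_action(rng: random.Random):
    """Pick one discrete movement uniformly at random, in place of a model."""
    movement = rng.choice(sorted(ACTIONS))
    return movement, ACTIONS[movement]


def random_episode(steps: int, seed=None):
    """Generate a sequence of random movements; a fixed seed makes it repeatable."""
    rng = random.Random(seed)
    return [random_action(rng) for _ in range(steps)]


for movement, value in random_episode(steps=5, seed=0):
    print(movement, value)
```

In the real client, each generated pair would be sent to the discrete_move server instead of printed. Swapping `random_action` for a model's inference call is the only change a deployment would need in this sketch.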

Before launching the project, ensure that the Turtlebot is connected and that the camera is connected to the Turtlebot.

- Launching the discrete_move server: To begin, launch the discrete_move server using the following command in a terminal:

. devel/setup.bash

roslaunch discrete_move turtlebot_server.launch

- Launching the VSN client: Next, launch the VSN client using the following command in a separate terminal:

. devel/setup.bash

roslaunch vsn vsn.launch

By following these steps, you'll be able to launch the project with randomized movements for a comprehensive functionality test.

This repository is designed for seamless integration with the PIRLNAV model, enhancing your robotic navigation capabilities.

To deploy the PIRLNAV model successfully using ROS4VSN, follow the step-by-step instructions in the examples directory. In this folder, you will find a detailed guide in the README file.

If you use this code in your research, please consider citing:

@misc{gutierrez-alvarez2023visual,

title={Visual Semantic Navigation with Real Robots},

author={Carlos Gutiérrez-Álvarez and Pablo Ríos-Navarro and Rafael Flor-Rodríguez and Francisco Javier Acevedo-Rodríguez and Roberto J. López-Sastre},

year={2023},

eprint={2311.16623},

archivePrefix={arXiv},

primaryClass={cs.RO}

}