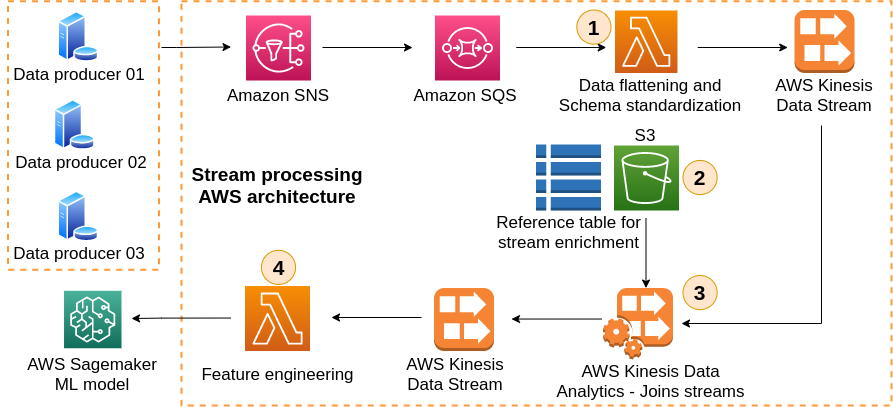

This repository shares the resources described in this article. The architecture diagram shows an AWS data pipeline that uses Kinesis Data Analytics to join and enrich three sets of streaming data in real time. The architecture is defined as code in this repository and can be deployed to your AWS environment with the steps below.

Requirements

- An AWS access key and secret key with sufficient privileges to deploy S3 buckets, Lambda functions, SNS, SQS, CloudWatch Logs, Kinesis Data Streams, and Kinesis Data Analytics in your account.

- Terraform >= 0.12
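One way to confirm the Terraform requirement before deploying is to compare version strings in the shell. The sketch below is an illustration only and is not part of this repository: `version_ge` is a hypothetical helper name, and it assumes a `sort` that supports `-V` (version sort), as GNU coreutils does.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the repository): succeeds when version $1 >= version $2.
# Assumes a `sort` that supports -V (version sort), as in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Check the installed Terraform against the 0.12 minimum.
# The first line of `terraform version` looks like "Terraform v<version>".
if command -v terraform >/dev/null 2>&1; then
  installed="$(terraform version | head -n 1 | sed 's/^Terraform v//')"
  if version_ge "$installed" "0.12"; then
    echo "Terraform $installed satisfies >= 0.12"
  else
    echo "Terraform $installed is older than 0.12" >&2
  fi
else
  echo "terraform not found on PATH" >&2
fi
```

The helper sorts the two versions and checks that the required one comes first, which avoids parsing major and minor numbers by hand.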

Local Deployment

- Clone this repository

git clone https://github.com/gpass0s/streaming-processing-on-aws.git

- Access this project's infra-as-code folder

cd streaming-processing-on-aws/infra-as-code

- Set your AWS credentials as local environment variables

export AWS_ACCESS_KEY_ID=<your-aws-access-key-id>

export AWS_SECRET_ACCESS_KEY=<your-aws-secret-access-key>

export AWS_DEFAULT_REGION=<your-aws-region>
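Before moving on, it can help to confirm that all three variables are actually set in the current shell. The following is a minimal sketch, not part of this repository; `check_aws_env` is a hypothetical function name. It only checks that the variables are non-empty and never prints their values; it does not verify that the credentials are valid.

```shell
# Hypothetical helper (not part of the repository): reports which of the
# three variables exported above are unset or empty.
check_aws_env() {
  missing=0
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION; do
    # Indirect lookup of the variable named by $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

check_aws_env && echo "AWS credentials are set in this shell"
```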

- Initialize Terraform

terraform init

- Create a Terraform plan

terraform plan

- Apply the resources to your account. If you get an error at this stage, run the command below again.

terraform apply
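Since the last step suggests re-running `terraform apply` after an error, the re-run can be wrapped in a small retry loop. This is a sketch under assumptions, not something the repository provides: `retry` is a hypothetical helper, and the attempt count is arbitrary.

```shell
# Hypothetical helper (not part of the repository): run a command up to
# $1 times, stopping at the first success.
retry() {
  attempts="$1"
  shift
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "Attempt $n of $attempts failed: $*" >&2
    n=$((n + 1))
  done
  return 1
}

# Usage (commented out so that pasting the snippet does not deploy anything):
# retry 2 terraform apply
```

Note that `terraform apply` asks for confirmation on every attempt, so each retry still requires typing `yes`.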