TensorFlow implementations of (Conditional) Variational Autoencoder concepts

cd {simple-vae|conditional-vae}/src

python main.py

VAE settings (β and latent dimension) can easily be modified inside main.py.

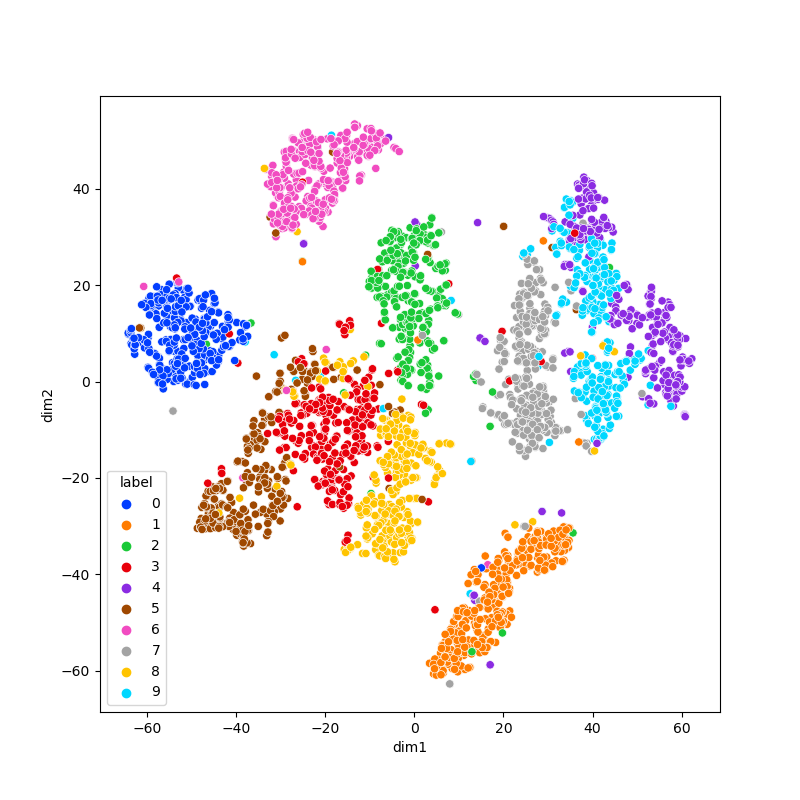

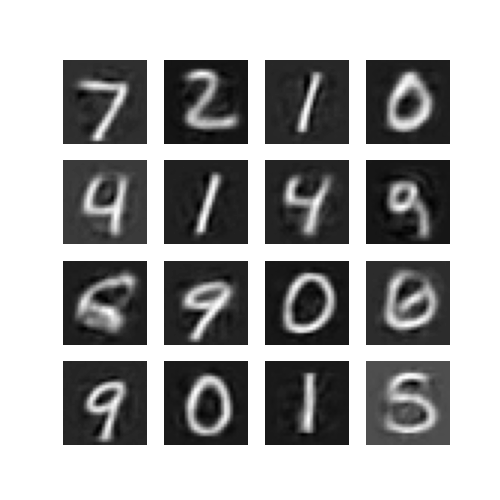

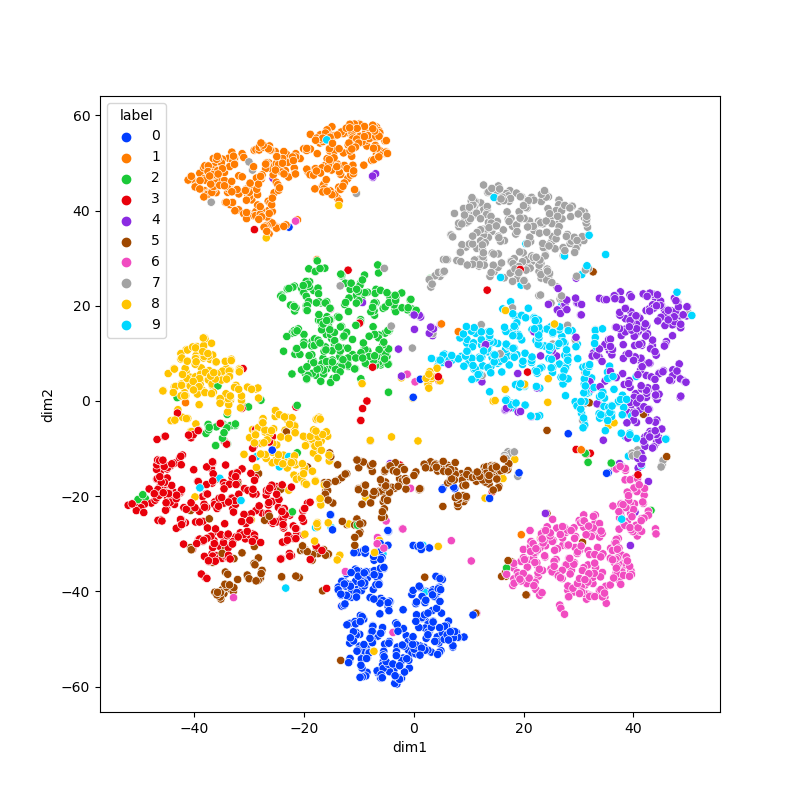

In the experiments below, the latent space visualization is obtained by applying t-SNE to the means output by the encoder for each sample in the training set.

- β = 0.0 (optimising only reconstruction loss): The latent space structure is not used, because the encoder can place each sample in its own separate location with point-like (near-zero) variance. Reconstruction quality on test images is high even though they were not used in training, but generation ability is very low.

Figure panels: 2D latent space, reconstructed test set images, newly generated images.

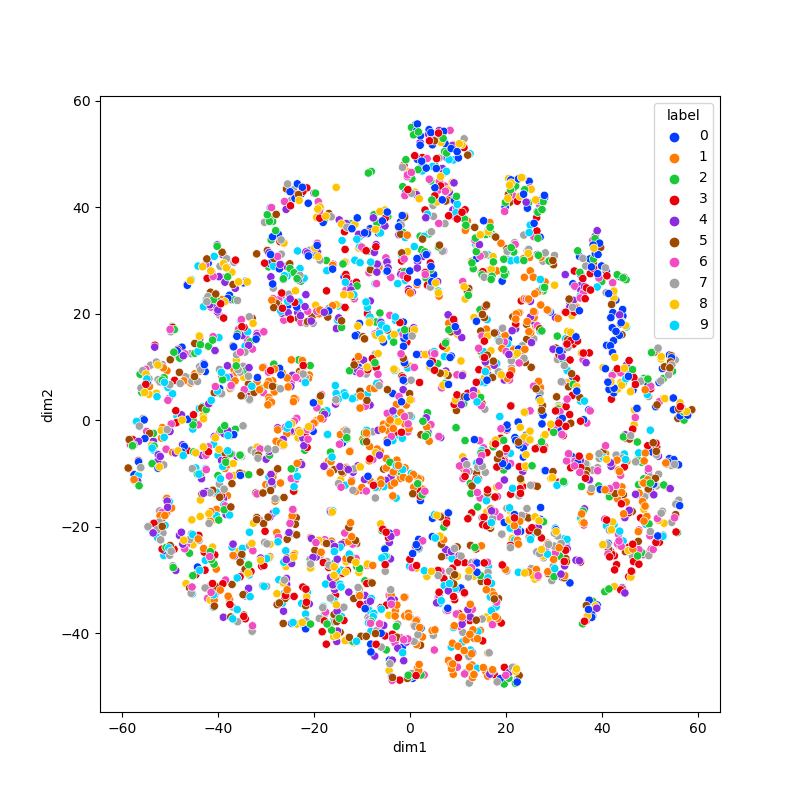

- β = 200.0 (KL loss dominates): With almost no reconstruction pressure, the encoder maps every sample to approximately unit Gaussian parameters, so no label-based (or similarity-based) clustering is observed in the latent space. Both test image reconstruction quality and generation ability are very low.

Figure panels: 2D latent space, reconstructed test set images, newly generated images.

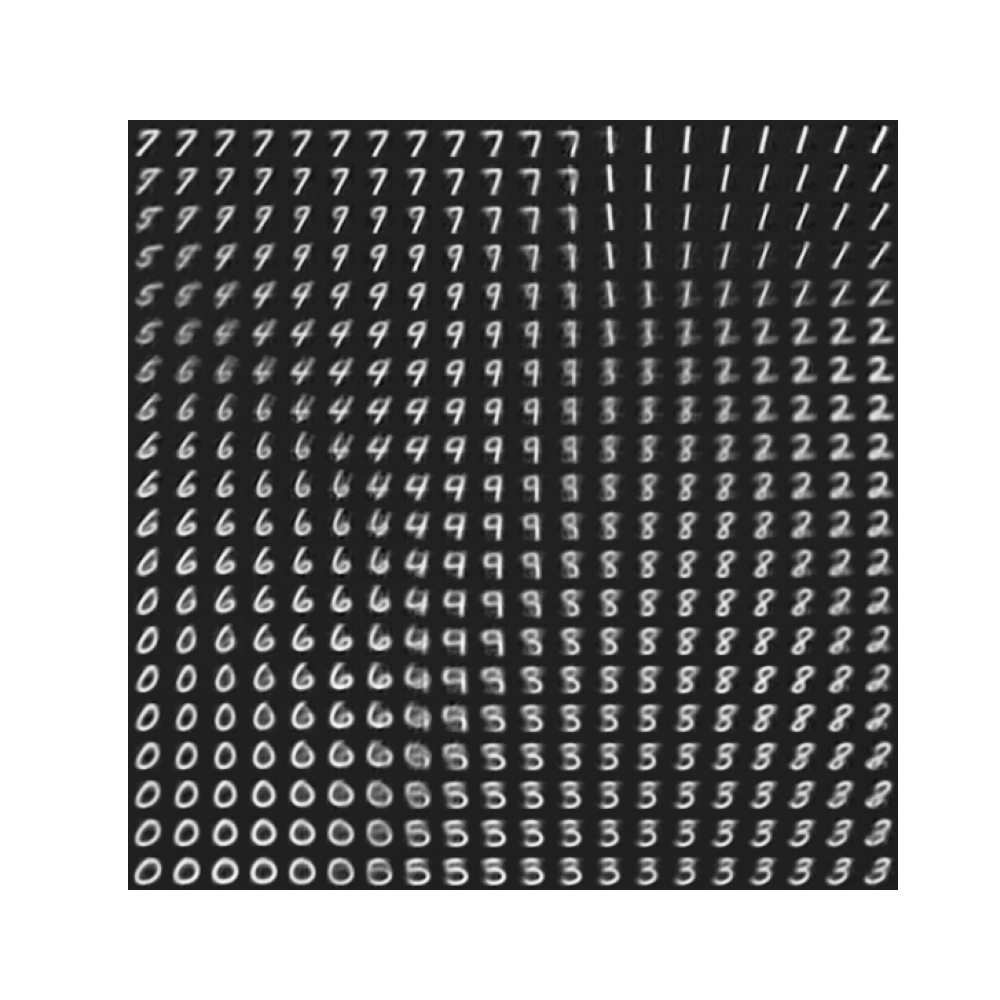

- β = 12.0 (optimising both losses): Both the clustering effect of the reconstruction loss and the dense packing effect of the KL loss are observed.

Figure panels: 2D latent space, digit manifold when latent dimension = 2.

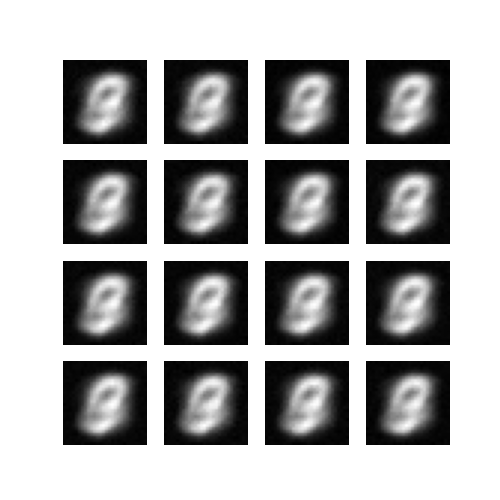

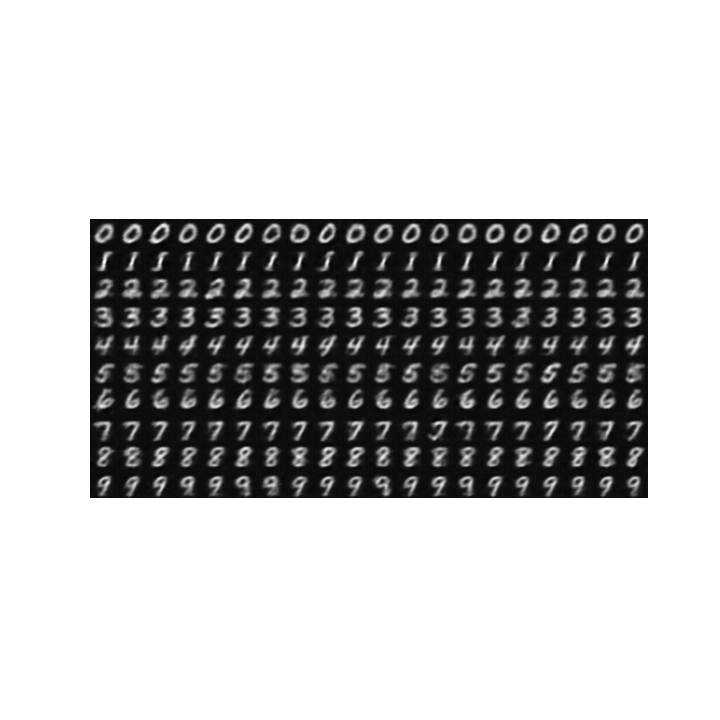

Instead of a single normal distribution in which each digit tries to find a place for itself, with label conditioning each digit now has its own Gaussian distribution. Thus, the 2D latent space visualization is no longer meaningful, but this is compensated by the ability to produce a desired digit. For the following experiments, even though I tried various architectures, the generated samples do not show enough variation. I observed the same problem in other implementations, such as in this.

Figure panels: β = 1.0, β = 1e-11.

- simple-vae: Both the encoder and the decoder consist of two fully connected hidden layers.

- conditional-vae: The encoder consists of two convolutional layers; a one-hot label vector is concatenated to their flattened output. In the decoder, the one-hot vector is concatenated to the latent vector after sampling. The decoder consists of three transposed convolution layers, where the final single feature map is the decoded image.
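The label conditioning described for conditional-vae can be illustrated with a small sketch. This is plain Python rather than the repository's TensorFlow code, and the helper names `one_hot` and `condition_on_label` are hypothetical; the only thing taken from the description above is that a one-hot label vector is appended to a flat vector (the flattened convolutional features in the encoder, the sampled latent vector in the decoder).

```python
def one_hot(label, num_classes=10):
    """Return a one-hot list for an integer class label (10 classes for MNIST digits)."""
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def condition_on_label(vector, label, num_classes=10):
    """Append the one-hot label to a flat feature vector or latent vector."""
    return list(vector) + one_hot(label, num_classes)


# Hypothetical 2-dimensional latent sample, conditioned on the digit 3.
z = [0.5, -0.5]
decoder_input = condition_on_label(z, 3)
```

At generation time the same idea would let one choose the digit: draw z from the prior, append the one-hot vector of the desired label, and pass the result to the decoder.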

Various ways of implementing a VAE are possible in TF, but I compute both losses after the forward pass, which means the model returns both the encoder and the decoder outputs.
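As a rough illustration of combining the two losses once the encoder and decoder outputs are available, here is a minimal sketch in plain Python. It is not the code in main.py. It assumes a binary cross-entropy reconstruction loss and an encoder that outputs a mean and a log-variance per latent dimension; neither is confirmed by this README, and all function names are hypothetical.

```python
import math


def bernoulli_reconstruction_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy summed over pixels (assumed reconstruction loss)."""
    total = 0.0
    for target, pred in zip(x, x_hat):
        pred = min(max(pred, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred)
    return total


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def beta_vae_loss(x, x_hat, mu, log_var, beta):
    """Return (total, reconstruction, kl) with total = reconstruction + beta * kl."""
    recon = bernoulli_reconstruction_loss(x, x_hat)
    kl = kl_to_standard_normal(mu, log_var)
    return recon + beta * kl, recon, kl


# Toy example: two pixels, one latent dimension.
total, recon, kl = beta_vae_loss([1.0, 0.0], [0.5, 0.5], [1.0], [0.0], beta=2.0)
```

With `beta=0.0` the total reduces to the reconstruction term alone, and a large `beta` lets the KL term dominate, which corresponds to the two extreme settings discussed in the experiments.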

Training is done on the MNIST training set, using the Adam optimizer with a learning rate of 1e-3 for a maximum of 15 epochs. Depending on the latent channel capacity, overfitting is possible, so early stopping via visual inspection is advised.

I experimented with different formulations of the re-parametrization trick and found that z = μ + σ ⊙ ε is less stable than z = μ + log(1 + exp(ρ)) ⊙ ε, although both produce good results.
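The two formulations can be compared side by side in a small sketch. It uses plain Python lists instead of TensorFlow tensors, and the function names are hypothetical. One plausible reason for the stability difference, which is an assumption rather than something measured here, is that log(1 + exp(ρ)) (softplus) yields a positive scale for any real ρ, whereas a raw σ output is unconstrained.

```python
import math
import random


def softplus(rho):
    """Numerically stable log(1 + exp(rho))."""
    return max(rho, 0.0) + math.log1p(math.exp(-abs(rho)))


def sample_direct(mu, sigma, rng):
    """z = mu + sigma * eps, with sigma used exactly as given."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


def sample_softplus(mu, rho, rng):
    """z = mu + softplus(rho) * eps, so the scale is positive for any real rho."""
    return [m + softplus(r) * rng.gauss(0.0, 1.0) for m, r in zip(mu, rho)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
z_direct = sample_direct([0.0, 1.0], [1.0, 0.5], rng)
z_softplus = sample_softplus([0.0, 1.0], [0.0, -2.0], rng)
```

In both cases ε is drawn from a standard normal independently of the parameters, so z remains a deterministic function of μ and the scale parameter given ε.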