Source code for EMNLP 2018 paper: RESIDE: Improving Distantly-Supervised Neural Relation Extraction using Side Information.

Resources: Paper | Slides | Poster | Video

Overview of RESIDE (proposed method): RESIDE first encodes each sentence in the bag by concatenating embeddings (denoted by ⊕) from Bi-GRU and Syntactic GCN for each token, followed by word attention.

Then, the sentence embedding is concatenated with relation alias information, which comes from the Side Information Acquisition Section, before computing attention over sentences. Finally, the bag representation with entity type information is fed to a softmax classifier. Please refer to the paper for more details.
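The data flow described above can be sketched in NumPy. This is only an illustrative shape-level sketch, not the code in `reside.py`: the Bi-GRU and GCN outputs are replaced by random arrays, attention is assumed to be a plain dot product with a learned query vector, and the names `attention_pool`, `classify_bag` and `params` are hypothetical.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over the last axis."""
    shifted = x - x.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def attention_pool(rows, query):
    """Weight each row by softmax(rows @ query) and return the weighted sum."""
    weights = softmax(rows @ query)
    return weights @ rows


def classify_bag(gru_states, gcn_states, alias_embs, type_emb, params):
    """Return relation probabilities for one bag of sentences."""
    sentence_vecs = []
    for h_gru, h_gcn, alias in zip(gru_states, gcn_states, alias_embs):
        # Per-token concatenation of Bi-GRU and GCN states, then word attention.
        tokens = np.concatenate([h_gru, h_gcn], axis=1)
        sentence = attention_pool(tokens, params["word_query"])
        # Append the relation alias side information to the sentence embedding.
        sentence_vecs.append(np.concatenate([sentence, alias]))
    # Attention over sentences gives the bag representation.
    bag = attention_pool(np.stack(sentence_vecs), params["sent_query"])
    # Append entity type side information before the softmax classifier.
    features = np.concatenate([bag, type_emb])
    return softmax(params["W"] @ features + params["b"])


# Random stand-ins for encoder outputs (all sizes are arbitrary assumptions).
rng = np.random.default_rng(0)
d_gru, d_gcn, d_alias, d_type, n_rel = 6, 4, 3, 5, 7
lengths = [5, 8, 3]  # tokens per sentence in the bag
gru_states = [rng.normal(size=(t, d_gru)) for t in lengths]
gcn_states = [rng.normal(size=(t, d_gcn)) for t in lengths]
alias_embs = [rng.normal(size=d_alias) for _ in lengths]
type_emb = rng.normal(size=d_type)
params = {
    "word_query": rng.normal(size=d_gru + d_gcn),
    "sent_query": rng.normal(size=d_gru + d_gcn + d_alias),
    "W": rng.normal(size=(n_rel, d_gru + d_gcn + d_alias + d_type)),
    "b": np.zeros(n_rel),
}
probs = classify_bag(gru_states, gcn_states, alias_embs, type_emb, params)
print(probs.shape)
```

The sketch only shows where each piece of side information enters the pipeline; the actual encoders, attention parameterization and dimensions are described in the paper.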

Improvements over original RESIDE model:

- Support for ELMo embeddings.

- Compatible with TensorFlow 1.x and Python 3.x.

- Dependencies can be installed using `requirements.txt`.

- We use the Riedel NYT and Google IISc Distant Supervision (GIDS) datasets for evaluation.

- The processed versions of the datasets can be downloaded from RiedelNYT and GIDS. The structure of the processed input data is as follows.

    {
      "voc2id":  {"w1": 0, "w2": 1, ...},
      "type2id": {"type1": 0, "type2": 1, ...},
      "rel2id":  {"NA": 0, "/location/neighborhood/neighborhood_of": 1, ...},
      "max_pos": 123,
      "train": [
        {
          "X":        [[s1_w1, s1_w2, ...], [s2_w1, s2_w2, ...], ...],
          "Y":        [bag_label],
          "Pos1":     [[s1_p1_1, s1_p1_2, ...], [s2_p1_1, s2_p1_2, ...], ...],
          "Pos2":     [[s1_p2_1, s1_p2_2, ...], [s2_p2_1, s2_p2_2, ...], ...],
          "SubPos":   [s1_sub, s2_sub, ...],
          "ObjPos":   [s1_obj, s2_obj, ...],
          "SubType":  [s1_subType, s2_subType, ...],
          "ObjType":  [s1_objType, s2_objType, ...],
          "ProbY":    [[s1_rel_alias1, s1_rel_alias2, ...], [s2_rel_alias1, ...], ...],
          "DepEdges": [[s1_dep_edges], [s2_dep_edges], ...]
        },
        {}, ...
      ],
      "test":  { same as "train" },
      "valid": { same as "train" }
    }

- `voc2id` is the mapping of each word to its id.
- `type2id` is the mapping of each entity type to its id.
- `rel2id` is the mapping of each relation to its id.
- `max_pos` is the maximum position to consider for positional embeddings.
- Each entry of `train`, `test` and `valid` is a bag of sentences, where
  - `X` denotes the sentences in the bag, as a list of lists of word indices for GloVe and as a list of text sentences for ELMo.
  - `Y` is the relation expressed by the sentences in the bag.
  - `Pos1` and `Pos2` are the positions of each word in the sentences with respect to target entity 1 and entity 2.
  - `SubPos` and `ObjPos` contain the positions of target entity 1 and entity 2 in each sentence.
  - `SubType` and `ObjType` contain the type information of target entity 1 and entity 2 obtained from the KG.
  - `ProbY` is the relation alias side information (refer to the paper) for the bag.
  - `DepEdges` is the edge list of the dependency parse for each sentence (required for GCN).
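As a rough illustration of the format above, the following sketch builds a one-bag toy dataset by hand and runs a few consistency checks on it. The vocabulary, type names, position offsets and dependency edges are made-up placeholder values, and `describe_bag` is a hypothetical helper rather than part of the repository; only the field names come from the structure described above.

```python
def describe_bag(bag, rel2id):
    """Check per-sentence alignment of a GloVe-style bag and summarize it."""
    id2rel = {idx: rel for rel, idx in rel2id.items()}
    n_sent = len(bag["X"])
    # These fields are assumed to hold exactly one entry per sentence.
    for key in ("Pos1", "Pos2", "SubPos", "ObjPos", "DepEdges"):
        if len(bag[key]) != n_sent:
            raise ValueError(f"{key} has {len(bag[key])} entries, expected {n_sent}")
    # Each word needs one position value relative to each target entity.
    for words, pos1, pos2 in zip(bag["X"], bag["Pos1"], bag["Pos2"]):
        if not (len(words) == len(pos1) == len(pos2)):
            raise ValueError("Pos1/Pos2 must align with the words of a sentence")
    return {
        "num_sentences": n_sent,
        "relations": [id2rel[label] for label in bag["Y"]],
    }


# Toy data: every value below is illustrative only.
data = {
    "voc2id": {"obama": 0, "was": 1, "born": 2, "in": 3, "hawaii": 4},
    "type2id": {"/person": 0, "/location": 1},
    "rel2id": {"NA": 0, "/people/person/place_of_birth": 1},
    "max_pos": 123,
    "train": [
        {
            "X": [[0, 1, 2, 3, 4]],
            "Y": [1],
            "Pos1": [[0, 1, 2, 3, 4]],      # placeholder offsets to entity 1
            "Pos2": [[-4, -3, -2, -1, 0]],  # placeholder offsets to entity 2
            "SubPos": [0],
            "ObjPos": [4],
            "SubType": [0],
            "ObjType": [1],
            "ProbY": [[1]],
            "DepEdges": [[(2, 0), (2, 1), (2, 4), (4, 3)]],  # placeholder edges
        }
    ],
}

summary = describe_bag(data["train"][0], data["rel2id"])
print(summary)
```

The same checks could be run on a bag loaded from one of the processed pickle files, assuming the file unpickles to a dictionary with the keys shown above.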

- `reside.py` contains a TensorFlow (1.x) based implementation of RESIDE (proposed method).

- Download the pretrained model's parameters from RiedelNYT and GIDS (put the downloaded folders in the `checkpoint` directory).

- Execute `evaluate.sh` to compare the pretrained RESIDE model against baselines (plots the Precision-Recall curve).

- Entity Type information for both datasets is provided in `side_info/type_info.zip`.
  - Entity type information can be used directly in the model.

- Relation Alias information for both datasets is provided in `side_info/relation_alias.zip`.

- Execute `setup.sh` to download GloVe embeddings.

- Execute `load_pickle_glove.sh` to load the preprocessed dataset for the RESIDE-GloVe model.

- Execute `load_pickle_elmo.sh` to load the preprocessed dataset for the RESIDE-ELMo model.

- For training RESIDE run:

python reside.py --data data/riedel_processed_elmo.pkl --name new_run

- By default, ELMo embeddings are used. To run with GloVe embeddings, add `--embed_type GloVe`.

- The above model needs to be further trained with the SGD optimizer for a few epochs to match the performance reported in the paper. For that, execute

python reside.py --name new_run --restore -opt sgd --lr 0.001 -l2 0.0 -epoch 4

- Finally, run `python plot_pr.py --name new_run` to get the plot.
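For readers unfamiliar with how a Precision-Recall curve is obtained from model outputs, here is a minimal sketch. It is a generic computation over scored predictions and is not taken from `plot_pr.py`, whose exact procedure may differ; the function name `pr_points` and the toy scores are assumptions made for illustration.

```python
import numpy as np


def pr_points(scores, labels):
    """Return precision and recall after each prediction, ranked by score."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(labels, dtype=int)[order]
    true_pos = np.cumsum(hits)                      # correct predictions so far
    precision = true_pos / np.arange(1, len(hits) + 1)
    recall = true_pos / max(int(hits.sum()), 1)     # avoid division by zero
    return precision, recall


# Toy predictions: confidence for a (bag, relation) pair and whether it is correct.
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
labels = [1, 0, 1, 1, 0]
precision, recall = pr_points(scores, labels)
for p, r in zip(precision, recall):
    print(f"precision={p:.2f} recall={r:.2f}")
```

Plotting `recall` on the x-axis against `precision` on the y-axis gives the curve; each point corresponds to keeping only the predictions above one confidence threshold.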

- The `preproc` directory contains code for getting a new dataset in the required format (`riedel_processed.pkl`) for `reside.py`.

- Get the data in the same format as followed in riedel_raw for the `Riedel NYT` dataset.

- Finally, run the script `preprocess.sh`. `make_bags.py` is used for generating bags from sentences. `generate_pickle.py` converts the data into the required pickle format.
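The idea of generating bags from sentences can be sketched as follows. This is not the logic of `make_bags.py`: it assumes each raw record is a dictionary with the hypothetical keys `sub`, `obj`, `rel` and `sent`, and it groups sentences by entity pair, which is the usual bag definition in distant supervision.

```python
from collections import defaultdict


def group_into_bags(records):
    """Group sentence records that mention the same (subject, object) pair."""
    grouped = defaultdict(lambda: {"sentences": [], "relations": set()})
    for rec in records:
        bag = grouped[(rec["sub"], rec["obj"])]
        bag["sentences"].append(rec["sent"])
        bag["relations"].add(rec["rel"])
    # Convert to plain dicts with sorted relation labels for stable output.
    return {
        pair: {"sentences": bag["sentences"], "relations": sorted(bag["relations"])}
        for pair, bag in grouped.items()
    }


# Toy records; the key names and sentences are illustrative assumptions.
records = [
    {"sub": "obama", "obj": "hawaii", "rel": "/people/person/place_of_birth",
     "sent": "obama was born in hawaii"},
    {"sub": "obama", "obj": "hawaii", "rel": "/people/person/place_of_birth",
     "sent": "obama grew up in hawaii"},
    {"sub": "paris", "obj": "france", "rel": "NA",
     "sent": "paris hosted a summit attended by france"},
]
bags = group_into_bags(records)
for pair, bag in bags.items():
    print(pair, len(bag["sentences"]), bag["relations"])
```

Each resulting bag would then still need word indices, position features, entity types, relation aliases and dependency edges before it matches the pickle format expected by `reside.py`.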

-

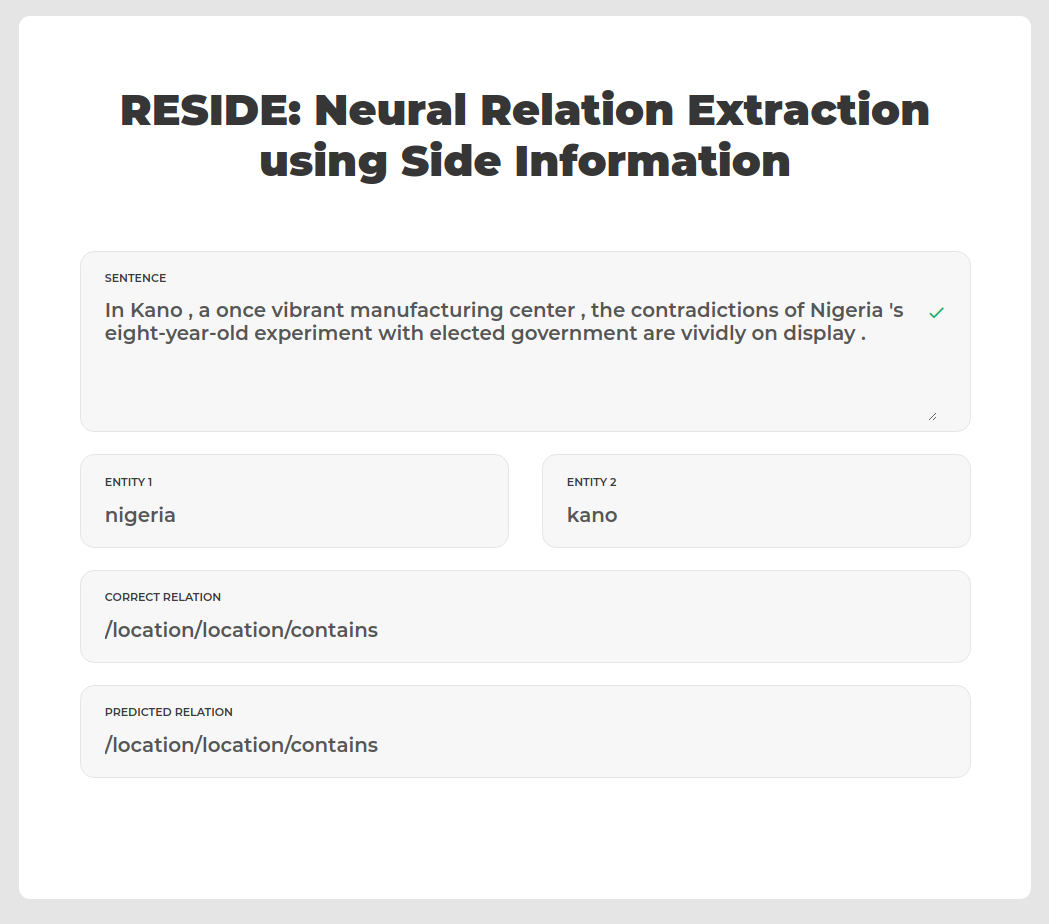

The code for running pretrained model on a sample is included in

onlinedirectory. -

A flask based server is also provided. Use

python online/server.pyto start the server.- riedel_test_bags.json and other required files can be downloaded from the provided links.