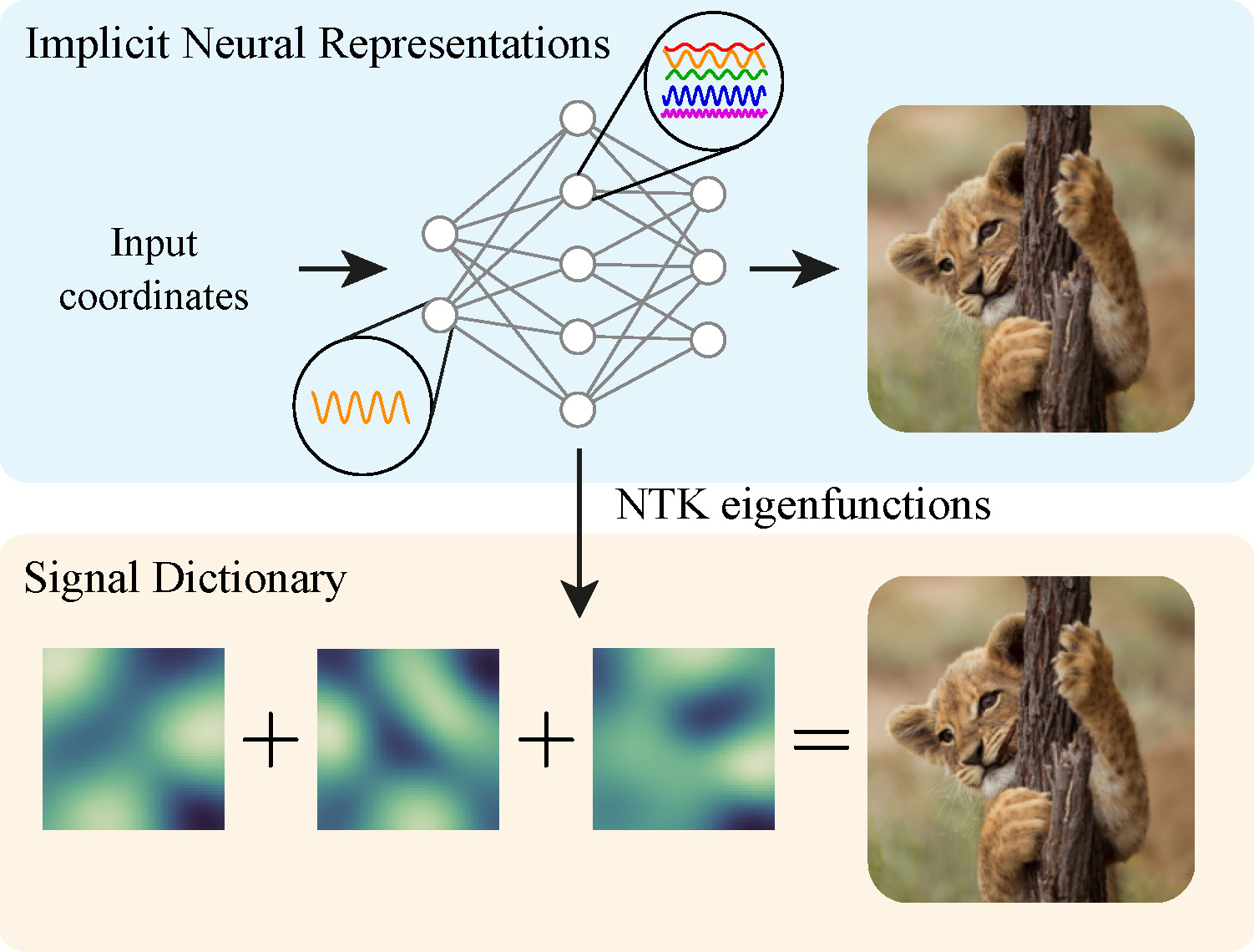

This is the source code to reproduce the experiments of the CVPR 2022 paper "A structured dictionary perspective on implicit neural representations" by Gizem Yüce*, Guillermo Ortiz-Jimenez*, Beril Besbinar and Pascal Frossard.

To run the code, please install all its dependencies by running:

```bash
$ pip install -r requirements.txt
```

This assumes that you have access to a Linux machine with an NVIDIA GPU and CUDA>=11.1. Otherwise, please check the instructions for installing JAX on your setup in the official JAX repository.
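After installing, you can quickly confirm that JAX picked up the GPU by querying its default backend. The snippet below is a minimal sketch. The helper name `jax_backend` is hypothetical and is not part of this repository. It returns `None` when JAX is not installed, so it is safe to run before the dependencies are in place.

```python
import importlib.util
from typing import Optional


def jax_backend() -> Optional[str]:
    """Hypothetical helper: JAX's default backend name, or None if JAX is missing."""
    if importlib.util.find_spec("jax") is None:
        return None
    import jax  # imported lazily so the check works without JAX installed

    return jax.default_backend()  # typically "gpu" on CUDA machines, "cpu" otherwise


if __name__ == "__main__":
    backend = jax_backend()
    if backend is None:
        print("JAX is not installed; run `pip install -r requirements.txt` first.")
    elif backend == "cpu":
        print("JAX only sees the CPU; check your CUDA-enabled JAX installation.")
    else:
        print(f"JAX default backend: {backend}")
```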

The repository contains code to reproduce each figure in the paper, as well as to pretrain a SIREN on the CelebA dataset using meta-learning:

- Figure 2: Imperfect reconstruction
- Figure 3: Aliasing
- Figure 4: NTK alignment
- Figure 5: NTK eigenvectors
- Meta-learning pretraining

Figure 2 shows examples of imperfect reconstruction of an image using INRs with a limited frequency support. To reproduce this figure you can run

```bash
$ python figure_2.py
```

This script will save the resulting PDF figures under `figures/figure_2/`.
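To build intuition for why a limited frequency support leads to imperfect reconstruction, here is a NumPy sketch. It replaces the INR with a fixed dictionary of integer-frequency sinusoids. That substitution is a simplifying assumption for illustration, not the code in `figure_2.py`. A least-squares fit can only recover the part of the target that lies in the span of the atoms, so any component at an uncovered frequency stays in the residual.

```python
import numpy as np


def fourier_dictionary(x: np.ndarray, freqs) -> np.ndarray:
    """Columns are cos/sin atoms at the given integer frequencies on [0, 1)."""
    cols = []
    for k in freqs:
        cols.append(np.cos(2 * np.pi * k * x))
        if k != 0:  # sin(0) is identically zero, so skip it
            cols.append(np.sin(2 * np.pi * k * x))
    return np.stack(cols, axis=1)


def best_reconstruction(signal: np.ndarray, x: np.ndarray, freqs) -> np.ndarray:
    """Least-squares projection of `signal` onto the span of the dictionary."""
    D = fourier_dictionary(x, freqs)
    coeffs, *_ = np.linalg.lstsq(D, signal, rcond=None)
    return D @ coeffs


x = np.arange(64) / 64  # 64 uniform samples of [0, 1)
target = np.cos(2 * np.pi * 3 * x) + 0.5 * np.cos(2 * np.pi * 10 * x)

# Support {0, ..., 5}: frequency 10 lies outside it and cannot be reproduced.
limited = best_reconstruction(target, x, range(6))
# Support {0, ..., 12}: both frequencies of the target are covered.
full = best_reconstruction(target, x, range(13))

print(np.max(np.abs(target - limited)))  # ≈ 0.5, the missing component
print(np.max(np.abs(target - full)))  # ≈ 0 up to floating-point error
```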

Figure 3 shows an example of induced aliasing when reconstructing an ambiguous single-tone signal using an INR with a high-frequency emphasis. To reproduce this figure you can run

```bash
$ python figure_3.py
```

This script will save the resulting PDF figures under `figures/figure_3/`.
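The ambiguity behind this example can be shown without training anything. When a tone is sampled at N uniform points, a tone whose frequency is shifted by N takes exactly the same values at those points, so the training data alone cannot tell the two apart. The NumPy sketch below checks this. The sample count and frequencies are arbitrary choices and are not the settings used in `figure_3.py`. An INR whose dictionary emphasizes high frequencies can therefore fit the samples with the high-frequency alias instead of the intended low tone.

```python
import numpy as np

N_SAMPLES = 8  # number of uniform training samples on [0, 1)


def low_tone(x):
    return np.cos(2 * np.pi * 1 * x)  # single tone at frequency 1


def high_alias(x):
    return np.cos(2 * np.pi * (1 + N_SAMPLES) * x)  # frequency shifted by N_SAMPLES


x_train = np.arange(N_SAMPLES) / N_SAMPLES
x_between = (np.arange(N_SAMPLES) + 0.5) / N_SAMPLES  # midpoints between samples

# Identical on the training grid: cos(2*pi*(1 + N)*n/N) = cos(2*pi*n/N + 2*pi*n).
print(np.max(np.abs(low_tone(x_train) - high_alias(x_train))))  # ≈ 0
# Very different between the samples.
print(np.max(np.abs(low_tone(x_between) - high_alias(x_between))))  # ≈ 1.85
```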

Figure 4 compares the alignment of the eigenvectors of the NTK at initialization with 100 CelebA images for different INR architectures. To reproduce this figure you can run

```bash
$ python figure_4.py
```

This script will save the resulting PDF figures under `figures/figure_4/`.

⚠️ **Requires the meta-learned weights produced by `meta_learn.py`!** `figure_4.py` assumes that you have access to the pretrained weights of a meta-learned SIREN. This repository provides such weights for our default hyperparameters. However, if you want to experiment with other configurations, you will need to run `meta_learn.py` first.
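To make the notion of alignment concrete, the sketch below measures what fraction of a target signal's energy falls in the span of a kernel's top-k eigenvectors. It rests on two assumptions. First, this energy-concentration metric is one common way to quantify kernel-target alignment and may differ in detail from the measure computed in `figure_4.py`. Second, the kernel is a Gaussian (RBF) stand-in on a 1D grid, not the NTK of any INR from the paper.

```python
import numpy as np


def energy_concentration(kernel: np.ndarray, target: np.ndarray, k: int) -> float:
    """Fraction of the target's energy lying in the span of the top-k kernel eigenvectors."""
    eigvals, eigvecs = np.linalg.eigh(kernel)  # eigh returns ascending eigenvalues
    top = eigvecs[:, np.argsort(eigvals)[::-1][:k]]  # k eigenvectors with largest eigenvalues
    coeffs = top.T @ target  # coordinates of the target in that orthonormal basis
    return float(np.sum(coeffs**2) / np.sum(target**2))


# Stand-in kernel: an RBF kernel on a 1D grid (NOT the NTK of an INR).
x = np.linspace(0.0, 1.0, 128)
kernel = np.exp(-((x[:, None] - x[None, :]) ** 2) / (2 * 0.05**2))

smooth = np.sin(2 * np.pi * x)  # low-frequency target
rough = np.sign(np.sin(2 * np.pi * 16 * x))  # high-frequency square wave

smooth_score = energy_concentration(kernel, smooth, k=10)  # high: well aligned
rough_score = energy_concentration(kernel, rough, k=10)  # low: poorly aligned
print(smooth_score, rough_score)
```

A target that is well aligned with the kernel concentrates its energy in the leading eigenvectors, which are the directions that gradient descent fits fastest.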

Figure 5 plots the eigenvectors of the NTK at initialization for different architectures. To reproduce this figure you can run

```bash
$ python figure_5.py
```

This script will save the resulting PDF figures under `figures/figure_5/`.

⚠️ **Requires the meta-learned weights produced by `meta_learn.py`!** `figure_5.py` assumes that you have access to the pretrained weights of a meta-learned SIREN. This repository provides such weights for our default hyperparameters. However, if you want to experiment with other configurations, you will need to run `meta_learn.py` first.
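For readers who want to see what computing NTK eigenvectors at initialization involves, here is a small NumPy sketch. It computes the empirical NTK of a hypothetical one-hidden-layer sine network in 1D, f(x) = Σ_j a_j sin(w_j x + b_j) / √m, with the Jacobian written out by hand. This is a simplified stand-in. It does not reproduce the architectures, initializations, or 2D image inputs used by `figure_5.py`, and the frequency scale of 10 is an arbitrary assumption.

```python
import numpy as np


def sine_net_jacobian(x, w, b, a):
    """Jacobian of f(x) = sum_j a_j sin(w_j x + b_j) / sqrt(m) w.r.t. (w, b, a)."""
    m = len(a)
    pre = np.outer(x, w) + b  # (n, m) pre-activations
    d_w = a * x[:, None] * np.cos(pre)  # df/dw_j = a_j x cos(w_j x + b_j)
    d_b = a * np.cos(pre)  # df/db_j = a_j cos(w_j x + b_j)
    d_a = np.sin(pre)  # df/da_j = sin(w_j x + b_j)
    return np.concatenate([d_w, d_b, d_a], axis=1) / np.sqrt(m)


def empirical_ntk(x, w, b, a):
    """K[i, k] = <df(x_i)/dtheta, df(x_k)/dtheta> at the given parameters."""
    J = sine_net_jacobian(x, w, b, a)
    return J @ J.T


rng = np.random.default_rng(0)
m = 256
w = rng.normal(0.0, 10.0, m)  # first-layer frequencies (scale is an assumption)
b = rng.uniform(-np.pi, np.pi, m)
a = rng.normal(0.0, 1.0, m)

x = np.linspace(-1.0, 1.0, 200)
K = empirical_ntk(x, w, b, a)
eigvals, eigvecs = np.linalg.eigh(K)  # ascending eigenvalues
top_eigvecs = eigvecs[:, ::-1][:, :4]  # four eigenvectors with the largest eigenvalues
```

Plotting each column of `top_eigvecs` against `x` (for example with matplotlib) gives a 1D analogue of the eigenvector plots in Figure 5.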

For completeness, we provide a standalone script, `meta_learn.py`, to meta-train a SIREN network on the CelebA dataset. The code of this script has been taken from Tancik et al. (2021). Running this script will overwrite the weights in `maml_celebA_5000.pickle`.
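Because running `meta_learn.py` overwrites the provided weights, you may want to keep a copy first. The helper below is a hypothetical sketch, not part of this repository. It assumes that `maml_celebA_5000.pickle` sits in the current working directory, and it copies the file to a timestamped name next to the original.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_weights(path: str = "maml_celebA_5000.pickle") -> Path:
    """Hypothetical helper: copy the weights file to a timestamped backup and return its path."""
    src = Path(path)  # assumed location: current working directory by default
    if not src.exists():
        raise FileNotFoundError(f"{src} not found; are you in the repository root?")
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    dst = src.with_name(f"{src.stem}.{stamp}{src.suffix}")
    shutil.copy2(src, dst)  # copy2 also preserves file metadata such as timestamps
    return dst
```

For example, call `backup_weights()` from the repository root, then run `python meta_learn.py`.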

If you use this code, please cite the following paper:

```bibtex
@InCollection{inr_dictionaries2022,
  title = {A structured dictionary perspective on implicit neural representations},
  author = {Y\"uce, Gizem and {Ortiz-Jimenez}, Guillermo and Besbinar, Beril and Frossard, Pascal},
  booktitle = {Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022)},
  month = Jun,
  year = {2022}
}
```