A wrapper for scipy.optimize.minimize to make it a PyTorch

Optimizer implementing Conjugate Gradients, BFGS, l-BFGS, SLSQP, Newton

Conjugate Gradient, Trust Region methods and others in PyTorch.

Warning: this project is a proof of concept and is not necessarily reliable, although the code (that's all of it) is small enough to be readable.

- Quickstart

- Which Algorithms Are Supported?

- Global Optimizers

- How Does it Work?

- How Does This Evaluate the Hessian?

- Credits

Dependencies:

pytorchscipy

The following install procedure isn't going to check these are installed.

This package can be installed with pip directly from Github:

pip install git+https://github.com/gngdb/pytorch-minimize.git

Or by cloning the repository and then installing:

git clone https://github.com/gngdb/pytorch-minimize.git

cd pytorch-minimize

python -m pip install .

The Optimizer class is MinimizeWrapper in pytorch_minimize.optim. It

has the same interface as a PyTorch Optimizer, taking

model.parameters(), and is configured by passing a dictionary of

arguments, here called minimizer_args, that will later be passed to

scipy.optimize.minimize:

from pytorch_minimize.optim import MinimizeWrapper

minimizer_args = dict(method='CG', options={'disp':True, 'maxiter':100})

optimizer = MinimizeWrapper(model.parameters(), minimizer_args)

The main difference when using this optimizer as opposed to most PyTorch

optimizers is that a closure (torch.optim.LBFGS also

requires this) must be defined:

def closure():

optimizer.zero_grad()

output = model(input)

loss = loss_fn(output, target)

loss.backward()

return loss

optimizer.step(closure)

This optimizer is intended for deterministic optimisation problems,

such as full batch learning problems. Because of this,

optimizer.step(closure) should only need to be called once.

Can .step(closure) be called more than once? Technically yes, but it

shouldn't be necessary because multiple steps are run internally up to the

maxiter option in minimizer_args and multiple calls are not

recommended. Each call to optimizer.step(closure) is an independent

evaluation of scipy.optimize.minimize, so the internal state of any

optimization algorithm will be interrupted.

Using PyTorch to calculate the Jacobian, the following algorithms are supported:

- Conjugate Gradients:

'CG' - Broyden-Fletcher-Goldfarb-Shanno (BFGS):

'BFGS' - Limited-memory BFGS:

'L-BFGS-B'but requires double precision:nn.Modulecontaining parameters must be cast to double, example:model = model.double()

- Sequential Least Squares Programming:

'SLSQP' - Truncated Newton:

'TNC'but also requires double precision

The method name string is given on the right, corresponding to the names used by scipy.optimize.minimize.

Warning: this is experimental and probably unpredictable.

To use the methods that require evaluating the Hessian, a Closure object

with the following methods is required (full MNIST example

here):

class Closure():

def __init__(self, model):

self.model = model

@staticmethod

def loss(model):

output = model(data)

return loss_fn(output, target)

def __call__(self):

optimizer.zero_grad()

loss = self.loss(self.model)

loss.backward()

return loss

closure = Closure(model)

The following methods can then be used:

- Newton Conjugate Gradient:

'Newton-CG' - Newton Conjugate Gradient Trust-Region:

'trust-ncg' - Krylov Subspace Trust-Region:

'trust-krylov' - Nearly Exact Trust-Region:

'trust-exact' - Constrained Trust-Region:

'trust-constr'

The code contains hacks to make it possible to call torch.autograd.functional.hessian (which is itself only supplied in PyTorch as beta).

If using the scipy.optimize.minimize algorithms that don't require

gradients (such as 'Nelder-Mead', 'COBYLA' or 'Powell'), ensure that

minimizer_args['jac'] = False when instancing MinimizeWrapper.

Algorithms I tested didn't converge on a toy problem or hit errors. You can still select them but they may not work:

- Dogleg:

'dogleg'

All the other methods that require gradients converged on a toy problem that is tested in Travis-CI.

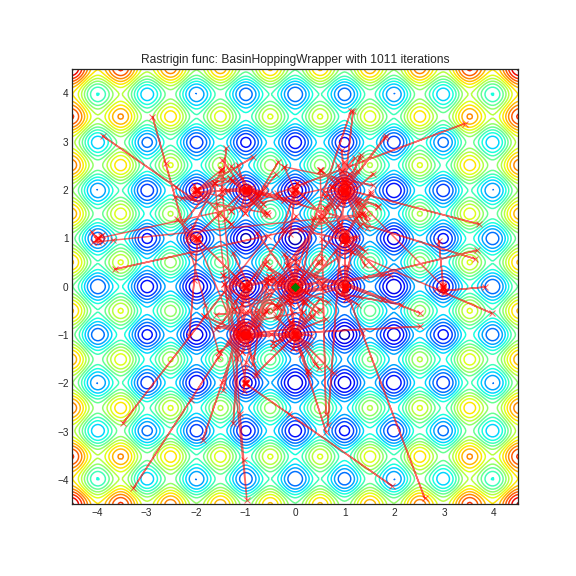

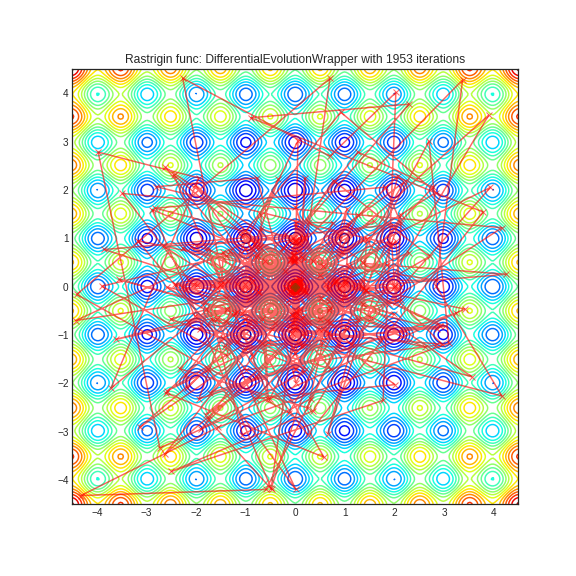

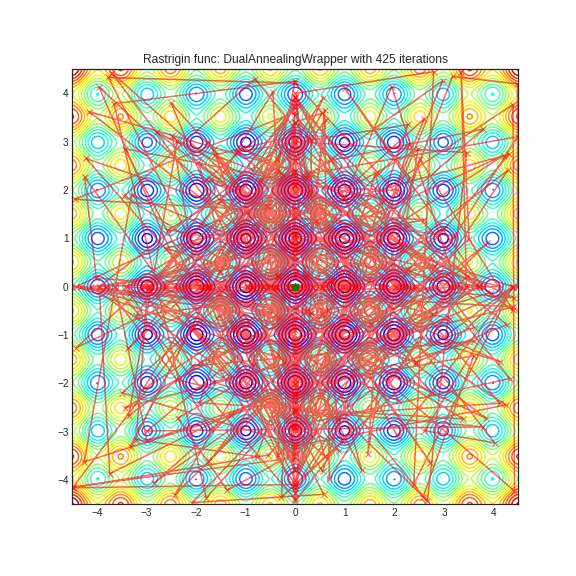

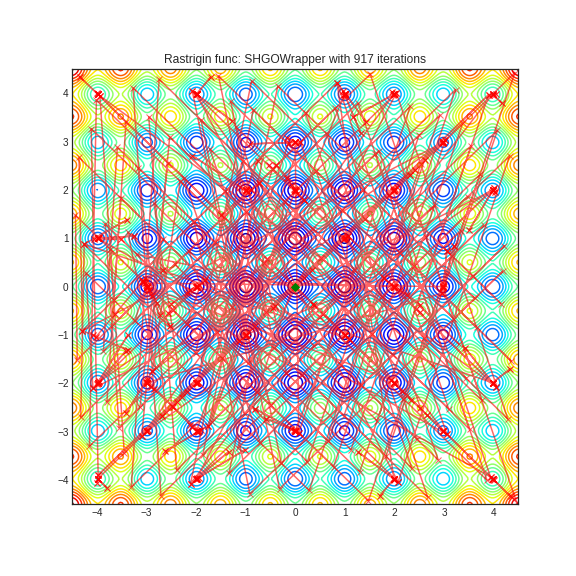

There are a few global optimization algorithms in

scipy.optimize. The following are supported via their own

wrapper classes:

- Basin Hopping via

BasinHoppingWrapper - Differential Evolution via

DifferentialEvolutionWrapper - Simplicial Homology Global Optimization via

SHGOWrapper - Dual Annealing via

DualAnnealingWrapper

An example of how to use one of these wrappers:

from pytorch_minimize.optim import BasinHoppingWrapper

minimizer_args = dict(method='CG', options={'disp':True, 'maxiter':100})

basinhopping_kwargs = dict(niter=200)

optimizer = BasinHoppingWrapper(model.parameters(), minimizer_args, basinhopping_kwargs)

These are also illustrated in this colab notebook, where the following plots were generated:

scipy.optimize.minimize is expecting to receive a function fun that

returns a scalar and an array of gradients the same size as the initial

input array x0. To accomodate this, MinimizeWrapper does the following:

- Create a wrapper function that will be passed as

fun - In that function:

- Unpack the umpy array into parameter tensors

- Substitute each parameter in place with these tensors

- Evaluate

closure, which will now use these parameter values - Extract the gradients

- Pack the gradients back into one 1D Numpy array

- Return the loss value and the gradient array

Then, all that's left is to call scipy.optimize.minimize and unpack the

optimal parameters found back into the model.

This procedure involves unpacking and packing arrays, along with moving back and forth between Numpy and PyTorch, which may incur some overhead. I haven't done any profiling to find out if it's likely to be a big problem and it completes in seconds when optimizing a logistic regression on MNIST by conjugate gradients.

There are a few other projects that incorporate scipy.optimize and

pytorch:

- This gist I wrote in 2018 then forgot about creates an

Objective object to pass into

scipy.optimizebut packs the arrays and gradients in approximately the same way. - botorch's

gen_candidates_scipywrapsscipy.optimize.minimizeand uses it to optimize acquisition functions as part of Bayesian Optimization. - autograd-minimize wraps the

minimizefunction itself, allowing PyTorch or Tensorflow objectives to be passed directly to a function with the same interface asscipy.optimize.minimize.

rfeinman has implemented some of the algorithms available in scipy.optimize

in a repository with the same name as this repository. That

implementation is much more efficient and avoids switching between

32 and 64 bit floats between Numpy and PyTorch.

That repository also contains a wrapper around scipy.optimize.minimize.

To evaluate the Hessian in PyTorch,

torch.autograd.functional.hessian takes two arguments:

func: function that returns a scalarinputs: variables to take the derivative wrt

In most PyTorch code, inputs is a list of tensors embedded as parameters

in the Modules that make up the model. They can't be passed as inputs

because we typically don't have a func that will take the parameters as

input, build a network from these parameters and then produce a scalar

output.

From a discussion on the PyTorch forum the only way to calculate

the gradient with respect to the parameters would be to monkey patch

inputs into the model and then calculate the loss. I wrote a recursive

monkey patch that operates on a deepcopy of the original

model. This involves copying everything in the model so it's not very

efficient.

The function passed to scipy.optimize.minimize as hess does the

following:

copy.deepcopythe entiremodelModule- Input

xis a Numpy array so cast it to tensor float32 andrequire_grad - Define a function

fthat unpacks this 1D Numpy array into parameter tensors- Recursively navigate the module object

- Deleting all existing parameters

- Replacing them with unpacked parameters from step 2

- Calculate the loss using the static method stored in the

closureobject

- Recursively navigate the module object

- Pass

ftotorch.autograd.functional.hessianandxthen cast the result back into a Numpy array

If you use this in your work, please cite this repository using the following Bibtex entry, along with Numpy, Scipy and PyTorch.

@misc{gray2021minimize,

author = {Gray, Gavia},

title = {PyTorch Minimize},

year = {2021},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/gngdb/pytorch-minimize}}

}

This package was created with Cookiecutter and the

audreyr/cookiecutter-pypackage project template.