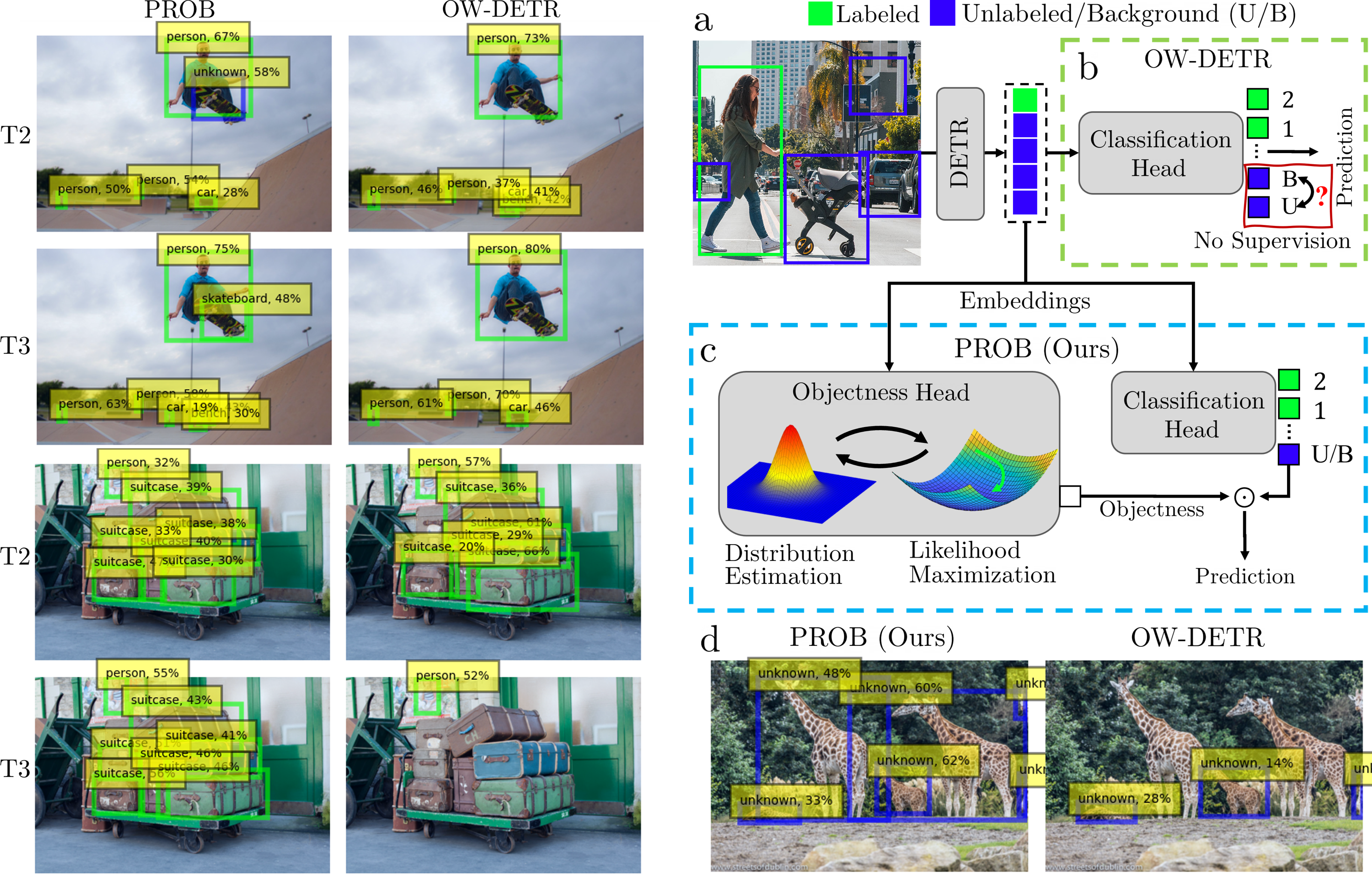

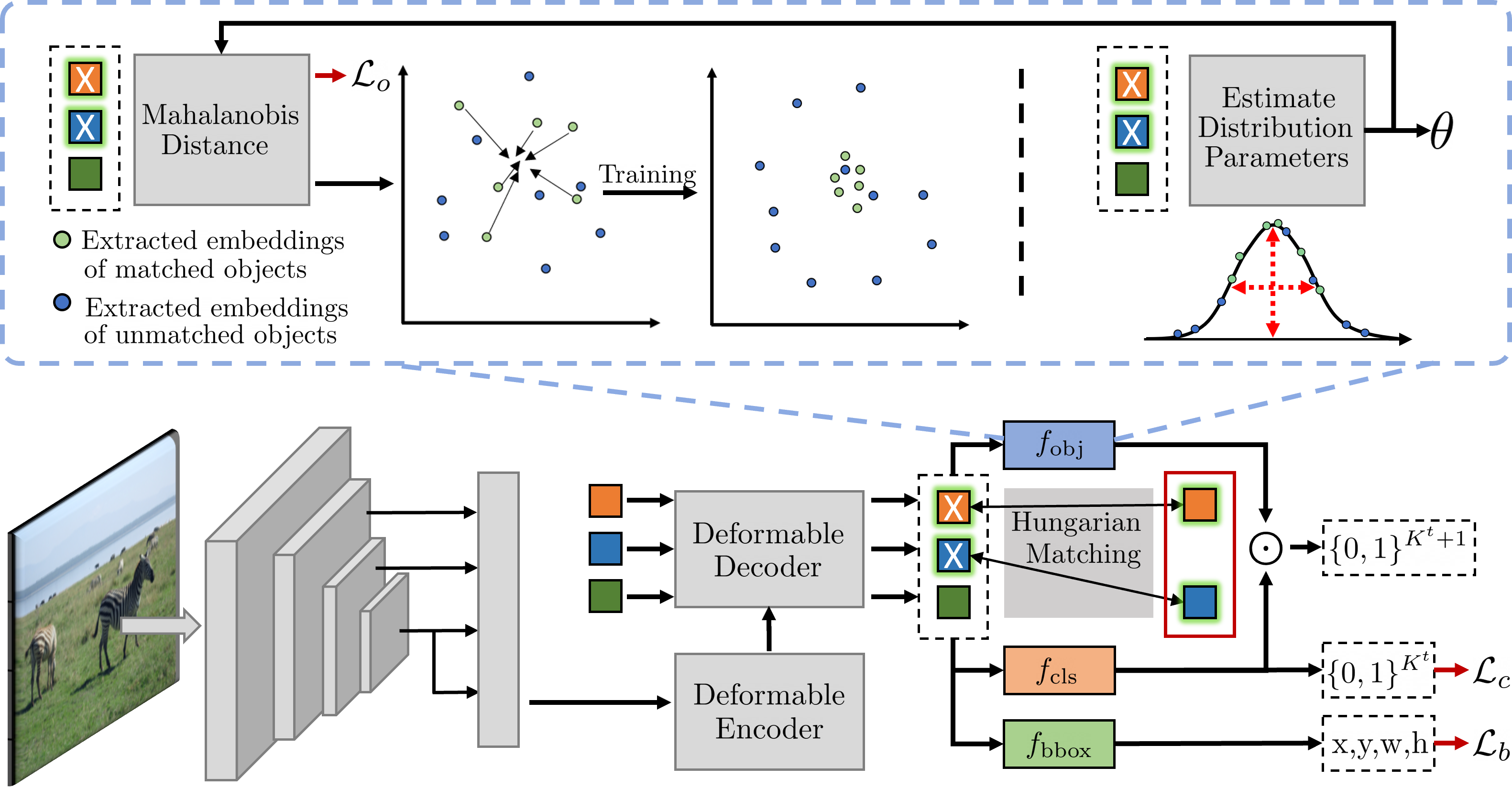

Open World Object Detection (OWOD) is a new and challenging computer vision task that bridges the gap between classic object detection (OD) benchmarks and object detection in the real world. In addition to detecting and classifying seen/labeled objects, OWOD algorithms are expected to detect novel/unknown objects, which can then be classified and incrementally learned. In standard OD, object proposals that do not overlap with a labeled object are automatically classified as background. Therefore, simply applying OD methods to OWOD fails, as unknown objects would be predicted as background. The challenge of detecting unknown objects stems from the lack of supervision for distinguishing unknown objects from background object proposals. Previous OWOD methods have attempted to overcome this issue by generating supervision through pseudo-labeling; however, unknown object detection performance has remained low. Probabilistic/generative models may provide a solution to this challenge. Herein, we introduce a novel probabilistic framework for objectness estimation, where we alternate between probability distribution estimation and objectness likelihood maximization of known objects in the embedded feature space, ultimately allowing us to estimate the objectness probability of different proposals. The resulting Probabilistic Objectness transformer-based open-world detector, PROB, integrates our framework into traditional object detection models, adapting them for the open-world setting. Comprehensive experiments on OWOD benchmarks show that PROB outperforms all existing OWOD methods in both unknown object detection (~2x unknown recall) and known object detection (~10% mAP).

PROB adapts the Deformable DETR model by adding the proposed 'probabilistic objectness' head. In training, we alternate between distribution estimation (top right) and objectness likelihood maximization of matched ground-truth objects (top left). For inference, the objectness probability multiplies the classification probabilities. For more details, see the manuscript.
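The inference rule described above (objectness probability multiplying the classification probabilities) can be illustrated with a minimal sketch. The function name `combine_scores` and the plain-list data layout are hypothetical choices made for illustration, not the repository's actual code, which operates on model output tensors.

```python
def combine_scores(objectness, class_probs):
    """Scale each query's per-class probabilities by that query's objectness probability.

    objectness:  one probability per query (hypothetical layout).
    class_probs: one list of per-class probabilities per query.
    """
    if len(objectness) != len(class_probs):
        raise ValueError("need exactly one objectness value per query")
    return [[obj * p for p in probs] for obj, probs in zip(objectness, class_probs)]


# Two hypothetical queries with identical class probabilities over three known classes.
objectness = [0.9, 0.1]
class_probs = [[0.8, 0.1, 0.1], [0.8, 0.1, 0.1]]
scores = combine_scores(objectness, class_probs)
```

Both queries favor the first class, but the query with low objectness ends up with a much lower final score, so it is less likely to survive a score threshold.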

| Method | Task 1 U-Recall | Task 1 mAP | Task 2 U-Recall | Task 2 mAP | Task 3 U-Recall | Task 3 mAP | Task 4 mAP |
|---|---|---|---|---|---|---|---|
| OW-DETR | 7.5 | 59.2 | 6.2 | 42.9 | 5.7 | 30.8 | 27.8 |
| PROB | 19.4 | 59.5 | 17.4 | 44.0 | 19.6 | 36.0 | 31.5 |
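To make the comparison in the table easier to read, the following sketch computes the U-Recall ratio and the absolute mAP difference between PROB and OW-DETR for each task. The numbers are copied from the table; the dictionary layout and the `compare` helper are illustrative only.

```python
# (U-Recall, mAP) per task, copied from the table; Task 4 reports mAP only.
ow_detr = {"Task1": (7.5, 59.2), "Task2": (6.2, 42.9), "Task3": (5.7, 30.8), "Task4": (None, 27.8)}
prob = {"Task1": (19.4, 59.5), "Task2": (17.4, 44.0), "Task3": (19.6, 36.0), "Task4": (None, 31.5)}


def compare(baseline, ours):
    """Return {task: (U-Recall ratio or None, absolute mAP gain)}."""
    rows = {}
    for task, (base_ur, base_map) in baseline.items():
        our_ur, our_map = ours[task]
        ratio = None if base_ur is None else round(our_ur / base_ur, 2)
        rows[task] = (ratio, round(our_map - base_map, 1))
    return rows


summary = compare(ow_detr, prob)
for task, (ratio, gain) in summary.items():
    ratio_text = "n/a" if ratio is None else f"{ratio}x"
    print(f"{task}: U-Recall ratio {ratio_text}, mAP gain {gain:+.1f}")
```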

We have trained and tested our models on Ubuntu 16.04, CUDA 11.1/11.3, GCC 5.4.0, and Python 3.10.4.

conda create --name prob python==3.10.4

conda activate prob

pip install -r requirements.txt

pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu113

Download the self-supervised backbone from here and add it to the models folder.

cd ./models/ops

sh ./make.sh

# unit test (should see all checking is True)

python test.py

PROB/
└── data/
    └── OWOD/
        ├── JPEGImages
        ├── Annotations
        └── ImageSets
            ├── OWDETR
            ├── TOWOD
            └── VOC2007

The splits are present inside the data/OWOD/ImageSets/ folder.

- Download the COCO Images and Annotations from coco dataset into the data/ directory.

- Unzip train2017 and val2017 folder. The current directory structure should look like:

PROB/
└── data/
    └── coco/
        ├── annotations/
        ├── train2017/
        └── val2017/

- Move all images from train2017/ and val2017/ to the JPEGImages folder.

- Use the code coco2voc.py for converting json annotations to xml files.

- Download the PASCAL VOC 2007 & 2012 Images and Annotations from pascal dataset into the data/ directory.

- Untar the trainval 2007 and 2012 and test 2007 folders.

- Move all the images to the JPEGImages folder and annotations to the Annotations folder.
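The image-moving steps above can be scripted. The following is a minimal sketch, assuming the images are .jpg files directly inside each source folder; the helper name `collect_images` is hypothetical and is not part of this repository.

```python
import shutil
from pathlib import Path


def collect_images(source_dirs, dest_dir, suffix=".jpg"):
    """Move every file ending in `suffix` from each source directory into dest_dir.

    Returns the number of files moved. Files whose name already exists in
    dest_dir are skipped rather than overwritten.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    moved = 0
    for source in source_dirs:
        for image in sorted(Path(source).glob(f"*{suffix}")):
            target = dest / image.name
            if target.exists():
                continue
            shutil.move(str(image), str(target))
            moved += 1
    return moved
```

With the directory trees shown above, a call might look like `collect_images(["data/coco/train2017", "data/coco/val2017"], "data/OWOD/JPEGImages")`; adjust the paths to your checkout.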

Currently, we follow the VOC format for data loading and evaluation.
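To illustrate what converting COCO json annotations to VOC-style xml involves, here is a minimal sketch. It is not the repository's coco2voc.py: the function names are hypothetical, and only a few fields (filename, size, object name, bounding box) are written, whereas real VOC files carry more. The key step is the box conversion from COCO's [x, y, width, height] to VOC's xmin/ymin/xmax/ymax corners.

```python
import xml.etree.ElementTree as ET


def coco_box_to_voc(bbox):
    """Convert a COCO [x, y, width, height] box to (xmin, ymin, xmax, ymax) integers."""
    x, y, w, h = bbox
    return int(round(x)), int(round(y)), int(round(x + w)), int(round(y + h))


def build_voc_annotation(filename, width, height, objects):
    """Build a minimal VOC-style <annotation> element.

    `objects` is a list of (class_name, coco_bbox) pairs.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    for name, bbox in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        box = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), coco_box_to_voc(bbox)):
            ET.SubElement(box, tag).text = str(value)
    return root


# Hypothetical example image with a single object.
annotation = build_voc_annotation("000001.jpg", 640, 480, [("dog", [10.0, 20.0, 100.0, 50.0])])
xml_text = ET.tostring(annotation, encoding="unicode")
```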

To train PROB on a single node with 4 GPUs, run

bash ./run.sh

Note: you may need to grant execute permission to the .sh files under the 'configs' and 'tools' directories by running chmod +x *.sh in each directory.

By editing the run.sh file, you can choose which of the configurations defined in the configs directory to run:

- EVAL_M_OWOD_BENCHMARK.sh - evaluation of tasks 1-4 on the MOWOD Benchmark.

- EVAL_S_OWOD_BENCHMARK.sh - evaluation of tasks 1-4 on the SOWOD Benchmark.

- M_OWOD_BENCHMARK.sh - training for tasks 1-4 on the MOWOD Benchmark.

- M_OWOD_BENCHMARK_RANDOM_IL.sh - training for tasks 1-4 on the MOWOD Benchmark with random exemplar selection.

- S_OWOD_BENCHMARK.sh - training for tasks 1-4 on the SOWOD Benchmark.

To train PROB on a Slurm cluster having 2 nodes with 8 GPUs each (not tested), run

bash run_slurm.sh

Note: you may need to grant execute permission to the .sh files under the 'configs' and 'tools' directories by running chmod +x *.sh in each directory.

To reproduce any of the aforementioned results, please download our pretrained weights and place them in the 'exps' directory. Run the run_eval.sh file to utilize multiple GPUs.

Note: you may need to grant execute permission to the .sh files under the 'configs' and 'tools' directories by running chmod +x *.sh in each directory.

PROB/
└── exps/
    ├── MOWODB/
    |   └── PROB/ (t1.ph - t4.ph)
    └── SOWODB/
        └── PROB/ (t1.ph - t4.ph)
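Before running evaluation, it can help to confirm that the weights are where the tree above expects them. The following sketch lists any missing files; the helper name `missing_checkpoints` is hypothetical, and the file names (t1 to t4 with the .ph extension) are taken as written in the tree, so change `ext` if your downloaded files are named differently.

```python
from pathlib import Path


def missing_checkpoints(root, benchmarks=("MOWODB", "SOWODB"), tasks=range(1, 5), ext=".ph"):
    """Return the expected checkpoint paths under <root>/exps that are not present."""
    missing = []
    for bench in benchmarks:
        for task in tasks:
            path = Path(root) / "exps" / bench / "PROB" / f"t{task}{ext}"
            if not path.is_file():
                missing.append(path)
    return missing
```

Calling `missing_checkpoints(".")` from the PROB/ directory returns an empty list when all eight files are in place.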

Note: For more training and evaluation details, please check the Deformable DETR repository.

If you use PROB, please consider citing:

@misc{zohar2022prob,
  author = {Zohar, Orr and Wang, Kuan-Chieh and Yeung, Serena},
  title = {PROB: Probabilistic Objectness for Open World Object Detection},
  publisher = {arXiv},
  year = {2022}
}

Should you have any questions, please contact 📧 orrzohar@stanford.edu

Acknowledgments:

PROB builds on the code bases of previous works such as OW-DETR, Deformable DETR, Detreg, and OWOD. If you found PROB useful, please consider citing these works as well.