This program downloads tweets from the Twitter API v2 using an Academic Research developer account, and stores all the information the API returns. Note that the API is still evolving, so this code may stop working in the future.

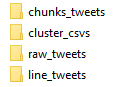

There are four folders to store downloaded tweets:

-

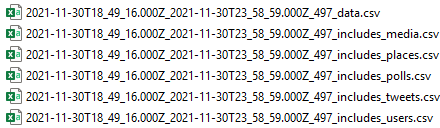

raw_tweets: raw data from Twitter, including five parts of each request:data,media,places,users, andpolls. Each part is stored as a individual .CSV file. Strings in these .CSV files are cleaned by removing newlines, tabs, and commas (,is replaced by;). Please refer to Twitter API Documentation.file name:oldest posted timelastest posted timetweets_count_part. For example: -

line_tweets: Five parts are merged together, each row in this file contains all columns returned by the API. The result of each request is a single file. There are 100 lines (withcontext_annotation) or 500 lines (withoutcontext_annotation) per file.context_annotationis like a topic assigned to a tweet.About

context_annotation:Context annotations: Derived from the analysis of a Tweet’s text and will include a domain and entity pairing which can be used to discover Tweets on topics that may have been previously difficult to surface. At present, we’re using a list of 50+ domains to categorize Tweets.

-

chunks_tweets: Mergedline_tweetsfor better managements of Hadoop systems. Each merged .CSV files have about 50,000 - 500,000 tweets. -

cluster_csvs: Convertedchunks_tweets. Some fields are expanded for our Hadoop systems.

-
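To make the `raw_tweets` cleaning rule and the `line_tweets` merge more concrete, here is a minimal stdlib sketch. The function names `clean_text` and `merge_tweets_with_users` are hypothetical, not taken from `download_tweet_APIv2.py`. It assumes each tweet in `data` carries an `author_id` that matches a user's `id` in `users` (as in the v2 `includes`), and, unlike the description above, it replaces newlines and tabs with a space rather than deleting them, so that adjacent words stay separated.

```python
def clean_text(value):
    """Make a string safe for a plain comma-separated file.

    Assumption: newlines and tabs become a space (the script may delete them
    outright); commas become semicolons, as described for raw_tweets.
    """
    if not isinstance(value, str):
        return value  # leave numbers, None, etc. untouched
    for ch in ("\r\n", "\n", "\r", "\t"):
        value = value.replace(ch, " ")
    return value.replace(",", ";")


def merge_tweets_with_users(tweets, users):
    """Hypothetical join of the `data` and `users` parts into one row per tweet.

    Each tweet is matched to its author via tweet["author_id"] == user["id"];
    user fields are prefixed with "user_" to avoid column-name clashes.
    """
    users_by_id = {u["id"]: u for u in users}
    rows = []
    for tweet in tweets:
        row = {k: clean_text(v) for k, v in tweet.items()}
        author = users_by_id.get(tweet.get("author_id"), {})
        for k, v in author.items():
            row["user_" + k] = clean_text(v)
        rows.append(row)
    return rows


if __name__ == "__main__":
    tweets = [{"id": "1", "author_id": "42", "text": "Smoke,\nhaze\ttoday"}]
    users = [{"id": "42", "username": "example_user"}]
    print(merge_tweets_with_users(tweets, users)[0])
    # {'id': '1', 'author_id': '42', 'text': 'Smoke; haze today', 'user_id': '42', 'user_username': 'example_user'}
```

The same pattern would extend to `media`, `places`, and `polls` by joining on their respective keys.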

Prerequisites:

- A developer account. Put your keys in `tweet_api_keys.txt` (place this file in the same folder as `download_tweet_APIv2.py`) in this format:
  - `Consumer API Key: copy_your_key_here`
  - `Consumer API Secret Key: copy_your_key_here`
  - `Bearer Token: copy_your_key_here`
  - `Access Token: copy_your_key_here`
  - `Access Token Secret: copy_your_key_here`
- Packages: tqdm, requests, pandas, vaderSentiment, emoji
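One way to read `tweet_api_keys.txt` is sketched below, assuming the file holds one `Name: value` pair per line. The helpers `parse_api_keys` and `load_api_keys` are hypothetical illustrations, not the script's own loader. Only the bearer token is used in the example, since the v2 full-archive search endpoint accepts app-only (Bearer token) authentication.

```python
from pathlib import Path


def parse_api_keys(text):
    """Parse 'Name: value' lines into a dict (hypothetical helper).

    Splitting on the first colon only keeps any colons inside a value intact.
    Blank lines and lines without a colon are skipped.
    """
    keys = {}
    for line in text.splitlines():
        line = line.strip()
        if ":" not in line:
            continue
        name, value = line.split(":", 1)
        keys[name.strip()] = value.strip()
    return keys


def load_api_keys(path="tweet_api_keys.txt"):
    """Read the key file that sits next to download_tweet_APIv2.py."""
    return parse_api_keys(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    sample = "Consumer API Key: abc\nBearer Token: AAAA\n"
    keys = parse_api_keys(sample)
    # Authorization header for app-only (Bearer token) requests.
    auth_header = {"Authorization": f"Bearer {keys['Bearer Token']}"}
    print(auth_header)  # {'Authorization': 'Bearer AAAA'}
```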

Using `download_tweet_APIv2.py` to download tweets:

- Edit the parameters of the function `execute_download`, such as `saved_path`, `query`, `start_time`, `end_time`, and `chunk_size`.

- To build a query, carefully read Building a query or How to write search queries, and understand the meaning of a space (AND), `OR`, `""` (exact phrase), and `()` (grouping). AND is evaluated before OR, so wrap `OR` terms in parentheses before combining them with other operators. Again, carefully check the downloaded tweets to verify your queries. Some examples:
  - `(vaccin OR vaccination OR vaccine OR vaccinate) place_country:AU` collects tweets tagged with a place in Australia that contain the words `vaccin`, `vaccination`, `vaccine`, or `vaccinate`.
  - `has:geo`, `from:cnn OR from:FoxNews`, `(wildfire OR earthquake)`. Note that `OR` must be capitalized.

- Pay attention to the tweet count returned at the beginning. If it is not what you expect, stop the program and refine the query.
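A small sketch for preparing the `query`, `start_time`, and `end_time` parameters. The helpers `build_query` and `to_api_time` are hypothetical, not part of the script. `build_query` parenthesizes the `OR` group because the search API applies AND (a space) before OR; without the parentheses, a trailing operator such as `place_country:AU` would attach only to the last term. `to_api_time` assumes the `YYYY-MM-DDTHH:MM:SSZ` UTC format used in the example call.

```python
from datetime import datetime, timezone


def build_query(terms, *operators):
    """Hypothetical helper: OR the terms together, then AND on extra operators.

    The OR group is parenthesized so that operators apply to every term.
    """
    group = "(" + " OR ".join(terms) + ")"
    return " ".join([group, *operators])


def to_api_time(dt):
    """Format a datetime as a 'YYYY-MM-DDTHH:MM:SSZ' UTC string."""
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive datetimes are already UTC
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


if __name__ == "__main__":
    query = build_query(["vaccin", "vaccination", "vaccine", "vaccinate"], "place_country:AU")
    print(query)  # (vaccin OR vaccination OR vaccine OR vaccinate) place_country:AU
    print(to_api_time(datetime(2023, 5, 1, 1, 0)))  # 2023-05-01T01:00:00Z
```

Exact phrases can be passed as terms with their quotes included, for example `'"wildfire smoke"'`.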

A usage example in `download_tweet_APIv2.py`:

```python
if __name__ == '__main__':
    query = '(Canada wildfire) OR (wildfire smoke) OR (canada smoke) OR (canada air)'
    # Raw string so the Windows backslashes are not read as escape sequences
    saved_path = r'H:\Research\Canada_widefire'
    start_time = "2023-05-01T01:00:00Z"
    end_time = "2023-06-13T01:00:00Z"
    execute_download(query=query,
                     saved_path=saved_path,
                     start_time=start_time,
                     end_time=end_time,
                     )
```

According to the official documentation, 300 requests are allowed per 15 minutes, and each request returns up to 500 tweets (or 100 tweets when `context_annotations` is requested). So the highest possible speed is 300 × 4 × 500 = 600,000 tweets per hour. However, due to the heavy conversion computations, the actual speed is about 100 requests per 15 minutes, which is 200,000 tweets per hour.

Updated: after adding sub-processes to merge responses and to convert the tweet chunks, the download speed is now about 500,000 tweets per hour.
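The throughput figures above are simple arithmetic on the rate limit. This sketch reproduces them, assuming every request returns a full page (500 tweets, or 100 with `context_annotations`). It also shows that about 500,000 tweets per hour corresponds to roughly 250 full requests per 15-minute window. The function names are illustrative only.

```python
def tweets_per_hour(requests_per_window, tweets_per_request, window_minutes=15):
    """Tweets per hour if every request in every window returns a full page."""
    windows_per_hour = 60 / window_minutes
    return round(requests_per_window * windows_per_hour * tweets_per_request)


def requests_per_window_for(target_per_hour, tweets_per_request, window_minutes=15):
    """Requests needed per window to reach a target hourly tweet count."""
    windows_per_hour = 60 / window_minutes
    return target_per_hour / tweets_per_request / windows_per_hour


if __name__ == "__main__":
    print(tweets_per_hour(300, 500))  # 600000: the documented ceiling
    print(tweets_per_hour(100, 500))  # 200000: the earlier observed speed
    print(tweets_per_hour(300, 100))  # 120000: ceiling when context_annotations is requested
    print(requests_per_window_for(500_000, 500))  # 250.0
```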

If you do not like this repository, twarc is highly recommended. The purpose of my repository is to collect all the data returned by the API, which twarc does not do, at least not with its plug-in twarc-csv.

- Adaptive sleeping when hitting the rate limits.

- Code refinement.

- Result merging and compressing.
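A possible shape for the adaptive sleeping item, sketched under assumptions. It reads the `x-rate-limit-remaining` and `x-rate-limit-reset` response headers returned by the Twitter API v2 (the reset value is a Unix timestamp in seconds) and treats HTTP 429 as a rate-limit hit. The function name `seconds_to_sleep`, the 1-second margin, and the 60-second fallback are hypothetical choices, not the repository's code.

```python
import time


def seconds_to_sleep(headers, status_code=200, now=None, margin=1.0, fallback=60.0):
    """Seconds to wait before the next request (0.0 if no wait is needed).

    headers: a mapping such as requests' response.headers (case-insensitive
    there; lowercase keys are assumed for a plain dict).
    """
    now = time.time() if now is None else now
    remaining = headers.get("x-rate-limit-remaining")
    limited = status_code == 429 or (remaining is not None and int(remaining) == 0)
    if not limited:
        return 0.0
    reset = headers.get("x-rate-limit-reset")
    if reset is None:
        return fallback  # no reset time reported: wait a fixed, assumed interval
    # Sleep until the window resets, plus a small safety margin.
    return max(0.0, float(reset) - now) + margin


# Hypothetical use inside a download loop:
#     response = requests.get(url, headers=auth_header, params=params)
#     wait = seconds_to_sleep(response.headers, response.status_code)
#     if wait:
#         time.sleep(wait)
```

Sleeping only until the reported reset time, rather than a fixed 15 minutes, avoids idling once the window has already partly elapsed.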