ConfMix: Unsupervised Domain Adaptation for Object Detection via Confidence-based Mixing

Giulio Mattolin, Luca Zanella, Yiming Wang, Elisa Ricci

WACV 2023

Paper: ArXiv

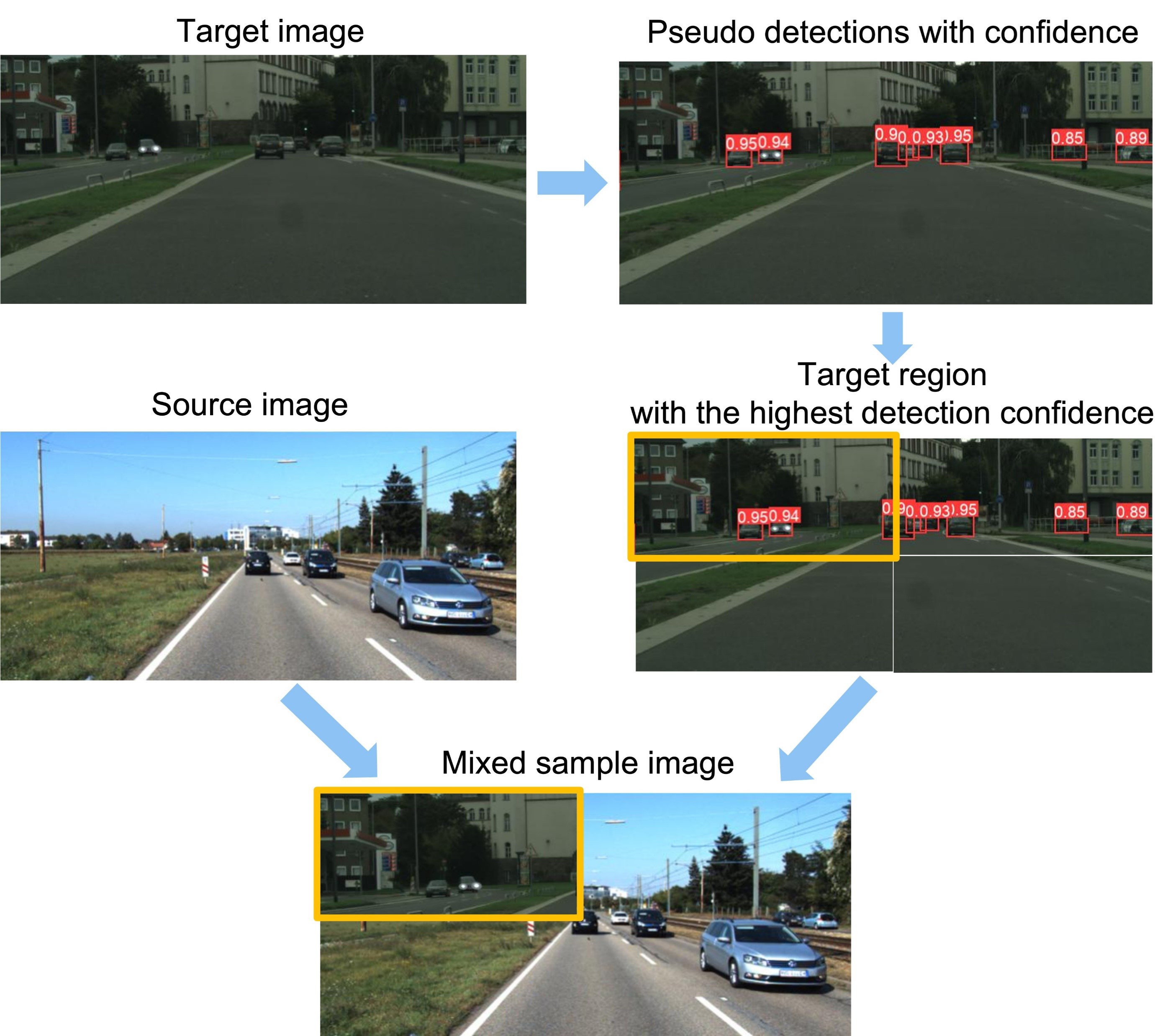

Abstract: Unsupervised Domain Adaptation (UDA) for object detection aims to adapt a model trained on a source domain to detect instances from a new target domain for which annotations are not available. Different from traditional approaches, we propose ConfMix, the first method that introduces a sample mixing strategy based on region-level detection confidence for adaptive object detector learning. We mix the local region of the target sample that corresponds to the most confident pseudo detections with a source image, and apply an additional consistency loss term to gradually adapt towards the target data distribution. In order to robustly define a confidence score for a region, we exploit the confidence score per pseudo detection that accounts for both the detector-dependent confidence and the bounding box uncertainty. Moreover, we propose a novel pseudo labelling scheme that progressively filters the pseudo target detections using the confidence metric that varies from a loose to strict manner along the training. We perform extensive experiments with three datasets, achieving state-of-the-art performance in two of them and approaching the supervised target model performance in the other.
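As a rough illustration of the confidence-based mixing described in the abstract, the sketch below picks the target-image region whose pseudo detections are most confident and pastes it onto a source image. It is not the repository's implementation: the split into four quadrants, the use of the mean score as the region confidence, the assignment of boxes by their centre, and the names `most_confident_region` and `mix_images` are all assumptions made for the example. Images are plain nested lists to keep the sketch dependency-free.

```python
def most_confident_region(boxes, scores, height, width):
    """Return the (row, col) quadrant with the highest mean detection score.

    boxes:  iterable of (x1, y1, x2, y2) pixel coordinates of pseudo detections.
    scores: per-detection confidence values, same length as boxes.
    Assumption: a box belongs to the quadrant that contains its centre.
    """
    sums, counts = {}, {}
    for (x1, y1, x2, y2), score in zip(boxes, scores):
        row = int((y1 + y2) / 2 >= height / 2)
        col = int((x1 + x2) / 2 >= width / 2)
        sums[(row, col)] = sums.get((row, col), 0.0) + score
        counts[(row, col)] = counts.get((row, col), 0) + 1
    if not sums:
        return None  # no pseudo detections, so no region can be ranked
    means = {key: sums[key] / counts[key] for key in sums}
    return max(means, key=means.get)


def mix_images(source, target, region):
    """Paste the selected quadrant of `target` onto a copy of `source`.

    Both images are lists of rows with identical height and width.
    """
    height, width = len(source), len(source[0])
    row, col = region
    y0, y1 = row * height // 2, (row + 1) * height // 2
    x0, x1 = col * width // 2, (col + 1) * width // 2
    mixed = [list(r) for r in source]
    for y in range(y0, y1):
        mixed[y][x0:x1] = target[y][x0:x1]
    return mixed


# Toy example: a 4x4 "source" of zeros and a 4x4 "target" of ones.
source = [[0] * 4 for _ in range(4)]
target = [[1] * 4 for _ in range(4)]
boxes = [(0, 0, 1, 1), (2, 2, 4, 4)]
scores = [0.3, 0.9]

region = most_confident_region(boxes, scores, height=4, width=4)
mixed = mix_images(source, target, region)
```

In the toy example the bottom-right quadrant holds the more confident detection, so only that quadrant of the mixed image comes from the target.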

We recommend the use of a Linux machine equipped with CUDA compatible GPUs. The execution environment can be installed through Conda or Docker.

Clone repo:

git clone https://github.com/giuliomattolin/ConfMix # clone
cd ConfMix

Install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. The environment can be installed and activated with:

conda create --name ConfMix python=3.7
conda activate ConfMix
pip install -r requirements.txt # install

Use the Dockerfile to build the docker image:

docker build -t confmix:1.0 -f utils/docker/Dockerfile .

Run the docker image, mounting the root directory to /usr/src/app in the docker container:

docker run -it --gpus all --ipc=host -v /path/to/directory/ConfMix:/usr/src/app confmix:1.0 /bin/bash

We construct the training and testing sets for the three benchmark settings by performing the following steps:

- Cityscapes -> Foggy Cityscapes

  - Download Cityscapes and Foggy Cityscapes dataset from the following link. Particularly, we use leftImg8bit_trainvaltest.zip for Cityscapes and leftImg8bit_trainvaltest_foggy.zip for FoggyCityscapes, considering only images with $\beta$ = 0.02.
  - Download and extract the converted annotations from the following links: Cityscapes (YOLO format) and Foggy Cityscapes (YOLO format), then move them to the Cityscapes/labels/train and FoggyCityscapes/labels/train, FoggyCityscapes/labels/val directories.
  - Extract the training set from leftImg8bit_trainvaltest.zip, then move the folder leftImg8bit/train/ to the Cityscapes/images/train directory.
  - Extract the training and validation sets from leftImg8bit_trainvaltest_foggy.zip, then move the folders leftImg8bit_foggy/train/ and leftImg8bit_foggy/val/ to the FoggyCityscapes/images/train and FoggyCityscapes/images/val directories, respectively.

- Sim10k -> Cityscapes (car category only)

  - Download Sim10k dataset and Cityscapes dataset from the following links: Sim10k and Cityscapes. Particularly, we use repro_10k_images.tgz and repro_10k_annotations.tgz for Sim10k and leftImg8bit_trainvaltest.zip for Cityscapes.
  - Download and extract the converted annotations from the following links: Sim10k (YOLO format) and Cityscapes (YOLO format), then move them to the Sim10K/labels/train and Cityscapes/labels/train, Cityscapes/labels/val directories.
  - Extract the training set from repro_10k_images.tgz and repro_10k_annotations.tgz, then move all images under VOC2012/JPEGImages/ to the Sim10k/images/train directory.
  - Extract the training and validation sets from leftImg8bit_trainvaltest.zip, then move the folders leftImg8bit/train/ and leftImg8bit/val/ to the Cityscapes/images/train and Cityscapes/images/val directories, respectively.

- KITTI -> Cityscapes (car category only)

  - Download KITTI dataset and Cityscapes dataset from the following links: KITTI and Cityscapes. Particularly, we use data_object_image_2.zip for KITTI and leftImg8bit_trainvaltest.zip for Cityscapes.
  - Download and extract the converted annotations from the following links: KITTI (YOLO format) and Cityscapes (YOLO format), then move them to the KITTI/labels/train and Cityscapes/labels/train, Cityscapes/labels/val directories.
  - Extract the training set from data_object_image_2.zip, then move all images under training/image_2/ to the KITTI/images/train directory.
  - Extract the training and validation sets from leftImg8bit_trainvaltest.zip, then move the folders leftImg8bit/train/ and leftImg8bit/val/ to the Cityscapes/images/train and Cityscapes/images/val directories, respectively.

Pretrained models are available from Google Drive.

To reproduce the experimental results, we recommend training the model with the following steps.

Before training, please check data/Cityscapes2Foggy.yaml, data/Sim10K2Cityscapes.yaml, data/KITTI2Cityscapes.yaml, and enter the correct data paths.

The model is trained in 2 successive phases:

- Phase 1: Model pre-train

- Phase 2: Adaptive learning

The first phase of training pre-trains the model on the source domain. Training can be performed by running the command for the chosen benchmark:

Cityscapes -> Foggy Cityscapes:

python train.py \
  --name cityscapes \
  --batch 2 \
  --img 600 \
  --epochs 20 \
  --data data/Cityscapes2Foggy.yaml \
  --weights yolov5s.pt

Sim10k -> Cityscapes (car category only):

python train.py \
  --name sim10k \
  --batch 2 \
  --img 600 \
  --epochs 20 \
  --data data/Sim10K2Cityscapes.yaml \
  --weights yolov5s.pt

KITTI -> Cityscapes (car category only):

python train.py \
  --name kitti \
  --batch 2 \
  --img 600 \
  --epochs 20 \
  --data data/KITTI2Cityscapes.yaml \
  --weights yolov5s.pt

Qualitative results are saved under the runs/train/{name} directory, while checkpoints are saved under the runs/train/{name}/weights directory. Please note that in all our experiments we only consider the weights associated with the last training epoch, i.e. last.pt.

The second phase of training performs the adaptive learning, starting from the weights produced in the first phase. Training can be performed by running the command for the chosen benchmark:

Cityscapes -> Foggy Cityscapes:

python uda_train.py \
  --name cityscapes2foggy \
  --batch 2 \
  --img 600 \
  --epochs 50 \
  --data data/Cityscapes2Foggy.yaml \
  --weights runs/train/cityscapes/weights/last.pt

Sim10k -> Cityscapes (car category only):

python uda_train.py \
  --name sim10k2cityscapes \
  --batch 2 \
  --img 600 \
  --epochs 50 \
  --data data/Sim10K2Cityscapes.yaml \
  --weights runs/train/sim10k/weights/last.pt

KITTI -> Cityscapes (car category only):

python uda_train.py \
  --name kitti2cityscapes \
  --batch 2 \
  --img 600 \
  --epochs 50 \
  --data data/KITTI2Cityscapes.yaml \
  --weights runs/train/kitti/weights/last.pt

The trained models can be evaluated by running the following bash script:

Cityscapes -> Foggy Cityscapes:

python uda_val.py \
  --name cityscapes2foggy \
  --img 600 \
  --data data/Cityscapes2Foggy.yaml \
  --weights runs/train/cityscapes2foggy/weights/last.pt \
  --iou-thres 0.5

Sim10k -> Cityscapes (car category only):

python uda_val.py \
  --name sim10k2cityscapes \
  --img 600 \
  --data data/Sim10K2Cityscapes.yaml \
  --weights runs/train/sim10k2cityscapes/weights/last.pt \
  --iou-thres 0.5

KITTI -> Cityscapes (car category only):

python uda_val.py \
  --name kitti2cityscapes \
  --img 600 \
  --data data/KITTI2Cityscapes.yaml \
  --weights runs/train/kitti2cityscapes/weights/last.pt \
  --iou-thres 0.5

Please note that in all our experiments we only consider the weights associated with the last training epoch, i.e. last.pt.
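The three steps above (pre-training, adaptive learning, evaluation) are chained through the last.pt checkpoints: each step reads the weights written by the previous one. The sketch below makes that chaining explicit by assembling the three command lines for one benchmark. It only uses the scripts, flags, and values shown above; the helper name `phase_commands` is hypothetical, and the sketch prints the commands rather than running them.

```python
import shlex


def phase_commands(source_name, uda_name, data_yaml, img=600, batch=2):
    """Build the pre-train, adaptation, and evaluation commands for one benchmark.

    source_name: run name of phase 1 (e.g. "cityscapes").
    uda_name:    run name of phase 2 (e.g. "cityscapes2foggy").
    data_yaml:   dataset configuration file (e.g. "data/Cityscapes2Foggy.yaml").
    """
    # Each phase consumes the last.pt checkpoint written by the previous one.
    pretrain_weights = f"runs/train/{source_name}/weights/last.pt"
    adapted_weights = f"runs/train/{uda_name}/weights/last.pt"

    pretrain = [
        "python", "train.py", "--name", source_name,
        "--batch", str(batch), "--img", str(img), "--epochs", "20",
        "--data", data_yaml, "--weights", "yolov5s.pt",
    ]
    adapt = [
        "python", "uda_train.py", "--name", uda_name,
        "--batch", str(batch), "--img", str(img), "--epochs", "50",
        "--data", data_yaml, "--weights", pretrain_weights,
    ]
    evaluate = [
        "python", "uda_val.py", "--name", uda_name,
        "--img", str(img), "--data", data_yaml,
        "--weights", adapted_weights, "--iou-thres", "0.5",
    ]
    return [pretrain, adapt, evaluate]


commands = phase_commands(
    "cityscapes", "cityscapes2foggy", "data/Cityscapes2Foggy.yaml"
)
for command in commands:
    # Quote each argument so the printed line can be pasted into a shell.
    print(" ".join(shlex.quote(arg) for arg in command))
```

Each list can also be passed to `subprocess.run(command, check=True)` from the repository root to execute the phases in order.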

Please consider citing our paper in your publications if the project helps your research.

@inproceedings{mattolin2023confmix,
  title={ConfMix: Unsupervised Domain Adaptation for Object Detection via Confidence-based Mixing},
  author={Mattolin, Giulio and Zanella, Luca and Ricci, Elisa and Wang, Yiming},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={423--433},
  year={2023}
}