PyTorch implementation of the YOLO architecture presented in "You Only Look Once: Unified, Real-Time Object Detection" by Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi [1].

For the sake of convenience, PyTorch's pretrained ResNet50 architecture was used as the backbone for the model instead of Darknet. However, the detection layers at the end of the model exactly follow those described in the paper. The data was augmented by randomly scaling dimensions, shifting position, and adjusting hue/saturation values by up to 20% of their original values.

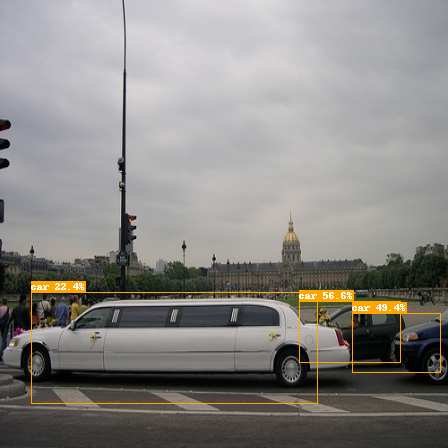

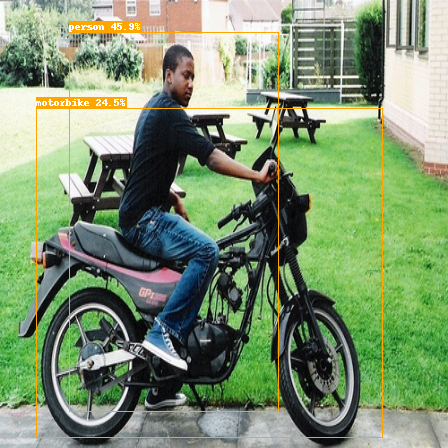

Overall, the bounding boxes look convincing, though it is interesting to note that YOLOv1 has trouble detecting tightly grouped objects as well as small, distant ones.

Additionally, the fully vectorized SumSquaredLoss function achieves roughly a 4x speedup in training time compared to using a for-loop to determine bounding box responsibility.

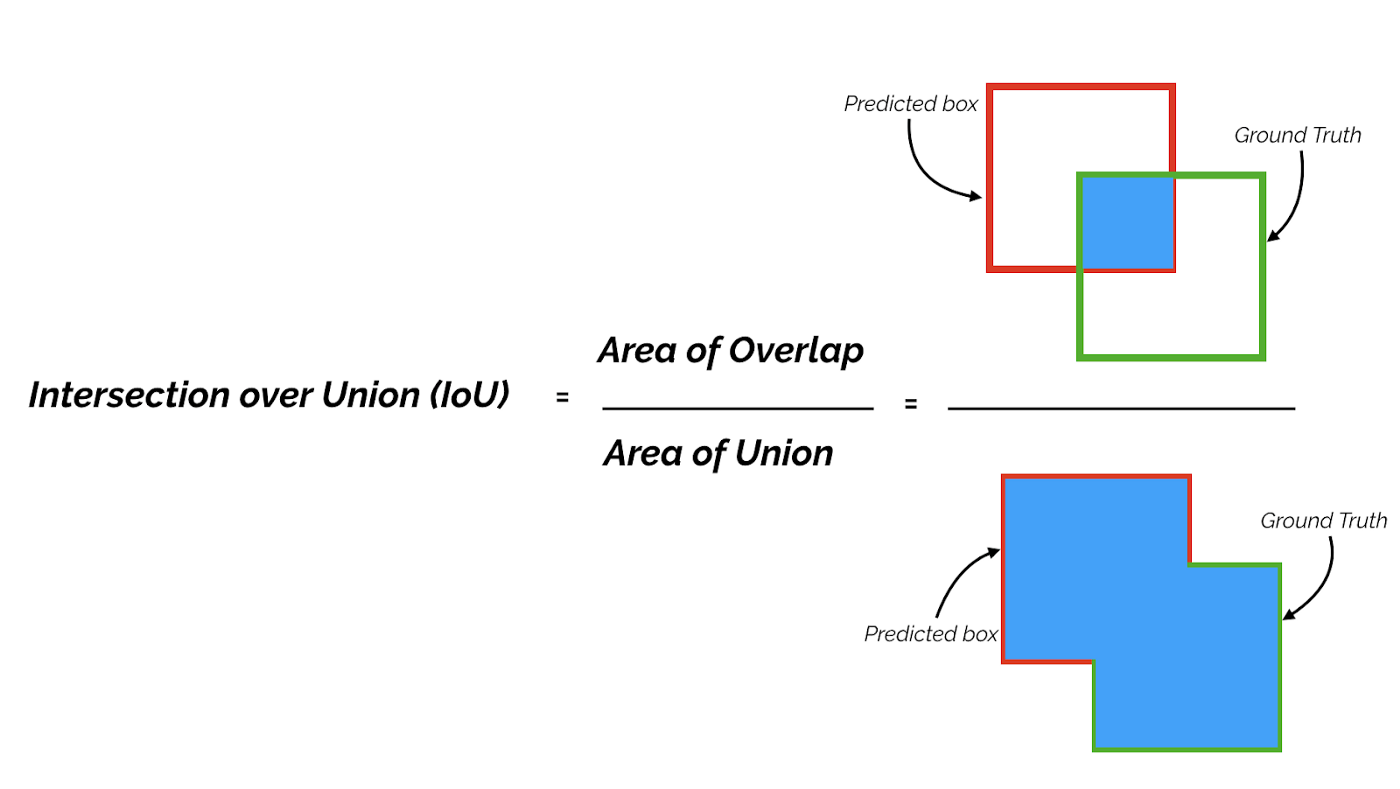

Intersection over union (IOU) is the area of intersection between the ground-truth and predicted bounding boxes, divided by the area of their union. The confidence score for each square in the grid is Pr(object in square) * IOU(truth, pred). If the IOU is greater than some threshold t, then the prediction is a True Positive (TP); otherwise, it is a False Positive (FP).
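As a rough illustration of the IOU check described above, here is a minimal plain-Python sketch. It is not the code used in this repository: the function names `iou` and `is_true_positive`, the corner-format boxes `(x_min, y_min, x_max, y_max)`, and the default threshold of 0.5 are all assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Assumption: each box is (x_min, y_min, x_max, y_max).
    """
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes get an intersection area of 0.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    # Guard against degenerate (zero-area) boxes.
    return intersection / union if union > 0 else 0.0


def is_true_positive(truth, pred, threshold=0.5):
    """A prediction counts as a TP only if its IOU is strictly greater than the threshold."""
    return iou(truth, pred) > threshold
```

For example, `iou((0, 0, 2, 2), (1, 1, 3, 3))` has an intersection of area 1 and a union of area 7, so a threshold of 0.5 would mark that prediction as a False Positive.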

Here's a great visual from [2]:

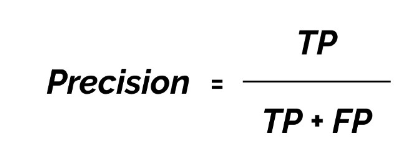

Precision measures how much you can trust a positive prediction from the model. Out of all positive predictions (TP + FP), it is the proportion that are correct.

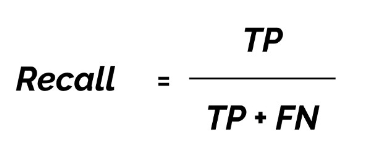

Recall measures how well the model does at finding all the positives. TP gives the number of correct finds, and FN gives the number of objects that were present but missed by the model. Together, TP + FN equals the total number of labeled objects, so recall is TP / (TP + FN).
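The two ratios discussed above, precision as TP / (TP + FP) and recall as TP / (TP + FN), can be sketched in a few lines of Python. The function names and the choice to return 0.0 when the denominator is zero are assumptions for illustration, not part of this repository.

```python
def precision(tp, fp):
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    total = tp + fp
    # Assumption: report 0.0 when the model made no positive predictions.
    return tp / total if total > 0 else 0.0


def recall(tp, fn):
    """Fraction of labeled objects that were found: TP / (TP + FN)."""
    total = tp + fn
    # Assumption: report 0.0 when there are no labeled objects.
    return tp / total if total > 0 else 0.0
```

With 8 true positives, 2 false positives, and 4 missed objects, `precision(8, 2)` is 0.8 while `recall(8, 4)` is about 0.67: most predictions can be trusted, but a third of the labeled objects are not found.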

[1] Joseph Redmon, Santosh Divvala, Ross Girshick, Ali Farhadi. You Only Look Once: Unified, Real-Time Object Detection. arXiv:1506.02640v5 [cs.CV] 9 May 2016

[2] Towards Data Science. mAP (mean Average Precision) might confuse you!