This toolkit improves the performance of HuggingFace transformer models on downstream NLP tasks by adapting the models to the target domain of those tasks (e.g. BERT -> LawBERT).

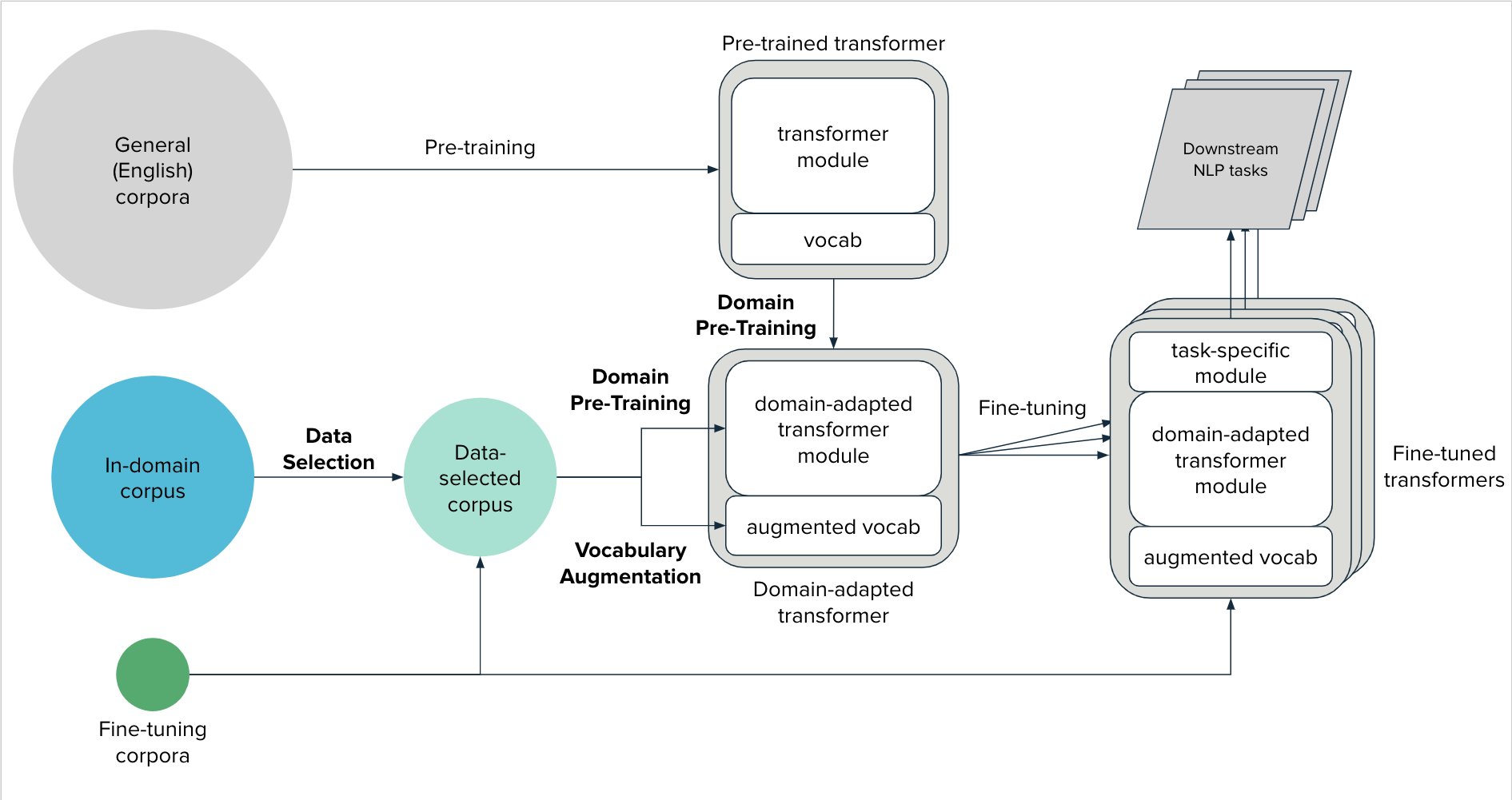

The overall Domain Adaptation framework can be broken down into three phases:

- Data Selection

  Select a relevant subset of documents from the in-domain corpus that is likely to be beneficial for domain pre-training (see below)

- Vocabulary Augmentation

  Extend the vocabulary of the transformer model with domain-specific terminology

- Domain Pre-Training

  Continue pre-training the transformer model on the in-domain corpus to learn the linguistic nuances of the target domain
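To make the Data Selection phase more concrete, here is a minimal, standard-library-only sketch. It is not the toolkit's implementation: the function names (`term_frequencies`, `cosine_similarity`, `select_documents`), the `keep` parameter, and the choice of cosine similarity against a small task corpus are all assumptions made for illustration. The idea is simply to rank in-domain documents by how closely they resemble the downstream task data and keep the top fraction.

```python
import math
import re
from collections import Counter
from typing import List


def term_frequencies(text: str) -> Counter:
    """Lowercase word counts; a stand-in for a real subword tokenizer."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_documents(in_domain_docs: List[str], task_docs: List[str], keep: float) -> List[str]:
    """Keep the `keep` fraction of in-domain documents most similar to the task corpus.

    Hypothetical helper: the similarity measure and ranking rule are assumptions.
    """
    task_profile = term_frequencies(" ".join(task_docs))
    ranked = sorted(
        in_domain_docs,
        key=lambda doc: cosine_similarity(term_frequencies(doc), task_profile),
        reverse=True,
    )
    n_keep = max(1, round(keep * len(ranked)))
    return ranked[:n_keep]


# Toy data: two legal documents and two unrelated ones.
in_domain_corpus = [
    "The court granted the motion to dismiss the appeal.",
    "Our recipe uses fresh basil and ripe tomatoes.",
    "The plaintiff filed an appeal with the district court.",
    "Football season tickets go on sale next week.",
]
task_corpus = ["The court denied the appeal filed by the plaintiff."]

selected = select_documents(in_domain_corpus, task_corpus, keep=0.5)
for doc in selected:
    print(doc)
```

With `keep=0.5`, the two legal sentences are retained and the unrelated ones are dropped, since only the legal sentences share vocabulary with the task corpus.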

After a model is domain-adapted, it can be fine-tuned on the downstream NLP task of choice, like any pre-trained transformer model.

This toolkit provides two classes, `DataSelector` and `VocabAugmentor`, to simplify the Data Selection and Vocabulary Augmentation steps respectively.
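As a rough illustration of what Vocabulary Augmentation involves, the sketch below finds frequent domain words that an existing vocabulary lacks. It uses only the standard library and does not reproduce `VocabAugmentor`: the helper `find_new_tokens`, its `target_vocab_size` parameter, and the whole-word frequency heuristic are assumptions chosen to keep the example small.

```python
import re
from collections import Counter
from typing import List, Set


def find_new_tokens(corpus: List[str], existing_vocab: Set[str], target_vocab_size: int) -> List[str]:
    """Return the most frequent corpus words missing from the vocabulary.

    Hypothetical helper: the number of new tokens is capped so that the
    extended vocabulary does not exceed `target_vocab_size`.
    """
    counts = Counter(
        word
        for doc in corpus
        for word in re.findall(r"[a-z]+", doc.lower())
        if word not in existing_vocab
    )
    n_new = max(0, target_vocab_size - len(existing_vocab))
    return [word for word, _ in counts.most_common(n_new)]


# Toy general-purpose vocabulary and a small legal corpus.
existing_vocab = {"the", "a", "of", "was", "for", "court", "filed", "to"}
legal_corpus = [
    "The plaintiff filed a writ of certiorari.",
    "The writ was denied; the plaintiff sought certiorari again.",
    "A tort claim was filed for the plaintiff.",
]

new_tokens = find_new_tokens(legal_corpus, existing_vocab, target_vocab_size=11)
extended_vocab = existing_vocab | set(new_tokens)
print(new_tokens)
```

With a real HuggingFace tokenizer and model, tokens found this way would typically be registered with `tokenizer.add_tokens(new_tokens)`, followed by `model.resize_token_embeddings(len(tokenizer))` so the embedding matrix matches the enlarged vocabulary.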

This package was developed on Python 3.6+ and can be installed using pip:

    pip install transformers-domain-adaptation

- Compatible with the HuggingFace ecosystem:
  - `transformers 4.x`
  - `tokenizers`
  - `datasets`

Please refer to our Colab guide!
