This experiment uses Wasserstein GANs (WGANs) onboard the European Space Agency's OPS-SAT-1 spacecraft to reconstruct corrupted pictures of Earth, showcasing the very first use of Generative AI in space.

The camera onboard the European Space Agency's OPS-SAT-1 spacecraft has been operating past its design life [1]. As the payload ages, there is an increasing risk of sensor degradation leading to corrupted images. This paper evaluates Generative AI models with Wasserstein GANs (WGANs)—an enhanced type of Generative Adversarial Networks—as a noise reduction solution to reconstruct noisy images directly onboard the spacecraft. Autoencoder neural networks are also trained and evaluated for comparative purposes given their common use in noise reduction.
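For readers unfamiliar with WGANs, the main change from a standard GAN is that the discriminator becomes a critic that outputs unbounded scores and is trained to widen the gap between its scores on real and generated images. The sketch below shows these standard WGAN loss terms in plain Python. The function names and example scores are hypothetical, this is not the training code used in the experiment, and it omits the Lipschitz constraint (weight clipping or a gradient penalty) that a real critic needs.

```python
from statistics import fmean


def critic_loss(real_scores, fake_scores):
    """Loss the critic minimizes: mean score on generated images minus mean score on real ones."""
    return fmean(fake_scores) - fmean(real_scores)


def generator_loss(fake_scores):
    """Loss the generator minimizes: it wants the critic to score its outputs highly."""
    return -fmean(fake_scores)


# Hypothetical critic scores for a batch of clean images ("real")
# and for the generator's denoised outputs ("fake").
real_scores = [0.9, 1.1, 1.0]
fake_scores = [-0.2, 0.1, 0.1]

print(critic_loss(real_scores, fake_scores))
print(generator_loss(fake_scores))
```

In a denoising setup like the one described here, the generator would presumably take a noisy image as input and the clean image would play the role of the real sample.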

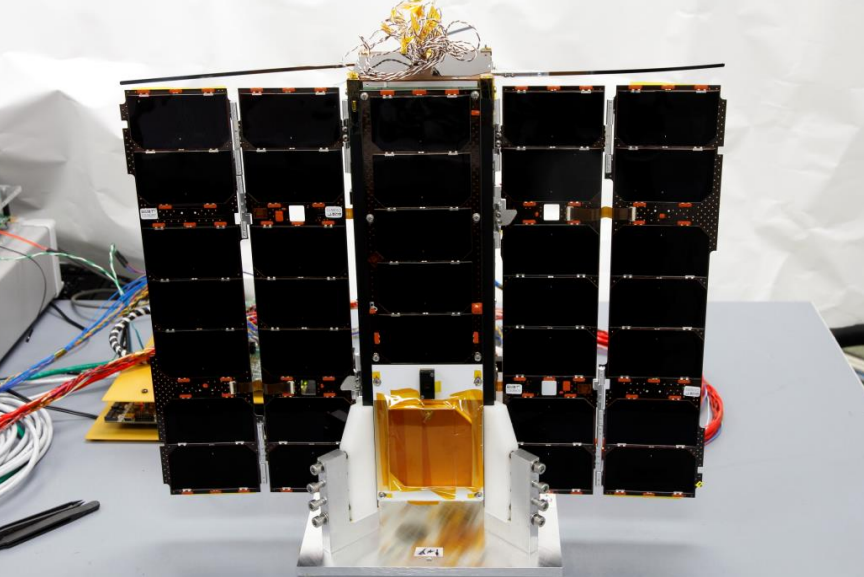

Figure 1: The OPS-SAT spacecraft in the clean room with deployed solar arrays (TU Graz).

Images downlinked from the spacecraft serve as training data to which artificial fixed-pattern noise is applied to simulate sensor degradation. The trained neural networks are uplinked to the spacecraft's edge computer payload where they are processed by the onboard TensorFlow Lite interpreter to output noise-free images.
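As an illustration of the noising step, the following minimal sketch adds a fixed per-column offset to a grayscale image. The noise model, the function names, and the reading of `factor` as the maximum per-column offset in 8-bit intensity levels are all assumptions made for illustration; the noise generator used in the experiment may differ.

```python
import random


def make_column_pattern(width, factor, seed=0):
    """Draw one fixed offset per sensor column, uniformly in [-factor, +factor]."""
    rng = random.Random(seed)
    return [rng.uniform(-factor, factor) for _ in range(width)]


def apply_fixed_pattern_noise(image, pattern):
    """Add the same per-column offsets to every row, then clip to 0..255.

    `image` is a list of rows of 8-bit grayscale values.
    """
    return [
        [min(255, max(0, round(pixel + offset))) for pixel, offset in zip(row, pattern)]
        for row in image
    ]


# Hypothetical 4x6 mid-gray image standing in for a downlinked picture.
clean = [[128] * 6 for _ in range(4)]
pattern = make_column_pattern(width=6, factor=50)
noised = apply_fixed_pattern_noise(clean, pattern)
```

Because the pattern is drawn once and reused, every image receives the same spatial noise, which is what distinguishes fixed-pattern noise from random per-frame noise. Each `(noised, clean)` pair can then serve as one training example.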

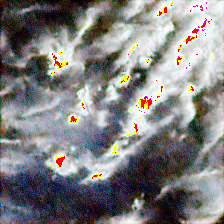

On September 29, 2023, the OPS-SAT-1 mission achieved a significant milestone when it successfully captured, noised, and subsequently denoised two images using WGANs, marking the first application of Generative AI in space.

The restored images have high structural similarity indices of 0.894 and 0.922 relative to their originals, where 1 would indicate identical images. Interestingly, some reconstructed images are more confidently labeled by the onboard convolutional neural network image classifier than their original counterparts. This counterintuitive observation challenges the conventional understanding that higher resolution always yields better results. It suggests that simplifying or modifying certain data features can enhance the ability of some models to interpret their inputs accurately.
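To make the structural similarity index concrete, here is a simplified sketch that computes SSIM over a single global window. Standard SSIM implementations average the same formula over many local windows, so this version is only illustrative and would not reproduce the values reported above. The function name and the sample pixel values are hypothetical.

```python
from statistics import fmean


def global_ssim(x, y, dynamic_range=255.0):
    """Single-window SSIM for two equally sized flat lists of pixel values."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    # Stabilizing constants from the standard SSIM definition.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean([(a - mu_x) ** 2 for a in x])
    var_y = fmean([(b - mu_y) ** 2 for b in y])
    cov_xy = fmean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical flattened pixel values for an original and a restored image.
original = [10, 50, 90, 130, 170, 210]
restored = [12, 48, 95, 128, 165, 214]
score = global_ssim(original, restored)
```

An image compared with itself scores 1, and the score drops as mean brightness, contrast, or structure diverge.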

|

|

|

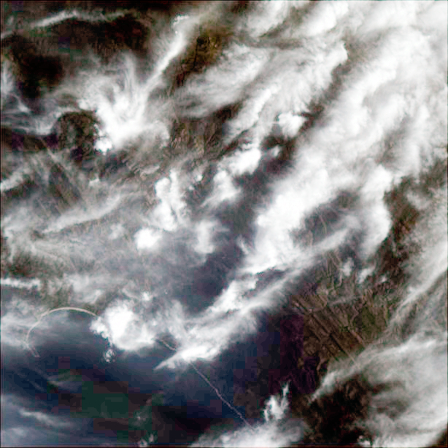

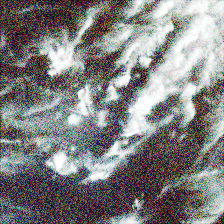

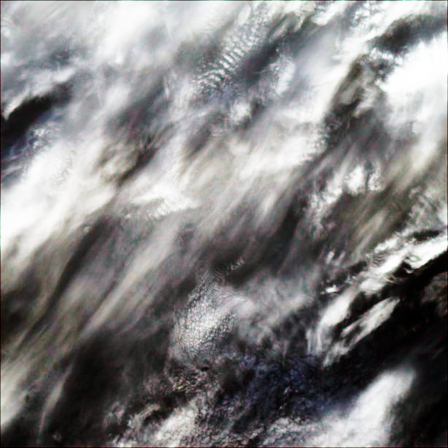

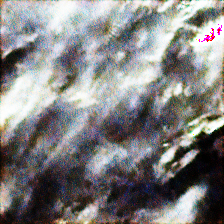

| (a) Original. | (b) Noised. | (c) Denoised. |

|

|

|

| (d) Original. | (e) Noised. | (f) Denoised. |

|

|

|

| (g) Original. | (h) Noised. | (i) Denoised. |

Figure 2: Fixed-Pattern Noise (FPN) factor 50 noising and WGANs Generative AI denoising onboard the spacecraft. Images are post-processed.

Links to our paper will be provided as soon as it is published. Meanwhile, enjoy browsing the repository and feel free to reach out via GitHub Issues!

We appreciate citations to our upcoming peer-reviewed IEEE publication. Thank you!

    @inproceedings{ieee_aeroconf_labreche2024,
      title = {{Generative AI... in Space! Adversarial Networks to Denoise Images Onboard the OPS-SAT-1 Spacecraft}},
      author = {Labrèche, Georges and Guzman, Cesar and Bammens, Sam},
      booktitle = {{2024 IEEE Aerospace Conference}},
      year = {2024}
    }

Labrèche, G., Guzman, C., & Bammens, S. (2024). Generative AI... in Space! Adversarial Networks to Denoise Images Onboard the OPS-SAT-1 Spacecraft. 2024 IEEE Aerospace Conference.

The OPS-SAT-1 mission has achieved many firsts in onboard machine learning with neural networks. Past experiments have successfully executed TensorFlow Lite model interpreters on the spacecraft's edge computer payload [2, 3, 4]. Onboard ML has since become common practice for the spacecraft's mission control team, particularly for image-based autonomous decision-making. The experiment presented in this paper builds on those successes to train and evaluate onboard Generative AI.

Have an experiment idea? Register to become an experimenter!

The authors would like to thank the OPS-SAT-1 Mission Control Team at ESA's European Space Operations Centre (ESOC) for their continued support in validating the experiment, especially Vladimir Zelenevskiy, Rodrigo Laurinovics, Marcin Jasiukowicz, and Adrian Calleja. Their persistence in scheduling and running the experiment onboard the spacecraft until sufficient data was acquired and downlinked was crucial to the success of this experiment. A special thank you to Kevin Cheng from Subspace Signal for granting and facilitating remote access to the GPU computing infrastructure used to train the models presented in this work.

[1] Segert, T., Engelen, S., Buhl, M., & Monna, B. (2011). Development of the Pico Star Tracker ST-200 – Design Challenges and Road Ahead. 25th Annual Small Satellite Conference, Logan, UT, USA.

[2] Labrèche, G., Evans, D., Marszk, D., Mladenov, T., Shiradhonkar, V., Soto, T., & Zelenevskiy, V. (2022). OPS-SAT Spacecraft Autonomy with TensorFlow Lite, Unsupervised Learning, and Online Machine Learning. 2022 IEEE Aerospace Conference, Big Sky, MT, USA.

[3] Mladenov, T., Labrèche, G., Syndercombe, T., & Evans, D. (2022). Augmenting Digital Signal Processing with Machine Learning techniques using the Software Defined Radio on the OPS-SAT Space Lab. 73rd International Astronautical Congress, Paris, France.

[4] Kacker, S., Meredith, A., Cahoy, K., & Labrèche, G. (2022). Machine Learning Image Processing Algorithms Onboard OPS-SAT. 36th Annual Small Satellite Conference, Logan, UT, USA.

Get to know the experimenters!

Georges enjoys running marathons and running experiments on the OPS-SAT-1 Space Lab. Through his work, he has established himself as a leading figure in applied artificial intelligence and in-orbit machine learning for onboard spacecraft autonomy. He lives in Queens, NY, and supports the OPS-SAT-1 mission through his Estonia-based consultancy Tanagra Space. Georges received his B.S. in Software Engineering from the University of Ottawa, Canada, his M.A. in International Affairs from the New School University in New York, NY, and his M.S. in Spacecraft Design from Luleå University of Technology in Kiruna, Sweden.

César is an interdisciplinary R&D engineer with a background in reactive planning, plan execution and monitoring, space systems engineering, and machine learning. He holds a Ph.D. in AI from the Polytechnic University of Valencia. He enjoys developing machine learning experiments on the OPS-SAT-1 Space Lab. In 2012, he collaborated with NASA Ames Research Center, and he is a partner at the Estonia-based consultancy Tanagra Space.

Sam graduated in 2021 with an M.S. degree in electronics and computer engineering technology from Hasselt University and KU Leuven. He began his career at ESA, where he spent his first two years as a Young Graduate Trainee on the OPS-SAT mission control team. He then moved to the role of Spacecraft Operations Engineer in the interplanetary department at ESA's ESOC, as part of the flight control team for ESA's Solar Orbiter mission.