Neural search through protein sequences using the ProtBert model and the Jina AI framework.

App demo:

protein_search.mp4
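The core idea behind neural search fits in a few lines of Python. This is an illustrative sketch under assumptions, not this project's backend code. It assumes every protein has already been turned into a fixed-length embedding vector (in this app, by ProtBert) and ranks the stored proteins by cosine similarity to the query's vector. The helper names `cosine_similarity` and `top_k` and the toy three-dimensional vectors are hypothetical. The real backend hands matching to Jina, and its configured metric may differ.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Hypothetical helper: return the k indexed items most similar to the query, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real ProtBert embeddings are far longer.
index = {
    "protein_a": [0.9, 0.1, 0.0],
    "protein_b": [0.0, 1.0, 0.0],
    "protein_c": [0.7, 0.7, 0.1],
}
print(top_k([1.0, 0.0, 0.0], index, k=2))  # protein_a ranks first, then protein_c
```

A real index would hold one much longer vector per protein and would typically use an approximate nearest-neighbour structure instead of this linear scan.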

- ProtBert https://huggingface.co/Rostlab/prot_bert

- Jina AI https://jina.ai

- 3D protein models http://3dmol.org
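ProtBert does not take a raw sequence string directly. The usage example on its Hugging Face model card replaces the rare amino acids U, Z, O and B with X and separates residues with spaces. The card also says the model only works with uppercase amino acids. The sketch below applies those steps using only the standard library. The function name `prepare_for_protbert` and the extra whitespace stripping and uppercasing are assumptions for illustration, not code from this repository.

```python
import re


def prepare_for_protbert(sequence: str) -> str:
    """Hypothetical helper: format a raw amino-acid string the way the ProtBert model card shows."""
    # Assumption: drop whitespace/newlines (e.g. from FASTA line wrapping) and uppercase,
    # since ProtBert was trained on capital-letter amino acids only.
    residues = re.sub(r"\s+", "", sequence).upper()
    # Map the rare amino acids U, Z, O and B to X, as in the model card example.
    residues = re.sub(r"[UZOB]", "X", residues)
    # One residue per token, separated by single spaces.
    return " ".join(residues)


print(prepare_for_protbert("aetc\nzao"))  # A E T C X A X
```

The resulting string would then go to the `BertTokenizer` loaded from `Rostlab/prot_bert` with `do_lower_case=False`, as in the model card.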

First, clone the repository with git:

git clone https://github.com/georgeamccarthy/protein_search/ # Cloning

cd protein_search # Changing directory

If you're familiar with Docker, you can simply run `make docker` (assuming you're running Linux).

The above command will:

- Create the container for the frontend, install its dependencies, and start the Streamlit application
- Create the container for the backend, install its dependencies, and start the Jina application
- Print links in the logs for accessing the two containers

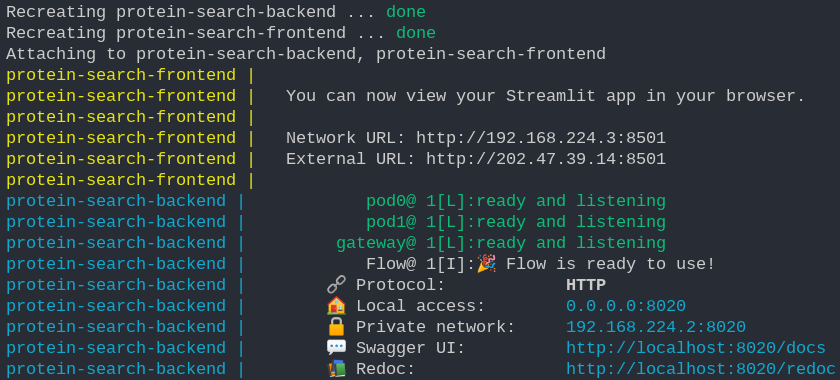

Visually, you should see something like,

From there, you should be able to open the Streamlit frontend and enter your protein-related query.

Some notes before you use this route:

- Docker takes some time to build the wheels for the dependencies, so the `pip` step in each container may last as long as 1-2 minutes.
- The `torch` dependency in `backend/requirements.txt` is 831.1 MB at the time of writing. Unless you see red-colored logs, everything is fine; `torch` is simply taking time to install.
- This project uses the `Rostlab/prot_bert` pre-trained model from HuggingFace, which is 1.68 GB in size.

The good news is that you only need to install these dependencies and build the images once. Docker caches all of the layers and build steps, and caching for the pre-trained model is built in as well.

Other available commands:

- To stop the Docker logs, press Ctrl+C.
- To resume, run `make up`.
- To remove the containers running in the background, run `make remove`.
- To build the containers again, run `make docker`.

When you introduce new changes, neither container needs to be restarted to pick them up.

To run the app without Docker, run the following commands in each of the `frontend` and `backend` folders:

- Create a new `venv` virtual environment:

cd folder_to_go_into/ # `folder_to_go_into` is either `frontend` or `backend`

python3 -m venv venv

source venv/bin/activate

- Install the dependencies:

pip install -r requirements.txt

If you are in `backend`, run `python3 src/app.py`.

Then open a new terminal, go back into the `frontend` folder, repeat the venv creation and dependency installation, and run `streamlit run app.py`.

Refer to the Makefile for the specific commands.

To format the code with the black code style:

$ make format

Code linting with flake8

$ make lint

Testing

$ make test

Testing with coverage analysis

$ make coverage

Format, test and coverage

$ make build