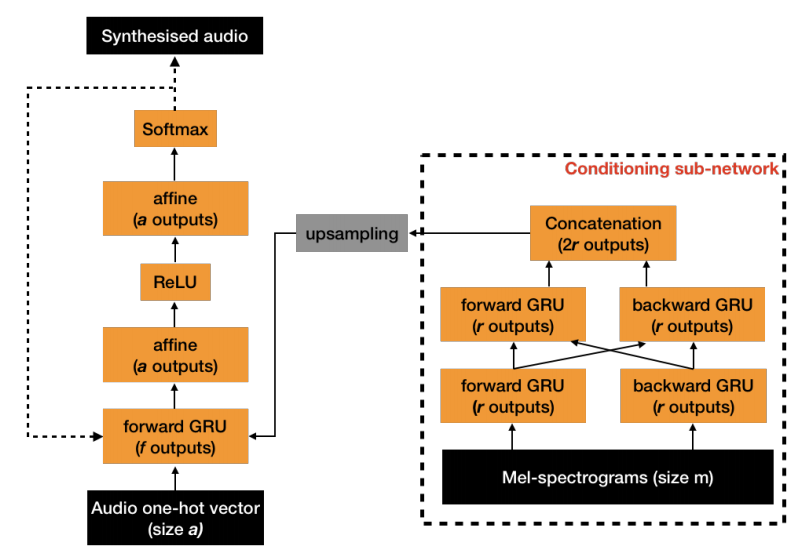

Robust Universal Neural Vocoding

A PyTorch implementation of Robust Universal Neural Vocoding. Audio samples can be found here.

Quick Start

- Ensure you have Python 3 and PyTorch 1.x installed.

- Clone the repo:

git clone https://github.com/bshall/UniversalVocoding

cd ./UniversalVocoding

- Install requirements:

pip install -r requirements.txt

- Download and extract the ZeroSpeech 2019: TTS without T English dataset:

wget https://download.zerospeech.com/2019/english.tgz

tar -xvzf english.tgz

- Extract mel spectrograms and preprocess the audio:

python preprocess.py

- Train the model:

python train.py

- Generate audio with a trained checkpoint:

python generate.py --checkpoint=/path/to/checkpoint.pt --wav-path=/path/to/wav.wav
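The preprocessing step (`python preprocess.py`) turns each waveform into a mel spectrogram that conditions the vocoder. A minimal NumPy sketch of a log-mel spectrogram is shown below. All parameter values (16 kHz sample rate, `n_fft=2048`, `hop_length=200`, `win_length=800`, 80 mel bands), the HTK-style mel scale, and the `1e-5` log floor are assumptions for illustration, not necessarily what `preprocess.py` uses; the script may also apply steps such as pre-emphasis or normalization that are omitted here.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale (an assumption; other tools default to the Slaney scale)
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels, fmin=0.0, fmax=None):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    fmax = sr / 2 if fmax is None else fmax
    # n_mels + 2 edge points: each filter spans (left, center, right)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_spectrogram(wav, sr=16000, n_fft=2048, hop_length=200,
                        win_length=800, n_mels=80):
    """Return a (n_frames, n_mels) log-mel spectrogram (hypothetical parameters)."""
    # Reflect-pad so frames are centred on multiples of hop_length
    pad = win_length // 2
    wav = np.pad(wav, (pad, pad), mode="reflect")
    n_frames = 1 + (len(wav) - win_length) // hop_length
    frames = np.stack([wav[t * hop_length:t * hop_length + win_length]
                       for t in range(n_frames)])
    # Windowed frames are zero-padded to n_fft inside rfft
    magnitude = np.abs(np.fft.rfft(frames * np.hanning(win_length), n=n_fft, axis=1))
    mel = magnitude @ mel_filterbank(sr, n_fft, n_mels).T
    # Floor before the log to avoid log(0) on silent frames
    return np.log(np.maximum(mel, 1e-5))
```

With these assumed settings, one second of 16 kHz audio yields 81 frames of 80 mel bands, i.e. one frame every 12.5 ms.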

Pretrained Models

Pretrained weights for the 9-bit model are available here.

Notable Differences from the Paper

- Trained on 16 kHz audio from 102 different speakers (ZeroSpeech 2019: TTS without T English dataset)

- The model generates 9-bit mu-law audio (training a 10-bit model is planned)

- Uses an embedding layer instead of one-hot encoding
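The last two differences can be illustrated with a short NumPy sketch. It assumes the standard mu-law companding formula with mu = 2^9 - 1 = 511 (512 classes) and rounds to the nearest level; the repository's own quantization code may differ in details such as rounding. The second half shows why an embedding layer can replace one-hot encoding: looking up row `q` of a weight table gives the same vector as multiplying a one-hot vector by that table, but skips the 512-wide matrix multiply. `embedding_dim = 256` and the random table are hypothetical placeholders; in PyTorch this lookup is what `torch.nn.Embedding` performs.

```python
import numpy as np

BITS = 9
MU = 2 ** BITS - 1  # 511, giving 512 quantization levels


def mulaw_encode(x, mu=MU):
    """Map a float waveform in [-1, 1] to integer classes in [0, mu]."""
    x = np.clip(x, -1.0, 1.0)
    # Companding: fine resolution near zero, coarse near full scale
    y = np.sign(x) * np.log1p(mu * np.abs(x)) / np.log1p(mu)
    # Shift [-1, 1] to [0, mu] and round to the nearest level
    return np.floor((y + 1) / 2 * mu + 0.5).astype(np.int64)


def mulaw_decode(q, mu=MU):
    """Invert mulaw_encode (up to quantization error)."""
    y = 2 * q.astype(np.float64) / mu - 1
    return np.sign(y) * ((1 + mu) ** np.abs(y) - 1) / mu


# Embedding lookup vs. one-hot encoding (hypothetical sizes)
rng = np.random.default_rng(0)
embedding_dim = 256
W = rng.standard_normal((MU + 1, embedding_dim))  # would be learned in a real model

q = mulaw_encode(np.array([-0.5, 0.0, 0.25]))
one_hot = np.eye(MU + 1)[q]   # (3, 512) one-hot rows
via_matmul = one_hot @ W      # one-hot input to a bias-free linear layer
via_lookup = W[q]             # embedding lookup: index the rows directly
assert np.allclose(via_matmul, via_lookup)
```

Both paths produce identical vectors, so the embedding is a cheaper way to feed the previous quantized sample to the network rather than a change in what the model can represent.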