A proof of concept showing that Redis is a viable option for the following use case:

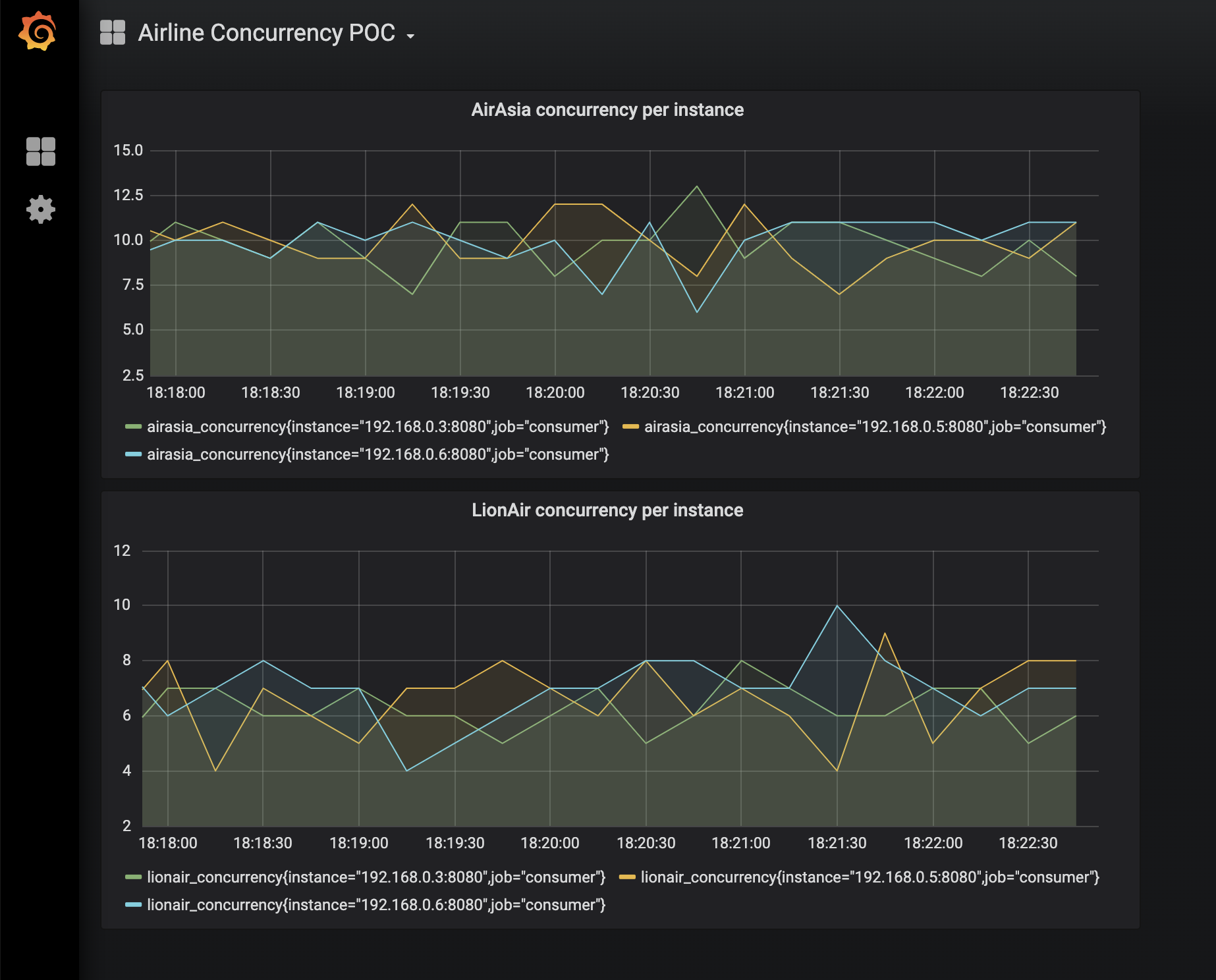

- multiple job queues (lionair, airasia)

- multiple consumer pools reading from queues

- distributed queue / job scheduler

- max concurrency of jobs being processed per queue (example: lionair = 20 jobs, airasia = 30 jobs concurrently)
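The per-queue concurrency cap can be pictured as a token bucket per queue: a consumer takes a token before processing a job and returns it afterwards. The sketch below is an in-memory stand-in, not this project's implementation. The class name `QueueConcurrencyLimiter` and its methods are hypothetical, and a Redis-backed version would need the check-and-decrement to be atomic across consumers (for example with a Lua script).

```python
import threading


class QueueConcurrencyLimiter:
    """Hypothetical in-memory stand-in for a per-queue token bucket."""

    def __init__(self, limits):
        # limits maps queue id -> max jobs processed concurrently
        self._limits = dict(limits)
        self._tokens = dict(limits)
        self._lock = threading.Lock()

    def try_acquire(self, queue_id):
        """Take one token for the queue; return False if none are left."""
        with self._lock:
            if self._tokens[queue_id] > 0:
                self._tokens[queue_id] -= 1
                return True
            return False

    def release(self, queue_id):
        """Return one token, never exceeding the configured limit."""
        with self._lock:
            if self._tokens[queue_id] < self._limits[queue_id]:
                self._tokens[queue_id] += 1

    def available(self, queue_id):
        """Number of tokens currently left for the queue."""
        with self._lock:
            return self._tokens[queue_id]


# Limits taken from the example above: lionair = 20, airasia = 30.
limiter = QueueConcurrencyLimiter({"lionair": 20, "airasia": 30})
```

A consumer would call `try_acquire` before picking up a job and `release` in a `finally` block once the job finishes, so that a bucket at 0 means the queue is at its cap.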

- Install k6

- Run the entire setup, with 3 consumers -

docker-compose up --scale consumer=3 - Run the loadtest with (X concurrent users over Y duration) -

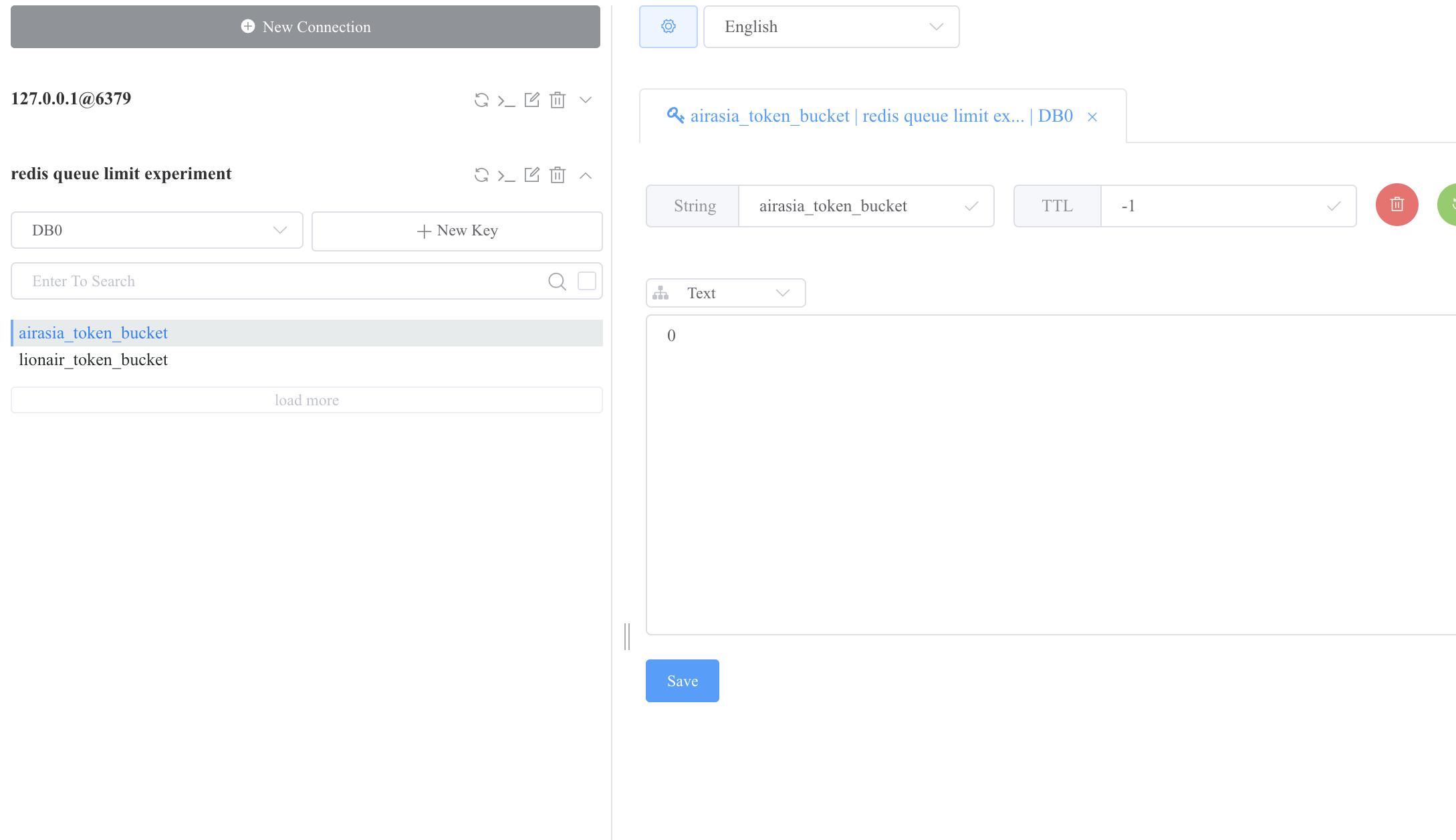

k6 run --vus 300 --duration 120s k6.js - Observe the redis token bucket drop to 0:

- Observe distribution of load on grafana.

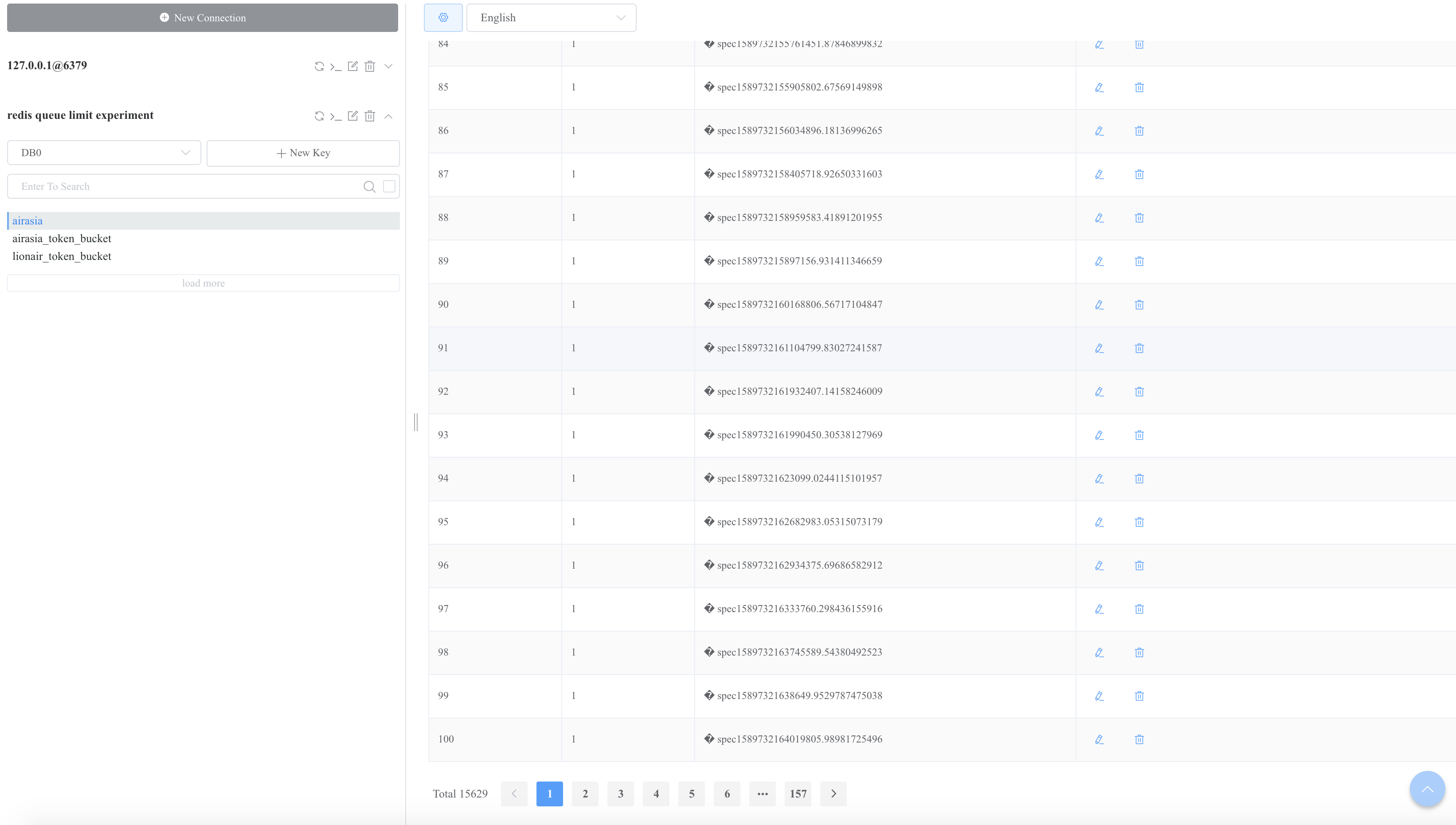

- Observe the queue build up

- Make your code changes

- Rebuild changed code:

      docker-compose build

- Bring up the entire setup:

      docker-compose up

Queue a job:

    curl --request POST \
      --url http://localhost:8081/queueJob \
      --header 'content-type: application/json' \
      --data '{
        "queueId": "airasia",
        "name": "spec2",
        "score": 2.1
      }'
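The same request can be built from a script, which is handy when queueing many jobs. This is a minimal sketch using only the Python standard library. The helper name `build_queue_job_request` is hypothetical, and the URL and field names are copied from the curl example above.

```python
import json
import urllib.request


def build_queue_job_request(queue_id, name, score,
                            base_url="http://localhost:8081"):
    """Build (but do not send) the POST request for /queueJob."""
    payload = {"queueId": queue_id, "name": name, "score": score}
    return urllib.request.Request(
        url=f"{base_url}/queueJob",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Same job as the curl example above.
request = build_queue_job_request("airasia", "spec2", 2.1)
```

With the setup running, passing `request` to `urllib.request.urlopen` sends it.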

- More error handling: release a queue's concurrency slot when the thread or instance processing a job dies

- Add pumba to simulate failures

- Fix the karate load tests; for an unknown reason they do not generate load the same way k6 does
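For the first item, one possible approach (an assumption, not something this project implements) is to hold each concurrency slot as a lease with an expiry time: a live worker keeps renewing its lease, and a slot whose worker died frees itself once the lease lapses. The sketch below is in-memory and single-threaded, with hypothetical names (`SlotLeases`, `heartbeat`). The clock is passed in explicitly so the behaviour is easy to follow.

```python
class SlotLeases:
    """Hypothetical lease table: a slot is held only until its lease expires."""

    def __init__(self, limits, ttl_seconds):
        self._limits = dict(limits)
        self._ttl = ttl_seconds
        # queue id -> {job id: expiry timestamp}
        self._leases = {queue_id: {} for queue_id in limits}

    def _reap(self, queue_id, now):
        """Drop leases whose expiry has passed (their worker is presumed dead)."""
        leases = self._leases[queue_id]
        for job_id in [j for j, expiry in leases.items() if expiry <= now]:
            del leases[job_id]

    def try_acquire(self, queue_id, job_id, now):
        """Take a slot for job_id if the queue is below its limit."""
        self._reap(queue_id, now)
        leases = self._leases[queue_id]
        if len(leases) >= self._limits[queue_id]:
            return False
        leases[job_id] = now + self._ttl
        return True

    def heartbeat(self, queue_id, job_id, now):
        """Extend a live lease; return False if it already expired or is unknown."""
        leases = self._leases[queue_id]
        if job_id in leases and leases[job_id] > now:
            leases[job_id] = now + self._ttl
            return True
        return False

    def release(self, queue_id, job_id):
        """Free the slot explicitly when the job finishes normally."""
        self._leases[queue_id].pop(job_id, None)
```

The trade-off is the choice of TTL: a short TTL frees slots from dead workers quickly but requires more frequent heartbeats from healthy ones.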