A repository of different implementations of variational autoencoders (VAEs) in PyTorch.

The architecture is inspired by U-Net, an encoder-decoder architecture. The encoder path consists of ConvBlock and Downsample modules that progressively reduce the spatial dimensions while increasing feature channels. At the bottleneck, ResidualBlock modules refine the encoded features. The decoder path mirrors the encoder, using ConvBlock and Upsample modules to restore the original spatial dimensions. The network begins with an input projection layer and ends with an output projection layer, ensuring the output matches the input's spatial dimensions.
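The layout described above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the repository's actual code: the module names `ConvBlock`, `Downsample`, `Upsample`, and `ResidualBlock` come from the description, but their internals (3x3 convolutions, `GroupNorm`, `SiLU`, strided-convolution downsampling, nearest-neighbour upsampling), the wrapper name `EncoderDecoder`, and the default channel counts are all assumptions. The sketch also leaves out skip connections and the VAE's latent sampling step.

```python
import torch
import torch.nn.functional as F
from torch import nn


class ConvBlock(nn.Module):
    """Conv -> GroupNorm -> SiLU. Changes channel count, keeps spatial size (assumed internals)."""

    def __init__(self, in_ch: int, out_ch: int):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
            nn.GroupNorm(num_groups=8, num_channels=out_ch),
            nn.SiLU(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


class ResidualBlock(nn.Module):
    """Two ConvBlocks with an identity shortcut (assumed internals)."""

    def __init__(self, ch: int):
        super().__init__()
        self.block = nn.Sequential(ConvBlock(ch, ch), ConvBlock(ch, ch))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x + self.block(x)


class Downsample(nn.Module):
    """Halves height and width with a stride-2 convolution (assumed choice)."""

    def __init__(self, ch: int):
        super().__init__()
        self.conv = nn.Conv2d(ch, ch, kernel_size=3, stride=2, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.conv(x)


class Upsample(nn.Module):
    """Doubles height and width with nearest-neighbour interpolation, then a conv (assumed choice)."""

    def __init__(self, ch: int):
        super().__init__()
        self.conv = nn.Conv2d(ch, ch, kernel_size=3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = F.interpolate(x, scale_factor=2.0, mode="nearest")
        return self.conv(x)


class EncoderDecoder(nn.Module):
    """Input projection -> encoder -> bottleneck -> decoder -> output projection."""

    def __init__(self, in_ch: int = 3, base_ch: int = 32, depth: int = 2):
        super().__init__()
        self.in_proj = nn.Conv2d(in_ch, base_ch, kernel_size=3, padding=1)

        # Encoder: each stage doubles the channels, then halves the resolution.
        enc, ch = [], base_ch
        for _ in range(depth):
            enc += [ConvBlock(ch, ch * 2), Downsample(ch * 2)]
            ch *= 2
        self.encoder = nn.Sequential(*enc)

        # Bottleneck: residual blocks refine features at the lowest resolution.
        self.bottleneck = nn.Sequential(ResidualBlock(ch), ResidualBlock(ch))

        # Decoder: mirrors the encoder (double the resolution, halve the channels).
        dec = []
        for _ in range(depth):
            dec += [Upsample(ch), ConvBlock(ch, ch // 2)]
            ch //= 2
        self.decoder = nn.Sequential(*dec)

        self.out_proj = nn.Conv2d(ch, in_ch, kernel_size=3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.in_proj(x)
        x = self.encoder(x)
        x = self.bottleneck(x)
        x = self.decoder(x)
        return self.out_proj(x)


# Height and width must be divisible by 2**depth for the output size to match the input.
model = EncoderDecoder()
x = torch.randn(2, 3, 32, 32)
y = model(x)
```

In this sketch the output only matches the input's spatial dimensions when height and width are multiples of `2**depth`; other sizes would need padding or cropping.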

Taken directly from Auto-Encoding Variational Bayes (Kingma and Welling, 2013).

|

|---|

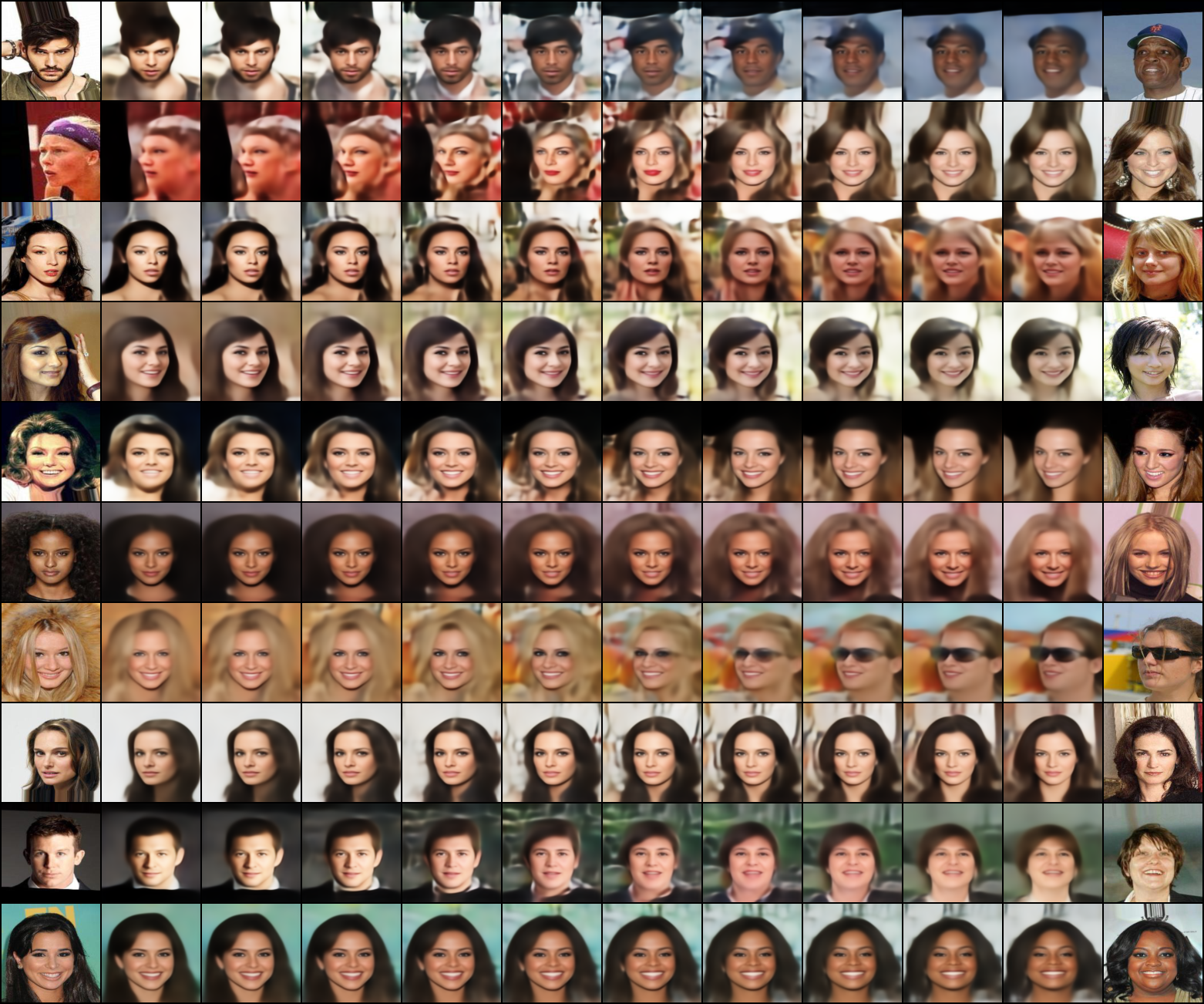

| Figure 3: Interpolations. The leftmost and rightmost images are interpolation endpoints (inputs), while the middle images are interpolations of these two, generated by the model. |

|

|---|

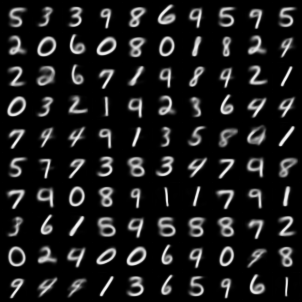

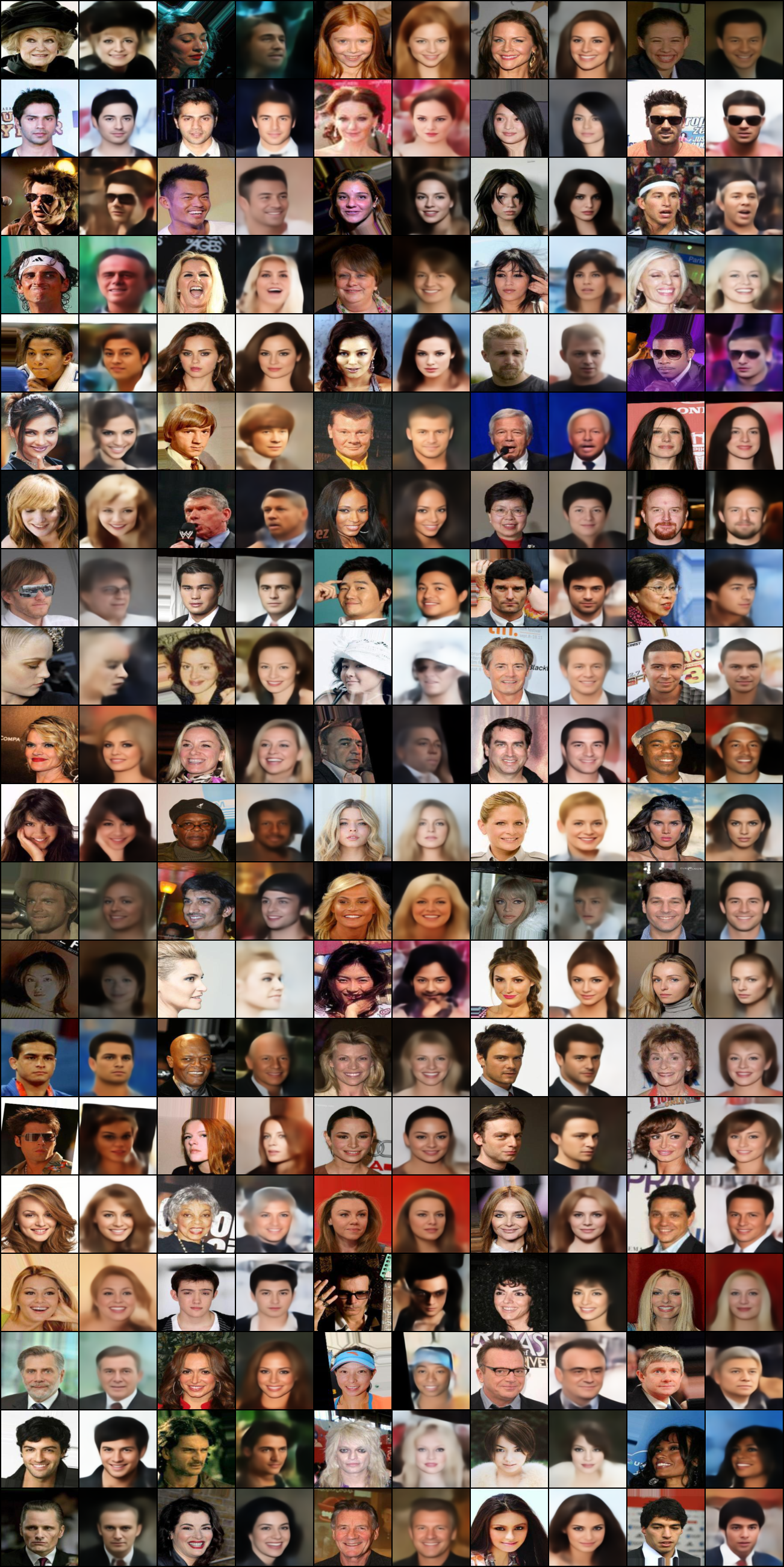

| Figure 4: Samples. |

|

|---|

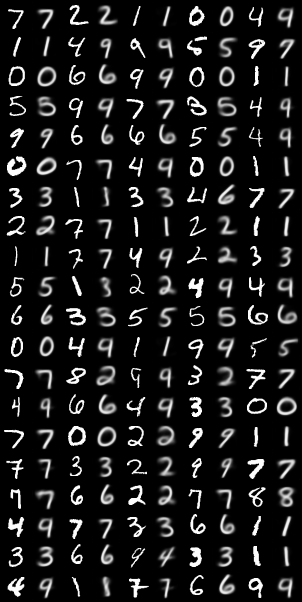

| Figure 3: Interpolations. The leftmost and rightmost images are interpolation endpoints (inputs), while the middle images are interpolations of these two, generated by the model. |

|

|---|

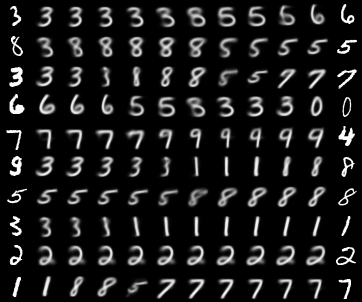

| Figure 4: Samples. |
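Interpolations like those in the Figure 3 captions are commonly produced by encoding the two endpoint images, blending their latent codes, and decoding each blend. The helper below is a minimal sketch of the blending step only. It assumes linear interpolation in latent space, which may differ from what the notebook does, and the name `interpolate_latents` is hypothetical.

```python
import torch


def interpolate_latents(z_start: torch.Tensor, z_end: torch.Tensor, steps: int = 8) -> torch.Tensor:
    """Linearly blend two latent codes of identical shape.

    Returns a tensor of shape (steps, *z_start.shape). The first row equals
    z_start and the last row equals z_end.
    """
    weights = torch.linspace(0.0, 1.0, steps, device=z_start.device, dtype=z_start.dtype)
    # Reshape to (steps, 1, ..., 1) so the weights broadcast over the latent dimensions.
    weights = weights.view(-1, *([1] * z_start.dim()))
    return (1.0 - weights) * z_start + weights * z_end


# Stand-in latent codes; in practice these would come from the model's encoder.
z_a = torch.zeros(4)
z_b = torch.ones(4)
path = interpolate_latents(z_a, z_b, steps=5)
```

Each row of `path` would then be passed through the model's decoder to obtain one image in the interpolation strip.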

To reproduce these results, download the appropriate checkpoint from the Hugging Face directory and run the notebook.