The key idea of LoRA (Low-Rank Adaptation) is to approximate the weight update applied to a large pre-trained model with a product of low-rank matrices. This can be represented by the following matrix equation:

$$W + \Delta W \approx W + AB$$

where:

- $W$ is the original weight matrix.

- $\Delta W$ is the full-rank weight update.

- $A$ and $B$ are low-rank matrices such that $\Delta W \approx AB$.

This equation shows that instead of applying a full-rank update to $W$, LoRA applies the low-rank update $AB$.

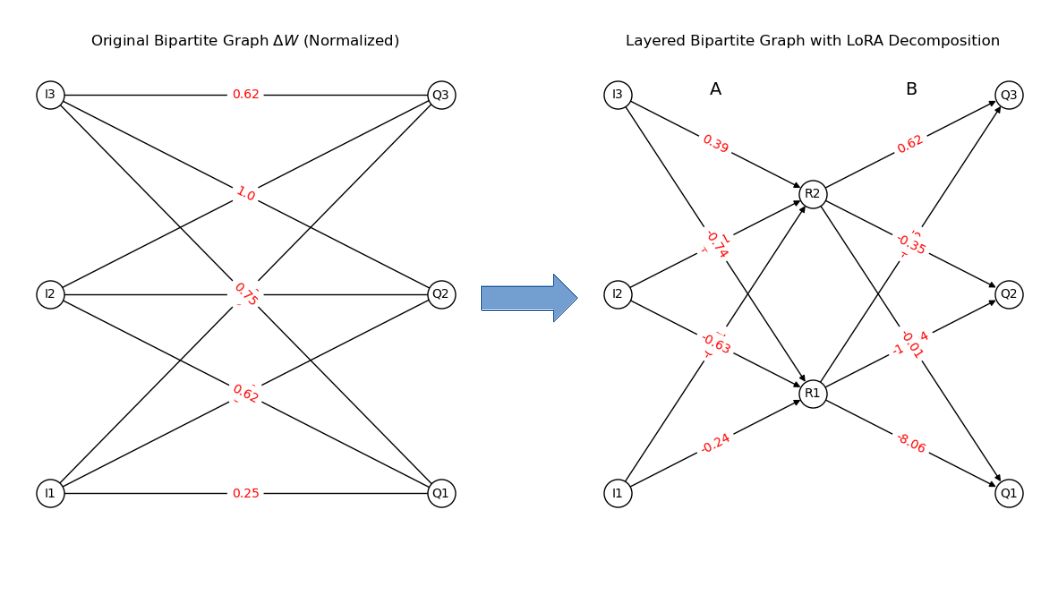

We can also think of this as decomposing the bipartite graph
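The low-rank update can be checked numerically. The following is a minimal NumPy sketch, not code taken from the notebook: the sizes `d`, `k` and the rank `r` are hypothetical, and the shapes of `A` (`d x r`) and `B` (`r x k`) are assumed from the ordering $\Delta W \approx AB$ used above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes and rank, with r much smaller than d and k.
d, k, r = 64, 48, 4

W = rng.normal(size=(d, k))  # original weight matrix
A = rng.normal(size=(d, r))  # low-rank factor, d x r (assumed shape)
B = rng.normal(size=(r, k))  # low-rank factor, r x k (assumed shape)

delta_W = A @ B              # low-rank update with the same shape as W
W_adapted = W + delta_W      # adapted weights: W + AB

# The product of a d x r and an r x k matrix has rank at most r.
update_rank = np.linalg.matrix_rank(delta_W)
print("update shape:", delta_W.shape)
print("update rank:", update_rank)
```

Although `delta_W` has as many entries as `W`, it is fully determined by the much smaller factors `A` and `B`, which is what makes the update cheap to store and train.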

This repository contains code and examples for implementing Low-Rank Adaptation (LoRA) in neural networks, with a focus on attention mechanisms. LoRA adapts large pre-trained models to specific tasks by injecting trainable low-rank matrices into the model's layers, which reduces the computational resources and time required for fine-tuning.

- `LoRA - Low Rank Adaptation.ipynb`: A Jupyter notebook demonstrating the implementation and application of LoRA in attention models. This notebook includes detailed explanations, code examples, and visualizations to help understand how LoRA works.

To explore the implementation and results, open the Jupyter notebook:

    jupyter notebook "LoRA - Low Rank Adaptation.ipynb"

The notebook covers the following topics:

- Introduction to LoRA: An overview of the Low-Rank Adaptation technique, including its motivation and advantages.

- Mathematical Formulation: Detailed mathematical explanation of how LoRA works, including the decomposition of weight matrices and the optimization process.

- Implementation: Code examples demonstrating how to implement LoRA in attention models, with step-by-step explanations.

- Visualization: Visual representations of the original and decomposed weight matrices, showing how LoRA efficiently captures the important features with reduced parameters.

- Applications and Results: Examples of applying LoRA to different tasks, along with performance metrics and comparisons to standard fine-tuning methods.

LoRA is based on the principle of low-rank decomposition. In the context of attention models, it expresses the update to a weight matrix as the product of two much smaller matrices, significantly reducing the number of trainable parameters and the computational cost of fine-tuning.

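Given a weight matrix $W$, LoRA leaves $W$ unchanged and learns two low-rank matrices $A$ and $B$ whose product approximates the update: $\Delta W \approx AB$.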
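As an illustration of how such a layer might look, here is a minimal NumPy sketch of a linear projection with a low-rank path added next to the frozen weights. It is not the notebook's implementation. The class name `LoRALinear`, the zero initialization of `B`, and the `alpha / r` scaling are assumptions based on common LoRA practice, and `W_q` is a hypothetical stand-in for an attention query projection.

```python
import numpy as np


class LoRALinear:
    """Linear map x @ W with an added low-rank path x @ A @ B (illustrative only)."""

    def __init__(self, W, r, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_in, d_out = W.shape
        self.W = W                                        # pre-trained weights, kept frozen
        self.A = rng.normal(scale=0.01, size=(d_in, r))   # trainable factor, small random init
        self.B = np.zeros((r, d_out))                     # trainable factor, zero init (assumption)
        self.scale = alpha / r                            # assumed scaling of the update

    def __call__(self, x):
        # Two thin matrix products; the full d_in x d_out product AB is never formed here.
        return x @ self.W + self.scale * ((x @ self.A) @ self.B)

    def merged_weight(self):
        # Fold the low-rank update into a single matrix, e.g. for inference.
        return self.W + self.scale * (self.A @ self.B)


rng = np.random.default_rng(1)
W_q = rng.normal(size=(32, 32))          # hypothetical query projection weights
layer = LoRALinear(W_q, r=4, alpha=8.0)
x = rng.normal(size=(5, 32))             # 5 token embeddings of width 32

y = layer(x)
print(y.shape)
```

Because `B` starts at zero in this sketch, the layer initially reproduces the output of the original projection, and only `A` and `B` would need gradients during fine-tuning.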

- Efficiency: Reduces the number of parameters to be fine-tuned.

- Scalability: Makes it feasible to adapt large models to new tasks with limited resources.

- Performance: Maintains high performance while significantly reducing computational requirements.
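To make the efficiency point concrete, the short sketch below counts the entries in a full update versus a low-rank one. The layer size (4096 x 4096) and the rank (8) are hypothetical values chosen for illustration, not figures from the notebook, and the helper names are made up for this example.

```python
def full_update_params(d: int, k: int) -> int:
    """Entries in a full d x k weight update."""
    return d * k


def lora_update_params(d: int, k: int, r: int) -> int:
    """Entries in the factors A (d x r) and B (r x k) of a rank-r update."""
    return r * (d + k)


# Hypothetical layer size and rank.
d, k, r = 4096, 4096, 8

full = full_update_params(d, k)
lora = lora_update_params(d, k, r)

print(f"full update:   {full:,} parameters")
print(f"rank-{r} update: {lora:,} parameters")
print(f"reduction:     {full // lora}x")
```

For these assumed sizes the full update has 16,777,216 entries while the two factors together have 65,536, a 256-fold reduction for that single matrix.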

Contributions are welcome! If you have suggestions or improvements, please create an issue or submit a pull request.

This project is licensed under the MIT License - see the LICENSE file for details.