This repository implements Mesh-VQ-VAE, a vector-quantized autoencoder for human meshes used in VQ-HPS.

If you find this code useful, please consider citing the associated paper.

conda create -n meshvqvae python=3.9

conda activate meshvqvae

conda install pytorch=1.13.0 torchvision pytorch-cuda=11.6 -c pytorch -c nvidia

# Install dependencies for pytorch3d

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install -c bottler nvidiacub

# Install pytorch3d

conda install pytorch3d -c pytorch3d

If the commands above fail, please refer to the installation instructions in the official pytorch3d documentation.

python -m pip install -r requirements.txt
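Once the installation finishes, a quick check can confirm that the main packages are importable from the `meshvqvae` environment. The snippet below is a minimal sketch and not part of this repository: the helper name `missing_modules` is hypothetical, and it only checks that each module can be located, not that CUDA works.

```python
from importlib.util import find_spec


def missing_modules(modules):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in modules if find_spec(name) is None]


if __name__ == "__main__":
    # Import names of the packages installed by the commands above.
    required = ["torch", "torchvision", "fvcore", "iopath", "pytorch3d"]
    missing = missing_modules(required)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

An empty result means every listed module was found; any name that is printed points to the install step to repeat.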

Running the code requires several external downloads, each described below.

First, create folders to store the downloads:

mkdir body_models

mkdir datasets

The SMPL-H body model is used to obtain human meshes. To install the body model:

- Create an account on the project page.

- Go to the Downloads page and download the "Extended SMPL+H model (used in AMASS)". Place the downloaded `smplh.tar.xz` in the `body_models` folder and extract it.

- This should create a `smplh` folder. Rename the `male` subfolder to `m` and the `female` subfolder to `f`.
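The extraction and renaming steps above can also be scripted. The following is a minimal sketch, assuming the archive unpacks into a top-level `smplh` folder containing `male` and `female` subfolders, as described above. The function name `extract_smplh` is hypothetical and not part of this repository. Only extract archives obtained from the official download page.

```python
import tarfile
from pathlib import Path


def extract_smplh(archive, body_models_dir="body_models"):
    """Extract smplh.tar.xz and rename the male/female subfolders to m/f."""
    dest = Path(body_models_dir)
    dest.mkdir(parents=True, exist_ok=True)

    # "r:xz" opens an xz-compressed tar archive for reading.
    with tarfile.open(archive, "r:xz") as tar:
        tar.extractall(dest)

    # Assumption: the archive creates a top-level "smplh" folder.
    smplh = dest / "smplh"
    for old, new in (("male", "m"), ("female", "f")):
        source, target = smplh / old, smplh / new
        # Skip the rename if it was already done or the folder is absent.
        if source.is_dir() and not target.exists():
            source.rename(target)
    return smplh


if __name__ == "__main__":
    archive_path = Path("body_models/smplh.tar.xz")
    if archive_path.is_file():
        print("Extracted to", extract_smplh(archive_path))
    else:
        print("Archive not found:", archive_path)
```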

The Mesh-VQ-VAE encoder-decoder architecture is based on the fully convolutional mesh autoencoder (see the project page of the corresponding paper [here]). In order to use this model, please download:

- The `template.ply` file available here, and place it in the `body_models` folder.

- The connection matrices of the mesh autoencoder available here under the `dfaust2` folder. Rename the folder `ConnectionMatrices` and place it under the `body_models` folder.

Mesh-VQ-VAE is trained on the SMPL animation files used to create the BEDLAM dataset. To download this data:

- Create an account on the project page.

- Go to the Downloads page and download `SMPL neutral`, located in the `SMPL ground truth/animation files` section.

- Extract the downloaded data in the `datasets` folder and rename it `bedlam_animations`.

To finetune and test Mesh-VQ-VAE, we use the annotations of the 3DPW dataset:

- Download the `sequenceFiles.zip` file, and place it in a `datasets/3DPW` folder. Note that we do not need to download the images.

- Run `python mesh_vq_vae/data/preprocess.py`. This will create `npz` files for each split of the 3DPW dataset. You can then delete the original dataset annotations.
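Before training, it can help to confirm that every download ended up where the instructions above place it. The sketch below is a hypothetical helper, not part of this repository. The path list is assembled from the steps above, and it assumes the BEDLAM animations live under the `datasets` folder created earlier.

```python
from pathlib import Path

# Paths assembled from the download instructions above.
# Assumption: BEDLAM data sits under "datasets/", the folder created earlier.
EXPECTED_PATHS = [
    "body_models/smplh/m",
    "body_models/smplh/f",
    "body_models/template.ply",
    "body_models/ConnectionMatrices",
    "datasets/bedlam_animations",
    "datasets/3DPW",
]


def missing_paths(root=".", expected=EXPECTED_PATHS):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing:")
        for rel in missing:
            print("  ", rel)
    else:
        print("All expected files and folders are in place.")
```

Run it from the repository root; an empty result means the layout matches the steps above.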

You can train Mesh-VQ-VAE by running:

python train_mesh_vqvae.py

The configuration of the model used in VQ-HPS can be found under config_autoencoder/config_medium. The pre-trained weights are in checkpoint/mesh-vqvae_54.

To finetune Mesh-VQ-VAE, update the checkpoint in the configuration file and use:

python finetune_mesh_vq_vae.py

Mesh-VQ-VAE can be tested using:

python test_mesh_vqvae.py

As with finetuning, the checkpoint path can be updated in the configuration file.

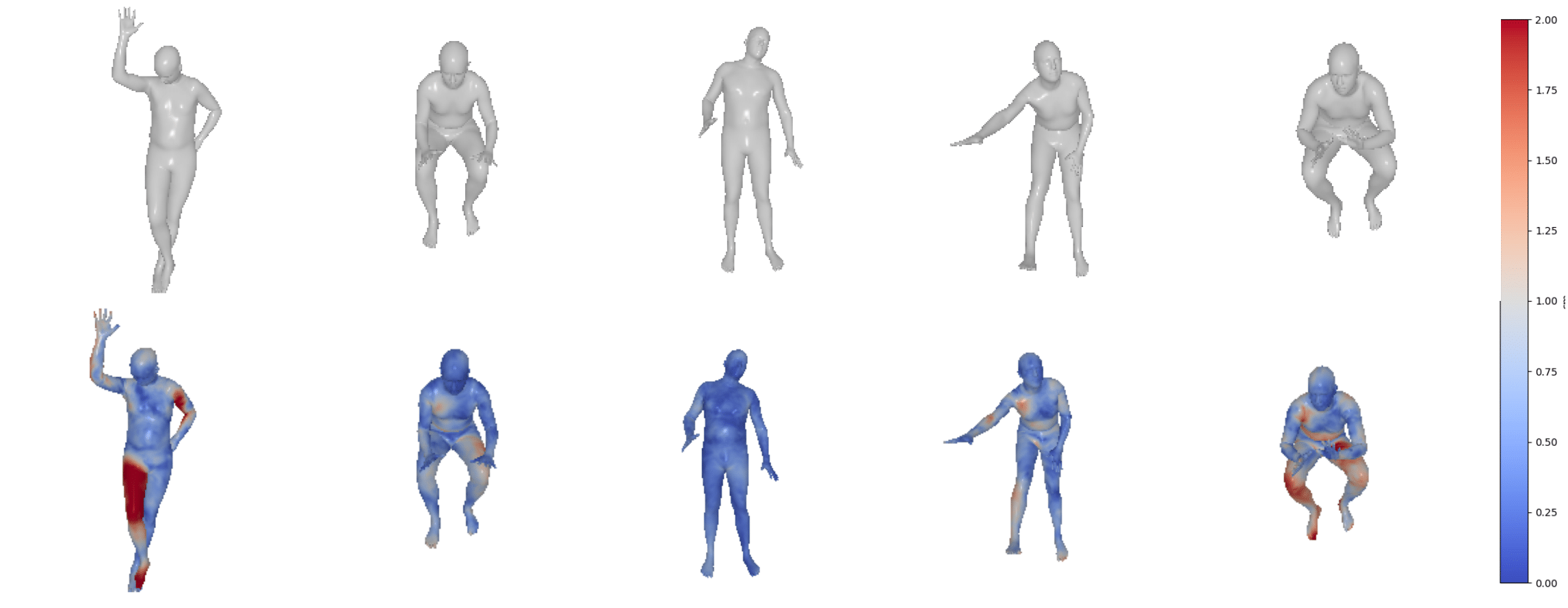

To visualize operations in the latent space, use:

python analyze_vqvae.py --p "path_to_save_visualizations"

This study is part of the EUR DIGISPORT project supported by the ANR within the framework of the PIA France 2030 (ANR-18-EURE-0022). This work was performed using HPC resources from the “Mésocentre” computing center of CentraleSupélec, École Normale Supérieure Paris-Saclay, and Université Paris-Saclay supported by CNRS and Région Île-de-France.

Some code in this repository is adapted from the following repositories:

@inproceedings{fiche2024vq,
  title={VQ-HPS: Human Pose and Shape Estimation in a Vector-Quantized Latent Space},
  author={Fiche, Gu{\'e}nol{\'e} and Leglaive, Simon and Alameda-Pineda, Xavier and Agudo, Antonio and Moreno-Noguer, Francesc},
  booktitle={European Conference on Computer Vision ({ECCV})},
  year={2024}
}