Welcome! This repository contains the code to obtain the OCR annotations of the Industry Document Library (IDL), located at https://www.industrydocuments.ucsf.edu/.

More details can be found in the paper.

We used Amazon's OCR engine, Textract, exclusively to obtain the OCR annotations. Below, we explain in depth how we use Textract and parallelize the process to get annotations for 26 million pages. But first, the dependencies/requirements:

- `botocore==1.23.10`
- `boto3==1.20.10`
- `joblib==1.0.0`
- `tqdm==4.61.0`

All the code was run with Python 3.7.9.

- You need to have an AWS account on https://aws.amazon.com/.

- You need root account access to create IAM users (more details below).

- At some point, you may want to request a Textract limit increase by contacting AWS Support; otherwise, processing will be painfully slow.

Textract offers both synchronous and asynchronous operations. We use the asynchronous ones, which means you can submit all the documents to Textract and download the results once Textract has finished processing them.
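The asynchronous flow can be sketched with boto3's Textract client. `start_document_text_detection` submits a PDF that already lives in S3 and returns a `JobId`. `get_document_text_detection` is then polled until the job leaves `IN_PROGRESS`, after which its results are paged through with `NextToken`. This is a minimal sketch, not the code in run_textract.py. The function names and the polling interval are our own assumptions. We pass the client in as an argument so that each worker can build its own client from its own credentials.

```python
import time
from typing import Any, Dict, List


def start_job(client: Any, bucket: str, key: str) -> str:
    """Submit one PDF that already lives in S3; Textract returns a JobId."""
    response = client.start_document_text_detection(
        DocumentLocation={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    return response["JobId"]


def fetch_result(client: Any, job_id: str, poll_seconds: float = 5.0) -> List[Dict[str, Any]]:
    """Poll until the job is no longer IN_PROGRESS, then collect every result page."""
    while True:
        response = client.get_document_text_detection(JobId=job_id)
        status = response["JobStatus"]
        if status != "IN_PROGRESS":
            break
        time.sleep(poll_seconds)  # every poll counts toward the Get TPS limit
    if status == "FAILED":
        raise RuntimeError(f"Textract job {job_id} failed: {response.get('StatusMessage')}")
    blocks = list(response.get("Blocks", []))
    # Results are paginated: keep requesting with NextToken until none is returned.
    while response.get("NextToken"):
        response = client.get_document_text_detection(
            JobId=job_id, NextToken=response["NextToken"]
        )
        blocks.extend(response.get("Blocks", []))
    return blocks
```

In practice, the client would come from `boto3.client("textract", ...)`. Note that Textract requires the S3 bucket to be in the same AWS Region as the endpoint you call.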

The first hard limit is 3,000 pages per document. The second is the number of transactions per second (TPS) per account for all Get (asynchronous) operations, which is 10 by default. Third, the maximum number of asynchronous jobs per account that can exist simultaneously is 600.

You can read more about the hard limits here and here.
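Every Get call counts toward the 10 TPS limit, including calls that only check a job's status. One way to stay under the limit is to space calls out. `RateLimiter` below is a hypothetical helper, not part of the repository, that lets at most `rate` calls start per second within one process. It does not coordinate across processes. If several worker processes share one account's limit, each would need a proportionally smaller rate (for example, 10 divided by the number of processes).

```python
import threading
import time


class RateLimiter:
    """Let at most `rate` calls start per second in this process (hypothetical helper)."""

    def __init__(self, rate: float):
        self.interval = 1.0 / rate
        self.lock = threading.Lock()
        self.next_slot = time.monotonic()

    def wait(self) -> None:
        """Block until the next free call slot, then reserve the slot after it."""
        with self.lock:
            now = time.monotonic()
            if now < self.next_slot:
                time.sleep(self.next_slot - now)
                now = self.next_slot
            self.next_slot = now + self.interval
```

Usage: create one limiter per process, e.g. `limiter = RateLimiter(10 / n_workers)`, and call `limiter.wait()` right before each `get_document_text_detection` call.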

IDL has a somewhat unorthodox file structure. We also keep this structure in our annotations.

```
f/
|-f/f/
|--f/f/b
|---f/f/b/b
...
```

Each folder contains 16 subfolders, and the hierarchy is 4 levels deep. Each folder at the final level usually holds around 220 PDFs. You can browse the folder structure on AWS here.
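To sanity-check a local copy against this layout, you can count the PDFs under each fourth-level folder. This is a hypothetical helper. It assumes the local tree mirrors the S3 layout, as a recursive `aws s3 cp` would produce. It counts PDFs at any depth below each fourth-level folder, so it does not depend on exactly how deep the files sit.

```python
from pathlib import Path
from typing import Dict


def count_pdfs_per_leaf(root: str, depth: int = 4) -> Dict[str, int]:
    """Map each folder `depth` levels below `root` to the number of PDFs beneath it."""
    root_path = Path(root)
    pattern = "/".join(["*"] * depth)  # e.g. "*/*/*/*" for depth 4
    counts = {}
    for leaf in sorted(root_path.glob(pattern)):
        if leaf.is_dir():
            counts[leaf.relative_to(root_path).as_posix()] = sum(
                1 for _ in leaf.rglob("*.pdf")
            )
    return counts
```

Folders with counts far from ~220 are worth a second look before moving on.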

Next, we download the IDL documents to our local drive, even though we only point Textract to their S3 location when we run it. The reasons are as follows:

- Some documents are empty, invalid, or corrupted, and we want to identify them before giving them to Textract.

- Some documents have more than 3,000 pages, which makes Textract hang forever.
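The repository handles these two cases with check_pdf_corrupted.py and, further below, a `find` on file size followed by move_big_files.py. The sketch below is a rough, dependency-free illustration of the idea and does not reproduce those scripts. It flags PDFs that are empty or lack a `%PDF-` header or `%%EOF` trailer. It also treats files over 50 MB as candidates for exceeding the 3,000-page limit. These byte-level checks are our own heuristic: a file can pass them and still be unreadable, and file size only approximates page count.

```python
from pathlib import Path
from typing import List, Tuple

BIG_FILE_BYTES = 50 * 1024 * 1024  # roughly the threshold of `find -size +50M`


def looks_like_valid_pdf(path: Path) -> bool:
    """Cheap structural check: non-empty, PDF header near the start, EOF marker near the end."""
    size = path.stat().st_size
    if size == 0:
        return False
    with path.open("rb") as f:
        head = f.read(1024)
        f.seek(max(0, size - 1024))
        tail = f.read()
    return b"%PDF-" in head and b"%%EOF" in tail


def triage(root: str, big_bytes: int = BIG_FILE_BYTES) -> Tuple[List[Path], List[Path]]:
    """Return (suspect PDFs, big PDFs) under `root`; nothing is moved."""
    suspect, big = [], []
    for pdf in sorted(Path(root).rglob("*.pdf")):
        if not looks_like_valid_pdf(pdf):
            suspect.append(pdf)
        elif pdf.stat().st_size > big_bytes:
            big.append(pdf)
    return suspect, big
```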

So, to download the IDL data, run:

```
aws s3 cp s3://edu.ucsf.industrydocuments.artifacts [PATH_TO_SAVE] --recursive
```

You can also download a specific folder by changing the source path to, for example, s3://edu.ucsf.industrydocuments.artifacts/f or s3://edu.ucsf.industrydocuments.artifacts/g/h.

You get the idea!

Now that we are done with the preliminaries, know about the hard limits, and have downloaded the PDFs, let's get to work.

First, as mentioned above, we need to do some cleaning. We check for corrupted files and move them (you need to change ROOT and OUT in check_pdf_corrupted.py; sorry, I am lazy):

```
python check_pdf_corrupted.py
```

Then we find all the big files (larger than 50 MB), since these are the candidates for having more than 3,000 pages:

```
find ./IDL/ -type f -size +50M -name "*.pdf" > ./big_files.txt
```

Then run the following (after changing the path information inside move_big_files.py):

```
python move_big_files.py
```

All the metadata described in the paper can be downloaded from https://www.industrydocumentslibrary.ucsf.edu/dataset. For more information, see https://www.industrydocuments.ucsf.edu/research-tools/api/.

Now that we have more or less cleaned the data, we need to run Textract. To work around the hard limits mentioned above, we create IAM users in AWS.

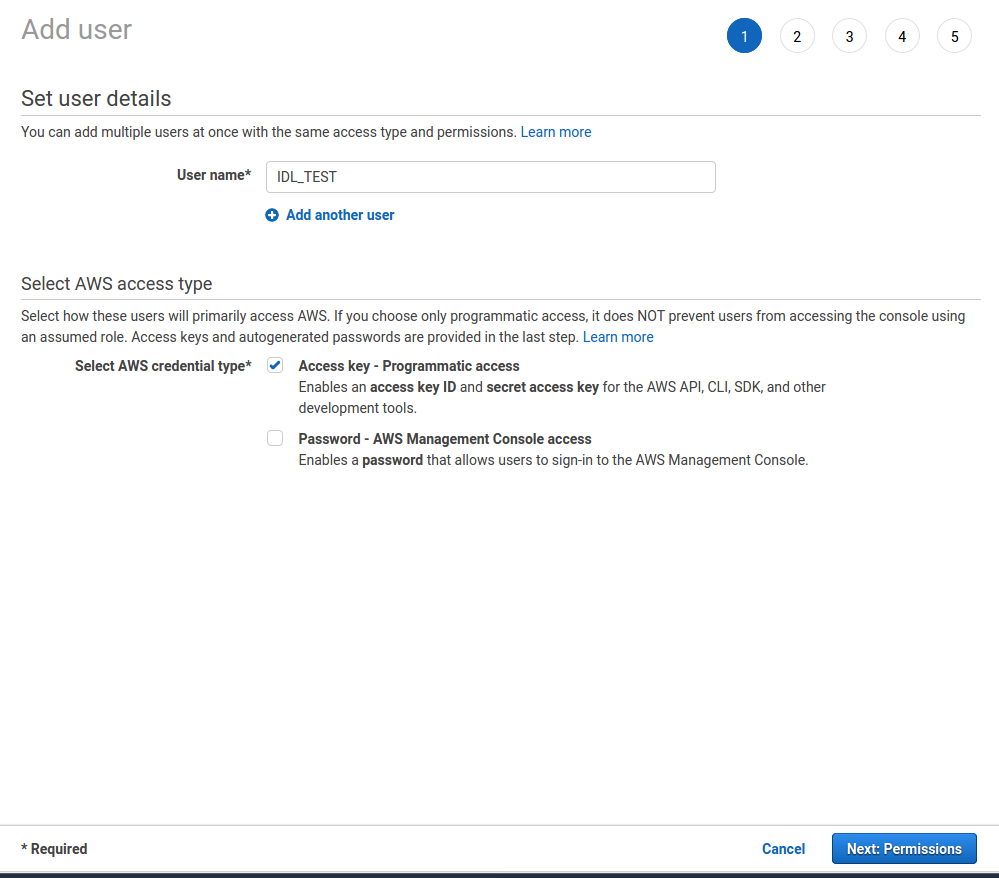

Sign in to AWS with your account, go to the IAM page, and click Users in the left panel. Then click Add users, enter the username, check the Access key - Programmatic access box, and click Next.

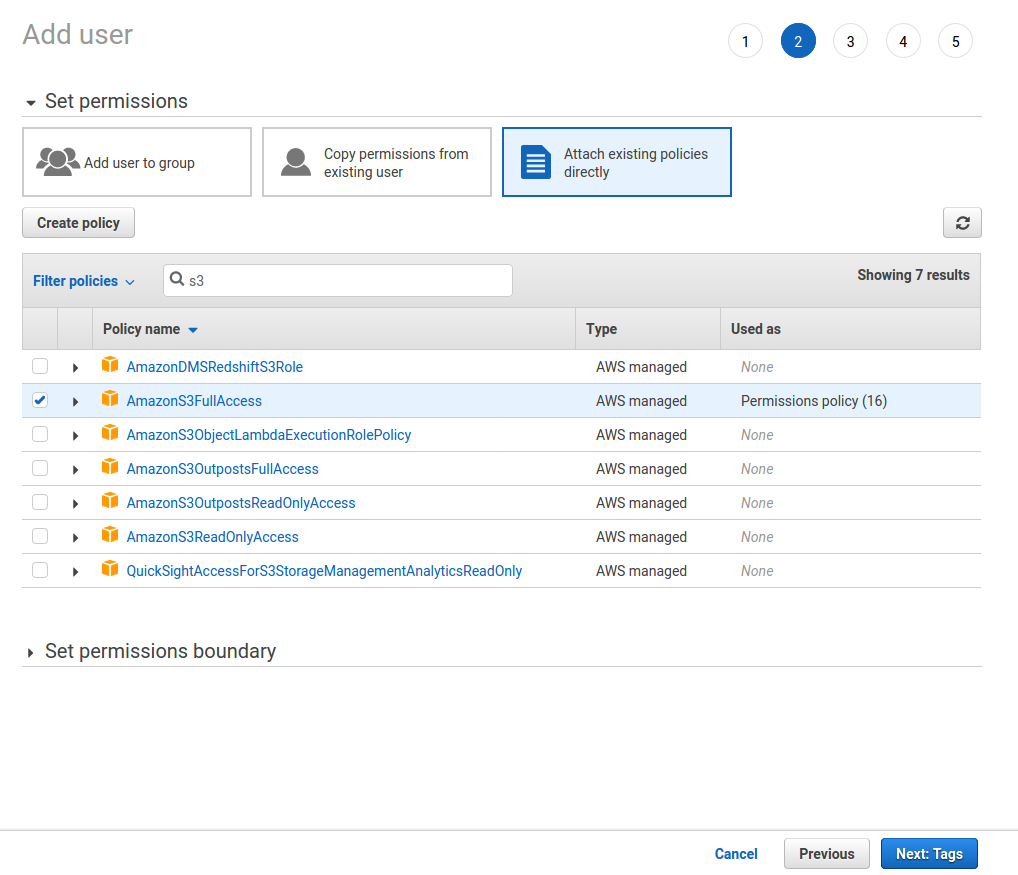

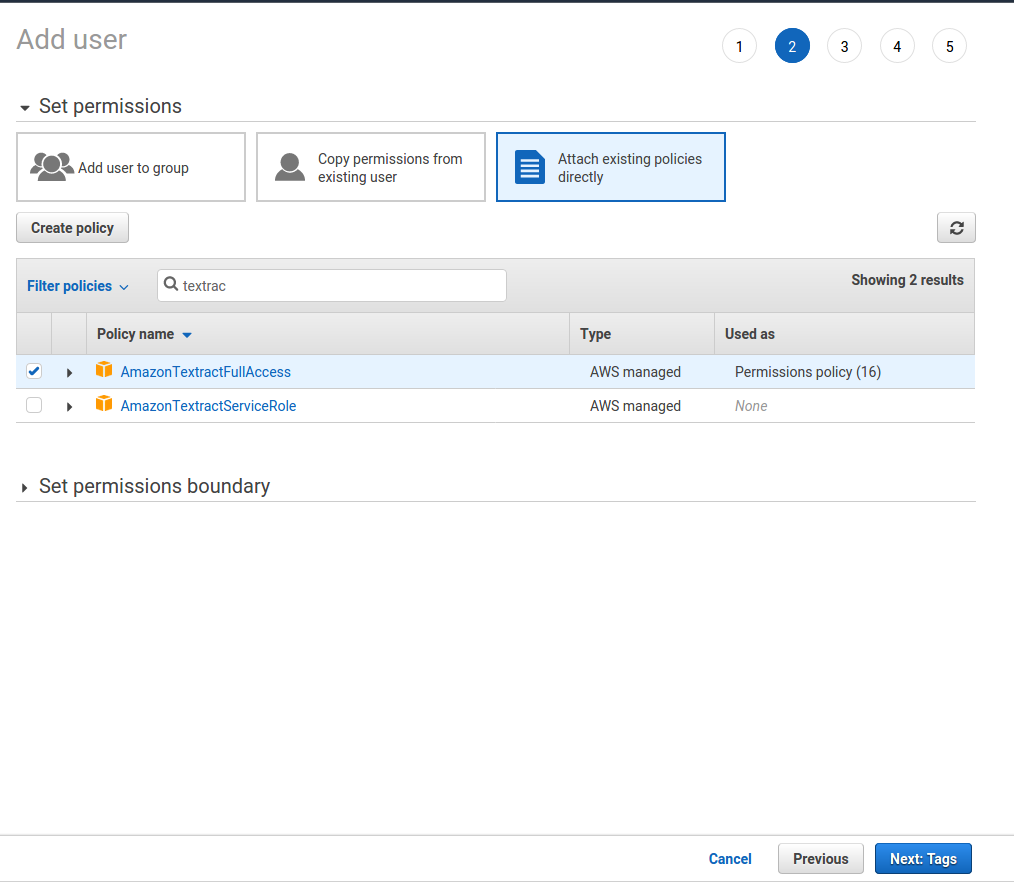

Then you need to attach the AmazonS3FullAccess and AmazonTextractFullAccess policies to these users.

| S3 Permission | Textract Permission |
|---|---|

Then click Next, Review, and Create user.

Once the user is created, AWS shows its Access key ID and Secret access key.

Save them and put them in the KEYS variable in run_textract.py.

Since KEYS is a list of tuples, the first element of each tuple should be the Access key ID and the second the Secret access key.
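Creating users through the console can also be scripted. The sketch below uses the boto3 IAM calls `create_user`, `attach_user_policy`, and `create_access_key`. It creates `n_users` users with the two managed policies above and returns their keys in the KEYS format, a list of (Access key ID, Secret access key) tuples. The user-name prefix is hypothetical. The client must be created with credentials that are allowed to manage IAM, e.g. `boto3.client("iam")` run as an administrator.

```python
from typing import Any, List, Tuple

# AWS managed policies named above.
POLICY_ARNS = (
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
    "arn:aws:iam::aws:policy/AmazonTextractFullAccess",
)


def create_textract_users(iam: Any, n_users: int,
                          prefix: str = "textract-worker") -> List[Tuple[str, str]]:
    """Create n_users IAM users with S3 + Textract access; return KEYS-style tuples."""
    keys = []
    for i in range(n_users):
        name = f"{prefix}-{i:02d}"  # hypothetical naming scheme
        iam.create_user(UserName=name)
        for arn in POLICY_ARNS:
            iam.attach_user_policy(UserName=name, PolicyArn=arn)
        access_key = iam.create_access_key(UserName=name)["AccessKey"]
        keys.append((access_key["AccessKeyId"], access_key["SecretAccessKey"]))
    return keys
```

Treat the returned secrets like passwords. AWS only reveals a secret access key once, when it is created.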

NOW, DO THIS 16 TIMES! No, I am not kidding. Since we want to parallelize the whole process, we create 16 IAM users, one user per core (or as many users as the number of cores you want to use). And then, well, you know it already:

```
python run_textract.py
```

PS: If at any point something happens and the code stops (which is bound to happen), fear not: just run the command again, and it will skip all the files it has already processed.
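The overall pattern works like this: each IAM key gets one shard of files, results are written into a tree that mirrors the IDL folders, and finished files are skipped on restart. The sketch below illustrates that pattern and is not run_textract.py. The repository lists joblib as a dependency; this version swaps in the standard library's `ThreadPoolExecutor`, which is adequate here because each worker mostly waits on network calls. `ocr_fn` is a hypothetical callback standing in for the Textract work, such as building a client from the key, submitting the PDF's S3 location, and waiting for the result. Each result is first written to a temporary file and then renamed. That way, an interrupted run never leaves behind a half-written JSON that a later run would wrongly skip.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Any, Callable, Dict, List, Sequence, Tuple

Key = Tuple[str, str]  # (Access key ID, Secret access key), as in KEYS
OcrFn = Callable[[Key, Path], Dict[str, Any]]  # hypothetical Textract wrapper


def shard(items: Sequence[Path], n: int) -> List[List[Path]]:
    """Split the work round-robin into n shards, one per IAM user."""
    return [list(items[i::n]) for i in range(n)]


def run_worker(key: Key, pdfs: List[Path], in_root: Path, out_root: Path, ocr_fn: OcrFn) -> int:
    """Process one shard with one key; skip PDFs whose output already exists."""
    processed = 0
    for pdf in pdfs:
        # Mirror the IDL folder structure in the output tree.
        out_file = (out_root / pdf.relative_to(in_root)).with_suffix(".json")
        if out_file.exists():
            continue  # finished in a previous run, so restarting is cheap
        result = ocr_fn(key, pdf)
        out_file.parent.mkdir(parents=True, exist_ok=True)
        tmp = out_file.parent / (out_file.name + ".tmp")
        tmp.write_text(json.dumps(result))
        tmp.replace(out_file)  # rename is atomic on the same filesystem
        processed += 1
    return processed


def run_all(keys: List[Key], in_root: str, out_root: str, ocr_fn: OcrFn) -> int:
    """Run one worker per key in parallel; return the number of newly processed PDFs."""
    in_path, out_path = Path(in_root), Path(out_root)
    shards = shard(sorted(in_path.rglob("*.pdf")), len(keys))
    with ThreadPoolExecutor(max_workers=len(keys)) as pool:
        futures = [pool.submit(run_worker, key, part, in_path, out_path, ocr_fn)
                   for key, part in zip(keys, shards)]
        return sum(f.result() for f in futures)
```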

Well, we all know why you are here, and it is certainly not the RAMBLING above. It is the data. The links to download the raw OCR annotations are here.

Since this can take a while (roughly 25 × 50 GB), you can first download a sample of the data here. That way, you can start looking at the data, understanding its structure, preparing your code, etc. This sample zip contains not only the OCR information but also the images, which are not included in the rest of the OCR files.

In addition, we processed the OCR information by keeping only the words and their bounding boxes for each document page. We arranged them in files of 500,000 pages each, resulting in 54 IMDB files. As these can also take some time to download, we provide a subset of IMDB samples, so you can work on your code while downloading the rest of the data.

Here is a small script to download and extract all the raw annotations:

```
wget http://datasets.cvc.uab.es/UCSF_IDL/index.txt
wget -i index.txt
# extract folder f, can be repeated for g,h,j,k
cat f.*| tar xvzf -
```

Each OCR file corresponds to a single document with all its pages. Amazon Textract provides detections at three levels: Page, Line, and Word. The detections are stored under the key Blocks, and each block's BlockType key gives its detection level. For each detection, you can find the Confidence, as well as the bounding box or polygon under the key Geometry. The bounding box is normalized and in the format (Left, Top, Width, Height). In addition, Textract provides relationships for each block that link the different hierarchical levels of words, lines, and pages. However, if you are interested in a particular level, you don't need to follow these relationships. You can just take the text of each block at the level you prefer and ignore the rest, because the same information is duplicated, only grouped in different ways.
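For example, you can read one OCR file and collect the text of a single level per page, as in the sketch below. It assumes each file is a JSON object with a top-level Blocks list, as described above. It also assumes each block carries the Page number that Textract's asynchronous API adds, and defaults to page 1 otherwise. In the output, BlockType values are upper case (PAGE, LINE, WORD). The helper names are hypothetical. The box conversion also yields the (Left, Top, Right, Bottom) format used by the IMDB files.

```python
import json
from collections import defaultdict
from typing import Any, Dict, List, Tuple

Box = Tuple[float, float, float, float]


def load_ocr(path: str) -> Dict[str, Any]:
    """Read one per-document OCR file (assumed to be a JSON object with 'Blocks')."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def ltwh_to_ltrb(bbox: Dict[str, float]) -> Box:
    """Textract BoundingBox (Left, Top, Width, Height) -> (Left, Top, Right, Bottom)."""
    left, top = bbox["Left"], bbox["Top"]
    return (left, top, left + bbox["Width"], top + bbox["Height"])


def text_by_page(doc: Dict[str, Any], level: str = "WORD") -> Dict[int, List[Tuple[str, Box, float]]]:
    """Group (text, normalized LTRB box, confidence) per page for LINE or WORD blocks."""
    pages = defaultdict(list)
    for block in doc["Blocks"]:
        if block["BlockType"] != level:
            continue  # other levels hold the same text, grouped differently
        box = ltwh_to_ltrb(block["Geometry"]["BoundingBox"])
        pages[block.get("Page", 1)].append((block["Text"], box, block["Confidence"]))
    return dict(pages)
```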

The IMDB file is specifically designed to work with the Facebook MMF framework (at least the Pythia version). Therefore, the first entry is the header, containing the version, split name, creation date, etc. Each following entry corresponds to a document page, with its detected text at word level and the normalized bounding boxes, this time in the (Left, Top, Right, Bottom) format.
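This sketch assumes the IMDB files are NumPy `.npy` object arrays, which is how Pythia's image database loader reads them. Under that assumption, the header and the page records can be separated as follows. This is a minimal sketch that deliberately assumes no field names inside the records. Inspect `header.keys()` and `pages[0].keys()` on the sample files first.

```python
from typing import Any, Dict, List, Tuple

import numpy as np


def load_imdb(path: str) -> Tuple[Dict[str, Any], List[Dict[str, Any]]]:
    """Split an MMF/Pythia-style IMDB into its header and its per-page records."""
    # Object arrays of dicts need allow_pickle=True; only load files you trust.
    imdb = np.load(path, allow_pickle=True)
    header, pages = imdb[0], list(imdb[1:])
    return header, pages
```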

Amazon's OCR engine Textract costs quite a lot of money: $1.50 per 1,000 pages for the first 1M pages, and $0.60 per 1,000 pages after that. In total, the whole annotation cost ~$18K. We are thankful to Amazon for the scholarship and fellowship. And special thanks to IDL for putting the data in S3 and making it public.
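The tiered pricing can be turned into a quick estimate. This sketch assumes the rates above and a single 1M-page tier boundary. AWS applies the tiers per month, so a run spread over several months would be billed differently. The sketch covers only the Textract page charge, not S3 or other AWS costs. For the ~26 million pages it gives about $16.5K, in the same ballpark as the ~$18K actually spent.

```python
def textract_cost_usd(pages: int, tier_pages: int = 1_000_000,
                      first_rate: float = 1.50, later_rate: float = 0.60) -> float:
    """Estimate the Textract page charge; rates are in USD per 1,000 pages."""
    first = min(pages, tier_pages)
    later = max(pages - tier_pages, 0)
    return (first * first_rate + later * later_rate) / 1000


print(f"${textract_cost_usd(26_000_000):,.0f}")  # prints $16,500
```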

Q: How much time did it take to get the data?

A: Around 1 month.

Q: Where did you store all the data in your local computer?

A: We had to buy a 14TB external drive.

Q: How many machines did you use?

A: Single machine, my university computer with 12 cores.

Q: Why are you doing this?

A: Science? Open Science? Am I crazy? I don't know.

Q: This data is nice but I don't have the resources to train with this data?

A: Not a question but I feel/know/am you!

Q: What is the licence?

A: WTFPL from my side. Check it from the IDL side.

More to be added...

To err is human.

```
@article{biten2022ocr,
  title={Ocr-idl: Ocr annotations for industry document library dataset},
  author={Biten, Ali Furkan and Tito, Ruben and Gomez, Lluis and Valveny, Ernest and Karatzas, Dimosthenis},
  journal={arXiv preprint arXiv:2202.12985},
  year={2022}
}
```