LMFlow


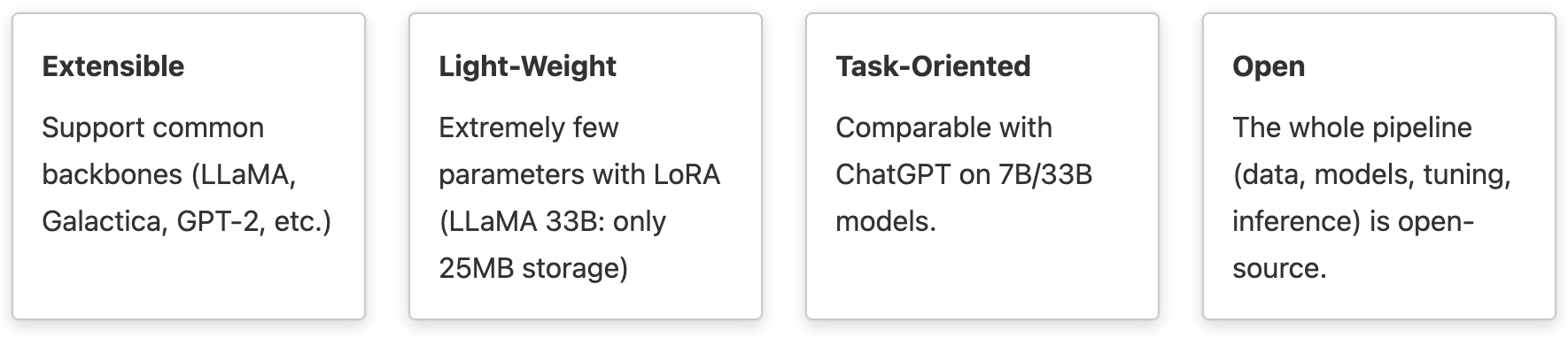

An extensible, convenient, and efficient toolbox for finetuning large machine learning models, designed to be user-friendly, speedy and reliable, and accessible to the entire community.

Latest News

- [2023-09-11] Support speculative decoding. Check out speculative_decoding for the usage and acceleration details.

- [2023-08-14] Support long context inference with position interpolation (Linear & NTK scaling) for LLaMA models. Check out position_interpolation for more details.

- [2023-08-07] Support Flash Attention-2. Check out flash_attention for more details.

- [2023-08-02] Support Llama2, ChatGLM2, and Baichuan models.

- [2023-07-23] 🚀 LMFlow multimodal chatbot is now available! Support multimodal inputs of images and texts. Online Demo is also provided. (We host the service on a single GPU, so you may sometimes see "queuing" or "application busy" when multiple users access it at the same time. If this happens, please wait and try again later.) 🚀

- [2023-06-22] LMFlow paper is out! Check out our implementation details at https://arxiv.org/abs/2306.12420

- [2023-06-16] Our finetuned Robin-33B-V2 scored an impressive 64.1 on the Hugging Face LLM leaderboard in our offline evaluation, outperforming major open-source LLMs! All checkpoints (7B, 13B, 33B, and 65B) are released! Check out the performance here.

- [2023-06-07] LMFlow is now officially available on PyPI! Install it with:

pip install lmflow-finetune

- [2023-05-30] Release Robin-13B-v2 and Robin-33B-v2!


- [2023-05-15] Release LMFlow-data, the training dataset of Robin-7B-v2. A new test set is also released.

- [2023-05-09] Release Robin-7B-v2, achieving competitive performance on chitchat, commonsense reasoning and instruction-following tasks. Refer to our comprehensive study.

- [2023-05-08] Release LMFlow Benchmark, an automatic evaluation framework for open-source chat-style LLMs. Benchmark results on 31 popular models are reported. Participate in LMFlow Benchmark.

- [2023-04-21] Release Robin-7B (based on LLaMA-7B), and two models for commercial use: Parakeets-2.7B (based on GPT-NEO-2.7B) and Cokatoo-7B (based on StableLM-7B). Download here.

- [2023-04-15] Inference: Support streaming output and ChatGLM.

- [2023-04-10] We propose a new alignment algorithm: Reward rAnked FineTuning (RAFT), which is more efficient than conventional (PPO-based) RLHF. [Paper]

- [2023-04-02] Web service is online!

- [2023-04-01] Release three instruction-tuned checkpoints and three medical checkpoints in model zoo: LLaMA-7B-tuned, LLaMA-13B-tuned, LLaMA-33B-tuned, LLaMA-7B-medical, LLaMA-13B-medical, and LLaMA-33B-medical.

- [2023-03-27] Support full tuning and LoRA tuning for all decoder models.

- [2023-03-27] Task-tuned model beats ChatGPT in the medical domain.

- [2023-03-27] Release code and checkpoints (version 0.0.1)! Our task-tuned model beats ChatGPT in the medical domain.


Quick Start

Setup

Our package has been fully tested on Linux (Ubuntu 20.04). Other platforms (macOS, Windows) are not fully tested, so you may encounter unexpected errors. We recommend trying it first on a Linux machine or in Google Colab.

CUDA versions 10.3-11.7 are supported in our stable branch v0.0.5. For CUDA versions above 11.7, please use our unstable main branch for now.

git clone -b v0.0.5 https://github.com/OptimalScale/LMFlow.git
cd LMFlow
conda create -n lmflow python=3.9 -y
conda activate lmflow
conda install mpi4py
bash install.sh

Prepare Dataset

Please refer to our dataset documentation.
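As a rough illustration of what a dataset file contains, the sketch below writes JSON in the "text_only" layout described in the LMFlow dataset docs: a "type" field plus a list of "instances", each with a "text" field. Treat the schema as an assumption to verify against the documentation for your version; the helper write_text_only_dataset and the file paths are hypothetical.

```python
import json
import os
import tempfile


def write_text_only_dataset(texts, path):
    """Write a list of strings as a "text_only" dataset file (assumed schema)."""
    dataset = {
        "type": "text_only",  # assumed dataset type name from the LMFlow docs
        "instances": [{"text": t} for t in texts],
    }
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(dataset, f, ensure_ascii=False, indent=2)
    return dataset


if __name__ == "__main__":
    # Hypothetical layout: one JSON file inside its own dataset directory.
    root = tempfile.mkdtemp()
    path = os.path.join(root, "my_dataset", "train", "train.json")
    write_text_only_dataset(["Hello, world.", "An LMFlow finetuning sample."], path)
    print(path)
```

The finetuning commands pass a directory such as data/alpaca/train to --dataset_path, so presumably you would place a file like this in its own directory and point --dataset_path at that directory.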

Finetuning (Full)

Full training updates all the parameters of a language model. Here is an example of finetuning a GPT-2 base model:

cd data && ./download.sh alpaca && cd -
./scripts/run_finetune.sh \
  --model_name_or_path gpt2 \
  --dataset_path data/alpaca/train \
  --output_model_path output_models/finetuned_gpt2

Finetuning (LoRA)

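LoRA (Low-Rank Adaptation) is a parameter-efficient finetuning algorithm: it freezes the pretrained weights and trains only small low-rank update matrices, which makes it more efficient than full finetuning.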
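To make the idea concrete, here is a minimal pure-Python sketch of a LoRA-adapted linear layer in the formulation of the original LoRA paper: the frozen weight W is augmented with a trainable low-rank product B A scaled by alpha / r, and B starts at zero so training begins exactly from the pretrained model. The class LoRALinear and its parameters are hypothetical illustrations, not the code LMFlow runs. The merged_weight method shows what merging LoRA into the original model amounts to: W' = W + (alpha / r) B A.

```python
import random


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B would be trained."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight          # frozen pretrained weight, shape (d_out, d_in)
        self.scale = alpha / rank
        # A: (rank, d_in) with small random values; B: (d_out, rank) all zeros,
        # so the adapted layer initially matches the pretrained layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def merged_weight(self):
        """Fold the low-rank update into W so inference needs a single matrix."""
        delta = matmul(self.B, self.A)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.weight, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

For a 4096 x 4096 weight with r = 8, LoRA trains 8 x (4096 + 4096) = 65,536 parameters instead of the 16,777,216 that full finetuning updates.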

cd data && ./download.sh alpaca && cd -

# Save the LoRA weights only
./scripts/run_finetune_with_lora.sh \
  --model_name_or_path facebook/galactica-1.3b \
  --dataset_path data/alpaca/train \
  --output_lora_path output_models/finetuned_galactica_lora

# Save the LoRA weights and merge them into the original model
./scripts/run_finetune_with_lora_save_aggregated_weights.sh \
  --model_name_or_path facebook/galactica-1.3b \
  --dataset_path data/alpaca/train \
  --output_model_path output_models/finetuned_galactica

Inference

After finetuning, you can run the following command to chat with the model.

./scripts/run_chatbot.sh output_models/finetuned_gpt2

Deployment

If you want to deploy your own model locally, we provide a Gradio-based UI for building chatbots. Running the following command will launch the demo for robin-7b:

pip install gradio
python ./examples/chatbot_gradio.py \
  --deepspeed configs/ds_config_chatbot.json \
  --model_name_or_path YOUR-LLAMA \
  --lora_model_path ./robin-7b \
  --prompt_structure "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.###Human: {input_text}###Assistant:" \
  --end_string "#" \
  --max_new_tokens 200

We have also hosted it on a Hugging Face Space.
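The --prompt_structure and --end_string options in the deployment command suggest how each turn is formatted and where a reply is cut off. The sketch below is an assumption about that behavior, not LMFlow's code: it substitutes the user's message for {input_text} and truncates the generated text at the first occurrence of the end string. Because turns are delimited by "###", stopping at "#" plausibly ends the reply before the model starts writing the next "###Human:" turn. The function names are hypothetical.

```python
PROMPT_STRUCTURE = (
    "A chat between a curious human and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the human's "
    "questions.###Human: {input_text}###Assistant:"
)
END_STRING = "#"


def build_prompt(user_input, prompt_structure=PROMPT_STRUCTURE):
    """Substitute the user's message into the template (assumed behavior)."""
    return prompt_structure.format(input_text=user_input)


def cut_at_end_string(generated, end_string=END_STRING):
    """Keep only the text generated before the first end_string."""
    index = generated.find(end_string)
    return generated if index == -1 else generated[:index]


if __name__ == "__main__":
    prompt = build_prompt("What is LoRA?")
    # Pretend the model continued past its answer into a new turn.
    raw_output = " A low-rank finetuning method.###Human: thanks"
    print(cut_at_end_string(raw_output))
```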

Evaluation

LMFlow Benchmark is an automatic evaluation framework for open-source large language models. We use negative log likelihood (NLL) as the metric to evaluate different aspects of a language model: chitchat, commonsense reasoning, and instruction following abilities.

You can directly run the LMFlow benchmark evaluation to obtain results and participate in the LLM comparison. For example, to evaluate GPT-2 XL, run:

./scripts/run_benchmark.sh --model_name_or_path gpt2-xl

The --model_name_or_path argument is required; you may set it to a Hugging Face model name or a local model path.

To view the evaluation results, check benchmark.log in ./output_dir/gpt2-xl_lmflow_chat_nll_eval, ./output_dir/gpt2-xl_all_nll_eval, and ./output_dir/gpt2-xl_commonsense_qa_eval.
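For reference, the NLL of a sequence x_1 ... x_T is -(1/T) times the sum over t of log p(x_t | x_<t), where p is the probability the model assigned to each observed token; lower values mean the model finds the text more likely. The sketch below computes it from per-token probabilities. How LMFlow Benchmark tokenizes, batches, and aggregates across samples is not shown here and may differ; sequence_nll is a hypothetical helper.

```python
import math


def sequence_nll(token_probs):
    """Average negative log-likelihood (in nats) of one sequence.

    token_probs[t] is the probability the model assigned to the t-th observed
    token, given the tokens before it.
    """
    if not token_probs:
        raise ValueError("token_probs must be non-empty")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


if __name__ == "__main__":
    confident = [0.9, 0.8, 0.95]   # model assigns high probability to each token
    unsure = [0.3, 0.2, 0.4]
    print(sequence_nll(confident) < sequence_nll(unsure))  # True: lower NLL is better
```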

Supported Features

Finetune Acceleration & Memory Optimization


LoRA

LoRA is a parameter-efficient finetuning algorithm and is more efficient than full finetuning. Check out finetuning-lora for more details.


FlashAttention

LMFlow supports both FlashAttention-1 and the latest FlashAttention-2. Check out flash_attention for more details.
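The central trick behind FlashAttention, as described in the FlashAttention paper, is to process keys and values in tiles while keeping a running maximum and a running softmax denominator (an "online softmax"), so the full attention matrix never has to be materialized. The pure-Python sketch below shows that recurrence for a single query and can be checked against naive attention. It omits everything that makes the real kernels fast (GPU SRAM tiling, batching over queries and heads, the backward pass), and block_size is a hypothetical parameter.

```python
import math


def _scores(q, keys):
    """Scaled dot-product scores q . k / sqrt(d) for each key."""
    d = len(q)
    return [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]


def naive_attention(q, keys, values):
    """Reference: materialize all scores, softmax them, then average the values."""
    scores = _scores(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def tiled_attention(q, keys, values, block_size=2):
    """Same result, visiting keys/values one block at a time (online softmax)."""
    running_max = float("-inf")
    running_sum = 0.0                  # sum of exp(score - running_max) so far
    acc = [0.0] * len(values[0])       # unnormalized weighted sum of values
    for start in range(0, len(keys), block_size):
        block_scores = _scores(q, keys[start:start + block_size])
        new_max = max(running_max, max(block_scores))
        # Rescale everything accumulated under the old maximum.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(block_scores, values[start:start + block_size]):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]
```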


Gradient Checkpointing

Gradient checkpointing is a memory optimization technique that trades compute for memory: instead of storing every intermediate activation, it recomputes them during the backward pass. It is useful when the model is too large to fit into GPU memory. To enable it, add --gradient_checkpointing to your training command.
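The pure-Python sketch below illustrates the trade-off on a chain of toy layers: the forward pass keeps only every k-th activation, and any other activation is recomputed from the nearest stored one when the backward pass needs it. It is a conceptual illustration with hypothetical function names, not how PyTorch or LMFlow implement checkpointing.

```python
def forward_with_checkpoints(x, layers, every):
    """Run `layers` in order, keeping only the input and every `every`-th activation."""
    checkpoints = {0: x}  # activation index -> stored value
    h = x
    for i, layer in enumerate(layers, start=1):
        h = layer(h)
        if i % every == 0:
            checkpoints[i] = h
    return h, checkpoints


def recompute_activation(i, layers, checkpoints):
    """Rebuild activation i (the output of layer i) from the nearest earlier checkpoint.

    Returns the activation and how many layer calls were needed to recompute it.
    """
    start = max(c for c in checkpoints if c <= i)
    h = checkpoints[start]
    for layer in layers[start:i]:
        h = layer(h)
    return h, i - start


if __name__ == "__main__":
    # Ten toy "layers"; each maps h to 2 * h + a for a different constant a.
    layers = [lambda h, a=a: 2 * h + a for a in range(10)]
    out, ckpts = forward_with_checkpoints(1, layers, every=4)
    print(sorted(ckpts))  # -> [0, 4, 8]: only 3 of the 11 activations are kept
```

Keeping every k-th of n activations stores about n/k of them, at the cost of recomputing up to k - 1 layers per segment; choosing k near the square root of n is the classic balance between the two.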

DeepSpeed ZeRO-3

LMFlow supports DeepSpeed ZeRO-3 Offload. We provide an example DeepSpeed config that you can use directly.
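For orientation, a ZeRO-3 config with CPU offloading typically looks like the dictionary built below. This is a minimal sketch using standard DeepSpeed config keys, not a copy of the example config LMFlow ships; the "auto" values assume the Hugging Face Trainer integration, which fills them in from the training arguments. The function name is hypothetical.

```python
import json


def zero3_offload_config(offload_device="cpu"):
    """Minimal ZeRO-3 config that offloads optimizer states and parameters."""
    return {
        "zero_optimization": {
            "stage": 3,  # partition optimizer states, gradients, and parameters
            "offload_optimizer": {"device": offload_device, "pin_memory": True},
            "offload_param": {"device": offload_device, "pin_memory": True},
            "overlap_comm": True,
            "contiguous_gradients": True,
        },
        # "auto" is resolved by the Hugging Face Trainer integration (assumed here).
        "train_micro_batch_size_per_gpu": "auto",
        "gradient_accumulation_steps": "auto",
        "bf16": {"enabled": "auto"},
    }


if __name__ == "__main__":
    print(json.dumps(zero3_offload_config(), indent=2))
```

You could write such a dictionary to a JSON file and presumably pass its path via --deepspeed, as the deployment command does with configs/ds_config_chatbot.json.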

Inference Acceleration


LLaMA Inference on CPU

Thanks to the great efforts of llama.cpp, it is possible for everyone to run their LLaMA models on CPU with 4-bit quantization. We provide a script to convert LLaMA LoRA weights to .pt files. You then only need to use convert-pth-to-ggml.py in llama.cpp to perform quantization.
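To show what 4-bit quantization does to the weights, here is a simplified blockwise scheme: each block of weights stores one float scale (its largest magnitude divided by 7) and a 4-bit signed integer per weight. This is a generic illustration under that assumption; it is not the exact ggml/llama.cpp quantization format, and the function names are hypothetical.

```python
def quantize_block(values):
    """Map one block of floats to 4-bit signed ints in [-8, 7] plus a single scale."""
    amax = max(abs(v) for v in values)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return q, scale


def dequantize_block(q, scale):
    """Recover approximate floats; each is off by at most scale / 2."""
    return [qi * scale for qi in q]


def quantize(weights, block_size=32):
    """Split a flat weight list into blocks and quantize each independently."""
    return [quantize_block(weights[i:i + block_size])
            for i in range(0, len(weights), block_size)]
```

Each weight then costs 4 bits plus a share of its block's scale, instead of 16 or 32 bits, which is what makes CPU inference on large models practical.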

FlashAttention

LMFlow supports both FlashAttention-1 and the latest FlashAttention-2. Check out flash_attention for more details.

Long Context


Position Interpolation for LLaMA Models

LMFlow now supports the latest Linear and NTK (Neural Tangent Kernel) scaling techniques for LLaMA models. Check out position_interpolation for more details.
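Both techniques modify the rotary position embedding (RoPE) used by LLaMA, where dimension pair i of a head with dimension d rotates by position x base^(-2i/d), with base 10000. The sketch below contrasts them under the commonly cited formulas: linear interpolation divides the position by the scale factor s, while NTK-aware scaling leaves positions alone and enlarges the base to base x s^(d/(d-2)), which keeps the highest frequency intact and interpolates the lowest one. LMFlow's implementation may differ in details (for example, dynamic variants), and the function names are hypothetical.

```python
def rope_angles(position, dim, base=10000.0):
    """RoPE rotation angles for one position: position * base**(-2i/dim), i = 0 .. dim/2 - 1."""
    return [position * base ** (-2 * i / dim) for i in range(dim // 2)]


def linear_interpolated_angles(position, dim, scale, base=10000.0):
    """Linear position interpolation: divide the position by `scale` before rotating."""
    return rope_angles(position / scale, dim, base)


def ntk_scaled_angles(position, dim, scale, base=10000.0):
    """NTK-aware scaling: keep the position, enlarge the base instead."""
    ntk_base = base * scale ** (dim / (dim - 2))
    return rope_angles(position, dim, ntk_base)


if __name__ == "__main__":
    # Example: head dimension 128, stretching a 2048-token context to 4096 (scale = 2).
    lin = linear_interpolated_angles(4096, 128, 2.0)
    ntk = ntk_scaled_angles(4096, 128, 2.0)
    print(lin[0], ntk[0])    # highest frequency: halved vs. unchanged
    print(lin[-1], ntk[-1])  # lowest frequency: both interpolated
```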

Model Customization


Vocabulary Extension

You can now train your own SentencePiece tokenizer and merge it with the model's original Hugging Face tokenizer. Check out vocab_extension for more details.
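Conceptually, merging a newly trained tokenizer into an existing one means keeping every original token at its original id, so the pretrained embeddings still line up, and appending only the tokens the original vocabulary lacks. The sketch below shows that rule on plain Python dicts. It is a simplified assumption that ignores SentencePiece piece scores and model files, and merge_vocab is a hypothetical helper, not LMFlow's vocab_extension script.

```python
def merge_vocab(original, new_tokens):
    """Append tokens from a newly trained tokenizer that the original vocabulary lacks.

    original: dict mapping token -> id, with ids 0 .. n-1.
    new_tokens: iterable of token strings from the new tokenizer.
    Existing ids are preserved; new tokens get ids n, n+1, ...
    """
    merged = dict(original)
    next_id = len(merged)
    added = []
    for tok in new_tokens:
        if tok not in merged:
            merged[tok] = next_id
            next_id += 1
            added.append(tok)
    return merged, added
```

After extending the vocabulary, the model's embedding matrix must grow to match; in Hugging Face Transformers this is done with model.resize_token_embeddings(len(tokenizer)), and the new rows then need to be learned during finetuning.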

Multimodal


Multimodal Chatbot

LMFlow supports multimodal inputs of images and texts. Check out our LMFlow multimodal chatbot. Online Demo is also provided.

Support

If you need any help, please submit a GitHub issue.

License

The code included in this project is licensed under the Apache 2.0 license. If you wish to use the code and models included in this project for commercial purposes, please sign this document to obtain authorization.

Citation

If you find this repository useful, please consider giving ⭐ and citing our paper:

@article{diao2023lmflow,
  title={Lmflow: An extensible toolkit for finetuning and inference of large foundation models},
  author={Diao, Shizhe and Pan, Rui and Dong, Hanze and Shum, Ka Shun and Zhang, Jipeng and Xiong, Wei and Zhang, Tong},
  journal={arXiv preprint arXiv:2306.12420},
  year={2023}
}

@article{dong2023raft,
  title={Raft: Reward ranked finetuning for generative foundation model alignment},
  author={Dong, Hanze and Xiong, Wei and Goyal, Deepanshu and Pan, Rui and Diao, Shizhe and Zhang, Jipeng and Shum, Kashun and Zhang, Tong},
  journal={arXiv preprint arXiv:2304.06767},
  year={2023}
}