DenoiseLoc: Boundary Denoising for Video Activity Localization, ICLR 2024

Mengmeng Xu, Mattia Soldan, Jialin Gao, Shuming Liu, Juan-Manuel Pérez-Rúa, Bernard Ghanem

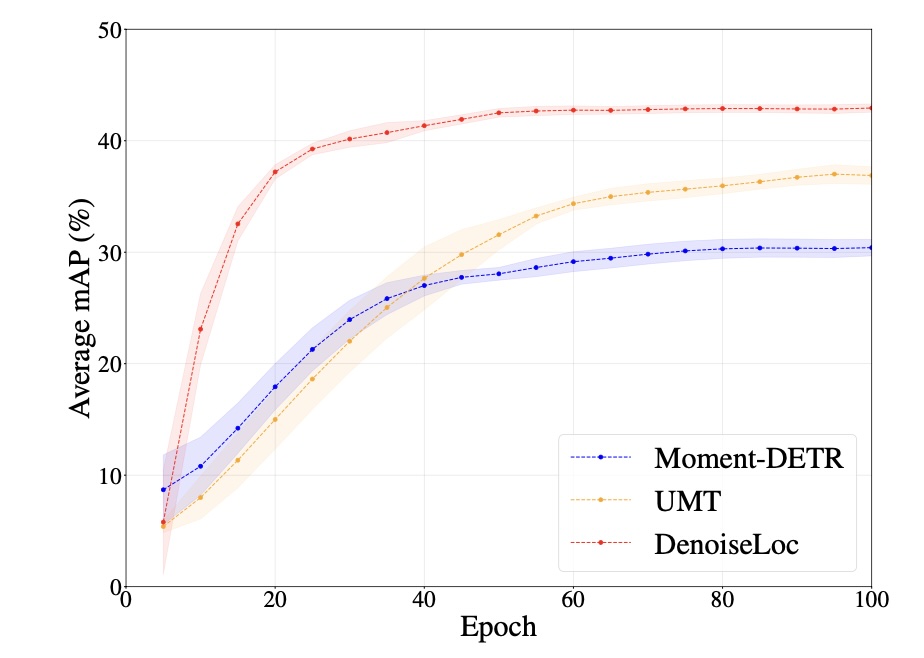

This repo hosts the original code of our DenoiseLoc work, along with a copy of the QVHighlights dataset for moment retrieval and highlight detection. DenoiseLoc is an encoder-decoder model that tackles video activity localization from a denoising perspective. During training, a set of action spans is randomly generated from the ground truth with a controlled noise scale. Inference reverses this process by boundary denoising, which allows the localizer to predict activities with precise boundaries and leads to faster convergence. This code works for the QVHighlights dataset, where we observe a gain of +12.36% average mAP over the baseline.
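To make the training-time noising step concrete, here is a minimal sketch of generating noisy spans from ground-truth spans. It is an illustration only, not the code in this repo: the function name `noise_spans`, the parameters `noise_scale` and `num_copies`, and the choice of uniform jitter proportional to span length are all assumptions made for this example.

```python
import random


def noise_spans(gt_spans, noise_scale, num_copies=2, seed=None):
    """Return jittered copies of ground-truth (start, end) spans.

    Hypothetical helper for illustration. Spans are assumed to be
    normalized to [0, 1]. Each boundary is shifted by a uniform offset
    of at most noise_scale * span_length, then clamped to [0, 1].
    """
    rng = random.Random(seed)
    noised = []
    for start, end in gt_spans:
        length = end - start
        for _ in range(num_copies):
            # Independent offsets for the two boundaries (assumed noise model).
            ds = rng.uniform(-1.0, 1.0) * noise_scale * length
            de = rng.uniform(-1.0, 1.0) * noise_scale * length
            s = min(max(start + ds, 0.0), 1.0)
            e = min(max(end + de, 0.0), 1.0)
            # Keep start <= end even if the offsets cross.
            noised.append((min(s, e), max(s, e)))
    return noised


if __name__ == "__main__":
    gt = [(0.20, 0.45), (0.60, 0.90)]
    for span in noise_spans(gt, noise_scale=0.2, seed=0):
        print(span)
```

In this sketch, a larger `noise_scale` gives the model harder spans to refine back toward the ground truth, while `noise_scale=0` reproduces the ground truth exactly.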

The code is developed on top of Moment-DETR. We keep changes minimal for simplicity but make the necessary adaptations for clarity. The official data and evaluation tools are kept in the folders data and standalone_eval, respectively.

- Clone this repo

git clone https://github.com/frostinassiky/denoise_loc.git

cd denoise_loc

- Prepare feature files

Download the feature file (8GB) moment_detr_features.tar.gz; instructions can be found in the Moment-DETR repository. Then extract it under the project root directory:

tar -xf path/to/moment_detr_features.tar.gz

The features are extracted using Linjie's HERO_Video_Feature_Extractor.

If you have any issues with the feature file, please email us for alternative solutions.

- Install dependencies

This code requires Python 3.7, PyTorch, and a few other Python libraries. We recommend creating a conda environment and installing all the dependencies as follows:

# create conda env

conda create --name denoise_loc python=3.7

# activate env

conda activate denoise_loc

# install pytorch with CUDA 11.6

conda install pytorch torchvision pytorch-cuda=11.6 -c pytorch -c nvidia

# install other python packages

pip install tqdm ipython easydict tensorboard tabulate scikit-learn pandas

# compile and install nms1d and align1d

cd lib/align1d

python setup.py install

cd ../nms1d

python setup.py install

The PyTorch version we tested is 1.9.0.
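For readers unfamiliar with what a 1D NMS step does, the following pure-Python sketch shows greedy non-maximum suppression over temporal spans. It illustrates the general technique only and is an assumption about the role of the compiled nms1d extension; the function names `temporal_iou` and `nms_1d` and the `iou_threshold` parameter are hypothetical and do not reflect the extension's actual interface.

```python
def temporal_iou(a, b):
    """Intersection-over-union of two (start, end) spans."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def nms_1d(spans, scores, iou_threshold=0.5):
    """Greedy 1D NMS: return indices of kept spans, highest score first.

    Hypothetical reference implementation for illustration; a span is
    dropped if it overlaps an already kept span by more than iou_threshold.
    """
    order = sorted(range(len(spans)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(temporal_iou(spans[i], spans[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    spans = [(0.0, 10.0), (1.0, 11.0), (20.0, 30.0)]
    scores = [0.9, 0.8, 0.7]
    print(nms_1d(spans, scores))
```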

Training can be launched by running the following batch script:

sbatch slurm/trainval_v100_snr.sh

Alternatively, you may revise the original training script and run it locally:

bash moment_detr/scripts/train.sh

For more configurable options, please check out the config file moment_detr/config.py.

Once the model is trained, you can use the following command for inference:

bash moment_detr/scripts/inference.sh CHECKPOINT_PATH SPLIT_NAME

where CHECKPOINT_PATH is the path to the saved checkpoint and SPLIT_NAME is the split to run inference on, either val or test.

We provide the results in Google Drive.

Since our project was developed from Moment-DETR, please refer to their codebase for:

- Pretraining and Finetuning

- Evaluation and Codalab Submission

- Train Moment-DETR on your dataset

- Run predictions on your videos and queries

This code is based on Moment-DETR, and the implementation draws on G-TAD and DiffusionDet. We thank the authors for their awesome open-source contributions. We also thank @HSPUPIL for sharing the results and verifying the reproducibility.

The annotation files are under the CC BY-NC-SA 4.0 license; see ./data/LICENSE. All code is under the MIT license; see LICENSE.