ETL pipeline for a datawarehouse (DWH) hosted on Redshift AWS

- AWS/ used to create aws infrastucture read more on section Creating Infrastucture

- sql_queries.py: contains all sql queries

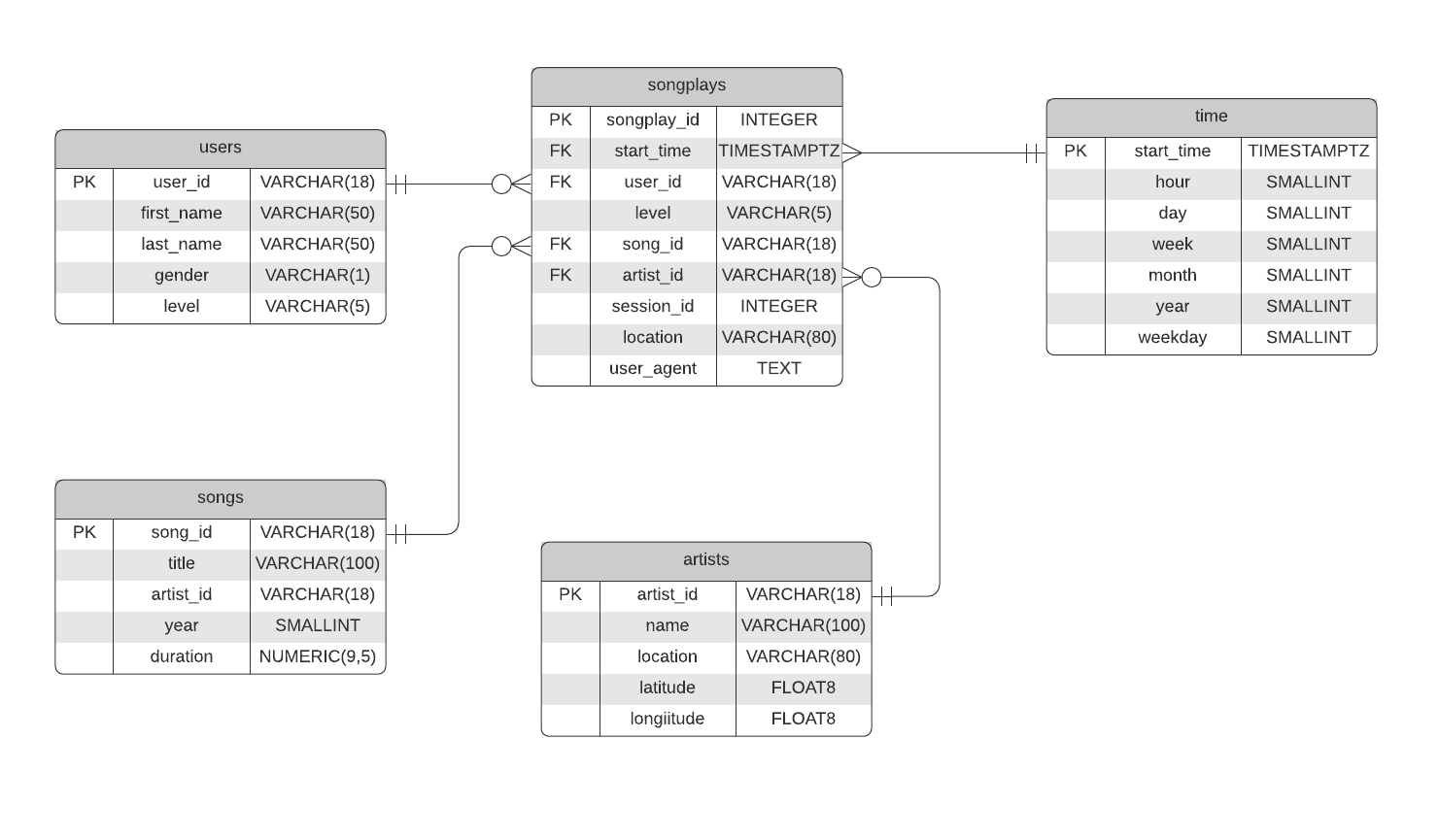

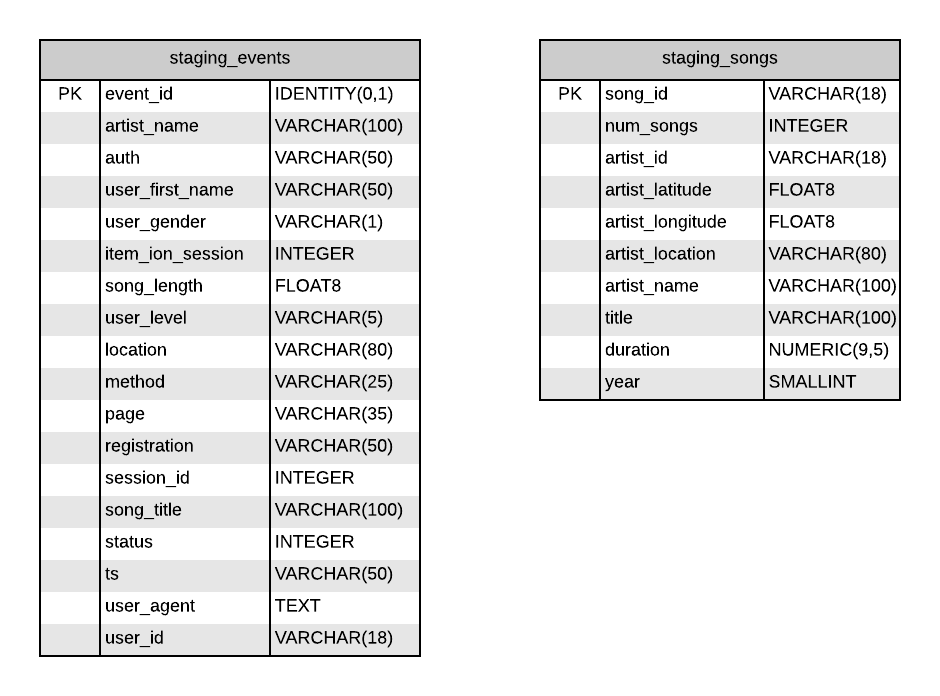

- create_tables.py: Create all tables. Read more on details about the tables schema on section Schemas

- etl.py: Run ETL pipeline. The pipeline read data from s3 (schema format on subsection Data in s3), and converts the data to a Star Schema format (schema format on subsection *schema format on subsection Datawarehouse Schema)

- load_s3.py: Upload

*.jsondata on folderdata/to s3. - dwg.cfg: Config file

We are using aws as environment variables in this repo:

export AWS_ACCESS_KEY_ID=<YOUR_AWS_ACCESS_KEY_ID>

export AWS_SECRET_ACCESS_KEY=<YOUR_AWS_SECRET_ACCESS_KEY>

export AWS_DEFAULT_REGION=<YOUR_REGION>Create IAM roles for Redshift, run file: iam.py. It will create a role for Redshift with s3 Read access

Creates Redshift cluster and add Ingress rule for TCP connection on port 5439. Run file redshift.py

Upload all dataset to s3. Run load_s3.py

This data will pass trough an ETL to be stored in Redshift

Fill out the dwh.cfg with the desired redshift confirguration, s3 and IAM role

Once you have all the infraestructure ready. Run the commands:

- Run

python create_tables.py - Run

python etl.py