This repository contains the official PyTorch implementation for the paper:

Frederic Z. Zhang, Dylan Campbell, Stephen Gould; Efficient Two-Stage Detection of Human-Object Interactions With a Novel Unary-Pairwise Transformer; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 20104-20112.

[project page] [paper] [video] [preprint]

...

However, the success of such one-stage HOI detectors can largely be attributed to the representation power of transformers. We discovered that when equipped with the same transformer, their two-stage counterparts can be more performant and memory-efficient, while taking a fraction of the time to train. In this work, we propose the Unary–Pairwise Transformer, a two-stage detector that exploits unary and pairwise representations for HOIs. We observe that the unary and pairwise parts of our transformer network specialise, with the former preferentially increasing the scores of positive examples and the latter decreasing the scores of negative examples. We evaluate our method on the HICO-DET and V-COCO datasets, and significantly outperform state-of-the-art approaches. At inference time, our model with ResNet50 approaches real-time performance on a single GPU.
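
As a rough illustration of the two-stage idea, the detector's outputs are first split into humans and other instances, and pairwise representations are then built for candidate human-object pairs. The framework-free sketch below shows only the pair enumeration step. It is not the repository's implementation, and the label index `PERSON = 0` is a hypothetical convention chosen for the example.

```python
from itertools import product
from typing import List, Sequence, Tuple

PERSON = 0  # hypothetical label index for the "person" class


def enumerate_pairs(labels: Sequence[int]) -> List[Tuple[int, int]]:
    """Return (human_index, object_index) pairs over a list of detections.

    Every detected human is paired with every other detection, including
    other humans; a detection is never paired with itself.
    """
    humans = [i for i, label in enumerate(labels) if label == PERSON]
    return [(h, o) for h, o in product(humans, range(len(labels))) if h != o]


# Two people (indices 0 and 2) and two objects (indices 1 and 3).
print(enumerate_pairs([0, 5, 0, 3]))
# [(0, 1), (0, 2), (0, 3), (2, 0), (2, 1), (2, 3)]
```

The number of pairs grows with the product of the number of humans and the number of detections, which is one reason why filtering low-confidence detections beforehand matters in practice.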

We provide weights for UPT models pre-trained on HICO-DET and V-COCO for potential downstream applications. We also provide weights for fine-tuned DETR models to facilitate reproducibility. To fine-tune the DETR model yourself, refer to this repository.

| Model | Dataset | Default Settings (Full, Rare, Non-rare mAP) | Inference | UPT Weights | DETR Weights |
|---|---|---|---|---|---|
| UPT-R50 | HICO-DET | (31.66, 25.94, 33.36) | 0.042s | weights | weights |
| UPT-R101 | HICO-DET | (32.31, 28.55, 33.44) | 0.061s | weights | weights |
| UPT-R101-DC5 | HICO-DET | (32.62, 28.62, 33.81) | 0.124s | weights | weights |
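
The three numbers under Default Settings follow the usual HICO-DET protocol: mAP over all 600 interaction classes (Full), over the 138 classes with fewer than 10 training instances (Rare), and over the remaining 462 classes (Non-rare). A minimal sketch of how such a summary can be formed from per-class AP values; the function name and input format are illustrative assumptions, not part of this repository.

```python
from statistics import mean
from typing import Dict, Tuple


def hicodet_summary(ap: Dict[int, float], train_counts: Dict[int, int],
                    rare_threshold: int = 10) -> Tuple[float, float, float]:
    """Return (full, rare, non_rare) mAP from per-class average precision.

    `ap` maps each interaction class to its AP; `train_counts` maps each class
    to its number of training instances. Classes with fewer than
    `rare_threshold` training instances count as rare.
    """
    rare = [ap[c] for c in ap if train_counts[c] < rare_threshold]
    non_rare = [ap[c] for c in ap if train_counts[c] >= rare_threshold]
    return mean(ap.values()), mean(rare), mean(non_rare)


# Toy example with four classes instead of the real 600.
full, rare, non_rare = hicodet_summary(
    ap={0: 0.5, 1: 0.3, 2: 0.1, 3: 0.7},
    train_counts={0: 100, 1: 5, 2: 3, 3: 50},
)
print(round(full, 2), round(rare, 2), round(non_rare, 2))  # 0.4 0.2 0.6
```

The values in the table are reported as percentages, i.e. these fractions multiplied by 100.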

| Model | Dataset | Scenario 1 | Scenario 2 | Inference | UPT Weights | DETR Weights |
|---|---|---|---|---|---|---|
| UPT-R50 | V-COCO | 59.0 | 64.5 | 0.043s | weights | weights |
| UPT-R101 | V-COCO | 60.7 | 66.2 | 0.064s | weights | weights |
| UPT-R101-DC5 | V-COCO | 61.3 | 67.1 | 0.131s | weights | weights |

The inference speed was benchmarked on a GeForce RTX 3090. Note that the UPT weights already include those of the detector (DETR), so you do not need to download the DETR weights unless you want to train the UPT model from scratch. Training UPT-R50 with 8 GeForce GTX TITAN X GPUs takes around 5 hours on HICO-DET and 40 minutes on V-COCO, almost a tenth of the time required by one-stage models such as QPIC.
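
The per-image times in the tables above are averages measured on a single GPU. The sketch below shows one common way to take such a measurement. It assumes warm-up runs and synchronisation before reading the clock, is not necessarily the procedure behind the reported numbers, and `mean_latency` is a hypothetical helper.

```python
import time
from typing import Callable, Sequence


def mean_latency(fn: Callable[[object], object], inputs: Sequence[object],
                 warmup: int = 3,
                 sync: Callable[[], None] = lambda: None) -> float:
    """Average wall-clock seconds per call of `fn` over `inputs`.

    Up to `warmup` inputs are run once beforehand so that one-off costs
    (e.g. lazy initialisation, memory allocation) are not timed. When timing
    GPU code, pass `sync=torch.cuda.synchronize`, because CUDA kernels run
    asynchronously and would otherwise be under-counted.
    """
    for x in inputs[:warmup]:
        fn(x)
    sync()
    start = time.perf_counter()
    for x in inputs:
        fn(x)
    sync()
    return (time.perf_counter() - start) / len(inputs)


# Stand-in workload: each "inference" sleeps for 10 ms.
print(f"{mean_latency(lambda _: time.sleep(0.01), [None] * 5):.3f}s per image")
```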

For general inquiries regarding the paper and code, please post them in Discussions. For bug reports and feature requests, please post them in Issues. You can also contact me at frederic.zhang@anu.edu.au.

- Install the lightweight deep learning library Pocket. The recommended PyTorch version is 1.9.0. Make sure the environment for Pocket is activated (`conda activate pocket`), and install the packaging library with `pip install packaging`.
- Download the repository and the submodules.

      git clone https://github.com/fredzzhang/upt.git
      cd upt
      git submodule init
      git submodule update

- Prepare the HICO-DET dataset.
  - If you have not downloaded the dataset before, run the following script.

        cd /path/to/upt/hicodet
        bash download.sh

  - If you have previously downloaded the dataset, simply create a soft link.

        cd /path/to/upt/hicodet
        ln -s /path/to/hicodet_20160224_det ./hico_20160224_det

- Prepare the V-COCO dataset (contained in MS COCO).
  - If you have not downloaded the dataset before, run the following script.

        cd /path/to/upt/vcoco
        bash download.sh

  - If you have previously downloaded the dataset, simply create a soft link.

        cd /path/to/upt/vcoco
        ln -s /path/to/coco ./mscoco2014
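
Before running inference or training, it can help to confirm that both datasets are reachable from the repository. A minimal sketch, assuming the directory names used in the soft-link commands of the setup steps; `check_datasets` is a hypothetical helper, not a script shipped with the repository.

```python
from pathlib import Path
from typing import Dict

# Directory names taken from the setup steps, relative to the repository root.
EXPECTED_DATASETS = {
    "HICO-DET": Path("hicodet") / "hico_20160224_det",
    "V-COCO (MS COCO)": Path("vcoco") / "mscoco2014",
}


def check_datasets(repo_root: str) -> Dict[str, bool]:
    """Report whether each expected dataset directory exists.

    Path.is_dir() follows symbolic links, so a valid soft link counts as
    present while a dangling one does not.
    """
    root = Path(repo_root)
    return {name: (root / rel).is_dir() for name, rel in EXPECTED_DATASETS.items()}


if __name__ == "__main__":
    for name, ok in check_datasets("/path/to/upt").items():
        print(f"{name}: {'found' if ok else 'missing'}")
```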

UPT is released under the BSD-3-Clause License.

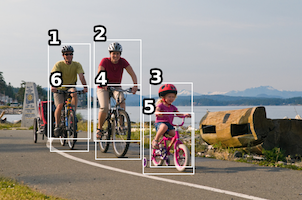

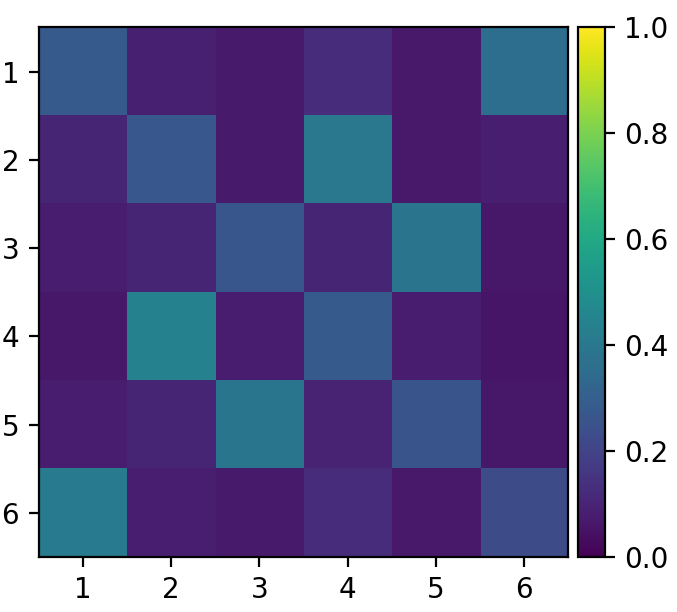

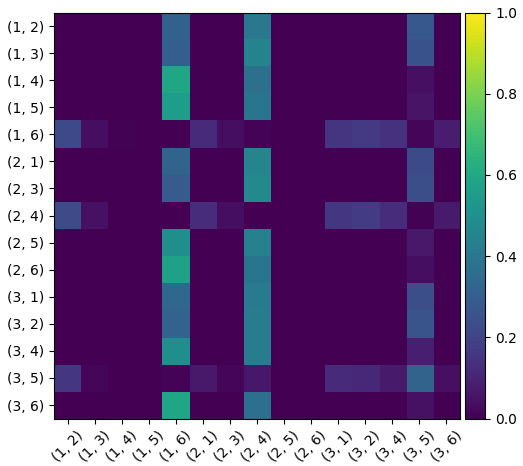

We have implemented inference utilities with different visualisation options. Provided you have downloaded the model weights to `checkpoints/`, run the following command to visualise detected instances together with the attention maps from the cooperative and competitive layers. Use the flag `--index` to select images, and `--box-score-thresh` to modify the filtering threshold on object boxes.

    python inference.py --resume checkpoints/upt-r50-hicodet.pt --index 8789

Here is the sample output. Note that we manually selected some informative attention maps to display. The script also prints the predicted scores for each action.
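
Conceptually, a box-score threshold discards low-confidence object detections, which in turn reduces the number of human-object pairs to score and display. The sketch below illustrates only that filtering step; `filter_boxes` is a hypothetical helper, and whether `inference.py` keeps scores exactly equal to the threshold is an assumption.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def filter_boxes(boxes: Sequence[Box], scores: Sequence[float],
                 thresh: float) -> List[Tuple[Box, float]]:
    """Keep detections whose confidence score is at least `thresh`."""
    return [(box, score) for box, score in zip(boxes, scores) if score >= thresh]


detections = filter_boxes(
    boxes=[(0, 0, 50, 120), (40, 60, 90, 100), (10, 10, 20, 20)],
    scores=[0.92, 0.35, 0.18],
    thresh=0.2,
)
print(len(detections))  # 2
```

Raising the threshold keeps the visualisation uncluttered, at the cost of possibly hiding correct but low-confidence objects.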

To use the V-COCO dataset and models, add the flag `--dataset vcoco` and load the corresponding weights. To visualise interactive human-object pairs for a particular action class, use the flag `--action` to specify the action index. Here is a lookup table for the action indices.

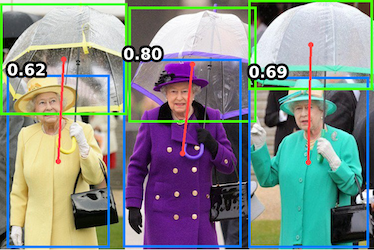
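
Restricting the visualisation to one action class amounts to keeping only the human-object pairs scored for that action index. A minimal sketch, in which `PairPrediction` and `pairs_for_action` are hypothetical names rather than part of `inference.py`.

```python
from typing import List, NamedTuple, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


class PairPrediction(NamedTuple):
    """Hypothetical record for one scored human-object pair."""
    human_box: Box
    object_box: Box
    action: int
    score: float


def pairs_for_action(preds: List[PairPrediction], action: int,
                     min_score: float = 0.0) -> List[PairPrediction]:
    """Return the pairs predicted for `action`, highest score first."""
    hits = [p for p in preds if p.action == action and p.score >= min_score]
    return sorted(hits, key=lambda p: p.score, reverse=True)


preds = [
    PairPrediction((0, 0, 1, 1), (1, 1, 2, 2), 36, 0.4),
    PairPrediction((0, 0, 1, 1), (2, 2, 3, 3), 36, 0.9),
    PairPrediction((0, 0, 1, 1), (1, 1, 2, 2), 5, 0.99),
]
print([p.score for p in pairs_for_action(preds, 36)])  # [0.9, 0.4]
```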

We also implemented an option to run inference on custom images, using the flag `--image-path`. The following example visualises the interaction *holding an umbrella*.

    python inference.py --resume checkpoints/upt-r50-hicodet.pt --image-path ./assets/umbrella.jpeg --action 36

Refer to `launch_template.sh` for training and testing commands with different options. To train the UPT model from scratch, you need to download the weights for the corresponding DETR model and place them under `/path/to/upt/checkpoints/`. Adjust `--world-size` based on the number of GPUs available.
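
Since `--world-size` should match the number of GPUs, one option is to derive it from the `CUDA_VISIBLE_DEVICES` environment variable. A minimal sketch under that assumption; both helpers are hypothetical, and the full set of training flags is in `launch_template.sh`.

```python
import os
from typing import List, Optional


def world_size_from_env(default: int = 1) -> int:
    """Count the GPUs listed in CUDA_VISIBLE_DEVICES (e.g. "0,1,2,3").

    Returns `default` when the variable is unset. With PyTorch installed,
    torch.cuda.device_count() reports the visible GPUs directly.
    """
    visible = os.environ.get("CUDA_VISIBLE_DEVICES")
    if visible is None:
        return default
    return len([d for d in visible.split(",") if d.strip()])


def world_size_args(world_size: Optional[int] = None) -> List[str]:
    """Build the --world-size argument; all other training flags are omitted."""
    n = world_size if world_size is not None else world_size_from_env()
    return ["--world-size", str(n)]


print(world_size_args(8))  # ['--world-size', '8']
```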

To test the UPT model on HICO-DET, you can use either the Python utilities we implemented or the Matlab utilities provided by Chao et al. For V-COCO, we did not implement evaluation utilities and instead used the utilities provided by Gupta et al. Refer to these instructions for more details.
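
Under the HICO-DET protocol, a detected human-object pair counts as a true positive when its interaction class is correct and both its human box and its object box overlap a ground-truth pair with an IoU of at least 0.5. A minimal, self-contained sketch of that matching rule together with an every-point interpolated AP for a single interaction class; the function names and input layout are assumptions, and the official Matlab and Python utilities may differ in details such as tie-breaking, the exact threshold comparison and interpolation.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[str, float, Box, Box]],
                      gts: Dict[str, List[Tuple[Box, Box]]],
                      thresh: float = 0.5) -> float:
    """AP of one interaction class.

    preds: (image_id, score, human_box, object_box) detections of this class.
    gts:   image_id -> ground-truth (human_box, object_box) pairs of this class.
    """
    n_gt = sum(len(pairs) for pairs in gts.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(pairs) for img, pairs in gts.items()}
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    # Greedy matching in order of decreasing confidence.
    for img, _, hbox, obox in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_ov = -1, thresh
        for j, (gh, go) in enumerate(gts.get(img, [])):
            # A pair overlaps only as well as its weaker box.
            ov = min(iou(hbox, gh), iou(obox, go))
            if ov >= best_ov and not used[img][j]:
                best_j, best_ov = j, ov
        if best_j >= 0:
            used[img][best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Every-point interpolation: make precision non-increasing in recall,
    # then sum precision over each recall increment.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


gt = {"img0": [((0, 0, 10, 10), (10, 10, 20, 20))]}
dets = [("img0", 0.9, (50, 50, 60, 60), (10, 10, 20, 20)),  # wrong human box
        ("img0", 0.6, (0, 0, 10, 10), (10, 10, 20, 20))]    # correct pair
print(average_precision(dets, gt))  # 0.5
```

Taking the minimum of the two IoUs encodes the requirement that both boxes must be localised well; a pair with a perfect object box but a poorly placed human box is still a false positive.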

If you find our work useful for your research, please consider citing us:

    @inproceedings{zhang2022upt,
      author = {Frederic Z. Zhang and Dylan Campbell and Stephen Gould},
      title = {Efficient Two-Stage Detection of Human-Object Interactions with a Novel Unary-Pairwise Transformer},
      booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      month = {June},
      year = {2022},
      pages = {20104-20112}
    }

    @inproceedings{zhang2021scg,
      author = {Frederic Z. Zhang and Dylan Campbell and Stephen Gould},
      title = {Spatially Conditioned Graphs for Detecting Human–Object Interactions},
      booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
      month = {October},
      year = {2021},
      pages = {13319-13327}
    }