This repository contains the implementation of the following paper:

Collaborative Diffusion for Multi-Modal Face Generation and Editing

Ziqi Huang, Kelvin C.K. Chan, Yuming Jiang, Ziwei Liu

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

From MMLab@NTU affiliated with S-Lab, Nanyang Technological University

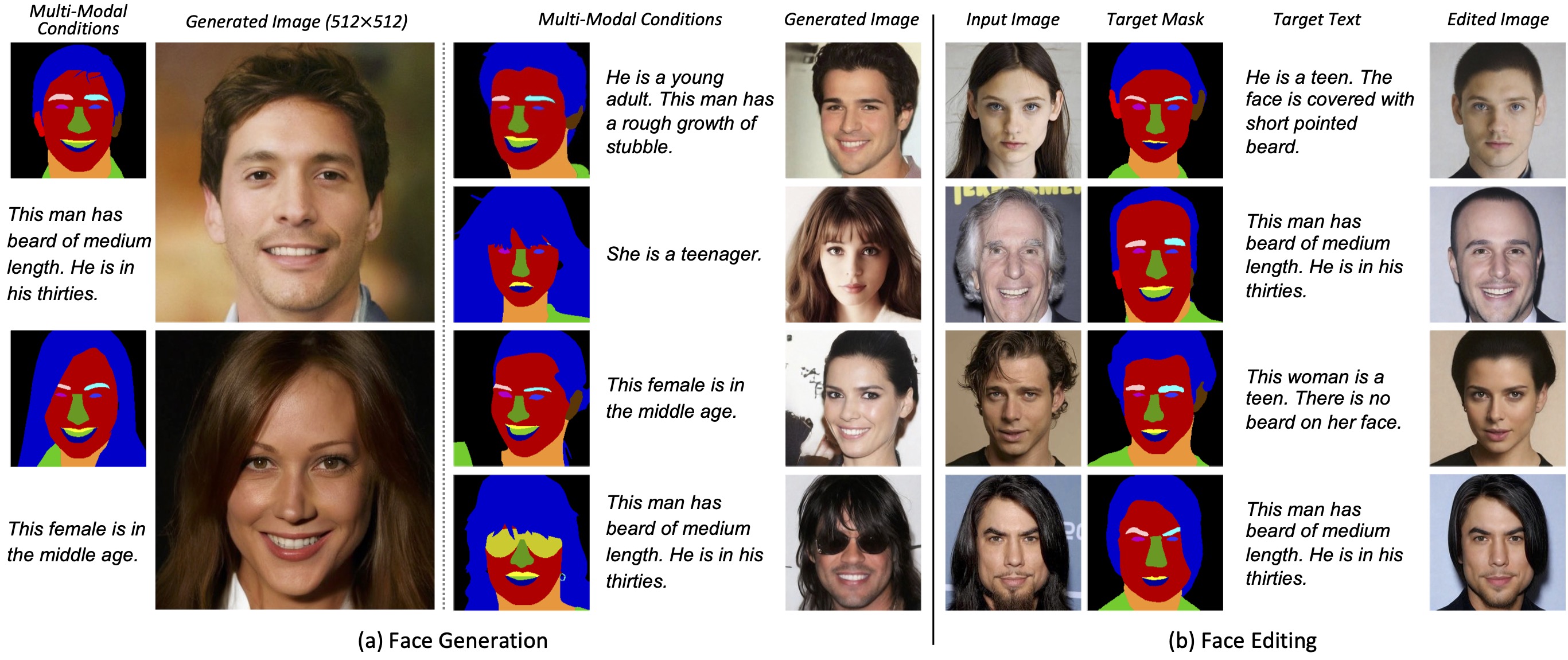

We propose Collaborative Diffusion, where users can use multiple modalities to control face generation and editing. (a) Face Generation. Given multi-modal controls, our framework synthesizes high-quality images consistent with the input conditions. (b) Face Editing. Collaborative Diffusion also supports multi-modal editing of real images with promising identity preservation capability.

- [04/2023] Inference code for multi-modal face generation (512x512) released.

1. Clone repo

       git clone https://github.com/ziqihuangg/Collaborative-Diffusion
       cd Collaborative-Diffusion

2. Create conda environment.

   If you already have an `ldm` environment installed according to LDM, you do not need to go through this step (i.e., step 2). You can simply `conda activate ldm` and jump to step 3.

       conda env create -f environment.yaml
       conda activate codiff

3. Install dependencies

       pip install transformers==4.19.2 scann kornia==0.6.4 torchmetrics==0.6.0
       conda install -c anaconda git
       pip install git+https://github.com/arogozhnikov/einops.git
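As a quick sanity check after installation, the pinned versions from the `pip install` command above can be compared against what is actually installed. The following is a minimal sketch, not part of the repository: `PINNED` and `find_mismatches` are hypothetical names introduced here, and only the three packages that the command pins are checked (`scann` and `einops` are installed without a version pin).

```python
from importlib import metadata

# Pins copied from the pip command above; scann and einops are unpinned there.
PINNED = {
    "transformers": "4.19.2",
    "kornia": "0.6.4",
    "torchmetrics": "0.6.0",
}


def find_mismatches(pinned, get_version=metadata.version):
    """Return {package: installed version or None} for every pin that is not met.

    `get_version` is injectable so the check can be exercised without
    touching the real environment.
    """
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            installed = None  # the package is not installed at all
        if installed != wanted:
            mismatches[name] = installed
    return mismatches
```

Calling `find_mismatches(PINNED)` inside the activated environment returns an empty dict when all three pins are satisfied.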

1. Download the pre-trained models from here.

2. Put the models under `pretrained` as follows:

       Collaborative-Diffusion
       └── pretrained
           ├── 512_codiff_mask_text.ckpt
           ├── 512_mask.ckpt
           ├── 512_text.ckpt
           └── 512_vae.ckpt
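Before running generation, it may help to confirm that all four checkpoints sit where the layout above expects them. This is a small sketch under that assumption only; `EXPECTED_CHECKPOINTS` and `missing_checkpoints` are hypothetical helpers, not something the repository ships.

```python
from pathlib import Path

# File names taken from the directory layout above.
EXPECTED_CHECKPOINTS = (
    "512_codiff_mask_text.ckpt",
    "512_mask.ckpt",
    "512_text.ckpt",
    "512_vae.ckpt",
)


def missing_checkpoints(repo_root="."):
    """List the expected checkpoint files that are absent from <repo_root>/pretrained."""
    pretrained = Path(repo_root) / "pretrained"
    return [name for name in EXPECTED_CHECKPOINTS if not (pretrained / name).is_file()]
```

Run from the `Collaborative-Diffusion` directory, `missing_checkpoints()` returns an empty list once every file is in place. It checks only that the files exist, not that the downloads are complete.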

You can control face generation using text and a segmentation mask.

1. `mask_path` is the path to the segmentation mask, and `input_text` is the text condition.

       python generate_512.py \
           --mask_path test_data/512_masks/27007.png \
           --input_text "This man has beard of medium length. He is in his thirties."

       python generate_512.py \
           --mask_path test_data/512_masks/29980.png \
           --input_text "This woman is in her forties."

2. You can view different types of intermediate outputs by setting the corresponding flags to `1`. For example, to view the influence functions, you can set `return_influence_function` to `1`.

       python generate_512.py \
           --mask_path test_data/512_masks/27007.png \
           --input_text "This man has beard of medium length. He is in his thirties." \
           --ddim_steps 10 \
           --batch_size 1 \
           --save_z 1 \
           --return_influence_function 1 \
           --display_x_inter 1 \
           --save_mixed 1

   Note that producing intermediate results might consume a lot of GPU memory, so we suggest setting `batch_size` to `1`, and setting `ddim_steps` to a smaller value (e.g., `10`) to save memory and computation time.
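To run several mask and text pairs in one go, the commands above can be assembled programmatically. The sketch below is illustrative rather than part of the repository: `build_command`, `run_all`, `JOBS`, and `INTERMEDIATE_FLAGS` are hypothetical names, and only flags that appear in the examples above are used. It assumes that omitting an intermediate-output flag leaves that output disabled, and it falls back to the suggested `ddim_steps` of 10 and `batch_size` of 1 whenever an intermediate output is requested.

```python
import shlex
import subprocess
import sys

# Flags shown above that switch on an intermediate output when set to 1.
INTERMEDIATE_FLAGS = ("save_z", "return_influence_function", "display_x_inter", "save_mixed")

# The two example inputs from the commands above.
JOBS = [
    ("test_data/512_masks/27007.png", "This man has beard of medium length. He is in his thirties."),
    ("test_data/512_masks/29980.png", "This woman is in her forties."),
]


def build_command(mask_path, input_text, intermediates=(), ddim_steps=None,
                  batch_size=None, script="generate_512.py"):
    """Build the argument list for one generate_512.py invocation."""
    unknown = set(intermediates) - set(INTERMEDIATE_FLAGS)
    if unknown:
        raise ValueError(f"unknown intermediate flags: {sorted(unknown)}")
    cmd = [sys.executable, script, "--mask_path", mask_path, "--input_text", input_text]
    if intermediates:
        # Memory-saving values suggested above, unless the caller overrides them.
        ddim_steps = 10 if ddim_steps is None else ddim_steps
        batch_size = 1 if batch_size is None else batch_size
    if ddim_steps is not None:
        cmd += ["--ddim_steps", str(ddim_steps)]
    if batch_size is not None:
        cmd += ["--batch_size", str(batch_size)]
    for flag in INTERMEDIATE_FLAGS:
        if flag in intermediates:
            cmd += [f"--{flag}", "1"]
    return cmd


def run_all(jobs, dry_run=True, **options):
    """Print each command (dry run) or execute it; return the commands either way."""
    commands = [build_command(mask, text, **options) for mask, text in jobs]
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing run
    return commands
```

For example, `run_all(JOBS, intermediates=["return_influence_function"])` prints both commands with the influence-function flag enabled; passing `dry_run=False` executes them from the repository root.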

The code for training the Dynamic Diffusers will be released soon.

The code for training the uni-modal diffusion models will be released soon.

If you find our repo useful for your research, please consider citing our paper:

    @InProceedings{huang2023collaborative,
        author = {Huang, Ziqi and Chan, Kelvin C.K. and Jiang, Yuming and Liu, Ziwei},
        title = {Collaborative Diffusion for Multi-Modal Face Generation and Editing},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
        year = {2023},
    }

The codebase is maintained by Ziqi Huang.

This project is built on top of LDM.